TecnoLógicas

Print version ISSN 0123-7799; online version ISSN 2256-5337

TecnoL. vol. 21, no. 41, Medellín, Jan./Apr. 2018

Research article

MindFluctuations: Poetic, Aesthetic and Technical Considerations of a Dance Spectacle Exploring Neural Connections

Tania Fraga¹

¹ Architect and artist, Ph.D. in Communication and Semiotics, Institute of Mathematics and Art of Sao Paulo, University of Brasilia, Sao Paulo, Brazil, taniafraga.pesquisa@gmail.com

Received: September 30, 2017 / Accepted: November 23, 2017

How to cite

T. Fraga, "MindFluctuations: Poetic, Aesthetic and Technical Considerations of a Dance Spectacle Exploring Neural Connections," TecnoLógicas, vol. 21, no. 41, pp. 81-102, 2018.

Abstract

In this era of coevolution of humans and computers, we are witnessing widespread fear that humanity will lose control and autonomy. This is one possibility. Another is the development of symbiotic systems between humans and machines. To pursue this goal, it is necessary to research ways of applying key concepts that can act as catalysts for such ideas. By discussing this hypothesis through the case study of the spectacle MindFluctuations, an experimental artwork exploring neural connections, we look for ways to develop these concepts from conception to realization. The discussion also reflects on the design, development and production of this dance spectacle. The artwork uses a customized Java application, NumericVariations, to explore neural connections in spectacles, performances and site-specific installations. Its approach was made possible by recent neuroscience research and by the development of a Brain Computer Interface (BCI) integrated with a virtual reality framework, which together enabled an experimental interactive virtual reality artwork to emerge. A neural helmet connected to the computer and entwined with mathematical procedures enabled a symbiosis of humans and computers.

Keywords: Computer Art, Algorithmic Art, Virtual Reality, Brain Computer Interface (BCI), Java3D™.

1. Introduction

In this era of coevolution of humans and computers, we are witnessing widespread fear that humanity will lose control and autonomy. This is one possibility. Another is the development of symbiotic systems between humans and machines. “Symbiosis is a state found in Nature in which two or more organisms act in complementary ways to achieve survival (…). From my point of view, the possibility of deeply interacting with machines, to be turned inside out by seeing my own mind fluctuations translated into something through the action of a computer is fascinating. By externalizing a few emotional states and thought processes, facing the fluctuations of one’s own mind it may be possible to glimpse at this mysterious, unfathomable process we call 'thought' [1]. I do not believe machines will substitute humans but, since they are built mirroring humans' own decision and reasoning processes [2], they have had an increasingly complementary role in human lives. Such role I have called symbiosis”, as cited in [3] (p. 3). However, it is not the goal of this article to focus on symbiosis. Here it is only necessary to point out its importance as a key concept for setting up the spectacle, since it can have a catalytic effect on the spectacle’s development.

By discussing this possibility through the case study of the spectacle MindFluctuations, we look for ways to understand what Roy Ascott defines as the confluence of dry computational systems with wet biological ones. The case is discussed from conception to realization. The spectacle was quite complex and involved many professionals, such as musicians, choreographers, dancers, a lighting designer, a stage manager, a sculptor, programmers and scientists from various fields.

Computer art has existed for more than 60 years, but there is still considerable resistance in the art world to appraising it as an art form. Meanwhile, an increasing number of artists are using customized software and hardware developed for artistic goals, opening a large field of possibilities for artworks produced through computer coding. “Usually such artworks are evaluated with past criteria that deny their main characteristics, which are to be what they never were before. Therefore, such artworks have to be approached with different evaluation methods. There are many differences between computer artworks and other means of creating art. There are convergences and divergences which are not at the focus here. The present approach does not intend to enhance one kind of art by depreciating the other”, as quoted in [4] (p. 186). The aim is rather to understand the singular aspects that characterize a spectacle, an installation or a performance using real-time virtual reality, as described in [5].

It is important to note that “similar to traditional artworks, computer artworks are poetic and aesthetic creations of human minds evoking emotions, sensations and a labyrinth of representations and, sometimes, ambiguities and paradoxes. These subjective sets intensify mental connections allowing the human sensory apparatus to be entwined with mathematics. They establish different relationships causing different readings. Therefore, numerical relations and functions are woven with sensory experiences and the results are not always predictable”, as cited in [4] (p. 186).

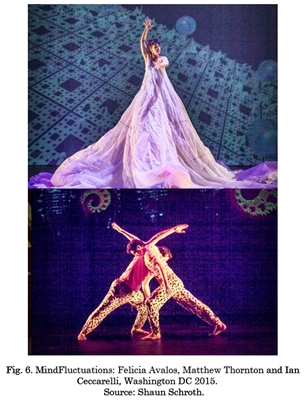

The spectacle MindFluctuations was designed in collaboration with the American choreographer Maida Withers and premiered on March 19, 2015, in Washington, DC. Future presentations are planned as site-specific installations and performances. To achieve neural connections with poetic and aesthetic expression, the artwork sought ways to use neural data and related procedures that can be translated into numbers, a procedure described in [3].

In this spectacle, the dancers wore two neural helmets that captured data related to their emotional states. After being scanned and used as input, these states were interpreted by the computer and influenced virtual scenarios projected onto a screen at the back of the stage. The resulting scenarios are artificially alive systems that allow the dancers to “symbiotically interfere in the algorithmic nature of artificial 'seeds'”, as cited in [6] (p. 169). Composers and musicians John Driscoll and Steve Hilmy performed the music live in a sound environment created with robotic instruments and electronic music.

Many conceptual, aesthetic and poetic aspects of other similar works have been extensively discussed elsewhere in articles such as [6], [8], [9], [10], [11], [12], [14]. Here, the aim is to describe how the design and development of MindFluctuations have established the necessary ground for the production to unfold. It also highlights the poetic and aesthetic aspects that are necessary to develop and perform an interactive artwork such as this. This discussion appears in the section ‘The poetic and aesthetic approaches within MindFluctuations’.

MindFluctuations employs the customized virtual reality software NumericVariations to explore neural connections in spectacles, performances and site-specific installations, a topic extensively described in [6], [7]. It uses a neural headset and a Brain Computer Interface (BCI) to achieve its aim. In MindFluctuations, the dancers experimenting with these settings and environments faced a major challenge. Their understanding of the emerging configurations, and of the different ways to perceive and respond to these virtual realms, allowed them to constantly reinvent their ways of perceiving the environment in which they were immersed, exploring their own sensory system, their own aesthesis (from the Greek 'aisthēsis'). The performers Ederson Lopes and Mirtes Calheiros, who worked with a similar application, EpicurusGarden, described in [22], have stated several times that such awareness constantly arose in their minds while interacting with these virtual worlds. Several aspects of this software and its brain computer interface (BCI) are presented in the section titled “The application NumericVariations”.

In these realms, affective fields emerge and their sensory stimuli establish a complex network of data and relationships. They create many possible configurations that may or may not have been foreseen by the artist-programmer, as made explicit in [4]. Since computer languages were not designed for artistic expression, it is sometimes necessary to subvert their use. Such innovative and original attempts to explore the immanent potential of computer languages allow new poetic and aesthetic solutions to emerge, as pointed out in [4] and [15].

The virtual realities contain autonomous agents that respond to the dancers' emotions. These scenarios are a set of 11 virtual domains showing processes inherent to an evolutionary dream journey. They are virtual realities inspired by nature. However, they start from processes much simpler than those existing in the natural world; they are emergent processes similar to the waves of the sea, clouds meandering in the sky or snowflakes: always the same and never the same. In these virtual domains, 'trees' grow, and emotions inseminate particles that spread throughout a space where virtual flocks of prey and predators fly around, either acting as cannibals or chasing each other and dancing when they meet. The virtual camera slides and glides through the virtual space, and balls collide and roll on virtual platforms. All these processes, this varied set of changes, are influenced by the dancers’ emotions and unfold in a specific way in each performance. Although the general stage configurations are repeated, the end result is unique in each performance and can never happen again in exactly the same way. These processes show becoming as it happens, something metaphorically similar to living things, and are at the same time a visual representation of a pure mathematical universe.
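The emotion-driven behaviors described here, and detailed later in the correspondence with the choreographer (particle explosions each time the dancer is excited, trees that grow or shrink with the emotional state), suggest two simple mappings from a normalized reading to an effect. The following Java sketch is only an assumption about how such mappings might be written. It is not the NumericVariations code. `ExcitementTrigger` fires once per upward crossing of a threshold and uses a hysteresis gap so that a noisy reading hovering near the threshold does not fire on every sample. `TreeGrowth.nextScale` eases a tree's scale toward a target chosen by the reading. Class names, thresholds, rates, and the direction of the growth mapping are all hypothetical.

```java
/**
 * Hypothetical trigger: fires once each time a normalized emotional reading rises
 * to `high`, and re-arms only after it falls back to `low` (hysteresis), so a noisy
 * signal hovering near the threshold does not fire on every sample.
 */
final class ExcitementTrigger {
    private final float high;
    private final float low;
    private boolean armed = true;

    ExcitementTrigger(float high, float low) {
        if (low >= high) {
            throw new IllegalArgumentException("low must be below high");
        }
        this.high = high;
        this.low = low;
    }

    /** Returns true only on the sample where a particle burst should be spawned. */
    boolean update(float reading) {
        if (armed && reading >= high) {
            armed = false;
            return true;
        }
        if (!armed && reading <= low) {
            armed = true;
        }
        return false;
    }
}

/** Hypothetical growth rule: eases a tree's scale toward a target set by the reading. */
final class TreeGrowth {
    /**
     * reading is assumed to be in [0, 1]; rate in (0, 1] is the fraction of the
     * remaining gap closed per frame.
     */
    static float nextScale(float current, float reading, float minScale, float maxScale, float rate) {
        float target = minScale + (maxScale - minScale) * reading;
        return current + (target - current) * rate;
    }
}
```

In a frame loop, `update` would receive each new reading, and a burst would be spawned whenever it returns true. `nextScale` would be applied every frame, so a tree drifts larger while the reading is high and smaller while it is low.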

Finally, the Conclusion section presents a few reflections that establish and coalesce the process of creating, developing and producing the spectacle.

2. Method

This article aims to show the professional development of an interactive computer artwork and its application in the field of performance by presenting the spectacle MindFluctuations as a case study. It reflects upon the poetic, aesthetic and technical aspects involved in its setup and on the resolution of many of the problems that arose during production. It applies a method of successive approximations to the desired goals, described in detail in [16]. In this approach, art, architecture and design created with the aid of computational devices enter into dialogue with the choreography, the music and the stage space. The transdisciplinary intersection of these fields enabled the investigation to develop into a fundamental set of strategies and actions that made possible the production of the spectacle and its complementary products.

3. Results and discussion

Due to the complexity of the spectacle setup, the questions related to its poetic, aesthetic and technical approaches are divided into two sections. First, we ask what needs to be developed in order to perform interactive computer art with fullness and strength. How can one do it? How can something be expressed so that the audience poetically feels and senses the situation? How can what is done be articulated so that the computer technology works and presents itself without hiding or disrupting the poetic and aesthetic goals of the work? How can one intertwine a complex set of relations to create something meaningful? Second, in order to perform interactive computer art with fullness and strength, one also has to ask: How do the devices work? How can the various formats involved be converted when working with digital and analog fields? What strategies are needed to choose the best result from a wide range of possibilities? How should interference and signal degradation be dealt with in mixed technological environments? We considered these reflections while searching for answers to such questions.

It is worth pointing out the marked differences between working with videos stored in databases and live interaction happening in real time in virtual reality setups. An aphorism sums up the field of possibilities open to the computational artist programming such virtual realities and to the choreographers working with them: “If a picture is worth a thousand words, an interactive simulation, a virtual reality, is worth thousands of millions of them”, as quoted in [6] (p. 170). Snapshots of these virtual worlds during interaction show only some views of these micro-universes; they are nothing more than glimpses. Videos show the diversity and dynamics of these virtual realities; however, they are only moments of an interaction that was captured and frozen in a timeline. Examples can be found in videos on YouTube, Vimeo and the author’s site: http://taniafraga.art.br/; https://vimeo.com/taniafraga; https://www.youtube.com/user/taniafraga1.

One must understand that applications or software that create virtual realities contain 3D objects, agents or virtual ‘beings’ (live processes) that inhabit these spaces. This is very different from a video, which captures the interaction and presents it linearly, always repeating the same previously captured sequence. The computer artworks addressed here are immanently different from a video, since what they present is the process of becoming, of coming into being, as can be seen in several videos in [14], [17], [18], [19], [20], [21].

As stated earlier, these micro-universes allow real-time variations of many autonomous processes that follow a set of rules. However, these processes manifest themselves in singular ways in each performance: the scenarios resemble those previously shown but are never exactly the same in any two presentations of the work, whether in site-specific installations, shows or performances. The same 'seed' presented at different times creates a singular result, which may never happen again in the same way. This is because the processes are similar to those that happen in life and nature, although much simpler. As said before, these processes are both a visual and an audible representation of a fluid mathematical universe.

3.1 The poetic and aesthetic approaches within MindFluctuations

Firstly, the term artistic research is briefly presented here because of the importance of its poetic and aesthetic goals. As discussed by Busch, for the present approach, artistic research means “art that understands itself as research, in that scientific processes or conclusions become the instrument of art and are used in the artworks” [23]. Such an instrument is related to the poetics of the artwork. “Poetics is an adopted word, borrowed from the literary domain and transferred into the field of contemporary art. Here it is used with semiotic freedom”, as quoted in [4] (p. 187), to explore the many relations established during the conception, design, development and production of the spectacle. In brief, the word poetics is used here mainly to describe the way results are produced and the tools employed to endow a set of virtual signs with meaning. Perhaps the Greek word techne, the etymological source of technology, could also be applied to the development of techniques for virtual poetics, but such use could be misleading. For the present approach, it is enough to say that such techniques emerged from doubts related to the actual construct of the sciences and to models of artistic research close to scientific territory. On the other hand, the aesthetic qualities of the artwork are related to the qualities of expression and sensory perception, as portrayed in [24]. Its expressive and sensory fields are entwined within the artwork; for example, in a theoretical analysis it is hard to separate the way interaction parameters are chosen from the way neural data are transformed. Objective and subjective choices are interlaced.

For this approach, many other questions were formulated. For instance: How to maximize expressive, aesthetic and poetic qualities in order to create something meaningful using data from sets of neurons turned into works of art? How may biological and electronic brains work together?

We highlight the fact that “the virtual domains are compositions of colors, movements and shapes carefully selected rather than aimless mixtures of whatever is randomly available. In this artwork, shimmering colors, forms, lights and cameras in movements, metaphorically express the unending changes of life. Space and time create almost unbounded numeric variations”, as cited in [4] (p. 187), a perplexity the author has never totally grasped. They condense, out of the author’s mind, in entwined geometries, shifting structures creating delicate wave patterns over wire frame constructions, suggesting living things, as can be seen in the videos in [17], [23], [18], [19], [20], [21], [22] and described in [4] (p. 187); see also Fig. 1. “Flickering lights are combined with darkness; colors and shapes, space and time are woven together as a tapestry: the burning heat of the reds; the unfathomable depths of the indigo and navy blues; the ranges of dark grays and blacks; all establishing contrasts with bright bold tropical colors. There are hidden 'seeds' waiting for the users' emotions to trigger growth behaviors; there are flight behavioral patterns allowing some agents to persecute or flee; there are changing relations among the velocities, the positions and the gravities, distinguishing, for example, determined realm or even the camera movement flying through it; and many, many other functions, characterizing the agents' behaviors, which are woven through numeric threads”, as quoted in [4] (pp. 187-188). Furthermore, “from a poetic and aesthetic viewpoint, lightness, weightlessness, fluidity, clarity, simplicity and visceral human-machine symbiosis are vectors pointing to the solutions I have intended to reach”, as cited in [3] (p. 7).

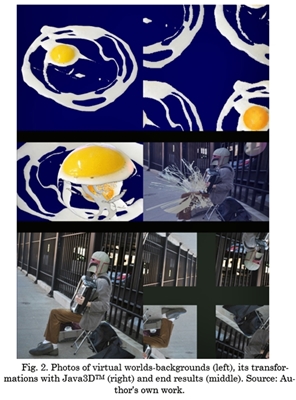

The applied geometric transformations were related to time and space variables as well as to numeric variations, an idea synthesized in the name of the application. They were studied and chosen from a wide range of possibilities. The pictures used for texture mapping (in GIF format) resulted from images that were carefully photographed, chosen and reworked (Fig. 2). The behavior of the virtual agents, entwined with the dancers' emotions, creates results that are not literal. Shapes and colors were defined in ranges so that, even when affected by random factors, they maintain their proportions, hues, saturation and values within harmonic sets; therefore, their visual qualities are not lost during interactions. At the same time, it was possible to weave intertwined nets in which the virtual and the human were united to present these metaphorical realities. Consequently, it is appropriate to call them symbiotic relationships, as discussed in [6] (p. 179) and [25] (p. 26).
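The idea of defining colors in ranges, so that random perturbations never leave a harmonic set, can be illustrated with a short Java sketch. This is a minimal example under stated assumptions, not the NumericVariations implementation. The class `HarmonicPalette`, the band limits, and the perturbation scheme are hypothetical. Only `java.awt.Color.HSBtoRGB` is a standard Java API.

```java
import java.awt.Color;
import java.util.Random;

/**
 * Hypothetical palette: colors are perturbed at random but clamped back into a
 * hue/saturation/brightness band, so they stay inside one harmonic set.
 */
final class HarmonicPalette {
    private final float hueMin, hueMax; // hue band as a fraction of the color wheel, [0, 1]
    private final float satMin, satMax; // saturation band, [0, 1]
    private final float briMin, briMax; // brightness ("value") band, [0, 1]

    HarmonicPalette(float hueMin, float hueMax, float satMin, float satMax,
                    float briMin, float briMax) {
        this.hueMin = hueMin;
        this.hueMax = hueMax;
        this.satMin = satMin;
        this.satMax = satMax;
        this.briMin = briMin;
        this.briMax = briMax;
    }

    /** Clamps x into [lo, hi]. */
    static float clamp(float x, float lo, float hi) {
        return Math.max(lo, Math.min(hi, x));
    }

    /** Adds a uniform random offset in [-amount, amount) to each component, then clamps it into its band. */
    float[] perturb(float[] hsb, float amount, Random rng) {
        float h = hsb[0] + (rng.nextFloat() * 2f - 1f) * amount;
        float s = hsb[1] + (rng.nextFloat() * 2f - 1f) * amount;
        float b = hsb[2] + (rng.nextFloat() * 2f - 1f) * amount;
        return new float[] {
            clamp(h, hueMin, hueMax),
            clamp(s, satMin, satMax),
            clamp(b, briMin, briMax)
        };
    }

    /** Packs an HSB triple into an ARGB int with the standard AWT conversion. */
    static int toRgb(float[] hsb) {
        return Color.HSBtoRGB(hsb[0], hsb[1], hsb[2]);
    }
}
```

Clamping, rather than wrapping, the hue only works for bands that do not cross the red boundary at 0/1. A band that straddles that boundary would need modular arithmetic on the hue.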

There is no intention to control what the audience will feel while leading them on a metaphorical journey, which contains different meanings open to interpretation by the diverse eyes of different beholders. The whole set of 11 virtual realms was created with the intention of going from a beginning to an end: any beginning, any ending, but an end that is not a final one but, perhaps, another beginning. Its abstract setup can be understood as a journey pointing either to the amazing development of the brain or to the evolution of life. The journey begins with 'TheEgg', a symbol with clear connotations and denotations of an ovum, and finishes with 'Panspermia', a concept developed by Mautner [26] that refers to seeding the Universe with ideas or life. These choices allow the public as much freedom as possible in their own interpretation of the journey, as presented in [4] (p. 188).

The French thinker Edmond Couchot has classified computer interactions as exogenous and endogenous [15]. Exogenous interaction is the paradigmatic question-answer approach we are accustomed to when working with computers. Endogenous interactions, on the other hand, happen between autonomous processes (agents) that act without any human control.

As an artist, I had a visceral desire to research symbiotic processes between computers and humans that would explore modes of action between humans and machines. The potential of computers would be explored to expand human features, and human sensitivities would be used to affect machine processes. Thus, I entertained the idea of implementing artworks that use human emotions to influence the behavior of autonomous agents, either physical or virtual robots. This would make it possible to experiment with the way human emotions affect autonomous processes, a topic I have researched since 2004, when I visited Rosalind Picard’s laboratory at MIT.

This should happen in such a way that the virtual agents would be influenced when perceiving and acting on their own robotic or virtual environments, or both.

Looking for concepts that could express this kind of interaction, the concept of exo-endogenous interactivity was proposed in [7]. What does it mean? After the computer digitizes the user's input data in an exogenous way, these data are used by autonomous endogenous processes in the virtual domains. As said above, they affect the way in which the agents perceive their own virtual environments and therefore may determine their behavior. In the future, this approach will be used to extend these solutions to another artwork, NumericTessitures, a work in progress described in [8] in which users will be mixed with virtual and robotic agents. It has been assumed that this kind of research might allow the emergence of meaningful processes arising from a very interesting kind of human-machine symbiosis, thus proposing an approach to achieve collective interaction.
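The exo-endogenous loop described above can be sketched in Java as two decoupled parts. On the exogenous side, an input thread publishes the latest digitized reading. On the endogenous side, autonomous agents sample that reading on their own schedule whenever they perceive their environment, so the input modulates their rules without driving them directly. The class names `EmotionChannel` and `ModulatedAgent`, the `perceive` method, and the speed mapping are hypothetical. They illustrate the separation of input from autonomous behavior and are not the structure of NumericVariations.

```java
/**
 * Exogenous side (hypothetical): the input thread publishes the latest digitized
 * reading, clamped to [0, 1]. A volatile field makes the newest value visible to
 * the behavior thread without locking.
 */
final class EmotionChannel {
    private volatile float latest = 0f;

    void publish(float reading) {
        latest = Math.max(0f, Math.min(1f, reading));
    }

    float sample() {
        return latest;
    }
}

/**
 * Endogenous side (hypothetical): an autonomous agent that consults the channel
 * only when it perceives its environment, on its own schedule.
 */
final class ModulatedAgent {
    private final EmotionChannel channel;
    private final float baseSpeed;
    private float speed;

    ModulatedAgent(EmotionChannel channel, float baseSpeed) {
        this.channel = channel;
        this.baseSpeed = baseSpeed;
        this.speed = baseSpeed;
    }

    /** One perception step: the reading scales the agent's own speed rule (0.5x to 1.5x). */
    void perceive() {
        speed = baseSpeed * (0.5f + channel.sample());
    }

    float speed() {
        return speed;
    }
}
```

Because the agent reads only the most recent value, a burst of readings between two perception steps is collapsed into one. This keeps the agents' timing independent of the headset's sampling rate.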

It was reasoned that, since the human body acts by externalizing the brain's electrical impulses, these impulses could be used to interfere with the virtual agents' behaviors. In summary, human behaviors may be conscious instructions or unconscious results of sensory and emotional mind fluctuations. Even a person unable to control their own unconscious impulses may have them read and translated into numbers. These states result from the activity of neural fields, which can be captured and scanned through non-invasive devices such as the Emotiv neuroheadset [27], worn on the head. Once translated into numbers, such data may be used by electromechanical systems, and the present art experiment was conceived for that purpose: these stimuli could affect the autonomous agents existing in the virtual realms. Some of the images resulting from this experiment are shown in Figs. 1, 5 and 6 and in a few videos in [19], [20], [21].

A project such as MindFluctuations requires algorithms that involve intensive calculations for real-time processing. These algorithms were originally implemented in Java3D™ for games by Andrew Davison [29], adapted to the Java framework by Pedro Garcia, and reinvented by me for aesthetic and poetic purposes. In past decades, such projects would have needed supercomputers; today, they can be carried out with personal computers. This article aims to provide an overview of the technical strategies found to bring such a project to the general public.

In the 1990s, one minute of animation (1,800 frames, that is, 30 frames per second) could take weeks to process, and such animations could only be presented as videos. In the following decades, animations, sounds and precomputed images began to be stored in databases whose contents can be accessed constantly and presented interactively. Such procedures often create the illusion that animations, sounds and images are being processed in real time. This practice is still common and causes numerous misconceptions, since the public often does not know which processes are actually occurring on stage. Performances using video databases are quite different from what takes place in real time on stage using virtual reality technology, because the latter occurs as processes of becoming, as stated many times before.

In the case of MindFluctuations, after the general structure of the customized software NumericVariations was created, it was necessary to continue its development with the choreographer. Therefore, in February 2014, after the basic structure was defined and the algorithms used in the software were running satisfactorily, the choreographer Maida Withers traveled to São Paulo for a 15-day residency that allowed her to experiment with the application’s interface. From the beginning, the virtual realities were planned as a journey through 11 virtual worlds, which outlined a beta version of the application. Based on this draft, a joint development process took place for about a year, which required developing research methods and trials. The application needed constant improvement and adaptation, and new versions were sent to Maida Withers in the USA for testing by dancers and musicians. Two site-specific installations were also mounted in exhibitions (Fig. 3), both in 2014. One was held in Santa Maria, at the Contemporary Art Symposium, where the public could use the neural helmet to interact with the virtual worlds. The other was organized in Brasilia, in the EmMeio #6 exhibition at the Museum of the Republic in Brazil. On that occasion, a video of neural activity was used to affect the virtual worlds.

To illustrate the joint development of this project, extracts from email and Skype correspondence with Maida Withers are presented below: “I (Tania) am finishing the tests for the new version. It is as you (Maida) asked me. I will put it on Dropbox as soon as I finish the tests, so you may work with it.

There are big changes in many of the worlds due to the emotional state values I am using. For example, the camera in 'Musician in LA' and 'Beginning' may become very crazy if the dancer is in a state of calmness and will work more harmoniously if they are excited. In 'Blackness' and 'Whiteness', the balls go berserk in the same state; in 'Whiteness', they may even fly. In the bot worlds, it is hard to perceive the changes in the bots. However, the cannibals may become fierce, and all the pink bots may be eaten so that only the blue ones survive and stay on scene. The growing fractal trees also grow or shrink depending on the emotional state of the dancer. If this state fluctuates a lot, some chaotic phenomena may occur due to the type of number I used (float). In 'BrainEruption' (the cars) and 'Panspermia' there are explosions of particles each time the dancer is excited. I tested the application in two exhibitions here and it is working very well.

If you are not seeing these effects, it is because the window that reads the data from the Emotiv software is not properly set. (…) This window MUST be placed over the end of the window of the affective suite of the neuroheadset in the Emotiv control panel. This small window reads the position of the orange line and passes values to the worlds. I used such values as parameters for changing the agents’ behaviors. I am sending a video of my brain wave activity that has exactly the same size as this small window.

By using the video instead of the Emotiv Control Panel, we may simulate brain activity without the helmet. If you put the small translucent window over this video (resize its window to the smallest possible size), it will be as if someone were interacting with the worlds. This will also be our plan B, in case the helmet disconnects during the spectacle. (…) Also, the viewpoints now last much longer, between 4 and 13 seconds (we can have any amount of time we need, and I will program them when you have decided the time for each section). The walkthrough (the initial viewpoint animation) is much better if it happens slowly, but you must wait until it finishes before taking any other action; otherwise, the computer will go crazy trying to run too many threads at once. (…) I think it is very important for you to work out the differences with the dancers' emotions. The computer setup needs to be:

Two identical computers, each with two video cards, running in parallel, each one showing the virtual world on the stage projector and the Emotiv software on the offstage monitor. The small translucent window will go directly over the affective state window in the Emotiv software. The translucent window may be dragged, but it can also easily be placed at any location inside the screen area by entering its coordinates, and it will go to the exact point we want. Since these values will change from computer to computer, we must give the correct coordinates (numbers) in a text file and set them up by the final week before the spectacle. (…) If necessary, we should facilitate the setup for the staff working offstage: a video splitter may duplicate the projector’s signal to another monitor in front of this person.

For example, computer 1 is running 'TheEgg' (world 0) with one dancer using helmet 1. Computer 2 is being prepared: the ball that connects to world 1, 'Blackness', is waiting on the screen, and the dancer using the other headset, helmet 2, is prepared with computer 2 and also waiting. At time T, a cue is given and the technical staff offstage clicks the ball. World 1, 'Blackness', begins; the dancer enters the stage, the computers are switched and the spectacle goes on. At this point, computer 1 has the ball to run world 2, 'Beginning'. The first dancer, wearing helmet 1, comes to the computer setup, puts saline solution on all the connectors and verifies that everything is connected and working well, and so on (…).

The purpose of having two outputs for each computer is that I need to extend the desktop to at least two displays: monitor and projector. The projector shows the real-time Java3D™ application, while the monitor runs the Emotiv software, which reads the dancer’s emotions; these readings are then sent on to be processed. Therefore, there are two output signals: projector and monitor. In general, these are SVGA and DVI. If so, two adapters to convert them to HDMI will probably be necessary, since almost everything now uses HDMI. The video card needs to support at least OpenGL 2.’

3.2 The application NumericVariations

The first result of the art research applied to MindFluctuations was the construction of the robotic artwork Caracolomobile [3]. This robot was built with an award from the Itaú Cultural Institute of Brazil in 2010. Following the same approach, the customized application NumericVariations was created. Its development raised several questions: What are virtual realities? How may someone describe them? How do virtual reality artworks differ from any other interactive computer application? Looking for answers, one may say that a virtual reality, realm, domain or world is a real-time simulation of a 3D environment with geometrical, topological and physical characteristics similar to those of the physical world. Although all such simulations may share these characteristics, artworks are conceived to develop singular and specific features.

In the application, the computer mediates dozens of processes linked together through thousands of lines of code. NumericVariations is written in Java using the Java3D™ API. It took three years to program and has a repertoire of around 280 Java classes with approximately 70,000 lines of code. It uses algorithms for collision detection and avoidance, organization and growth of fractal trees, elevation of fractal terrains, particles, and behaviors of collective flocks (with prey and predator behaviors, as well as proximity, separation and alignment parameters for flight), among others. As said before, these algorithms were originally implemented by Davison [29]. In the application, the user's emotional data interfere with the behavior of endogenous virtual agents. As described above, NumericVariations portrays a set of 11 virtual domains. It contains complete arrays of subsystems, such as packages for geometries, autonomous artificial intelligence and artificial life behaviors, animations, materials, lights (illumination), camera movements, interactions, navigation, utilities, input and output controls, mathematical functions, scene graph creation, and images for textures and animations, among others. Fig. 1 shows a few of those virtual worlds.
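Flocking parameters such as proximity, separation and alignment are commonly implemented with Craig Reynolds' boids rules (cohesion, alignment, separation). The 2D sketch below assumes that formulation; it is not the Davison or NumericVariations code, which works in 3D with Java3D and also models prey and predators. All weights and radii are hypothetical. A parameter such as `maxSpeed` is the kind of value that emotional data could modulate.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal 2D boids step (Reynolds-style rules); an illustration, not NumericVariations code. */
final class Flock {
    static final class Boid {
        double x, y, vx, vy;
        Boid(double x, double y, double vx, double vy) { this.x = x; this.y = y; this.vx = vx; this.vy = vy; }
    }

    final List<Boid> boids = new ArrayList<>();
    // Hypothetical tuning parameters.
    double neighbourRadius = 5.0;
    double separationRadius = 1.0;
    double cohesionWeight = 0.01, alignmentWeight = 0.05, separationWeight = 0.1;
    double maxSpeed = 2.0;

    void step(double dt) {
        int n = boids.size();
        double[] ax = new double[n], ay = new double[n];
        for (int i = 0; i < n; i++) {
            Boid b = boids.get(i);
            double cx = 0, cy = 0, avx = 0, avy = 0, sx = 0, sy = 0;
            int count = 0;
            for (int j = 0; j < n; j++) {
                if (i == j) continue;
                Boid o = boids.get(j);
                double dx = o.x - b.x, dy = o.y - b.y;
                double d = Math.hypot(dx, dy);
                if (d < neighbourRadius) {
                    cx += o.x; cy += o.y;       // proximity (cohesion): centre of the neighbours
                    avx += o.vx; avy += o.vy;   // alignment: mean neighbour velocity
                    count++;
                    if (d > 0 && d < separationRadius) {
                        sx -= dx / d; sy -= dy / d; // separation: unit push away from close neighbours
                    }
                }
            }
            if (count > 0) {
                ax[i] = cohesionWeight * (cx / count - b.x) + alignmentWeight * (avx / count - b.vx) + separationWeight * sx;
                ay[i] = cohesionWeight * (cy / count - b.y) + alignmentWeight * (avy / count - b.vy) + separationWeight * sy;
            }
        }
        // Apply all accelerations afterwards so every boid reacts to the same snapshot.
        for (int i = 0; i < n; i++) {
            Boid b = boids.get(i);
            b.vx += ax[i] * dt;
            b.vy += ay[i] * dt;
            double speed = Math.hypot(b.vx, b.vy);
            if (speed > maxSpeed) { b.vx *= maxSpeed / speed; b.vy *= maxSpeed / speed; }
            b.x += b.vx * dt;
            b.y += b.vy * dt;
        }
    }
}
```

Computing every acceleration before moving any boid keeps the update order-independent, which matters once dozens of agents share one frame.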

The use of neural data added a few more unknown variables to this situation. To use such data in exhibitions and performances, it is important to understand their working conditions. Accordingly, artist-programmers must have at their fingertips strategies to deal with the delays and signal losses that may occur during data transfer between the helmet and the computer. While the dancers wearing the helmet feel something such as excitement or frustration, sets of neurons in their brains fire, creating variations in the electric gradients of neural fields. “The neuroheadset captures the variations of these electrical impulses and the helmet interprets and sends them to the computer. These electrical impulses are then translated into numerical data. These data are programmed to interfere with the behaviors of the autonomous agents within the virtual environments”, a procedure described in [7] (p. 193).
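One strategy for signal loss is the one used on stage: when the helmet stops delivering data, switch to prerecorded neural activity. The sketch below expresses that idea in software under stated assumptions; it is not the spectacle's implementation (which used a prerecorded video under the overlay window). The class name, the staleness timeout and the looping of the recording are hypothetical choices.

```java
/**
 * Hypothetical fallback for signal loss: use live headset readings while they are
 * fresh, otherwise replay a prerecorded series of affective values in a loop.
 */
final class AffectiveSource {
    private final long timeoutMillis;
    private final double[] recorded;
    private final long recordedStepMillis;
    private double lastLive = Double.NaN;
    private long lastLiveAt;
    private boolean hasLive;

    AffectiveSource(long timeoutMillis, double[] recorded, long recordedStepMillis) {
        if (recorded.length == 0 || recordedStepMillis <= 0) {
            throw new IllegalArgumentException("need recorded values and a positive step");
        }
        this.timeoutMillis = timeoutMillis;
        this.recorded = recorded.clone();
        this.recordedStepMillis = recordedStepMillis;
    }

    /** Called whenever the headset software delivers a new reading. */
    void onLiveSample(double value, long nowMillis) {
        lastLive = value;
        lastLiveAt = nowMillis;
        hasLive = true;
    }

    /** True if the most recent live reading is no older than the timeout. */
    boolean isLive(long nowMillis) {
        return hasLive && nowMillis - lastLiveAt <= timeoutMillis;
    }

    /** The value the virtual world should use at time nowMillis. */
    double valueAt(long nowMillis) {
        if (isLive(nowMillis)) {
            return lastLive;
        }
        // Pick the recorded sample for this time slot, wrapping around the recording.
        long slot = Math.floorMod(nowMillis / recordedStepMillis, (long) recorded.length);
        return recorded[(int) slot];
    }
}
```

Passing the current time in explicitly, rather than reading the clock inside the class, keeps the switching rule easy to rehearse and test offline.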

It is worth informing the public that they will not experience literal interaction. Since literal interaction is becoming well known, people usually look for it without being aware of other, much more interesting alternatives. For the artists participating in such experiments, mixed endogenous and exogenous interactions are much more challenging, since this kind of experimentation holds the vitality and the more thought-provoking ways to conceptualize innovative computer artworks. As said before, I have called this type of mixed interplay exoendogenous interaction [7]. If the resulting mixed interactions become too literal, they could be replaced by much simpler exogenous types of interaction. In such a case, the use of neural data would amount to nothing more than a way to seek attention, instead of a set of much more interesting research inquiries.

It is necessary to define algorithms for the behaviors of each virtual object or autonomous agent in a virtual domain. As noted before, the algorithms used were implemented in Java3D™ by Andrew Davison [29]. They were adapted and transformed to achieve the project’s goals. “They need to be added to other properties, such as: colors, shapes, proportions, textures, illumination, movements, accelerations, velocities and gravities, camera location, paths and related movements, among many other attributes. Redundant procedures must be studied and backups prepared since faults, losses, and noises may happen during the data transit among computers and devices”, as quoted in [7] (p. 193).
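Noise in the readings can be damped before it reaches any of these attributes. A common choice is an exponential moving average; the sketch below assumes that technique, assumes affective scores normalized to [0, 1], and treats a NaN reading as a lost sample. The class name and the smoothing factor `alpha` are hypothetical, not taken from NumericVariations.

```java
/** Hypothetical smoothing filter for noisy affective readings assumed to lie in [0, 1]. */
final class AffectiveFilter {
    private final double alpha;   // 0 < alpha <= 1; smaller is smoother but slower to react
    private double state;
    private boolean initialised;

    AffectiveFilter(double alpha) {
        if (!(alpha > 0 && alpha <= 1)) throw new IllegalArgumentException("alpha must be in (0, 1]");
        this.alpha = alpha;
    }

    /** Feeds one reading; a NaN (lost or corrupt sample) leaves the previous state unchanged. */
    double update(double reading) {
        if (Double.isNaN(reading)) {
            return initialised ? state : 0.0;
        }
        double clamped = Math.max(0.0, Math.min(1.0, reading)); // discard out-of-range spikes
        if (!initialised) {
            state = clamped;
            initialised = true;
        } else {
            state += alpha * (clamped - state);   // exponential moving average
        }
        return state;
    }
}
```

For example, with `alpha = 0.5` the readings 0.0, 1.0, NaN, 5.0 produce 0.0, 0.5, 0.5 and 0.75: the lost sample is ignored and the spike is clamped before averaging.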

To create an artwork, all these characteristics must be planned and the way they intertwine must be chosen. These features must always ensure the best aesthetic and poetic contents throughout the whole artwork. Faults must be turned into improvements. In general, artworks focus on perceptions and sensory relations, even if these are not easily unveiled. This holds whether they rely on realistic renderings or on more abstract and daring ones.

Therefore, lights, illumination, colors, materials, proportions, and any similar artistic characteristics must always be carefully chosen. If photographs are used, they must have the best possible resolution, so that they can be shown on large projection screens. If pixelation occurs, the images must be designed so that the quality of the whole is maintained.

An interface is a boundary between two things. The software NumericVariations makes it possible to create an interface between the dancers' brains and the computer. This application establishes a flexible, temporary, noninvasive membrane between biological and electronic brains. It is rather different from a prosthetic device, which would help someone overcome, for example, a disability. It is also rather different from the scientific goal of understanding the workings of biological or electromechanical brains. This work, as art research [23], aims to achieve the poetic and aesthetic possibilities resulting from experimentation with this type of application. It is not focused on the operating aspects of either kind of brain or on any possible practical applications. It is not a procedure that helps machines become more intelligent, affective or sensitive. It is not research to understand the way biological brains work. Nor is it software aimed at developing algorithms. It is an artwork for fruition, for the expression of poetic and aesthetic qualities, and for experimentation with perceptions and sensations [30] (p. 182).

As said before, a similar approach was made possible by the creation of the robotic artwork Caracolomobile. That work relied on a neural interface described in [3], on recent neuroscience research presented in [31], [32], on artificial intelligence research shown in [1], [2], [20], and on a Brain Computer Interface (BCI) discussed in [7], [33], [34], integrated with virtual reality software built on a framework that has been in development since 2003. These factors established the basis for the creation of this experimental and interactive artwork, and the solutions achieved allowed the emergence of a symbiosis, as described by Donald Norman [26] (p. 26), in which users' emotional states such as excitement and frustration affect the virtual realms in the computer, creating slightly different configurations at each presentation of the artwork. These final outcomes take into account the fact that the audience will perceive activity caused by fluctuations of human mental states, something unimaginable in the recent past.

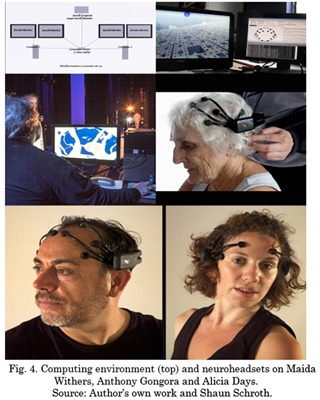

In order to achieve the artwork’s main goal and to answer the questions outlined during its conception, it was necessary to understand the possibilities available on the market. It was also important to come to terms with the logistical difficulties one faces when creating custom software to be presented in spectacles, exhibitions and performances. Therefore, first, it was necessary to find a device sophisticated enough to provide reliable data, yet simple enough to be handled by nontechnical users such as dancers or the public. Second, there was the certainty that the most important feature of such artworks would be their aesthetic and poetic qualities. Thus, after experimenting with other commercially available devices [35], the Emotiv neuroheadset was chosen [28] (Fig. 4).

This headset was regarded as the most appropriate for our purposes. It offers a noninvasive neural monitoring system and makes it possible to use the affective states of the dancers who wear it. Among the many possible affective states, those ranging from excitement to calm/frustration and vice versa were chosen. The helmets were connected to the computers via Bluetooth devices (dongles). Interference from other radio signals occurred, so the positioning of the dongles on the stage required special attention.

What does this device do? It reads facial expressions, emotional states and some cognitive data related to movements. After researching the possible results of using such data, we concluded that the most interesting and challenging approach for this artwork would be to use the helmet's affective suite. The next step was to determine which of the obtained data would affect the virtual realms, and how, so as to allow expressive, non-literal poetic results.
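Deciding "which data affect the virtual realms, and how" amounts to choosing a mapping from affective values to world parameters. The sketch below shows one possible mapping, a clamped linear interpolation from a single excitement value in [0, 1]. It is hypothetical throughout: the parameter names, their ranges and the linear curve are assumptions for illustration, not the settings used in MindFluctuations.

```java
/** Hypothetical mapping of one smoothed affective value in [0, 1] to world parameters. */
final class AffectMapping {
    /** Linear interpolation from lo (value 0) to hi (value 1); the input is clamped first. */
    static double lerp(double lo, double hi, double value) {
        double t = Math.max(0.0, Math.min(1.0, value));
        return lo + (hi - lo) * t;
    }

    /** Illustrative parameters only; names and ranges are assumptions. */
    static final class WorldParams {
        final double flockMaxSpeed;   // upper bound on agent speed
        final double treeGrowthRate;  // growth per frame of the fractal trees
        final double hue;             // 0 = red ... about 0.6 = blue on an HSB hue wheel

        WorldParams(double flockMaxSpeed, double treeGrowthRate, double hue) {
            this.flockMaxSpeed = flockMaxSpeed;
            this.treeGrowthRate = treeGrowthRate;
            this.hue = hue;
        }
    }

    /** Calm (0) gives slow flocks, slow growth and cool colors; excitement (1) the opposite. */
    static WorldParams fromExcitement(double excitement) {
        return new WorldParams(
                lerp(0.5, 3.0, excitement),
                lerp(0.01, 0.1, excitement),
                lerp(0.6, 0.0, excitement));
    }
}
```

A linear map is the simplest starting point; an eased or thresholded curve would make the world respond only to stronger emotional swings, which may suit a less literal reading of the data.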

The autonomous agents inhabiting the virtual realms were planned as sets of design principles that enable them to exhibit appropriate behaviors within a given virtual environment. They are small systems that can be regarded as relatively intelligent. An agent is anything that can be viewed as perceiving its environment through sensors (virtual or physical) and acting upon that environment, as presented by Russell and Norvig [2]. Agents are relatively simple sets of instructions programmed to produce complex and unpredictable behaviors. Although very simple, such sets of instructions, organized as software, may exceed the capabilities of their programmers. The Deep Blue chess-playing computer developed by IBM, which defeated world champion Garry Kasparov in 1997, and Stanley, the robotic car created at Stanford University that won the DARPA Grand Challenge in 2005, are just two examples of the progress in developing autonomous processes with computers.
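The perceive-then-act definition can be written down directly as a Java interface. The sketch below follows the Russell and Norvig framing with a simple reflex agent, whose action depends only on the current percept; the obstacle-avoiding "bird" and its 2.0-unit threshold are hypothetical examples, not agents from NumericVariations.

```java
import java.util.function.Function;

/** An agent maps what it perceives of its environment to an action (after Russell and Norvig). */
interface Agent<P, A> {
    A act(P percept);
}

/** A simple reflex agent: its action depends only on the current percept. */
final class ReflexAgent<P, A> implements Agent<P, A> {
    private final Function<P, A> rule;

    ReflexAgent(Function<P, A> rule) {
        this.rule = rule;
    }

    @Override
    public A act(P percept) {
        return rule.apply(percept);
    }
}

/** Hypothetical demo: a flying agent senses the distance to the nearest obstacle. */
final class AgentDemo {
    public static void main(String[] args) {
        Agent<Double, String> bird =
                new ReflexAgent<>(distance -> distance < 2.0 ? "steer away" : "keep course");
        double[] sensedDistances = {5.0, 3.1, 1.4, 0.8, 4.0};
        for (double d : sensedDistances) {
            System.out.println(d + " -> " + bird.act(d));
        }
    }
}
```

Many such rules running in parallel, each reacting only to its own percepts, is what allows simple instructions to add up to the complex, unpredictable collective behavior described above.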

Regarding hardware, for MindFluctuations a system of two identical computers was chosen to run the application NumericVariations. Each computer had two graphics cards and two monitors (Fig. 4) and ran consecutive virtual worlds. The monitor connected to the projector showed the virtual world being affected by the neural activity of the dancer performing on stage. The helmet software and a prerecorded video of neural activity ran on the other monitor. The video served as an alternative plan when the helmet signal was lost. During the spectacle, this monitor was operated by the author, as was the second computer, which was simultaneously being prepared to run the following virtual world. Two data switchers connected the two computers and made it possible to swap them at the end of each section. Another switcher, used for the video projection system on stage, sometimes allowed the transition between two consecutive virtual worlds to happen with a fading effect.

Taking into account the problems that could arise during the spectacle, two versions of the application were created: one using two computers and one using only one, in case one of the two computers needed to be rebooted during the presentation. Both versions were installed on both computers. Because the whole system would be offstage, and because this type of presentation was completely new, a short video explaining the operation of the system was created, uploaded to the Internet and shown on a TV in the theater foyer before the beginning of the performance [20]. The music was played live, and the musicians, their instruments and devices were located in the orchestra pit in front of the stage. To simplify the activities that took place offstage, automated viewpoint animations (walkthroughs) were created for each virtual world once each section was defined. These animations started about 20 seconds after the beginning of the world and ended about 30 seconds before its end. The initial interval provided flexibility for changing computers and for the transition performed by the projector's switcher. The final interval was needed so that the views could be changed manually depending on the development of each virtual world. For example, if the 'trees' grew too much, a point of view that moved away from them was chosen; if they shrank, an approaching view was selected, always presenting them in their entirety. Assisting stage personnel were trained to help the dancers exchange helmets whenever the choreography called for it. Each helmet has 16 sensors that need to be in contact with the user's scalp. They also need to be kept moist with saline solution to ensure good electrical contact.
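The offstage timing rule (automated walkthrough from about 20 s after a world starts until about 30 s before it ends, then manual views chosen to keep the trees fully visible) can be captured in a small helper. This is a hypothetical sketch: the class, the enum and the idea of a "framed" size band for the trees are assumptions used only to illustrate the decision, not the procedure coded in NumericVariations.

```java
/** Hypothetical helper for the offstage timing of one section (times in seconds). */
final class SectionTiming {
    static final double LEAD_IN = 20.0;   // time for swapping computers and the projector fade
    static final double LEAD_OUT = 30.0;  // reserved for manual view changes at the end

    /** Returns {start, end} of the automated walkthrough, or null if the section is too short. */
    static double[] walkthroughWindow(double sectionLength) {
        double start = LEAD_IN;
        double end = sectionLength - LEAD_OUT;
        return end > start ? new double[] {start, end} : null;
    }

    enum View { MOVE_AWAY, APPROACH, KEEP }

    /**
     * Chooses a manual view so the trees stay fully framed; the size band
     * [minFramed, maxFramed] is an assumed parameter.
     */
    static View chooseView(double treeHeight, double minFramed, double maxFramed) {
        if (treeHeight > maxFramed) return View.MOVE_AWAY;   // trees grew too much
        if (treeHeight < minFramed) return View.APPROACH;    // trees shrank
        return View.KEEP;
    }
}
```

For a five-minute section, `walkthroughWindow(300)` gives an automated walkthrough from 20 s to 270 s, leaving the last 30 s for manual views.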

4. Conclusion

Semiotic universes that fight against stereotypes and the dulling of our capabilities constitute an intriguing art research approach. The profile of the artists who will explore such domains is that of the artist-researcher, that is, one who delights in unknown exploratory adventures and the challenges of diving into other knowledge areas. The type of experimental artwork emerging from this context demands intensive partnership, cooperation and collaboration with scientists and technical developers. The emergence and proliferation of garage laboratories will probably boost such partnerships. The exercise of freedom made possible by the development of Computer Art enriches this process and has the latent potential to uncover new and exciting niches of experimental research.

Another consideration that comes to mind is the philosophical problem arising from Gödel's incompleteness theorems, which adds a very interesting dimension to the spectacle setup due to the conclusion that no system alone can explain itself. Accordingly, perhaps we will never understand the whole unfathomable potential that the biological brain presents to us. But, being optimistic, it is possible to foresee further changes driven by the exchange of knowledge that open software and hardware communities are providing. This exchange is fostering improvements that are transforming society. The role of computer artists in this process is becoming relevant. Artists experimenting with sensory and semiotic characteristics may integrate them with other inherent aspects of computer languages, thus creating unexpected results. In this context, one wonders: What systems of organization could emerge or be developed from these new sensory and expressive gatherings? What new morphologies could be studied?

To broaden the topic, it is interesting to mention a few related studies, such as the one by the Portuguese researcher Eduardo Miranda. At the University of Plymouth in the United Kingdom, he developed a BCI device that allows individuals with severe disabilities to play music using only data from their own brains [37]. Another stimulating approach, although contrary to that of this work, is the research developed by the Chilean group EMOVERE. Its performers use physiological parameters collected from sensors attached to their bodies to deliver data from each performer's body to the devices used in the spectacle. They train to control their emotional states, entering them at will using the Alba Emoting system, since they understand emotion “as a corporal event where different physical actions generate psychological states” [38], [39].

I am convinced that Computer Art courses need to be created. Beyond the traditional disciplines used to train artists, it is necessary to introduce the study of mathematics, physics, computer science and robotics. This logical universe is generally averse to artists, and it may be quite difficult, but not impossible, to include such subjects in a curriculum. Over the last 60 years, many computer artists, myself included, often with very low budgets, have been developing creative strategies to design works and projects anchored in visual, gestural, auditory and tactile languages, integrating them with mathematics. The unfathomable field of possibilities open to artists is a melting pot for thought exercises that constitute a great reward for our endeavors.

In summary, it is possible to speculate on the growing capacity of human cognitive, affective and sensory systems to develop a potential symbiosis with machines, a process presented in [3] (pp. 438, 494–495). It is unlikely that we will someday understand and relate to all the factors inherent in the complexity of the phenomena involved in human neural, affective and cognitive systems. But, while we are here on this planet, our task is to try, forever.

5. Acknowledgments

Maida Withers Dance Construction Company, SEAS School of Engineering and Computer Science of The George Washington University, Institute of Mathematics and Art of São Paulo. Photographer: Shaun Schroth. Musicians: John Driscoll and Steve Hilmy. Light design: Izzy Einsidler. Sculptor: David Page. Scene manager: Tarythe Albrecht. Customized software in Java (Java3D™ API): Pedro Garcia and Tania Fraga. Software engineer: Mauro Pichiliani. Conception, implementation, graphic interface, interactive project, photos and images: Tania Fraga. Mathematical consultant: Donizetti Louro.

References

[1] E. B. Baum, What is thought? Cambridge: MIT Press, 2004.

[2] S. J. Russell and P. Norvig, Artificial intelligence. New Jersey: Prentice Hall, 2003.

[3] T. Fraga, “Caracolomobile: affect in computer systems,” AI Soc., vol. 28, no. 2, pp. 167–176, May 2013.

[4] T. Fraga, “Arte computacional: diferencias y convergencias / Computer art: divergences and convergences,” in Poéticas de la biología de lo posible. Hábitat y vida, I. Garcia and Universidad Javeriana, Eds. Bogotá: Pontificia Universidad Javeriana, 2012, pp. 87–102.

[5] T. Fraga, “Por trás da cena: produção de espetáculos com cenários interativos / Behind the scene: production of spectacles with interactive scenarios,” in Estética de los mundos posibles, Universidad Javeriana, Ed. Bogotá: Pontificia Universidad Javeriana, 2016, pp. 183–198.

[6] T. Fraga, “Technoetic syncretic environments,” Technoetic Arts, vol. 13, no. 1, pp. 169–185, Jun. 2015.

[7] T. Fraga, “Numeric Tessituras,” Technoetic Arts, vol. 8, no. 2, pp. 243–250, Nov. 2010.

[8] T. Fraga, “Reality, Virtuality and Visuality in the Xamantic Web,” in Reframing Consciousness, R. Ascott, Ed. Exeter: Intellect, 1999, pp. 211–220.

[9] T. Fraga, “Xamantic Journey.” CD-ROM, artist's collection, São Paulo, 1999.

[10] T. Fraga, “Inquiry into Allegorical Knowledge Systems for Telematic Art,” in Art, technology, consciousness, R. Ascott, Ed. Bristol, UK: Intellect, 2000, pp. 59–64.

[11] T. Fraga, “Virtualidade e realidade/Virtuality and reality,” in Criação e poéticas digitais, D. Domingues, Ed. Caxias do Sul: EDUCS, 2005, pp. 137–147.

[12] M. L. Fragoso, “4D. arte computacional no Brasil: reflexão e experimentação,” Brasília: UnB, 2005.

[13] T. Fraga, “NumericVariations: Exoendogenous Computer Art exploring neural connections,” in 4th Computer Art Congress, 2014, pp. 147–158.

[14] T. Fraga, “Exoendogenias,” in 3a Semana de Pesquisa da Escola de Comunicações e Artes da USP, 2012, pp. 46–66.

[15] T. Fraga, “Jardim de Epicuro,” 2014. [Online]. Available: http://youtu.be/2v2L86J1JtA. [Accessed: 15-Dec-2017].

[16] W. Barja and T. Fraga, “Greater than or Equal to 4D,” in Maior ou Igual a 4D: Arte Computacional Interativa, 2004.

[17] T. Fraga, “Artes interativas e método relacional para criação de obras.” p. 15, 1988.

[18] T. Fraga, “NumericVariations,” 2015. [Online]. Available: https://www.youtube.com/watch?v=hf9nCtBLJ7k. [Accessed: 15-Dec-2017].

[19] T. Fraga, “NumericVariations,” 2014. [Online]. Available: https://www.youtube.com/watch?v=u0Z9IjR_VuU. [Accessed: 11-Dec-2017].

[20] T. Fraga, “MindFluctuations,” 2015. [Online]. Available: https://vimeo.com/124881728.

[21] T. Fraga, “MindFluctuations,” 2015. [Online]. Available: http://alturl.com/k263i/. [Accessed: 15-Dec-2017].

[22] T. Fraga, “MindFluctuations,” 2015. [Online]. Available: https://vimeo.com/126002412. [Accessed: 15-Dec-2017].

[23] K. Busch, “Artistic research and the poetics of knowledge,” 2014. [Online]. Available: http://www.artandresearch.org.uk/v2n2/busch.html. [Accessed: 15-Dec-2017].

[24] R. A. Guisepi, “The Arts.” [Online]. Available: http://historyworld.org/arts.htm. [Accessed: 15-Dec-2017].

[25] T. Fraga, “Jardim de Epicuro,” 2014. [Online]. Available: http://youtu.be/2v2L86J1JtA. [Accessed: 15-Dec-2017].

[26] D. Norman, O design do futuro, 1st ed. Rio de Janeiro: Rocco, 2010.

[27] E. Couchot, “A tecnologia na arte: da fotografia à realidade virtual,” Porto Arte, vol. 13, no. 21, pp. 128–130, 2004.

[28] T. Le (CEO) and D. G. M. (CTO), “Emotiv,” 2011. [Online]. Available: http://emotiv.com.

[29] A. Davison, Pro Java™ 6 3D game development, 1st ed. New York: Springer-Verlag, 2014.

[30] R. Picard, Affective computing. Cambridge: MIT Press, 2000.

[31] M. Nicolelis, Muito além do nosso eu. São Paulo: Companhia das Letras, 2011.

[32] A. Damásio, E o cérebro criou o homem. São Paulo: Companhia das Letras, 2011.

[33] T. Fraga, M. Pichiliani, and D. Louro, “Experimental Art with Brain Controlled Interface,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 8009, no. 1, Las Vegas, 2013, pp. 642–651.

[34] M. C. Pichiliani, C. M. Hirata, and T. Fraga, “Exploring a Brain Controlled Interface for Emotional Awareness,” in 2012 Brazilian Symposium on Collaborative Systems, 2012, pp. 49–52.

[35] K. Lee, S. Yang, and J. Lim, “NeuroSky,” 2017. [Online]. Available: http://neurosky.com/.

[36] T. Fraga and M. L. Fragoso, “21st Century Brazilian (experimental) Computer Art,” in Proceedings of the 3rd Computer Art Congress, 2012.

[37] AbleData, “Bci Music,” 2017. [Online]. Available: http://www.abledata.com/product/bcimusic. [Accessed: 15-Dec-2017].

[38] Emovere Group, “Emovere: Body, Sound and Movement,” 2015. [Online]. Available: http://www.emovere.cl/en/. [Accessed: 15-Dec-2017].

[39] “Alba technique.” [Online]. Available: www.albatechnique.com/. [Accessed: 14-Nov-2017].