1. Introduction

Critical reading competencies involve understanding, interpreting, and evaluating texts in different contexts, such as academic and non-specialized texts (Vozgova and Afanasyeva, 2020; Untari et al., 2023). A student must first grasp the content of a text, i.e., its words, expressions, phrases, and context (Din, 2020). This competence also involves the ability to connect the parts of a text so that its overall meaning is understood, identifying its formal structure, its different voices, and the relationships between its parts. Finally, the critical ability to reflect on and evaluate a text includes analyzing the validity of arguments, recognizing discursive strategies, and contextualizing information. Critical reading is essential in 21st-century education because it promotes analytical thinking and complex problem-solving (Din, 2020; Vozgova and Afanasyeva, 2020).

Wallace (1999) studied critical pedagogy and critical reading courses to analyze power and ideological discourses in texts, using activities such as observation of reading practices and systemic-functional grammatical analysis. The results showed greater critical awareness and collaboration among students, and the author recommended incorporating critical pedagogy into the curriculum to develop critical reading skills. Vozgova and Afanasyeva (2020) proposed pedagogical strategies to assess critical reading competence through activities before, during, and after reading to foster students' critical awareness. They also introduced a three-dimensional approach to critical reading analysis (micro, meso, and macro) to understand textual meanings in social contexts, and included expansion activities to apply these skills in new situations, promoting critical thinking and collaboration. On the other hand, Tshering (2023) evaluated the implementation of the Four Resources Model (FRM) proposed by Freebody and Luke (1990) to teach critical reading in an English as a Second Language (ESL) course in Bhutan. The FRM helped students decode texts, construct meanings in groups, analyze ideologies, and create new texts. The qualitative results showed that the classes encouraged critical thinking; however, the author noted that more time and professional training are needed to implement these essential reading skills effectively.

Several studies emphasize the importance of promoting creativity and innovation in activities that develop critical reading competence. However, the literature also suggests that teachers need specific training in this area to encourage critical thinking in students and thus develop responsible citizens who are less susceptible to misinformation (Rofiuddin and Priyatni, 2017; Ilyas, 2023). Rofiuddin and Priyatni (2017) evaluated a critical reading learning model designed to raise the critical consciousness of university students, helping them understand, evaluate, and respond to texts that reveal ideological messages and practices of domination. Using a Research and Development (R&D) design applied to 56 students at Negeri Makassar University, they found that students who followed the model achieved better critical reading results than the control group. The authors underlined the importance of critical reflection, the evaluation of diverse perspectives, and sociopolitical analysis, and recommended implementing the model in university classrooms to promote critical consciousness.

In the digital era, it is essential to create effective and practical critical reading modules for students (Surdyanto & Kurniawan, 2020). Surdyanto and Kurniawan (2020) employed the ADDIE model (Analysis, Design, Development, Implementation, Evaluation), drawing inferences from observations, interviews, and needs analysis to identify problems in the teaching of critical reading. In their study, 92% of students achieved high critical thinking levels across the practical modules. The study highlights the importance of learning and teaching materials that integrate language skills and content to develop critical understanding. These results are consistent with Jiménez-Pérez (2023), who recommends improving teaching practice, conducting more research, and developing these skills at the beginning of academic programs. On the other hand, Timarán-Buchely et al. (2021) developed a model to evaluate critical reading using decision trees, based on the Saber Pro performance test results of students from the Pontificia Universidad Javeriana Cali between 2017 and 2018. The independent variables of the model were the student's academic program, age, and transportation index. The J48 algorithm was the most accurate classifier, with an accuracy of 68.85%, higher than the other algorithms evaluated: Decision Stump (53.02%), LMT (62.37%), Random Forest (57.89%), Random Tree (53.89%), and REPTree (55.50%). These results help educational institutions understand the factors that affect academic performance and improve the quality of education.

As noted above, critical reading competence is essential for students in any professional career, and its development throughout the undergraduate degree is key to their professional practice. Several authors (Din, 2020; Vozgova & Afanasyeva, 2020; Untari et al., 2023) also highlight the importance of conducting different types of studies on critical reading competence, given its impact on the development of long-term skills. This competence is essential not only for analyzing and interpreting texts but also for fostering critical thinking and decision-making, which will remain fundamental in academic and professional settings in the years to come.

This study evaluates the added value in the critical reading competency of students at the Universidad Católica Luis Amigó, Medellín campus. The statistical analysis compares results from the Saber 11 test (taken at the end of high school) with a critical reading test administered by the university midway through the academic program. In previous work, Posada et al. (2023) analyzed the Quantitative Reasoning competency through a quartile-based statistical analysis. This study also develops and validates a statistical model that enables predictions and inferences about performance in this competency in future years, facilitating the implementation of targeted improvement plans.

2. Methodology

The methodology is quantitative, with a non-experimental, longitudinal, and correlational design (Montoya et al., 2018). The added value in critical reading is evaluated for students of Universidad Católica Luis Amigó. The following steps are proposed to estimate the added value and to build a critical reading model based on statistical assumptions.

2.1. Step 1: Sample selection and demographic characterization

The sample consists of students who chose to take the institutional critical reading test. The data were obtained from the Admissions and Academic Registration Department and the Department of Virtual and Distance Education of the Universidad Católica Luis Amigó. The test is administered online through the virtual campus (Campus Virtual, 2023). All students analyzed are located in Medellín and enrolled in different undergraduate programs. The added-value analysis corresponds to 2022, whereas the model was developed in 2018 and its predictive capability was then evaluated on data from 2019 to 2022, years that span the COVID-19 pandemic and its aftermath.

2.2. Step 2: Data Collection

Data are collected from students who took the institutional test, which evaluates different skills, in particular critical reading. This test is taken midway through the degree (between the fifth and seventh academic semesters) and is administered by expert teachers and staff of the Universidad Católica Luis Amigó together with the Department of Basic Sciences (Universidad Católica Luis Amigó, 2023). Students give their consent to be evaluated in reading competence when they complete the form and take the institutional test. For these students, Saber 11 results are also collected (Presidencia de la República, 2010), together with demographic information and other qualitative and quantitative variables. The Saber 11 test assesses the skills of eleventh-grade students and provides critical information on competencies such as reading comprehension; these data are essential for universities to design leveling courses and dropout prevention programs.

The Saber 11 test also evaluates the educational quality of schools against national standards and supports the construction of added-value and educational quality indicators, which are instrumental for institutional self-evaluation and for formulating educational policies at various levels. Reading competence is assessed by examining students' ability to identify and understand the local content of a text, comprehend how its parts interconnect to convey a global meaning, and reflect on and evaluate its content. These skills are measured through questions that test students' interpretation of literary and informational texts. The test also assesses their ability to contextualize information and establish relationships between different statements and texts. This comprehensive evaluation provides a detailed picture of students' abilities, highlighting their strengths and areas for improvement.

2.3. Step 3: Statistical variables analysis and selection

Critical reading competence is the dependent variable analyzed in association with sociodemographic factors and institutional test scores, which are treated as covariates. Table 1 provides a detailed description of the variables under study, categorized into dependent and covariate groups. The dependent variable, critical reading, represents the score obtained on the institution-administered test, measured on a scale from 1 to 100.

Table 1 Variables for the Critical Reading and Quantitative Reasoning tests.

| Variable type | Code | Description | Range |
|---|---|---|---|
| Dependent variable | CRITICAL_READING | Score obtained on the institutional critical reading test | 1-100 |
| Covariates | SABER11_LANGUAGE | Saber 11 Language test score | 1-100 |
| | PROGRAM | Academic program in which the student is enrolled | 1-19 |
| | SCHOOL_TYPE | Type of school the student graduated from | 1: public; 2: private |
| | STRATO_SE | Socioeconomic level | 1-6 |
| | WHO DOES HE LIVE WITH | Who the student lives with | 1-4 |
| | MONTHLY_INCOME_FAMILY | Family monthly income | 1-5 |
| | HAS_SOURCE_OF_LABOR_INCOME | Whether the student has a source of income from work | 1-2 |
| | EDUCA_PADRE | Father's years of education | 1-5 |
| | EDUCA_MOTHER | Mother's years of education | 1-5 |
| | SEPARATED PARENTS | Whether the student's parents are separated | 1-2 |
| | AVERAGE_ACCUMULA | Cumulative career average | 1-5 |

The covariates include the language score on the Saber 11 test (coded as SABER11_LANGUAGE), the student's academic program, the type of school (public or private), socioeconomic level, living arrangements, monthly family income, whether the student has a source of income from work, the parents' years of education, whether the parents are separated, and the student's cumulative career average. According to Psyridou et al. (2024), these factors significantly influence the development of critical reading skills in children and adolescents. Their research shows that high socioeconomic status, access to resources, engagement in literacy activities, parental involvement in schooling, and a structured home environment are associated with stronger critical reading skills. The study emphasizes the importance of the family environment and calls for further research to deepen the understanding of these factors. Identifying the qualitative and quantitative variables is essential for applying measurement instruments appropriately, conducting value-added analysis, and building robust mathematical models.

2.4. Step 4: Development of a model for estimating critical reading

The model for estimating the Critical Reading score was developed using multiple linear regression. The dependent variable is the Critical Reading score, and the candidate independent variables are those that showed an association in the correlation matrix. Multilevel (hierarchical) linear models (MLM) are not used because the sample comes only from the Medellín campus of Universidad Católica Luis Amigó. Instead, forward selection is applied to develop three proposed models, each incorporating independent variables associated with the Critical Reading test. The general form of the regression model is given in Equation (1).

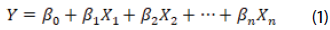

In Equation (1), Y is the dependent variable (the Critical Reading score), β0 is the intercept (the expected score when all independent variables are zero), βi is the change in the expected score associated with a one-unit increase in the independent variable Xi while the other variables are held constant, and Xi corresponds to the independent variables.
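As a minimal sketch of how Equation (1) can be fitted and how forward selection adds predictors one at a time, the Python code below solves the least-squares normal equations directly and, at each step, adds the candidate that most increases the adjusted R². This is an illustration under stated assumptions, not the SPSS procedure used in the study: SPSS's forward method enters variables according to an F-to-enter probability criterion, whereas here a hypothetical `min_gain` threshold on adjusted R² stands in for it. The function names and the toy data are illustrative only.

```python
from typing import Dict, List, Sequence, Tuple


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [rv - f * cv for rv, cv in zip(m[r], m[col])]
    return [m[i][n] for i in range(n)]


def fit_ols(columns: Sequence[Sequence[float]], y: Sequence[float]) -> Tuple[List[float], float]:
    """Fit Y = b0 + b1*X1 + ... + bk*Xk by least squares; return ([b0, ..., bk], R^2)."""
    n = len(y)
    rows = [[1.0] + [col[i] for col in columns] for i in range(n)]  # leading 1 = intercept
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)  # normal equations (X'X) beta = X'y
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / n
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return beta, 1.0 - sse / sst


def adjusted_r2(r2: float, n: int, k: int) -> float:
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


def forward_select(candidates: Dict[str, Sequence[float]], y: Sequence[float], min_gain: float = 0.001):
    """Greedy forward selection: add the predictor that most raises adjusted R^2 and stop
    when no remaining candidate improves it by more than min_gain (hypothetical threshold)."""
    n = len(y)
    chosen: List[str] = []
    best_adj = 0.0  # intercept-only model: R^2 = adjusted R^2 = 0
    while True:
        k = len(chosen) + 1
        scores = {}
        for name in candidates:
            if name in chosen or n - k - 1 <= 0:
                continue
            _, r2 = fit_ols([candidates[c] for c in chosen + [name]], y)
            scores[name] = adjusted_r2(r2, n, k)
        if not scores:
            break
        name, adj = max(scores.items(), key=lambda kv: kv[1])
        if adj <= best_adj + min_gain:
            break
        chosen.append(name)
        best_adj = adj
    beta, r2 = fit_ols([candidates[c] for c in chosen], y)
    return chosen, beta, r2


# Toy data: y depends on x1 (plus small noise); x2 is unrelated.
x1 = [1, 2, 3, 4, 5, 6, 7, 8]
x2 = [5, 3, 8, 1, 7, 2, 6, 4]
noise = [0.1, -0.1, 0.05, -0.05, 0.1, -0.1, 0.0, 0.0]
y = [2 + 3 * a + e for a, e in zip(x1, noise)]
chosen, beta, r2 = forward_select({"x1": x1, "x2": x2}, y, min_gain=0.01)
print(chosen, [round(b, 2) for b in beta])  # ['x1'] [2.03, 2.99]
```

Only `x1` enters, and its coefficients recover the intercept 2 and slope 3 up to the noise. Solving the normal equations is fine for a handful of well-scaled predictors; a QR-based solver would be numerically safer for larger or collinear designs.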

2.5. Step 5: Statistical analysis to quantify the added value of the institution

Added value is analyzed using quintiles, positional measures that divide the score range into five equal portions: Quintile 1 covers the lowest 20% of the scale and Quintile 5 the highest 20% (Montgomery, 2020; Posada-Hernández et al., 2023). The comparison is made by plotting the results on a Cartesian plane, with the Saber 11 test results on the x-axis and the institutional test results on the y-axis. Bar graphs of the positive and negative values complement the analysis. Added value in the upper quintiles is the most important, as it reflects the institution's most significant contributions. All statistical analyses are conducted using SPSS software.
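Our reading of Table 3 (an interpretation, not a procedure stated by the authors) is that each quintile is an equal-width band of the test's own scale (0-19.9, 20-39.9, ... for Saber 11; 0-0.9, 1.0-1.9, ... for the institutional test), and that the added value of a band is the percentage of students in that band on the institutional test minus the percentage in the same band on Saber 11 (e.g., Q4: 20% - 36% = -16%). The sketch below implements that reading; the function names and the representative scores used to rebuild the 2022 counts are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def quintile_band(score: float, scale_max: float) -> int:
    """Map a score on [0, scale_max] to one of five equal-width bands, 1 (lowest) to 5."""
    band = int(score / (scale_max / 5.0)) + 1
    return min(band, 5)  # the top of the scale (100 or 5.0) falls in Q5


def band_percentages(scores: Sequence[float], scale_max: float) -> Dict[int, float]:
    counts = Counter(quintile_band(s, scale_max) for s in scores)
    return {q: 100.0 * counts[q] / len(scores) for q in range(1, 6)}


def added_value(saber11: Sequence[float], institutional: Sequence[float],
                saber_max: float = 100.0, inst_max: float = 5.0) -> Dict[int, float]:
    """Per band: % of students on the institutional test minus % on Saber 11."""
    p_saber = band_percentages(saber11, saber_max)
    p_inst = band_percentages(institutional, inst_max)
    return {q: p_inst[q] - p_saber[q] for q in range(1, 6)}


# Rebuild the 2022 counts of Table 3 with one representative score per band.
saber = [30.0] * 17 + [50.0] * 363 + [70.0] * 214 + [90.0] * 2
inst = [0.5] * 43 + [1.5] * 145 + [2.5] * 283 + [3.5] * 120 + [4.5] * 5
print({q: round(v) for q, v in added_value(saber, inst).items()})
# {1: 7, 2: 21, 3: -13, 4: -16, 5: 1}
```

The Q2 to Q5 values match the added-value column of Table 3; Q1 rounds to 7 here, versus 8 in the table.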

3. Results and discussion

3.1. Student participation in the test

The value-added analysis for 2022 focused on students enrolled in the fifth semester during academic periods 01 and 02 of that year. Program directors at the Medellín campuses extended broad invitations to students to participate. Table 2 illustrates student participation trends over the years. Although student response in 2022 was notable, participation decreased compared to 2021. Students typically take the test during the fifth semester of their undergraduate program; each semester consists of four months of academic study. The analyzed fifth-semester student population has the following characteristics:

Educational background: 70% of students come from public educational institutions.

Socioeconomic status: 74% live in neighborhoods classified as levels 1, 2, and 3, with family incomes between 1 and 2 million pesos per month.

Living conditions: More than half (54.09%) live with relatives.

Employment status: 59% do not work.

Family dynamics: 60.4% have separated parents.

Parental education: Among fathers, 69% have a maximum high school education level, while this figure is 65% among mothers.

3.2. Institutional added value

The institutional test results were evaluated on a scale from 0 to 5, with an average score of 2.2, below the acceptable level of 3.0. Similarly, in the Saber 11 test, scored on a scale from 0 to 100, average results across locations did not exceed 56 points, short of the acceptable threshold of 60. Table 3 summarizes the Critical Reading and Saber 11 results by quintile, offering an overview of student performance in these assessments. The distribution across quintiles reveals limited added value in critical reading. The highest percentage of students with added value is found in quintile Q2, the second-lowest band (Q1 and Q2 together cover the lowest 40% of the score range). Conversely, quintiles Q3 and Q4 show negative added value. A small percentage (1%) of students in quintile Q5 shows added value, indicating that even small progress is possible. These results serve as a baseline for future improvement, and significant potential for growth remains.

Table 3 Added value Critical Reading 2022, Medellín campus.

| Quintile | Saber 11 score | Saber 11 students (n) | Saber 11 (%) | Institutional test score | Institutional students (n) | Institutional (%) | Added value (% of students) |
|---|---|---|---|---|---|---|---|
| Q1 | 0.0-19.9 | 0 | 0 | 0.0-0.9 | 43 | 8 | 8 |
| Q2 | 20.0-39.9 | 17 | 3 | 1.0-1.9 | 145 | 24 | 21 |
| Q3 | 40.0-59.9 | 363 | 61 | 2.0-2.9 | 283 | 47 | -13 |
| Q4 | 60.0-79.9 | 214 | 36 | 3.0-3.9 | 120 | 20 | -16 |
| Q5 | 80.0-100.0 | 2 | 0 | 4.0-5.0 | 5 | 1 | 1 |

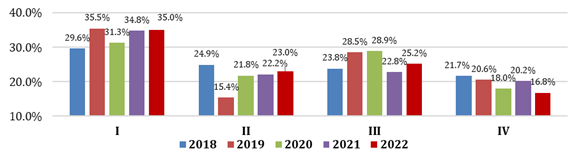

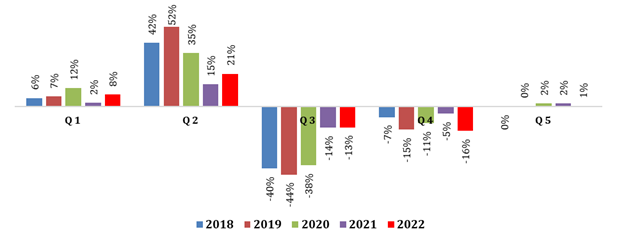

The comparison of Critical Reading results from 2018 to 2022 shows no statistically significant changes in added value across quintiles; the only change is a 1% increase in quintile Q5 between 2018 and 2022. Figure 1 shows the results of this temporal analysis.

Figure 1 Percentage of students with added value by quintiles: Critical reading 2018-2022, Medellín campus.

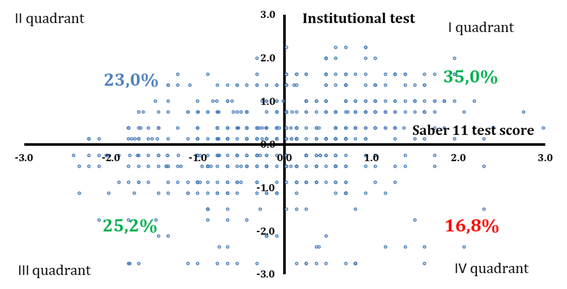

The distribution of score deviations across quadrants shows the percentage of students with added value in Critical Reading. Figure 2 illustrates this distribution by quadrant. Students are classified into four quadrants according to whether their Saber 11 Language and Critical Reading Simulation (institutional test) scores are above or below the respective averages. In Quadrant I, 35% of students score above average on both tests, reflecting minimal institutional contribution. Quadrant II, with 23% of students, shows improvement in Critical Reading: these students scored below average in Saber 11 but above average in the Simulation. While this progress is encouraging, further improvement is needed. Quadrant III, with 25.2% of students, includes those who scored below average on both tests, indicating a lack of added value from the institution. Finally, Quadrant IV, with 16.8% of students, includes those who scored above average in Saber 11 but below average on the institutional test.
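The quadrant classification can be sketched as follows, assuming (our assumption) that the Cartesian plane is centred on the arithmetic means of each test and that a score exactly at a mean counts as "below". With Saber 11 on the x-axis and the institutional test on the y-axis, Quadrant II (below average on entry, above average midway) is the one that signals added value. The function names and toy scores are hypothetical.

```python
from statistics import fmean
from typing import Dict, Sequence


def quadrant(saber: float, inst: float, saber_mean: float, inst_mean: float) -> str:
    """Quadrant on the (Saber 11, institutional) plane centred on the means.
    A score exactly at a mean counts as 'below' (an arbitrary tie rule)."""
    above_saber = saber > saber_mean
    above_inst = inst > inst_mean
    if above_saber and above_inst:
        return "I"    # above average on both tests
    if not above_saber and above_inst:
        return "II"   # below average on entry, above average midway: added value
    if not above_saber and not above_inst:
        return "III"  # below average on both tests
    return "IV"       # above average on entry, below average midway


def quadrant_shares(saber_scores: Sequence[float], inst_scores: Sequence[float]) -> Dict[str, float]:
    ms, mi = fmean(saber_scores), fmean(inst_scores)
    counts = {"I": 0, "II": 0, "III": 0, "IV": 0}
    for s, i in zip(saber_scores, inst_scores):
        counts[quadrant(s, i, ms, mi)] += 1
    return {q: 100.0 * c / len(saber_scores) for q, c in counts.items()}


# Five hypothetical students; the last one sits exactly at both means.
print(quadrant_shares([40, 40, 70, 70, 55], [2.0, 4.0, 2.0, 4.0, 3.0]))
# {'I': 20.0, 'II': 20.0, 'III': 40.0, 'IV': 20.0}
```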

Figure 2 Distribution of the Percentage of students by quadrants in the critical reading added value in 2022.

Figure 3 compares the percentage of students in each quadrant from 2018 to 2022. Quadrant I shows a slight increase, which has minimal impact on added value. Quadrant II shows a small increase, which is nonetheless relevant because it represents students to whom the institution adds value. Conversely, Quadrant III shows a slight rise, which is unfavorable because it indicates more students with low scores on both the Saber 11 Language test and the Critical Reading Simulation. Finally, Quadrant IV shows a slight decline in the percentage of students who enter the institution with above-average Saber 11 Language scores yet obtain below-average scores in the Critical Reading Simulation.

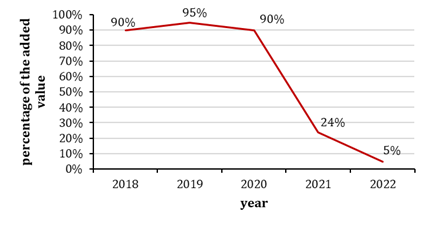

Figure 4 shows the percentage of programs with added value over time. In earlier years, the Critical Reading competency showed high percentages: 90% of the programs demonstrated added value in 2018, rising to 95% in 2019 before returning to 90% in 2020. However, the percentage fell sharply in 2021 and 2022, to 24% and 5%, respectively.

3.3. Results of the development of a model that evaluates critical reading competence

A multiple linear regression model was used to estimate the Critical Reading score at Universidad Católica Luis Amigó. First, a correlation matrix was used to assess the relationship between the Critical Reading score and various sociodemographic and academic variables (Montgomery, 2020). Table 4 displays the correlations between the Critical Reading score and the demographic variables, the Saber 11 scores, the time taken for the Saber 11 test, and the institutional Quantitative Reasoning test score. Significant positive correlations are observed with the Quantitative Reasoning score (0.250), the cumulative average (0.234), and the Saber 11 Mathematics (0.208) and Language (0.260) scores. Significant negative correlations are observed with the time taken to complete the Saber 11 test (-0.114) and with having a source of income from work (-0.160). Variables such as type of school, socioeconomic level, family income, and parents' education are not significantly correlated with the Critical Reading score.
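The significance values in Table 4 come from testing whether each Pearson correlation differs from zero. A minimal sketch, assuming the usual statistic t = r·sqrt((n - 2)/(1 - r²)), is shown below; because the Python standard library has no t distribution, the sketch approximates the t distribution with n - 2 degrees of freedom by the standard normal, which is reasonable for samples in the hundreds, as in Table 4, but not for small n. The function names are illustrative.

```python
from math import sqrt
from statistics import NormalDist, fmean
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def two_sided_p(r: float, n: int) -> float:
    """Two-tailed p-value for H0: rho = 0 using t = r*sqrt((n-2)/(1-r^2)).
    Normal approximation to the t distribution with n-2 df (large-n assumption)."""
    t = r * sqrt((n - 2) / (1.0 - r * r))
    return 2.0 * (1.0 - NormalDist().cdf(abs(t)))


# Correlations and sample sizes taken from Table 4.
for label, r, n in [("Quantitative Reasoning score", 0.250, 379),
                    ("Saber 11 presentation time", -0.114, 361),
                    ("Type of school", 0.023, 379)]:
    print(f"{label}: p = {two_sided_p(r, n):.3f}")
```

With the correlations and sample sizes of Table 4, the approximation gives p-values close to those reported by SPSS (e.g., about 0.03 for the Saber 11 presentation time).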

Table 4 Correlation matrix of the Critical Reading competence.

| Variable | Pearson correlation with Critical Reading score | Sig. (two-tailed) | N |
|---|---|---|---|
| Critical Reading score | 1 | | 379 |
| Quantitative Reasoning score | 0.250 | < 0.001 | 379 |
| Cumulative average | 0.234 | < 0.001 | 379 |
| Saber 11 Mathematics test | 0.208 | < 0.001 | 361 |
| Saber 11 Language test | 0.260 | < 0.001 | 361 |
| Saber 11 presentation time | -0.114 | 0.030 | 361 |
| Type of school graduated from (public or private) | 0.023 | 0.649 | 379 |
| Socioeconomic status | -0.003 | 0.949 | 379 |
| Who the student currently lives with | -0.014 | 0.789 | 379 |
| Family monthly income | 0.020 | 0.695 | 379 |
| Has a source of income from work | -0.160 | 0.002 | 379 |
| Father's education | 0.096 | 0.062 | 379 |
| Mother's education | 0.087 | 0.092 | 379 |
| Parents separated | 0.074 | 0.148 | 379 |

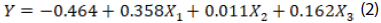

The results of the Critical Reading test are associated with the cumulative average, the Saber 11 Language and Mathematics tests, the time taken to complete the Saber 11 test, and the presence of employment income. Based on this analysis, a multiple regression model was fitted using SPSS software; the resulting model is shown in Equation (2).

In Equation (2), Y is the Critical Reading score, x1 is the cumulative average, x2 is the Saber 11 Language test score, and x3 is the Quantitative Reasoning test score. The cumulative average has the largest coefficient: each one-unit increase is associated with an increase of about 0.358 points in the Critical Reading score. The coefficient of the Quantitative Reasoning test score is 0.162, whereas that of the Saber 11 Language test score is only 0.011. According to the model, therefore, the Critical Reading score is influenced mostly by the students' cumulative average.

3.4. Verification of assumptions of the multiple linear regression model for the critical reading test

Linearity

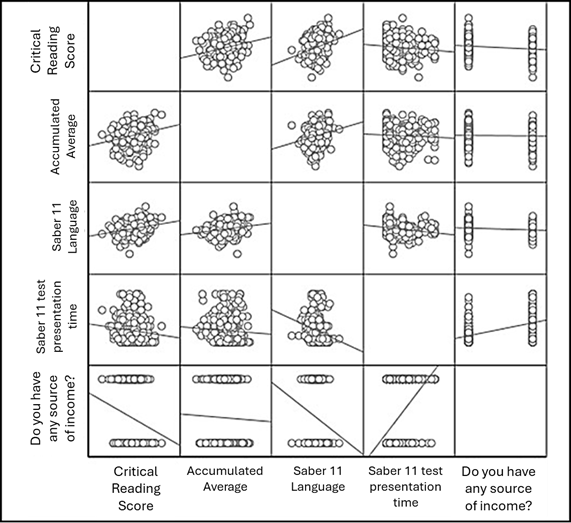

The dependent variable must have a linear relationship with the independent variables. This assumption is checked by observing whether the data follow a linear trend in the scatterplot (Montgomery, 2020). The dependent variable, Critical Reading, shows a linear trend with the cumulative average, the Saber 11 Language and Mathematics tests, the time taken to complete the Saber 11 test, and the presence of employment income. Figure 5 presents the results of this check.

Normality

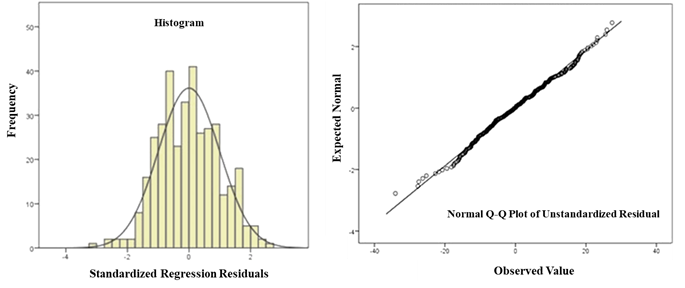

The residuals (model errors) must follow a normal distribution (Montgomery, 2020), so that small residuals are frequent and large residuals are rare, distributed symmetrically around zero. The normality of the residuals is assessed using Q-Q or P-P plots, which show how closely the residuals follow the reference line expected under normality, or using the Kolmogorov-Smirnov test, for which the p-value should be greater than 0.05. Figure 6 presents the results of the normality test. The residuals are approximately normally distributed, as the points closely follow the reference line. In addition, the Kolmogorov-Smirnov test yields a significance of 0.051 and the Shapiro-Wilk test 0.096. Both values exceed 0.05, indicating that the residuals do not deviate significantly from a normal distribution.
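To show what the Kolmogorov-Smirnov test measures, the sketch below computes its statistic D: the largest gap between the empirical distribution of the residuals and a normal distribution with the residuals' own mean and standard deviation. It computes only D, not the p-value; when the normal parameters are estimated from the data, SPSS's Explore procedure reports the test with a Lilliefors correction, which this sketch does not implement. The function name and the synthetic samples are illustrative.

```python
from math import log
from statistics import NormalDist, fmean, stdev
from typing import Sequence


def ks_statistic_normal(residuals: Sequence[float]) -> float:
    """Kolmogorov-Smirnov distance D between the empirical CDF of the residuals and a
    normal CDF whose mean and standard deviation are estimated from the same residuals."""
    xs = sorted(residuals)
    n = len(xs)
    ref = NormalDist(fmean(xs), stdev(xs))
    d = 0.0
    for i, x in enumerate(xs):
        f = ref.cdf(x)
        # the empirical CDF jumps from i/n to (i+1)/n at x, so check both sides
        d = max(d, (i + 1) / n - f, f - i / n)
    return d


n = 200
near_normal = [NormalDist().inv_cdf((i + 0.5) / n) for i in range(n)]  # normal quantiles
skewed = [-log(1.0 - (i + 0.5) / n) for i in range(n)]                 # exponential quantiles
print(round(ks_statistic_normal(near_normal), 3), round(ks_statistic_normal(skewed), 3))
```

A small D (as for the normal quantiles) means the residuals track the normal curve closely; the right-skewed sample produces a much larger D.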

Independence

The residuals (errors) must be independent. Independence is assessed with the Durbin-Watson statistic, which ranges from 0 to 4; values close to 2 indicate no first-order autocorrelation of the residuals. For the Critical Reading model, the Durbin-Watson statistic is 2.055, indicating no evidence of autocorrelation. The multiple linear regression model for Critical Reading has a multiple correlation coefficient (R) of 0.346, a coefficient of determination (R²) of 0.120, an adjusted R² of 0.112, and a standard error of the estimate of 0.53449. These results indicate that the model explains approximately 12% of the variability in Critical Reading scores and that the residuals show no significant autocorrelation. The low values of these statistics are consistent with those reported by Aghajani and Gholamrezapour (2019) when analyzing critical thinking skills, critical reading, and foreign language reading.
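The Durbin-Watson statistic and the adjusted R² can be computed directly from their definitions, as in the minimal sketch below. As an arithmetic check, assuming n = 361 (the N of the Saber 11 variables in Table 4) and k = 3 predictors (the three variables of Equation (2)), the reported R = 0.346 reproduces the reported adjusted R² of 0.112. The function names are illustrative.

```python
from typing import Sequence


def durbin_watson(residuals: Sequence[float]) -> float:
    """DW = sum_t (e_t - e_{t-1})^2 / sum_t e_t^2. It ranges from 0 to 4 and is about 2
    without first-order autocorrelation. It depends on the order of the observations."""
    e = list(residuals)
    num = sum((b - a) ** 2 for a, b in zip(e, e[1:]))
    den = sum(v * v for v in e)
    return num / den


def adjusted_r2(r2: float, n: int, k: int) -> float:
    """Adjusted R^2 for n observations and k predictors (intercept not counted)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


print(durbin_watson([1.0, -1.0, 1.0, -1.0]))      # 3.0 (alternating signs: negative autocorrelation)
print(round(adjusted_r2(0.346 ** 2, n=361, k=3), 3))  # 0.112
```

Because the students are not a time series, the Durbin-Watson value depends on the arbitrary order in which records appear in the data file, so it should be read as a check for order-related dependence rather than temporal autocorrelation.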

Collinearity

There should be no collinearity between the independent variables, meaning there must be no linear dependence between them. If such a relationship exists, it is referred to as multicollinearity. Collinearity is assessed using the Variance Inflation Factor (VIF), which should be close to 1 to indicate the absence of multicollinearity. The variables in the model do not show linear dependence, and multicollinearity is not present, as the VIF values are close to 1. The model reveals the coefficients of the most significant predictor variables. The Saber 11 Language competence has a significant positive coefficient (B = 0.248, p < 0.001, VIF = 1.063), suggesting that higher language scores are associated with higher values of the dependent variable. Similarly, the Cumulative Career Average shows a significant positive coefficient (B = 7.609, p < 0.001, VIF = 1.040), indicating that a higher cumulative average is linked to an increase in the dependent variable.
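The VIF of predictor j equals 1/(1 - R_j²), where R_j² is the R² obtained by regressing that predictor on the others; a VIF of 1.063, for example, corresponds to R_j² = 1 - 1/1.063 ≈ 0.059. The sketch below uses the equivalent identity that the VIFs are the diagonal elements of the inverse of the predictors' correlation matrix. The function names and toy columns are illustrative.

```python
from math import sqrt
from statistics import fmean
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def invert(m: List[List[float]]) -> List[List[float]]:
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vif(columns: Sequence[Sequence[float]]) -> List[float]:
    """VIF_j = 1 / (1 - R_j^2), read off the diagonal of the inverse correlation matrix."""
    k = len(columns)
    corr = [[pearson_r(columns[i], columns[j]) for j in range(k)] for i in range(k)]
    inv = invert(corr)
    return [inv[j][j] for j in range(k)]


# Two predictors with correlation 0.8, so VIF = 1 / (1 - 0.64) for both.
print([round(v, 3) for v in vif([[1, 2, 3, 4, 5], [2, 1, 4, 3, 5]])])  # [2.778, 2.778]
```

With only two predictors, R_j² is simply the squared correlation between them; with more predictors, the inverse-matrix identity avoids fitting one auxiliary regression per variable.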

Homoscedasticity

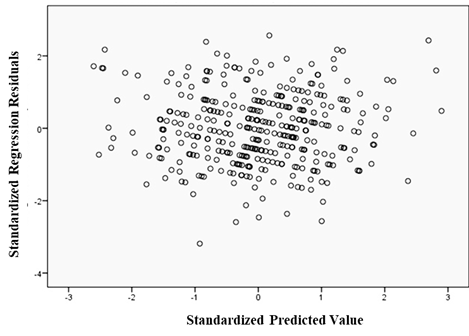

Homoscedasticity means that the residuals have constant variance across the range of predicted values. This condition is examined using a scatter plot of the standardized predicted values (ZPRED) against the standardized residuals (ZRESID): under homoscedasticity, the spread of the residuals is similar across the range of predicted values, and the plot shows no clear pattern, such as a funnel shape. Figure 7 illustrates this plot for the Critical Reading score. The spread of the standardized residuals is consistent, with no discernible pattern in their distribution.
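The quantities behind this plot can be sketched as follows, assuming (our assumption about the SPSS definitions) that ZPRED is the predicted value standardized to mean 0 and standard deviation 1, and ZRESID is the residual divided by the standard error of the estimate. As a numerical complement to the visual check, the sketch also compares the residual variance in the upper and lower halves of the predicted values. This variance ratio is a Goldfeld-Quandt-style heuristic that we substitute for illustration, not a formal test used in the study. Function names and toy data are hypothetical.

```python
from statistics import fmean, stdev, variance
from typing import List, Sequence, Tuple


def zpred_zresid(fitted: Sequence[float], residuals: Sequence[float], k: int) -> Tuple[List[float], List[float]]:
    """Standardized predictions (mean 0, sd 1) and residuals divided by the standard
    error of the estimate, sqrt(SSE / (n - k - 1)), for a model with k predictors."""
    n = len(fitted)
    m, s = fmean(fitted), stdev(fitted)
    zpred = [(f - m) / s for f in fitted]
    see = (sum(e * e for e in residuals) / (n - k - 1)) ** 0.5
    zresid = [e / see for e in residuals]
    return zpred, zresid


def spread_ratio(zpred: Sequence[float], zresid: Sequence[float]) -> float:
    """Residual variance for the upper half of predictions over that of the lower half;
    a ratio far from 1 hints at a funnel-shaped (heteroscedastic) plot."""
    pairs = sorted(zip(zpred, zresid))
    half = len(pairs) // 2
    low = [r for _, r in pairs[:half]]
    high = [r for _, r in pairs[-half:]]
    return variance(high) / variance(low)


fitted = [float(i) for i in range(20)]
constant = [(-1) ** i for i in range(20)]          # same spread everywhere
funnel = [(-1) ** i * (i + 1) for i in range(20)]  # spread grows with the prediction
for resid in (constant, funnel):
    zp, zr = zpred_zresid(fitted, resid, k=1)
    print(round(spread_ratio(zp, zr), 2))  # 1.0, then 6.49
```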

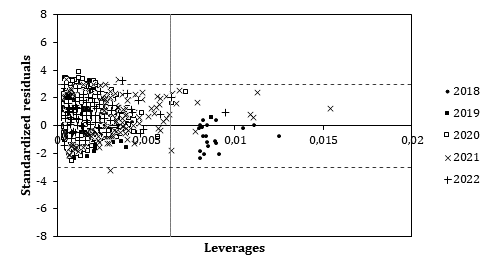

Figure 8 shows the Williams plot for the model described in Equation (2) and its predictive capability from 2018 to 2022. The Williams plot displays the leverage of each data point against its standardized residual in a regression model. The leverage values, shown on the x-axis, reflect the influence of each data point on the regression coefficients; high-leverage points can significantly affect the model. The standardized residuals are shown on the y-axis, with values outside the ±2 range suggesting potential outliers. In 2018 and 2019, most data points cluster around zero, with few high-leverage values. In 2020, some data points show high leverage, although their residuals remain within acceptable limits. In 2021 and 2022, both residuals and leverage values show more variability. Several outliers appear, particularly in the last three years. Overall, only 1.66% of the data points are outliers, indicating that most of the data fall within the predictive capability of the model.
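The leverage on the x-axis of a Williams plot is the diagonal of the hat matrix H = X(X'X)⁻¹X'. The minimal sketch below computes it and flags points that exceed either the ±2 residual limit used in the text or a warning leverage h* = 3p/n (p = number of model parameters, n = number of observations). That h* value is a common convention for Williams plots that we assume here, since the text does not state the leverage threshold. Function names and toy data are hypothetical.

```python
from typing import List, Sequence, Tuple


def invert(m: List[List[float]]) -> List[List[float]]:
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def leverages(columns: Sequence[Sequence[float]]) -> List[float]:
    """Diagonal of the hat matrix X (X'X)^-1 X', with an intercept column in X."""
    n = len(columns[0])
    rows = [[1.0] + [float(c[i]) for c in columns] for i in range(n)]
    p = len(rows[0])
    xtx_inv = invert([[sum(r[a] * r[b] for r in rows) for b in range(p)] for a in range(p)])
    return [sum(r[a] * xtx_inv[a][b] * r[b] for a in range(p) for b in range(p)) for r in rows]


def williams_flags(columns: Sequence[Sequence[float]], std_resid: Sequence[float],
                   resid_limit: float = 2.0) -> Tuple[List[float], float, List[int]]:
    """Indices with leverage above h* = 3p/n (assumed convention) or |std. residual| > limit."""
    h = leverages(columns)
    n, p = len(h), len(columns) + 1
    h_star = 3.0 * p / n
    flagged = [i for i, (hi, ri) in enumerate(zip(h, std_resid))
               if hi > h_star or abs(ri) > resid_limit]
    return h, h_star, flagged


x = list(range(1, 20)) + [60]               # one extreme predictor value (index 19)
std_resid = [0.1] * 10 + [2.5] + [0.1] * 9  # one large standardized residual (index 10)
h, h_star, flagged = williams_flags([x], std_resid)
print(round(h[-1], 3), h_star, flagged)     # 0.816 0.3 [10, 19]
```

The leverages always sum to p (here 2), which is a handy sanity check; the share of flagged points over all points corresponds to the outlier percentage discussed above.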

3.5. Discussion

The analysis of institutional added value revealed that the university's contribution to developing critical reading competence needs improvement. Most students did not show significant improvements in critical reading scores between the Saber 11 test and the institutional exam, indicating a need for stronger support and the creation of improvement plans. Overall, critical reading competence does not reach acceptable levels, with an average score of 2.2 on the institutional test (on a scale from 0 to 5). This highlights the urgent need to improve educational strategies and teaching practices to strengthen this competence, especially in the early stages. Further research on critical reading and its development is recommended to ensure comprehensive student training. The quadrant analysis revealed that 35% of students exceeded the average on both tests, while 25.2% scored below average on both. Effective pedagogical strategies and targeted teacher training in critical reading are essential for fostering critical thinking and helping students become responsible citizens who are less susceptible to misinformation. Measuring critical competence presents challenges due to linguistic and cultural differences. Jiménez-Pérez (2023) recommended improving teaching practices, conducting more studies in this research area, and developing these skills early in students' education; that study used a qualitative methodology with seven undergraduate students.

Critical thinking and reading competence were evaluated using argumentative texts, interviews, and written responses. The results showed improvements in students with higher English proficiency, who demonstrated a better ability to draw conclusions and express personal opinions. In contrast, students with lower English proficiency faced significant challenges in identifying the author's conclusions and purposes.

The study concludes that it is crucial to teach critical reading skills and suggests that teachers focus on students with sufficient knowledge of English and use interesting topics to encourage critical thinking and literacy. Arifin (2020) reaches a similar conclusion. Le et al. (2024) show the difficulties students face in applying analysis, synthesis, and evaluation strategies, and discuss the need for pedagogical approaches that improve language skills and foster higher-order thinking through focused modules and workshops, such as rhetorical analysis and debates.

On the other hand, Untari et al. (2023) advocate fostering an explicit understanding of texts, integrating real-world experiences with reading materials, and critically evaluating content. They also emphasize the importance of developing critical reading skills from an early age to promote deeper understanding and critical analysis of information. Similarly, Paakkari et al. (2024) highlight the role of parental support and the family environment in enhancing critical reading skills. In contrast, the statistical modeling in this study shows that sociodemographic variables such as the type of school attended, socioeconomic status, and family income are not significantly correlated with critical reading scores. This suggests that students' socioeconomic backgrounds do not strongly influence their critical reading performance in this sample. However, students' cumulative average and quantitative reasoning scores are significantly associated with their critical reading performance at the 95% confidence level, indicating a direct relationship.

Based on the above, a linear model is proposed to predict critical reading scores. This model serves as a useful tool for identifying students who may require specific educational interventions. The developed regression model will enable comparisons between projected and actual data, helping to assess whether critical reading competence has improved or declined. By analyzing these results, educational and intervention strategies can be adjusted to ensure a more effective approach to developing critical reading skills.

4. Conclusions

The results of statistical modeling suggest that sociodemographic variables do not significantly influence critical reading performance. However, academic factors such as cumulative career average and quantitative reasoning skills directly correlate with critical reading performance. This highlights the importance of focusing educational interventions on strengthening these academic competencies to improve students' critical reading skills.

The analysis of the institutional added value at Universidad Católica Luis Amigó reveals that the development of critical reading competency among university students has not reached adequate levels, as evidenced by low scores on institutional tests. This underscores the urgency of implementing more effective educational strategies, developing improvement plans, and continuously training teachers to ensure comprehensive student training that prepares students to face information challenges and fosters critical thinking.