1. Introduction

The BDI model, developed by Bratman [1], is possibly the best-known model of practical reasoning agents. According to Bratman, the rational behavior of humans cannot be analyzed just in terms of beliefs and desires; the notion of intention is needed. Thus, an intention is considered more than a mere desire; it is something the agent is committed to. The process through which a desire becomes an intention is named intention formation and has two stages in BDI models: (i) desires, which are potential influences of an action, and (ii) intentions, which are desires the agent is committed to and that are achieved through the execution of a certain plan.

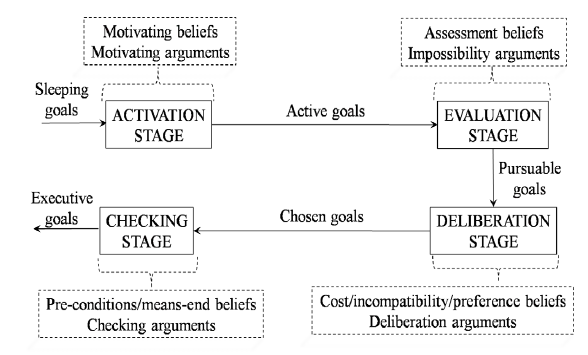

An extended model for intention formation has been proposed by Castelfranchi and Paglieri [2]. They propose a four-stage goal processing model, where the stages are: (i) activation, (ii) evaluation, (iii) deliberation, and (iv) checking. According to them, this extended model may have relevant consequences for the analysis of what an intention is and may better explain how an intention becomes what it is. This is especially useful when the agents need to explain and justify why a given desire became an intention and why another one did not. Consider a natural disaster scenario, where a set of robot agents wander an area in search of people needing help. When a person is seriously injured, he/she must be taken to the hospital; otherwise, he/she must be sent to a shelter. After the rescue work, the robots can be asked to explain why a wounded person was sent to the shelter instead of being taken to the hospital, or why a robot decided to take person x to the hospital first, instead of another person y. This scenario is used to illustrate our proposal throughout the article.

Unlike Bratman's theory, where desires and intentions are different mental states, Castelfranchi and Paglieri argue that intentions share many of the properties that desires have; thus, in their approach both desires and intentions are considered as goals at different stages of processing. Consequently, four different statuses for a goal are defined: (i) active, (ii) pursuable, (iii) chosen, and (iv) executive.

One key problem in BDI architectures is the relation and interplay between beliefs and goals. The approach proposed by Castelfranchi and Paglieri clarifies these processing and structural relationships, making explicit the function of beliefs in goal processing as diachronic and synchronic supports. This kind of support, that beliefs give to goals, can be easily provided using argumentation techniques, since arguments can act as filters in each goal processing stage (diachronic support) and can be saved for future analysis (synchronic support). Besides, an argument can put together both the supporting beliefs and the supported goal in just one structure, hence facilitating future analysis.

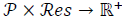

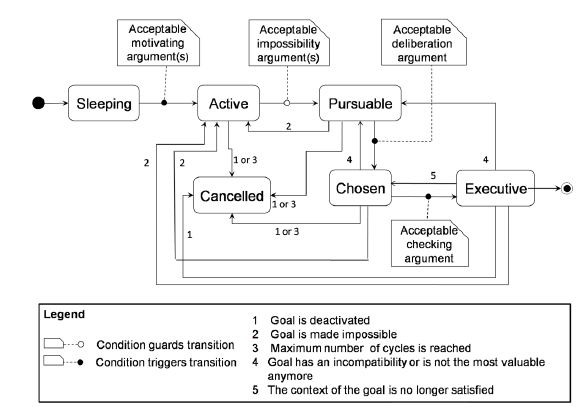

To the best of our knowledge, this extended model has not been formalized yet. Therefore, the aim of this work is to propose an argument-based computational formalization, where arguments act as filters between one stage and another and guide the transition of goals. Four types of arguments are defined, one for each stage of the goal processing cycle. On the one hand, arguments in the activation, deliberation and checking stages act as supports for a goal to pass to the next stage; thus, if there is at least one acceptable supporting argument for a given goal, it will become active, chosen or executive, respectively. On the other hand, arguments in the evaluation stage act as attacks, preventing a goal from passing to the next stage. Thus, if there is at least one acceptable attacking argument for a given goal, it will not become pursuable. Fig. 1 shows a general schema of the goal processing stages and the status of goals after passing each stage; it also shows the arguments that support or attack the passage of a goal to the next stage. In our approach, we also consider a status that precedes the active one, called sleeping.
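The filtering role of arguments described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the names `Status`, `SUPPORT_STAGES` and `next_status` are hypothetical, and the number of acceptable arguments is taken as given rather than computed by an argumentation procedure.

```python
from enum import Enum


class Status(Enum):
    SLEEPING = 0
    ACTIVE = 1
    PURSUABLE = 2
    CHOSEN = 3
    EXECUTIVE = 4


# Stages in which an acceptable argument SUPPORTS the transition.
SUPPORT_STAGES = {
    Status.SLEEPING: Status.ACTIVE,     # activation stage
    Status.PURSUABLE: Status.CHOSEN,    # deliberation stage
    Status.CHOSEN: Status.EXECUTIVE,    # checking stage
}


def next_status(status, acceptable_args):
    """Return the status of a goal after the stage that follows `status`.

    `acceptable_args` is the number of acceptable arguments built for the
    goal in that stage (supporting ones, or attacking ones in evaluation).
    """
    if status in SUPPORT_STAGES:
        # At least one acceptable supporting argument lets the goal pass.
        return SUPPORT_STAGES[status] if acceptable_args >= 1 else status
    if status is Status.ACTIVE:
        # Evaluation stage: any acceptable attacking argument blocks the goal.
        return Status.PURSUABLE if acceptable_args == 0 else status
    return status  # executive goals have no further stage
```

For instance, `next_status(Status.ACTIVE, 1)` leaves the goal active, because one acceptable attacking argument is enough to stop it from becoming pursuable.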

This extended model is also important because it may give agents the ability to make justified and consistent decisions, and to choose actions in the same manner. Finally, we use argumentation since it allows assessing the reasons that back up a conclusion. In this case, arguments provide reasons for a goal to change its status and to progress during the process of intention formation, and this set of reasons can also be stored for future analysis. Considering that one of the aims of Castelfranchi and Paglieri is to better explain how an intention emerges, argumentation is a well-suited approach for doing this.

This paper is organized as follows. Section 2 presents an overview of the goal processing model of Castelfranchi and Paglieri. The building blocks of the intention formation process are presented in Section 3. Section 4 presents the arguments and the argumentation procedure. Section 5 defines two frameworks, one containing particular information about the intention formation process for each goal and a general one with information about the entire process. Section 6 is devoted to the application of our proposal. In Section 7, we show how our proposal complies with the required properties in the theoretical model and we suggest other possible applications in Section 8. The main related works are presented in Section 9, and finally Section 10 is devoted to conclusions and future work.

2. Belief-based goal processing model

This section presents in summary form the four stages and the types of supporting beliefs defined in the goal processing model proposed by Castelfranchi and Paglieri.

a) In the activation stage, goals are activated by means of motivating beliefs. When a motivating belief is satisfied, the supported goal becomes active. For example, when a robot has the belief that there is a seriously wounded person, he may activate the goal of taking that person to the hospital.

b) In the evaluation stage, the pursuability of active goals is evaluated. This evaluation is made by using assessment beliefs, which represent impediments for pursuing a goal. For example, a robot knows that he can carry at most 80 kg, and a wounded person weighs 90 kg. This fact makes it impossible for the robot to take that person to the hospital. When there are no assessment beliefs for a certain goal, it becomes a pursuable goal.

c) The deliberation stage acts as a filter on the basis of incompatibilities and preferences among pursuable goals. Goals that pass this stage are called chosen goals. This stage is based on the following beliefs:

Cost beliefs are concerned with the costs an agent expects to sustain as a consequence of pursuing a certain goal, which involves the use of resources. These are internal resources of the agent rather than resources of the environment. For example, to take a wounded person to the hospital, the agent needs a certain amount of energy.

Incompatibility beliefs are concerned with the conflicts among goals that lead the agent to choose among them. For example, a robot has two goals that need a certain amount of energy each. If the robot does not have enough energy to achieve both goals, a conflict between them arises.

Preference beliefs are applied to incompatible goals with the aim of establishing a precedence order that determines which goal will become chosen. For instance, the robot could use a preference belief for choosing between the incompatible goals of the previous example.

d) In the checking stage, the aim is to evaluate whether the agent knows and is capable of performing the required actions to achieve a chosen goal; in other words, whether the agent has a plan and is capable of executing it. Goals that pass this stage are called executive goals. This stage is based on the following beliefs:

Precondition beliefs can be divided into two sub-classes: (i) incompetence beliefs, which are concerned with both the basic know-how and competence, and the sufficient skills and abilities needed to reach the goal, and (ii) lack of conditions beliefs, which are concerned with external conditions, opportunities, and resources.

Means-end beliefs: when the agent is competent to achieve a certain chosen goal, he must evaluate whether he has the necessary instruments for executing a plan.

3. Goals, beliefs and rules

Hereafter, let ℒ be a second order logical language which will be used to represent the goals, beliefs and rules of an agent. The symbols ∧, ∨, →, and ∼ denote the logical connectives conjunction, disjunction, implication, and negation, respectively, and ⊢ stands for inference. We use 𝜑, 𝜙, and 𝜓 to denote atomic formulas of ℒ.

In this work, a goal is represented by using an atomic formula of ℒ. Along the intention formation process, it can be in one of the following states: active, pursuable, chosen or executive. In any of these states, a goal is represented by a grounded formula. However, before a goal becomes active, it has the form of a formula with variables; in this case, we call it a sleeping goal.

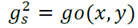

Definition 1. A sleeping goal is a predicate in ℒ of the form Goal_Name($x_1, \dots, x_i, \dots, x_n$). Let $\mathcal{G}_s$ be the set of all sleeping goals.

When a goal is activated, it means that its variables have been unified with a given set of values. The set of these goals has the following structure:  such that

such that  is the set of active goals,

is the set of active goals,  is the set of pursuable goals, is the set of chosen goals,

is the set of pursuable goals, is the set of chosen goals,  is the set of executive goals, and

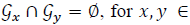

is the set of executive goals, and  is the set of cancelled goals. Notice that besides the possible four status of a goal, we consider cancelled goals. The reasons for a goal to be cancelled are explained with more detail in Section 5. Finally, the following condition must hold:

is the set of cancelled goals. Notice that besides the possible four status of a goal, we consider cancelled goals. The reasons for a goal to be cancelled are explained with more detail in Section 5. Finally, the following condition must hold:  {a,p,c,canc} , for with x ≠ y.

{a,p,c,canc} , for with x ≠ y.

In order to evaluate the worth of each goal for the agent, we use the function IMPORTANCE, which maps each goal to a value in [0,1] that represents its importance.
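As an illustration, the goal sets and the importance function could be stored as follows. This is a minimal sketch and not part of the formal model: the class `GoalStore`, its method names, and the example goal `take_hospital(man_32)` are hypothetical. Moving a goal removes it from every other set, so the status sets stay pairwise disjoint.

```python
from dataclasses import dataclass, field

# Status labels: active, pursuable, chosen, executive, cancelled.
STATUSES = ("a", "p", "c", "e", "canc")


@dataclass
class GoalStore:
    sets: dict = field(default_factory=lambda: {s: set() for s in STATUSES})
    importance: dict = field(default_factory=dict)

    def add(self, goal, status, importance):
        """Register a goal with its importance, a value in [0, 1]."""
        if not 0.0 <= importance <= 1.0:
            raise ValueError("importance must lie in [0, 1]")
        self.importance[goal] = importance
        self.move(goal, status)

    def move(self, goal, status):
        """Place the goal in exactly one status set."""
        for members in self.sets.values():
            members.discard(goal)
        self.sets[status].add(goal)

    def is_disjoint(self):
        """Check that no goal appears in two status sets."""
        total = sum(len(m) for m in self.sets.values())
        return total == len(set().union(*self.sets.values()))


store = GoalStore()
store.add("take_hospital(man_32)", "a", 0.8)
store.move("take_hospital(man_32)", "p")
```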

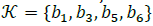

Like goals, beliefs are represented by formulas of ℒ and are saved in the knowledge base 𝒦. This structure stores all the kinds of beliefs used during intention formation.

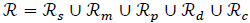

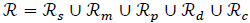

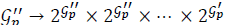

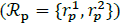

On the other hand, the set of rules has the following structure: $\mathcal{R} = \mathcal{R}_s \cup \mathcal{R}_m \cup \mathcal{R}_p \cup \mathcal{R}_d \cup \mathcal{R}_c$ such that $\mathcal{R}_s$ is the set of standard rules (i.e. rules that are made up of beliefs in both the premise and the conclusion), $\mathcal{R}_m$ is the set of motivating rules, $\mathcal{R}_p$ is the set of impossibility rules, $\mathcal{R}_d$ is the set of deliberation rules, and $\mathcal{R}_c$ is the set of checking rules. Each of the last four sets of rules corresponds to one stage of the intention formation process. The following condition must hold: $\mathcal{R}_x \cap \mathcal{R}_y = \emptyset$, for $x, y \in \{s, m, p, d, c\}$ with $x \neq y$.

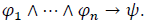

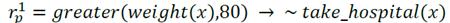

Definition 2. A motivating rule $r_m$ is an expression of the form $\varphi_1 \wedge \dots \wedge \varphi_n \rightarrow \psi$. The atomic formulas of the premise have to be unified with beliefs of 𝒦, whereas $\psi$ represents a goal. Every $\psi$ is considered a sleeping goal and hence belongs to the set of sleeping goals $\mathcal{G}_s$.

Definition 3. An impossibility rule $r_p$ is an expression of the form $\varphi_1 \wedge \dots \wedge \varphi_n \rightarrow \sim\psi$. What was said in the previous definition also holds for it.

It is important to highlight that if there is an impossibility rule for a certain goal 𝜓, there must be a motivating rule for it as well. This is because impossibility rules are part of the second stage; hence, they can only block an already active goal. Therefore, a motivating rule that activates the goal is needed first. However, the converse is not strictly necessary, as impossibility rules are not required for a goal to pass to the next stage.

Standard, motivating and impossibility rules are designed and entered by the programmer of the agent, and their content depends on the application domain. In contrast, deliberation and checking rules are pre-defined, and no new rules of these kinds can be defined by the user. Before presenting the deliberation and the checking rules, let us define the beliefs that make up these kinds of rules.

Unlike the beliefs that support the first two stages, the beliefs for the deliberation and checking stages express something about the goals; for instance, one of these beliefs expresses whether there are plans for achieving a certain goal. Both stages are divided into two parts. In the case of the deliberation stage, the first part involves the evaluation of incompatibilities among pursuable goals (we do not go into detail about the kinds of incompatibilities and how they can be identified because it is a broad topic and not the main focus of this work). The second part is about determining the most valuable goals from the set of incompatible ones. Regarding the checking stage, the first part is about the agent’s know-how, that is, whether or not the agent has at least one plan for achieving a goal, and the second part involves determining whether the context of these plans is satisfied.

Some details about these two stages need to be explained. Although the checking stage occurs after the deliberation one, the plans associated with each goal are taken into account in both stages. In the deliberation stage, the plans are used to determine whether incompatibilities arise (see [18] for details about how incompatibilities are detected through plans), and in the checking stage it has to be verified that there is at least one plan for a goal to become executive. Thus, the existence of plans for a goal has to be verified in the deliberation stage, since when there is no plan for a goal, it is not possible to determine whether or not it has incompatibilities with other goals. Hence, the belief that expresses the existence of plans is generated in the deliberation stage, but it is used to generate the respective argument in the checking stage. Note that when there is no plan for a goal, it will not pass the deliberation stage.

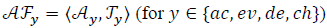

Thus, an agent has a set of plans, where each plan encodes a plan-body program P for handling a goal g when the context condition of the plan is satisfied. Besides, there is a resource requirements list $R_{req}$, which is composed of pairs (res, n), where n > 0 represents the amount of resource res necessary to perform the plan. Let us also define a resource summary Res, which contains the information about the available amount of resources of the agent; it has the same structure as $R_{req}$. Let NEED_RES be a function that returns the amount of a resource that a given plan needs.
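The plan structure and NEED_RES can be sketched as follows. The field names of `Plan`, the example plan, and the choice of returning 0 for a resource that is not listed in the requirements are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    goal: str              # goal g handled by the plan
    context: frozenset     # context condition, here a set of beliefs
    body: tuple            # plan-body program P, here a sequence of actions
    r_req: tuple = ()      # resource requirements: pairs (res, n) with n > 0


def need_res(plan, res):
    """Return the amount of resource `res` that `plan` needs."""
    for name, amount in plan.r_req:
        if name == res:
            return amount
    return 0  # assumption: an unlisted resource is not needed


plan = Plan(
    goal="take_hospital(man_32)",
    context=frozenset({"be_operative(me)"}),
    body=("go_to(man_32)", "carry(man_32, hospital)"),
    r_req=(("energy", 40),),
)
```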

Now, let us begin with the evaluation of the competence of the agent, the following function is in charge of that:

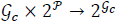

EVAL_COMPET:  , it takes the set of pursuable goals and returns those ones that have at least one plan associated with it along with such plan(s). For all these goals, a competence belief has to be generated.

, it takes the set of pursuable goals and returns those ones that have at least one plan associated with it along with such plan(s). For all these goals, a competence belief has to be generated.

Definition 4. A competence belief is an expression of the form has_plans_for(g).
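A possible reading of EVAL_COMPET and of the generation of competence beliefs is sketched below. Representing plans as (goal, plan name) pairs and beliefs as strings is an assumption of this sketch, as are the example goals and plan names.

```python
def eval_compet(pursuable_goals, plans):
    """Map each pursuable goal with at least one plan to its plan(s).

    `plans` is an iterable of (goal, plan_name) pairs.
    """
    result = {}
    for goal, plan_name in plans:
        if goal in pursuable_goals:
            result.setdefault(goal, []).append(plan_name)
    return result


def competence_beliefs(compet):
    """Generate one competence belief per goal returned by eval_compet."""
    return {f"has_plans_for({goal})" for goal in compet}


pursuable = {"take_hospital(man_32)", "send_shelter(man_17)"}
plans = [("take_hospital(man_32)", "plan_1"),
         ("take_hospital(man_32)", "plan_2"),
         ("go_base(me)", "plan_3")]
compet = eval_compet(pursuable, plans)
```

Here `send_shelter(man_17)` has no plan, so no competence belief is generated for it.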

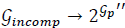

The next step is to evaluate the incompatibility among those goals that have at least one plan that allows the agent to achieve them. The following function is in charge of determining the set of goals that have no conflicts among them: NINCOMP_GOALS takes as input the set of goals with at least one associated plan and returns a set of compatible goals. A non-incompatibility belief has to be generated for each element of the returned set.

Definition 5. A non-incompatibility belief is an expression of the form ∼has_incompatibility(g).

So far we know the set of goals without incompatibilities, which directly become chosen ones. It follows that the goals with at least one associated plan that are not returned by NINCOMP_GOALS are the goals that have some kind of incompatibility; let us call them the set of incompatible goals. Now, it is necessary to divide this set into subsets according to the conflicts that exist among the goals. For instance, a subset of goals $\{g_6, g_8, g_9\}$ may be incompatible due to resources and another subset $\{g_{10}, g_{12}\}$ may have terminal incompatibility. It could also be the case that the same goal belongs to more than one subset. The following function is in charge of dividing the set of incompatible goals into subsets: EVAL_INCOMP takes as input the set of incompatible goals and returns subsets of it, taking into account the different conflicts.

Depending on the importance of the incompatible goals, some of them will pass to the next stage and become chosen. The function in charge of determining such a set of goals is EVAL_VALUE, which takes as input the set returned by EVAL_INCOMP. For all the goals returned by EVAL_VALUE, the following belief has to be created:

Definition 6. A value belief is an expression of the form most_valuable(g). It means that g is the most important goal of a set of incompatible ones.
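The three deliberation functions can be illustrated with the sketch below. It assumes that the conflicts have already been detected and are given as a list of sets of mutually incompatible goals, since conflict detection is outside the scope of this work. It also assumes that the most valuable goal of each subset is the one with the highest importance, with ties broken alphabetically. The importance values are invented for the example.

```python
def nincomp_goals(goals, conflicts):
    """Return the goals that take part in no conflict."""
    conflicting = set().union(*conflicts)
    return {g for g in goals if g not in conflicting}


def eval_incomp(incompatible, conflicts):
    """Split the incompatible goals into subsets, one per conflict."""
    subsets = [c & incompatible for c in conflicts]
    return [s for s in subsets if len(s) > 1]


def eval_value(subsets, importance):
    """Return the most important goal of each subset of incompatible goals."""
    return {max(sorted(s), key=importance.get) for s in subsets}


goals = {"g1", "g6", "g8", "g9", "g10", "g12"}
conflicts = [{"g6", "g8", "g9"},   # e.g. incompatible due to resources
             {"g10", "g12"}]       # e.g. terminal incompatibility
importance = {"g1": 0.2, "g6": 0.5, "g8": 0.9, "g9": 0.3,
              "g10": 0.4, "g12": 0.7}

compatible = nincomp_goals(goals, conflicts)
subsets = eval_incomp(goals - compatible, conflicts)
most_valuable = eval_value(subsets, importance)
```

In this example, `g1` receives a non-incompatibility belief, while `g8` and `g12` receive value beliefs.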

So far we have defined the beliefs and functions that belong to the deliberation stage, including one belief that will be used in the checking stage. The next step is to evaluate whether the context part of the plan(s) returned by EVAL_COMPET is satisfied. The following function is in charge of this task: EVAL_CONTEXT takes as input the result of EVAL_COMPET and returns the set of chosen goals that have the context of at least one of their associated plans satisfied. For each of these goals, a condition belief has to be generated.

Definition 7. A condition belief is an expression of the form satisfied_context(g).

After a belief is generated, it has to be added to 𝒦. We want to point out that the agent may also generate, or perceive by communication, the negation of these beliefs. Due to lack of space we do not go into details; however, it is important to mention that the negation of these beliefs will serve for generating attacks on the arguments generated in these stages.

After having defined these beliefs, we can present the rules for deliberation and checking stages.

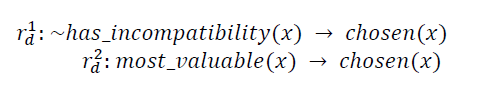

Definition 8. A deliberation rule $r_d$ is composed of a non-incompatibility belief or a value belief in the premise and a belief about the state of a goal g in the conclusion. For the rule to be triggered, g must be a pursuable goal. The set of deliberation rules consists of:

Definition 9. A checking rule $r_c$ is composed of a competence belief and a condition belief in the premise and a belief about the state of a goal g in the conclusion. For the rule to be triggered, g must be a chosen goal. The single set $\mathcal{R}_c$ consists of:
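To make the description of Definitions 8 and 9 concrete, the sketch below encodes one possible reading of these rules. The conclusions `chosen(g)` and `executive(g)` are hypothetical names for the "belief about the state of a goal"; the premises follow Definitions 4 to 7. Beliefs are represented as strings, with `~` standing for ∼.

```python
def deliberation_rule(goal, status, beliefs):
    """Fire when g is pursuable and has a non-incompatibility or value belief."""
    if status != "pursuable":
        return None
    if (f"~has_incompatibility({goal})" in beliefs
            or f"most_valuable({goal})" in beliefs):
        return f"chosen({goal})"       # hypothetical conclusion name
    return None


def checking_rule(goal, status, beliefs):
    """Fire when g is chosen and has both a competence and a condition belief."""
    if status != "chosen":
        return None
    if (f"has_plans_for({goal})" in beliefs
            and f"satisfied_context({goal})" in beliefs):
        return f"executive({goal})"    # hypothetical conclusion name
    return None


beliefs = {"most_valuable(g8)", "has_plans_for(g8)", "satisfied_context(g8)"}
```

With these beliefs, `deliberation_rule("g8", "pursuable", beliefs)` yields `chosen(g8)`, and `checking_rule("g8", "chosen", beliefs)` yields `executive(g8)`.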

4. Argumentation process

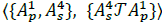

This section is devoted to the argumentation process that is carried out in each stage in order to determine which goals pass to the next stage. This argumentation process can be decomposed into the following steps: (i) constructing arguments, (ii) determining conflicts among arguments, and (iii) evaluating the acceptability of arguments.

4.1. Arguments

There are mainly two kinds of arguments: those that are specific to each stage and those that can be used in any of the four stages. We call the latter standard arguments, whereas the former take either the name of the supporting beliefs involved in the stage or the name of the stage.

Definition 10. A standard argument is a pair $A_s = \langle \mathcal{S}, \varphi \rangle$ such that (i) $\mathcal{S}$ is made up of beliefs of $\mathcal{K}$ and standard rules; (ii) $\mathcal{S} \vdash \varphi$; and (iii) $\mathcal{S}$ is minimal and consistent (hereafter, minimal means that there is no $\mathcal{S}' \subset \mathcal{S}$ such that $\mathcal{S}' \vdash \varphi$, and consistent means that it is not the case that $\mathcal{S} \vdash \varphi$ and $\mathcal{S} \vdash \sim\varphi$, for any $\varphi$ [3]).

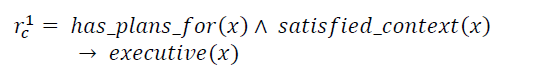

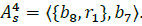

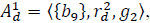

Definition 11. We use the letter m for denoting motivating arguments, p for impossibility arguments, d for deliberation arguments, and c for checking arguments. These are represented by a tuple such that:

4.2. Conflicts among arguments

The attacks among standard arguments are the well-known undercut and rebuttal [3]. An argument $A_s^1$ undercuts an argument $A_s^2$ when $A_s^1$ attacks part or all of the support of $A_s^2$, and an argument $A_s^1$ rebuts an argument $A_s^2$ when it attacks the conclusion of $A_s^2$. Formally:

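Definition 12. An undercut for a standard argument $\langle \mathcal{S}, \varphi \rangle$ is a standard argument $\langle \mathcal{S}', \sim(\phi_1 \wedge \dots \wedge \phi_n) \rangle$ where $\{\phi_1, \dots, \phi_n\} \subseteq \mathcal{S}$.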

Definition 13. A standard argument $\langle \mathcal{S}', \varphi' \rangle$ is a rebuttal for $\langle \mathcal{S}, \varphi \rangle$ iff $\varphi' \leftrightarrow \sim\varphi$ is a tautology.

An argument generated for a certain stage of the intention formation process can only be attacked by a standard argument, which may undercut its support.

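Definition 14. A mixed undercut for a motivating (impossibility, deliberation or checking) argument with support $\mathcal{S}$ is a standard argument $\langle \mathcal{S}', \sim(\phi_1 \wedge \dots \wedge \phi_n) \rangle$ where $\{\phi_1, \dots, \phi_n\} \subseteq \mathcal{S}$.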

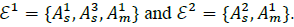

4.3. Evaluating the acceptability of arguments

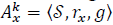

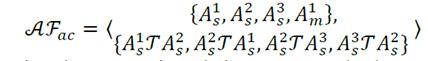

This evaluation is important because it determines which goals pass from one stage to the next. First, we define an argumentation framework, and then we show how the evaluation is done. There is an argumentation framework for each stage; we use the following notation to differentiate them: ac for the activation stage, ev for the evaluation one, de for the deliberation one, and ch for the checking one. Definitions 15 and 16 are adapted from [4].

Definition 15. An argumentation framework is a pair made up of a set of arguments and a set of attacks such that: the set of arguments is the union of a set of standard arguments and a set of motivating, impossibility, deliberation, or checking arguments; and the set of attacks is the union of the attacks among standard arguments and the mixed attacks. A pair (Ai, Aj) in the set of attacks means that Ai attacks Aj (for the sake of simplicity, hereafter, Ai and Aj represent any kind of argument).

The next step is to evaluate the arguments that are part of the argumentation framework, taking into account the attacks among them. The aim is to obtain a subset of arguments that contains no conflicting arguments and has the maximum number of arguments. To obtain it, we use an acceptability semantics. Given an argumentation framework, a semantics determines zero or more sets of acceptable (non-conflicting) arguments. These sets are also called extensions [4]. In our case, a semantics will determine whether a given goal will become (i) active, which happens when at least one motivating argument supporting it belongs to an extension, or (ii) pursuable, which happens when no impossibility argument attacking the goal belongs to an extension.

In this work, we use the preferred semantics because it returns the maximal subsets of conflict-free arguments. However, one drawback of this semantics is that it may return more than one subset. In that case, we consider the number of arguments that support a belief or a goal. For example, if there is a preferred extension with three arguments supporting belief bi, and another preferred extension with two arguments supporting belief bj (for i ≠ j), then we choose the first extension since there are more arguments supporting belief bi. In case of a tie, we choose the extension that maximizes the number of goals that can pass to the next stage. The following definition is adapted from [4]:

Definition 16. Let ε be a set of arguments of an argumentation framework.

ε is conflict-free iff there exist no Ai, Aj ∈ ε such that Ai attacks Aj.

ε defends an argument Ai iff for each argument Aj of the framework, if Aj attacks Ai, then there exists an argument Ak ∈ ε such that Ak attacks Aj.

ε is admissible iff it is conflict-free and defends all its elements.

A conflict-free ε is a complete extension iff ε = {Ai | ε defends Ai}.

ε is a preferred extension iff it is a maximal (w.r.t. set inclusion) complete extension.

We can now define an acceptable argument:

Definition 17. An argument Ai is acceptable if Ai ∈ ε, such that ε is the preferred extension of the argumentation framework.
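The notions of Definition 16 can be checked on small frameworks with a brute-force sketch such as the one below. It enumerates every subset of arguments, so it is only meant for illustration and not as an efficient procedure. Arguments are plain labels and attacks are pairs of labels. The function `select_extension` is one hypothetical reading of the selection criterion among several preferred extensions: it scores an extension by the largest number of its arguments that share a conclusion, and breaks ties with a caller-supplied count of goals that would pass to the next stage.

```python
from collections import Counter
from itertools import combinations


def conflict_free(ext, attacks):
    return not any((a, b) in attacks for a in ext for b in ext)


def defends(ext, arg, args, attacks):
    """Every attacker of `arg` is attacked by some member of `ext`."""
    return all(any((k, j) in attacks for k in ext)
               for j in args if (j, arg) in attacks)


def complete(ext, args, attacks):
    defended = {a for a in args if defends(ext, a, args, attacks)}
    return conflict_free(ext, attacks) and ext == defended


def preferred_extensions(args, attacks):
    """Maximal (w.r.t. set inclusion) complete extensions."""
    subsets = [set(c) for n in range(len(args) + 1)
               for c in combinations(sorted(args), n)]
    completes = [s for s in subsets if complete(s, args, attacks)]
    return [s for s in completes if not any(s < t for t in completes)]


def select_extension(extensions, conclusion, goals_passing):
    """Pick one extension; `conclusion` maps each argument to what it supports."""
    def score(ext):
        counts = Counter(conclusion[a] for a in ext)
        return (max(counts.values(), default=0), goals_passing(ext))
    return max(extensions, key=score)


# A attacks B and B attacks C: A is unattacked and defends C.
chain = preferred_extensions({"A", "B", "C"}, {("A", "B"), ("B", "C")})
# A and B attack each other: there are two preferred extensions.
mutual = preferred_extensions({"A", "B"}, {("A", "B"), ("B", "A")})
```

In the first framework the only preferred extension is {A, C}; in the second one both {A} and {B} are preferred extensions, which is the situation where a selection criterion is needed.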

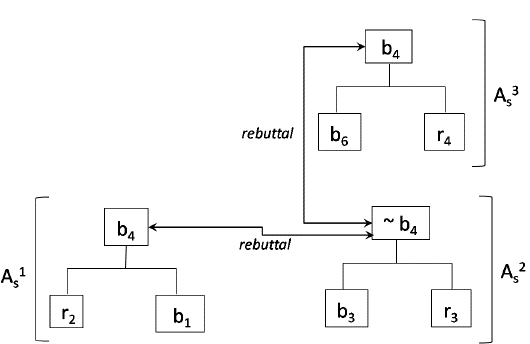

5. Global structures

So far we have defined the beliefs, rules and arguments necessary for the intention formation process and we also have presented the argumentation process. In this section, we present the goal life-cycle (see Fig. 2) that describes the states and transition relationships of goals at runtime. We also present an individual memory record that saves the cognitive path that led a goal to be in its current state, and a general framework for the intention formation process, which includes the structures necessary for the whole intention formation process, i.e. for all goals that have ever been activated by the agent.

Fig. 2 shows all the possible transitions of a goal from the sleeping state until it becomes executive, which happens when all the conditions are favorable for it, i.e. when there is at least one acceptable motivating argument supporting it, there is no impossibility argument attacking it, there is a deliberation argument supporting it, and there is a checking argument supporting it as well. A goal may also be cancelled, which happens when:

The maximum number of cycles for being in a certain state is reached; this means that a goal cannot stay in a certain status during the whole life of the agent. This is even more important when the goal is chosen, since some resources could be reserved for it; if it never becomes executive, such resources could have been used to reach other goals, or

It is deactivated, which happens when the agent receives new information that lets him generate new standard arguments and after a calculation of the preferred extension, the motivating (or deliberation) argument(s) that supported it are no longer acceptable.

Finally, a goal (pursuable or chosen) can be moved back to active state when the agent receives new information that leads to the recalculation of the preferred extension in the evaluation stage and such new preferred extension has an impossibility argument that attacks such goal.

The agent needs to store information about the progress of his goals along the life cycle, in other words, about their transitions from one stage to another. Such data is saved in the individual memory record, which must be updated after each new transition.

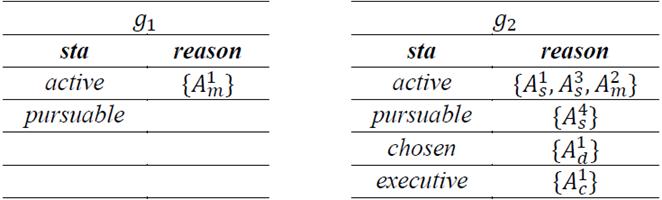

Definition 18. An individual memory record MR of a given goal g is a set of ordered pairs (sta, reason), where sta is one of the possible states of goal g (i.e. active, pursuable, chosen, executive, or cancelled) and reason is a set of arguments that allow g to be in sta (when sta ∈ {active, pursuable, chosen, executive}). Otherwise, when sta = cancelled, reason is a number (1 or 3) that represents the reason to cancel g (see Fig. 2 for the meaning of each number).
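A minimal sketch of such a record is given below. The class name `MemoryRecord` and its methods are hypothetical. The pairs are kept in a list rather than a set, which is an assumption made here so that the order of the transitions is preserved; the argument labels are invented for the example.

```python
STATES = ("active", "pursuable", "chosen", "executive", "cancelled")


class MemoryRecord:
    def __init__(self, goal):
        self.goal = goal
        self.entries = []  # ordered pairs (sta, reason)

    def update(self, sta, reason):
        """Store the new state of the goal together with its reason."""
        if sta not in STATES:
            raise ValueError(f"unknown state: {sta}")
        if sta == "cancelled":
            # For cancelled goals the reason is a number (see Fig. 2).
            if not isinstance(reason, int):
                raise TypeError("a cancelled goal needs a numeric reason")
        else:
            # Otherwise the reason is the set of arguments behind the state.
            reason = frozenset(reason)
        self.entries.append((sta, reason))

    def current(self):
        """Return the latest (sta, reason) pair, or None if empty."""
        return self.entries[-1] if self.entries else None


record = MemoryRecord("take_hospital(man_32)")
record.update("active", {"A_m1"})
record.update("pursuable", set())
record.update("cancelled", 1)
```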

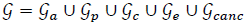

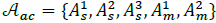

Besides the individual memory record, we also need a structure that stores information about the intention formation process of all goals and other elements that influence this process.

Definition 19. A general framework of the intention formation process is a tuple such that:

η is the maximum number of cycles a goal can remain in a stage,

Monitor is a module in charge of supervising the status of the goals and keeping the consistency in the goal processing model by executing the following tasks:

1. Moving goals from,  , to

, to  , after η is reached.

, after η is reached.

2. Releasing resources when a chosen goal is moved from  to

to  ,

,  or

or

3. Moving deactivated goals from  ,  . A goal g is activated when there are one or more acceptable motivating arguments supporting it. In later reasoning cycles, the agent may obtain new information that triggers the generation of standard arguments, which may attack such motivating argument(s); if so, goal g must be deactivated. It is important to highlight that executive goals that are moved to  are those that have not been executed yet. This holds for this case and the following ones that involve executive goals.

4. Moving goals from  . A goal g passes the evaluation stage when no impossibility argument (related to it) is acceptable; however, in later reasoning cycles, the agent could generate an impossibility argument for g, whereby g becomes impossible to achieve and has to be put in  .

5. Moving goals from  or  to  . A goal g passes the deliberation stage when a deliberation argument (related to it) is acceptable. As in previous cases, new incoming information could generate standard arguments that attack the supporting deliberation argument, whereby goal g is no longer chosen and becomes pursuable again.

6. Moving goals from  to  . An executive goal becomes chosen again when there is an acceptable standard argument that attacks the checking argument that supported its status transition.

7. Removing from 𝒦 all beliefs generated during the deliberation and/or the checking stage about a certain goal g when it is moved to  or to

A general framework is unique for each agent and the parameter η must be defined by the programmer. Every task ℳonitor performs is important for the consistent and correct functioning of the intention formation process. In some situations, more than one task is required; for example, when a chosen goal is cancelled, it has to be moved to  and the beliefs generated for it during the deliberation stage must also be removed from 𝒦.
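The combined case just described (cancelling a chosen goal) can be sketched in Python as follows. This is not the definition of ℳonitor; it is a hedged illustration in which the function name `cancel_chosen_goal`, the dictionary layout of `state`, and the idea that resources are reserved per goal are all assumptions made for the example.

```python
def cancel_chosen_goal(goal, state):
    """Cancel a chosen goal, combining three Monitor tasks (hypothetical layout).

    state keys, all assumed for illustration:
      "chosen", "cancelled": sets of goal names
      "kb": set of belief names (the knowledge base)
      "generated": goal -> beliefs created for it during deliberation/checking
      "reserved": goal -> {resource: amount} held by the goal
      "available": {resource: amount} currently free
    """
    if goal not in state["chosen"]:
        raise ValueError(f"{goal} is not a chosen goal")

    # Move the goal out of the chosen set and into the cancelled set.
    state["chosen"].remove(goal)
    state["cancelled"].add(goal)

    # Release the resources that were held for the goal.
    for resource, amount in state["reserved"].pop(goal, {}).items():
        state["available"][resource] = state["available"].get(resource, 0) + amount

    # Remove from the knowledge base the beliefs generated for this goal.
    state["kb"] -= state["generated"].pop(goal, set())
```

Grouping the three steps in one function reflects the remark that a single status change may require more than one task; a real implementation would also update the individual memory record of the goal.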

6. Application: a natural disaster scenario

Let us suppose that BOB is a robot agent whose main goal is to wander an area struck by a natural disaster in search of people needing help. When a person is seriously injured he/she must be taken to the hospital; otherwise he/she must be sent to a shelter. BOB can also communicate with other robots in order to support them or to ask for support. For a better understanding among agents, the area is divided into numbered zones using ordered pairs.

Now, let us look at the basic mental states of agent BOB.

Sleeping goals (  ):

g1 s = take_hospital(x) // take a person x to the hospital

g2 s = go(x,y) // go to zone (x,y)

g3 s = send_shelter(x) // send a person x to the shelter

Motivating rules (R m = {r1 m, r2 m, r3 m}):

r1 m = injured_severe(x) → take_hospital(x) // if person x is severely injured, then take x to the hospital

r2 m = ~injured_severe(x) → send_shelter(x) // if person x is not severely injured, then send x to the shelter

r3 m = asked_for_help(x,y) → go(x,y) // if BOB is asked for help in zone (x,y), then go to that zone
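To illustrate how a motivating rule instantiates a sleeping goal through unification, the following Python sketch matches rule premises against ground beliefs. It is a simplification under stated assumptions: the tuple encoding of predicates, the names `VARIABLES`, `match`, `substitute` and `instantiate_goals`, and the two example beliefs are hypothetical, and the sketch ignores the acceptability of the resulting motivating arguments, which the model decides by argumentation.

```python
VARIABLES = {"x", "y"}  # symbols treated as variables in this sketch


def match(pattern, fact):
    """Return a substitution that makes pattern equal to fact, or None."""
    if pattern[0] != fact[0] or len(pattern) != len(fact):
        return None
    subst = {}
    for p, f in zip(pattern[1:], fact[1:]):
        if p in VARIABLES:
            # A variable must be bound to the same constant everywhere.
            if subst.setdefault(p, f) != f:
                return None
        elif p != f:
            return None
    return subst


def substitute(term, subst):
    """Apply a substitution to the arguments of a predicate."""
    return (term[0],) + tuple(subst.get(a, a) for a in term[1:])


def instantiate_goals(rules, beliefs):
    """Instantiate the conclusion of every rule whose premise matches a belief."""
    goals = []
    for premise, conclusion in rules:
        for belief in beliefs:
            subst = match(premise, belief)
            if subst is not None:
                goals.append(substitute(conclusion, subst))
    return goals


# Motivating rules r1, r2, r3 encoded as (premise, conclusion) pairs.
RULES = [
    (("injured_severe", "x"), ("take_hospital", "x")),
    (("~injured_severe", "x"), ("send_shelter", "x")),
    (("asked_for_help", "x", "y"), ("go", "x", "y")),
]
BELIEFS = [("injured_severe", "man_32"), ("asked_for_help", 2, 6)]
GOALS = instantiate_goals(RULES, BELIEFS)
```

With these two beliefs, the rules r1 and r3 fire and produce the instantiated goals take_hospital(man_32) and go(2,6), while r2 produces nothing because no matching belief is present.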


To improve readability, hereafter we use names b_i to refer to beliefs and names r_j to refer to standard rules.

It is important to mention that

From here, we will see the intention formation process.

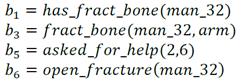

Activation stage:

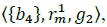

Based on his current mental state, the following arguments are generated (three standard arguments and one motivating argument):

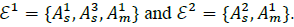

After the unification of variable x with man_32, we have a new belief: b4 = injured_severe(man_32). In the same manner, in motivating argument  variables x and y can be unified with 2 and 6 respectively, which entails g1 = go(2,6). Then, the following is the argumentation framework for this stage, which consists of motivating and standard arguments and the possible attacks among them.

Note that there are rebuttals between standard arguments (see Fig. 3) and no attack on the motivating argument. Applying the preferred semantics to  , we obtain two extensions:

Since the number of standard arguments that support belief b4 is greater than the number of arguments that attack it, extension ε1 is selected to determine which arguments are acceptable, and therefore which goals become active. Nevertheless, notice first that since  , a new motivating argument can be generated:  , where g2 = take_hospital(man_32). Therefore,  is also modified by including this new argument, hence  and  remains the same. The selected preferred extension for  is  . Therefore, two goals are activated, thus
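The way rebuttals between standard arguments give rise to two preferred extensions can be reproduced with a brute-force Python sketch of Dung's preferred semantics [4]. The argument names (`S1`, `S2`, `S3`, `M`) and the attack relation are assumptions chosen to mimic the situation described in the text (two standard arguments supporting b4, one attacking it, and an unattacked motivating argument); they are not the exact framework of the example. The enumeration is exponential and only suitable for very small frameworks.

```python
from itertools import combinations


def preferred_extensions(arguments, attacks):
    """Return the maximal admissible sets of an abstract argumentation framework."""
    args = sorted(arguments)

    def conflict_free(s):
        return not any((a, b) in attacks for a in s for b in s)

    def defends(s, a):
        # Every attacker of a must itself be attacked by some member of s.
        return all(
            any((c, attacker) in attacks for c in s)
            for (attacker, target) in attacks
            if target == a
        )

    def admissible(s):
        return conflict_free(s) and all(defends(s, a) for a in s)

    candidates = [
        frozenset(c)
        for size in range(len(args) + 1)
        for c in combinations(args, size)
    ]
    admissible_sets = [s for s in candidates if admissible(s)]
    # Preferred extensions are the admissible sets that are maximal w.r.t. inclusion.
    return [s for s in admissible_sets if not any(s < t for t in admissible_sets)]


# Hypothetical framework: S1 and S2 support b4, S3 rebuts both, M is unattacked.
ARGS = {"S1", "S2", "S3", "M"}
ATTACKS = {("S1", "S3"), ("S3", "S1"), ("S2", "S3"), ("S3", "S2")}
EXTENSIONS = preferred_extensions(ARGS, ATTACKS)
```

Under these assumptions the framework has exactly two preferred extensions, one containing S1, S2 and M and the other containing S3 and M, so an additional criterion such as the counting rule used in the text is needed to select one of them.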

Evaluation stage

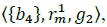

At this stage, BOB has two active goals: to take man_32 to the hospital and to go to zone (2,6). Let us suppose that new knowledge is added to BOB’s knowledge base (it can be obtained by communication or perception; however, these details are out of the scope of this work). Thus, let  where b2 = weight(man_32,70), b7 = availa(bed) and b8 = abtain(bed).

By unifying ~b7 with the impossibility rule  , the following impossibility argument is generated:

which would prevent g2 from passing to the next stage. However, a new standard argument can also be generated by unifying b8 with r1, thus  Notice that  undercuts  in belief b7. Thus, the argumentation framework for this stage is:

and the preferred extension for  is  . Since there is no impossibility argument in εp, no active goal is prevented from becoming pursuable. Hence,  = {} and  {g1,g2} and.

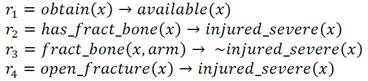

Deliberation stage

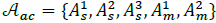

Let P = {P1,P2,P3,P4,P5,P6,P7} be the set of plans of agent BOB. Now, let us evaluate the competence of BOB: EVAL_COMPET  . Since there is at least one plan for achieving g1 and g2, the following beliefs are created and added to BOB’s knowledge base: b10 = has_plans_for(g2), b11 = has_plans_for(g1) and

In order to briefly illustrate the incompatibility among goals, let Res = {(energy,85)} be the available quantity of energy of BOB. Let NEED_RES(p6, energy) = 30 and NEED_RES(p2, energy) = 65 be the energy each plan needs in order to be executed. Notice that there is enough energy for executing each plan individually; however, there is not enough energy for executing both of them (30 + 65 = 95 > 85), therefore both goals are incompatible. Then, the next step is to evaluate them based on their importance. Let IMPORTANCE(g1) = 0.7 and IMPORTANCE(g2) = 0.9; hence, a value belief is created and added to BOB’s knowledge base:  , where b9 = most_valuable(g2). It means that a deliberation argument can be generated:  , which supports the pass of goal g2 to the next stage as there is no argument attacking it. The argumentation framework for this stage is the following:  , and the preferred extension is  Therefore,  and  , which means that goal g2 becomes chosen.
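The resource check and the importance comparison of this stage can be reproduced with a short Python sketch. The numeric values are the ones given in the example; the function names `incompatible` and `most_valuable`, the dictionary encoding, and the pairing of g1 with plan p6 are assumptions made for illustration (the text only pairs g2 with p2 explicitly).

```python
def incompatible(plans, need_res, available):
    """True if the plans together need more of some resource than is available."""
    for resource, amount in available.items():
        total = sum(need_res[p].get(resource, 0) for p in plans)
        if total > amount:
            return True
    return False


def most_valuable(goals, importance):
    """Return the goal with the highest importance value."""
    return max(goals, key=lambda g: importance[g])


RES = {"energy": 85}                                   # Res = {(energy, 85)}
NEED_RES = {"p6": {"energy": 30}, "p2": {"energy": 65}}
PLAN_OF = {"g1": "p6", "g2": "p2"}                      # g1 -> p6 is an assumption
IMPORTANCE = {"g1": 0.7, "g2": 0.9}

# Each plan is affordable alone, but 30 + 65 = 95 exceeds the 85 units available.
BOTH_INCOMPATIBLE = incompatible(PLAN_OF.values(), NEED_RES, RES)
CHOSEN = most_valuable(PLAN_OF, IMPORTANCE) if BOTH_INCOMPATIBLE else None
```

In the model itself the preference is not applied directly as above: it is turned into the value belief b9, which in turn supports a deliberation argument, so that the choice remains open to attack by later arguments.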

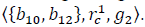

Checking stage

Let us recall that the next step is to verify whether the plan or plans associated with each chosen goal have their context satisfied. Thus, EVAL_CONTEXT((g2,{p2})) = {g2}; hence b12 = satisfied_context(g2) is created, since its plan has its context satisfied. Finally

Both a competence belief and a condition belief were generated for the chosen goal g2; hence, the following checking argument can be generated:  . It means that goal g2 becomes executive and therefore BOB will execute take_hospital(man_32). Hence,  and

Finally, let us show the individual memory record of goals g1 and g2 (see Table 1).

An MR shows, in summarized form, the main information on which all the transitions of both goals were based. It mainly stores the arguments that supported or attacked such transitions.

In the example, few arguments were generated. However, in more complex situations a goal could have more than one motivating argument or, if it remains active, more than one impossibility argument attacking its transition to the next stage. Another complex situation occurs when a goal is cancelled and reactivated. If the agent wants to know more details, he can check the argumentation frameworks, in which he can find whether or not the arguments were attacked and which standard arguments were involved in the argumentation process. Let us also recall that we use short names for referring to generated arguments; hence the agent also knows which beliefs make up an argument. Thus, for instance, the agent can know whether a pursuable goal became chosen because it has no incompatibilities or because it was the most valuable.

7. Properties of this approach

In this section, we demonstrate how our approach satisfies the desirable properties specified in [2].

Property 1. (Diachrony) Let g be a goal and sta its status in the intention formation process. The change of sta is supported by the presence or absence of (acceptable) arguments, which are made up of beliefs and rules in their premises and g in their conclusions.

If ∃ A m such that CONC(A m) = g, then it supports the activation of g and therefore sta = active.

If ∄ A p such that CONC(A p) = g, then this absence supports the transition of g and therefore sta = pursuable.

If ∃ A d such that CONC(A d) = g, then it supports the progress of g and therefore sta = chosen.

If ∃ A c such that CONC(A c) = g, then it supports the transition of g and therefore sta = executive.

Thus, this approach provides support in each stage of the intention formation process by means of arguments, which put together both the beliefs and the goals in only one structure.

Likewise, the presence of certain beliefs acts as reasons for a given goal to advance to the next stage, and the absence of others allows the transition of the goal.
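The four conditions of Property 1 can be read as a small decision procedure. The Python sketch below is one hedged reading of them, assuming that the stages are traversed in order and that each flag states whether an acceptable argument of the given type with conclusion g exists; the function name `goal_status` and its boolean parameters are hypothetical.

```python
def goal_status(motivating, impossibility, deliberation, checking):
    """Return the furthest status supported by the given (acceptable) arguments.

    Each parameter says whether an acceptable argument of that type,
    with the goal as its conclusion, is present.
    """
    if not motivating:
        return None            # not activated: the goal remains a sleeping goal
    if impossibility:
        return "active"        # an impossibility argument blocks the evaluation stage
    if not deliberation:
        return "pursuable"     # absence of impossibility arguments, nothing more
    if not checking:
        return "chosen"        # supported by a deliberation argument
    return "executive"         # supported by a checking argument
```

Note that the second condition is the only one based on the absence of an argument, which is why `impossibility` is tested negatively while the other three flags are tested positively.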

Property 2. (Synchrony) Let g be a goal and MR its individual memory record. This structure stores information about the supporting (or attacking) arguments generated since g is instantiated from a sleeping goal until it becomes executive.

Therefore, it is possible to know the entire cognitive path that led a given goal to its current state. Although the individual memory record is a simple structure, it can act as a pointer to other structures with more information about the state. Thus, depending on the status of the goal, the agent can check the respective argumentation framework to inspect all the arguments and attacks involved in the preferred extension calculation. The agent can also know the reason that caused a goal to be cancelled or moved back to the active status.

Property 3. (Consistency between beliefs and goals) The change of state of any goal is entirely supported by beliefs, which are of different types depending on the stage. Therefore, the role of beliefs is decisive for a goal to continue or not in the intention formation process. Such beliefs can be obtained by the agent from diverse sources or generated by him, as is the case of the beliefs of the deliberation and checking stages.

Some changes in the beliefs of the agent may lead to the construction of standard (or impossibility) arguments that deactivate a goal (or goals) or make it impossible. Consistently with these arguments, such goals are cancelled or moved to the set of active goals. Additionally, the beliefs generated in the deliberation and/or checking stages are deleted from the knowledge base of the agent in order to maintain the equilibrium.

8. Applications

This section presents some applications that could benefit from adopting this model in their agent architectures.

Agents are autonomous interactive entities that may work cooperatively within the structure of a Multi-Agent System (MAS) or within a structure that may include humans. The scenario used in Section 6 is a good example of a situation where agents can and/or need to interact with both humans and other agents.

Another possible scenario is medical treatment. Chen et al. [5] present a framework for flexible human-agent cooperative tasks, where the idea is to provide technological support for human decision making. For example, in a simulated combat medical scenario, a surgeon facing a life-altering decision for a wounded soldier, such as whether or not to amputate a leg, can make a better and more confident decision after conferring with other human experts. In this case, agents could put together the knowledge of these professionals and provide a detailed justification in favor of one decision or another. This kind of scenario belongs to the problem of task delegation (more precisely, decision-making delegation) to agents in collaborative environments. Other examples of possible applications include disaster rescue [6,7], hospital triage [8], elderly care systems [9], crisis response [10], etc.

These kinds of step-by-step justifications are well supported by the goal processing model formalized in this article. Besides, agents act consistently with their beliefs; hence all goals that become intentions are in rational equilibrium with these.

9. Related work

Many works have highlighted the benefits of using argumentation in multi-agent settings; however, there are still few works that use argumentation inside the agent architecture. Agent deliberation is the focal point of the work of Kakas et al. [11]. This work is based on Logic Programming without Negation as Failure (LPwNF), which is used to implement an argumentation framework. Argumentation is used to entail preferences and resolve arising conflicts. Kakas et al. [12] presented the computational logic foundations of a model of agency called KGP (Knowledge, Goals and Plan); in this model, both goal decision and cycle theories for internal control are handled through argumentation. Berariu [13] uses argumentation in order to maintain the consistency of the belief base of BDI agents. A fully integrated argumentation-based agent (ABA) architecture with a highly modular structure is developed in [14].

Argumentation has also been used for generating goals and plans. Amgoud [15] proposes an argumentation-based framework to deal with conflicting desires. She uses the argumentation framework proposed by Dung [4] to determine the intentions of an agent from a set of contradictory desires. Amgoud and Kaci [16] study the generation of bipolar goals in argumentation-based negotiation. They claim that goals have two different sources, (i) beliefs and (ii) other goals, and they propose explanatory and instrumental arguments to justify the adoption of goals. These arguments have a function similar to that of our motivating arguments. Finally, Rahwan and Amgoud [17] use argumentation for generating desires and plans. They propose three different argumentation frameworks: one for arguing about beliefs, another for arguing about desires, and a third one for arguing about which plan to use to achieve a desire.

As shown, most of the related works use argumentation in some specific parts of the reasoning cycle of an agent. Only the work of Kakas et al. [14] aims to create a fully argumentation-based agent architecture. Our proposal uses argumentation for the process of intention formation (also called the goal processing model). The work of Amgoud [15] partially shares our objective: starting from a set of conflicting desires, argumentation is used to resolve such conflicts and to decide which of them will become intentions. We start from a set of sleeping goals, which may or may not be conflicting, and use argumentation to filter the set of goals that will advance to the next stage. The main difference is the path a desire has to follow until it becomes an intention, which in our work is more fine-grained, as it considers not only conflicts but also impossibilities, and checks whether the conditions for achieving a goal hold.

10. Conclusions and future work

We presented an argumentation-based formalization for the intention formation process proposed by Castelfranchi and Paglieri. We defined types of beliefs, rules and arguments taking into account the features described in the abstract model. Arguments are built based on supporting beliefs and goals, and act as filters specifying what goals must pass from one stage to the next or not, and at the same time as reasons that support or attack such transitions.

Using argumentation during the intention formation process gives it flexibility, since the results of the argumentation can vary under newly updated beliefs, which may change the state of a goal either forward or backward. The state of a goal goes forward when the change brings it closer to becoming an intention, and it goes backward when the change takes it farther from becoming an intention. Argumentation also allows the agent to make well-founded and consistent decisions.

An interesting line of future work related to the application of this model is to investigate its relationship with the decision-making process inside the mind of an agent. Currently, we are studying the relation between this model and the calculation of the strength of rhetorical arguments (threats, rewards and appeals) in a persuasive negotiation context.