1. Introduction

Precision agriculture (PA) is an integrated, information- and production-based farming system designed to increase long-term, site-specific and whole-farm production efficiency, productivity and profitability while improving production and environmental quality [25]. According to [28], massive data collection and real-time analysis will be required in the near future, involving sensors that record field variations and plant characteristics and support a quick and accurate diagnosis for intervening in the crop. Such sensors can be carried by satellites, airplanes or Remotely Piloted Aircraft (RPA), or mounted on mobile robots or agricultural machines. They make it possible to identify weeds, pests and diseases, leaf health through reflectance, water stress, nutritional deficits, non-uniformity, planting failures, crop anomalies and other factors that are significant for decision making on the use of fertilizers and pesticides and for mitigation measures.

Currently, farm management requires high-resolution images, usually at the centimeter scale [26,57]. According to [57], there are still some limitations to the application of remote sensing systems in farm management, such as the timely collection and delivery of images, the lack of high spatial resolution data, image interpretation and data extraction, and the integration of these data with agronomic data in specialized systems.

Given these limitations, RPA are expected to be efficient remote sensing tools for PA. RPA have an expanded role that complements the images of manned aircraft and satellites in providing agricultural support [15]. They enable flexible data collection at high spatial and temporal resolution, allow the efficient use of resources, help protect the environment, and provide information for management practices such as localized application with variable-rate machines, sowing, and the application of fertilizers and pesticides [16].

Thus, PA is able to follow technological development based on platforms that are more flexible and accessible to farmers, such as RPA. Allied to PA, this technology allows closer and more frequent monitoring of diverse crops, with better management and handling.

Therefore, this review aimed to present the definitions of RPA, to report their current applications in agriculture as described by several authors, and to study the potential and alternatives of this new technology applied to PA.

agriculture

Remote digital imaging has emerged and become popular through unmanned aircraft systems (UAS). According to [20], a UAS comprises an aircraft and its associated elements, operated with no pilot on board; the aircraft may be a remotely piloted aircraft (RPA) or an autonomous aircraft. Fully autonomous aircraft are those that do not allow intervention once the flight has started.

According to [20], the term unmanned aerial vehicle (UAV) is obsolete, since aviation organizations use the term aircraft. A term recognized worldwide was therefore adopted for the sake of standardization. Another reason is the operational complexity of these aircraft, which require a ground station, a link between the aircraft and the pilot, and other elements necessary for a safe flight. Therefore, the term ‘vehicle’ would be inappropriate to represent the entire system.

Research related to UAS has been conducted worldwide, providing information on these systems, popularly known as drones. The name originated in the United States and refers to the drone, a male bee, and its buzzing, because of the sound produced during operation. Other terms are found in the literature, including unmanned aerial vehicles (UAVs) [3,26,31,40], Remotely Piloted Aircraft Systems (RPAS) [7], Remotely Piloted Aircraft (RPA) [9,20,56], Remotely Piloted Vehicles (RPV) [12,39], Unmanned Aircraft Systems (UAS) [20,52] and Remotely Operated Aircraft (ROA) [46].

In view of the several terminologies that have arisen to represent these aircraft, [20] has designated RPA as the standard term, which is therefore used in this review.

RPAs are aircraft with no pilot on board that are controlled remotely through an interface such as a computer, simulator, digital device or remote control, in contrast to autonomous aircraft, which, once programmed, receive no external intervention during the flight. RPAs are considered a subcategory of unmanned aircraft.

Most RPAs have: GNSS, which provides position information; motors; autopilot and/or remote control; cameras for obtaining images and videos; landing gear; radio transmitter and receiver; and systems for measuring altitude and direction.

RPAs can be classified as fixed wing or rotary wing. Fixed-wing RPAs are suitable for longer distances and reach higher speeds, although they are heavier, larger and more expensive than rotary-wing models, as observed by [36] in their study of the potential and operability of RPA. In most cases, take-off and landing of fixed-wing RPAs are manual, except for those with autopilot.

Rotary-wing platforms are more autonomous, take off and land vertically, and are usually smaller and lighter than fixed-wing ones. They can hover in the air, which is advantageous for imaging targets of interest. They have disk-shaped rotary wings with one propeller (helicopter), two (bicopter), three (tricopter), four (quadcopter), six (hexacopter) or eight (octacopter). However, they have some limitations, such as flight endurance, since they are usually powered by batteries that last 25 min on average, and, being small, they cannot carry a large number of embedded sensors [36].

Precision agriculture uses accurate methods and techniques to monitor areas more efficiently. Geospatial technologies are used to identify variations in the field and to apply strategies to deal with this variability [57]. These technologies include Geographic Information Systems (GIS), Global Navigation Satellite Systems (GNSS) and Remote Sensing, which can aid in obtaining data and provide localized support in agricultural management.

Remote sensing obtains target information through sensors carried on platforms. At the orbital level, the QuickBird satellite reaches a spatial resolution of 0.61 m in the panchromatic band, whereas RPA achieve a spatial resolution of 5 cm, which is useful in studies that require a higher level of detail, as shown by the comparative studies of [33]. The spatial resolution of RPA imagery is directly related to flight altitude: [38] achieved spatial resolutions of 8.1 mm and 65 mm at flight altitudes of 15 and 120 m, respectively.
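The relation between flight altitude and spatial resolution can be illustrated with a minimal sketch. It assumes a nadir-pointing pinhole camera, for which the ground sample distance (GSD) grows linearly with altitude. The function names and the camera parameters (pixel pitch, focal length) are hypothetical and are not taken from the cited studies.

```python
def ground_sample_distance_mm(altitude_m, pixel_pitch_um, focal_length_mm):
    """Ground sample distance in mm per pixel for a nadir pinhole camera.

    GSD = altitude * pixel_pitch / focal_length (similar triangles).
    All camera parameters here are illustrative assumptions.
    """
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    gsd_m = altitude_m * pixel_pitch_m / focal_length_m
    return gsd_m * 1000.0


def scale_gsd_mm(gsd_ref_mm, altitude_ref_m, altitude_new_m):
    """Rescale a known GSD to another altitude, assuming the same camera."""
    return gsd_ref_mm * altitude_new_m / altitude_ref_m


if __name__ == "__main__":
    # Hypothetical camera: 4 um pixels, 8 mm lens, flown at 100 m.
    print(ground_sample_distance_mm(100, 4.0, 8.0))
    # Rescale the 8.1 mm resolution reported at 15 m to a 120 m flight.
    print(scale_gsd_mm(8.1, 15, 120))
```

Under this linear assumption, the 8.1 mm resolution at 15 m scales to about 64.8 mm at 120 m, which is consistent with the 65 mm reported by [38].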

According to [57], in emergency situations involving crop monitoring, nutrient deficit analysis and crop forecasting, orbital sensors cannot provide continuous data at high frequency and with a high level of detail. Orbital platforms also have limitations such as high costs, lack of operational flexibility and low spatial and temporal resolution [52]. Weather conditions are another factor that interferes with image acquisition: on cloudy days, for example, the passage of solar energy is blocked and surface information is lost [16].

Despite advances in remote sensing science, the limitations mentioned above motivated studies on alternative platforms capable of obtaining data remotely at minimum cost. Examples of these platforms are airships [21,47], balloons [48] and kites [1,53]. Although these platforms are low cost compared with orbital platforms, they rely on manual maneuvers that are operationally impractical in certain locations, which makes crop monitoring and follow-up difficult [52,57].

According to [52], several features make RPA a promising technology, notably low cost compared with piloted aircraft and orbital platforms; autonomous data collection and the ability to perform missions; the ability to operate under adverse weather conditions and in hazardous environments; and lower exposure risk for the pilot.

Thus, RPAs are being studied and used to obtain images with high temporal resolution (e.g., collected several times a day) and high spatial resolution (in cm) at low operating costs [12,16,18,24,34,42,54]. They can be applied in smaller areas and in specific locations, where data can be obtained easily and quickly, e.g., to monitor the growth of several crops.

agriculture

The scientific literature shows that digital images obtained through RPAs have been used successfully to generate vigor maps of vineyards. In research performed by [32], images were collected using a hexacopter platform with a near-infrared (NIR) camera, from which normalized difference vegetation index (NDVI) values of a vineyard were obtained. These values agreed with reflectance data collected in the field, which made it possible to generate maps and evaluate the winemaking potential of the vineyard more autonomously using such platforms.

In [16], a method for preprocessing RPA images was developed, since processing is the stage of product generation that demands the most time and computing power. These authors also used a camera with an NIR filter, which made it possible to extract NDVI from the images and hence estimate wheat and barley biomass.

For monitoring oil palm cultivation, [36] tested three RPA models and observed the crop monitoring possibilities offered by the images obtained, such as the control of pruning and the identification of pathologies through infrared images. The tested models were the eBee (fixed wing), the X8 (fixed wing) and the Phantom 2 (rotary wing). Only the eBee had an NIR camera, so it can be recommended for the analysis of some pathologies, such as chlorosis, in palm crops. The eBee had a higher cost than the others, reaching 45 min of flight time and an estimated coverage of 100 ha per flight at 150 m altitude. The X8 has a medium cost and also a 45-min flight time; it was harder to maneuver than the other models tested and covered 100 ha per flight. The Phantom 2 was the cheapest model and had the shortest flight time (25 min), with the capacity to cover 12 ha per flight at 150 m altitude.

In a study on mapping invasive species with RPA, followed by machine-directed application of herbicides in maize, [5] concluded that using post-emergence RPA image data decreased herbicide use, leaving 14 to 39.2% of the area untreated compared with uniform spraying and saving 16 to 45 € ha⁻¹. These studies corroborate the cyclical efficiency of PA, in which data collection, analysis and timely treatment of the problem improve productivity, reduce the use of inputs and ensure uniform application.

In [19], the green normalized difference vegetation index, GNDVI = (NIR - Green) / (NIR + Green), was studied and found to relate linearly to leaf area index and biomass in images obtained by RPA. Thus, the authors obtained a high-resolution leaf area index for the wheat crop.
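As an illustration of how these indices are computed per pixel, the following minimal sketch implements GNDVI as defined above and NDVI in its usual form, (NIR - Red) / (NIR + Red). The function names and the reflectance values are hypothetical and do not come from the cited studies.

```python
def normalized_difference(band_a, band_b):
    """Return (a - b) / (a + b), or None when the denominator is zero."""
    total = band_a + band_b
    if total == 0:
        return None
    return (band_a - band_b) / total


def ndvi(nir, red):
    """NDVI from NIR and red reflectance."""
    return normalized_difference(nir, red)


def gndvi(nir, green):
    """GNDVI from NIR and green reflectance."""
    return normalized_difference(nir, green)


if __name__ == "__main__":
    # Hypothetical reflectance of a vegetated pixel (fractions of 1).
    nir, red, green = 0.50, 0.10, 0.20
    print(round(ndvi(nir, red), 3))     # (0.5 - 0.1) / (0.5 + 0.1)
    print(round(gndvi(nir, green), 3))  # (0.5 - 0.2) / (0.5 + 0.2)
```

Both indices range from -1 to 1. Returning `None` for a zero denominator is a design choice of this sketch, so that pixels with no signal are flagged instead of raising an error.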

In [38], RPA images were used, and the authors observed that mapped regions with significant reductions in leaf area index (LAI) and NDVI can be selected for field inspection of nutrient deficiencies, such as potassium, so that pests and diseases caused by this deficiency can be detected early in the canola crop.

In [10], thermal images obtained by RPA showed a good correlation with field data measured with thermal radiometers in an apple orchard, in which half of the area was irrigated and the other half was subjected to water stress. Canopy temperature was significantly higher in stressed trees.

In [22], georeferenced images of a coffee crop were collected by RPA during the 2002 harvest, and the image pixels were compared with reflectance data collected in the field to create a crop maturity index.

Research on vegetation dynamics in forests, identification of clearings and monitoring was performed by [8], who concluded that RPA can be used for such studies, providing high-resolution images and serving as a tool for forest control. The authors consider that the low cost of RPA missions allows vegetation dynamics to be recorded throughout the year.

In [15], RPA were used to collect images for monitoring and decision support in a coffee plantation; according to the authors, several aspects of crop management may benefit from aerial observation. The study demonstrated the ability of RPAs to monitor a crop over a long period, to obtain images with high spatial resolution, to map invasive weed outbreaks and to reveal irrigation and fertilization anomalies. The authors concluded that RPA is a versatile tool that complements satellites and piloted aircraft in supporting agriculture.

2.2. Embedded technology in RPA

Although RPAs offer high spatial and temporal resolution, they do not offer good spectral resolution. Spectral resolution is related to the wavelengths detected by the sensor and the number of spectral ranges or bands. The sensors embedded in cameras make an RPA more expensive. For instance, a conventional camera has low spectral resolution because it contains only three bands: blue (0.45 to 0.49 μm), green (0.49 to 0.58 μm) and red (0.62 to 0.70 μm), so digital images can be obtained only in the visible range. By comparison, studies performed by [41] verified that a multispectral camera discriminates vegetation better than a conventional camera because of its number of bands. The infrared (IR) spectral range comprises the near-infrared (NIR; 0.78 to 2.5 μm), mid-infrared (MIR; 2.5 to 5.0 μm) and far-infrared (FIR; 5.0 to 10.0 μm), which make it possible to extract spectral responses from images through vegetation indexes such as NDVI.

NDVI is the most widespread index and is calculated based on spectral ranges of red and NIR. There are sensors that measure thermal radiation whose spectral range goes from the mid-infrared (MIR) to the far-infrared (FIR).

Most of the studies used NIR cameras for several purposes [5,13,16,19,32,36]; however, due to the high cost of these sensors, other studies chose cameras covering only the visible bands [23,24,36,42].

In this context, [55] proposed the modified photochemical reflectance index (MPRI), which uses only the green and red bands. In [11], the spatial and temporal variability of the MPRI was studied in images of São Carlos grass acquired by an RPA with an embedded digital camera. The authors concluded that this technique has the potential to aid control and management in grass cultivation areas.

In research performed by [42] using an RPA equipped with a conventional low-cost camera, six visible spectral indices were tested (CIVE, ExG, ExGR, Woebbecke Index, NGRDI, VEG). The authors concluded that ExG and VEG gave the best accuracy for wheat mapping, with values varying from 87.73% to 91.99% at 30 m flight altitude and from 83.74% to 87.82% at 60 m flight altitude.

In [30], the use of conventional RGB cameras was evaluated after the filter was removed from the red band, so that the camera became sensitive to the blue, green and NIR bands. This approach proved to be a promising tool for studies on vegetation monitoring, identification of phenological trends and plant health.

Platforms of this type, fitted with conventional sensors, are becoming an integral part of global society [45]. Even without cameras of high spectral resolution, satisfactory results have been obtained in agriculture. Such platforms act as "an eye in the sky" [35], paving the way for small farmers to use these technologies to inspect and monitor crops quickly and in detail. This technology is intended to be the producer’s eyes, assisting the producer and, in the future, performing real-time inspections that identify anomalies such as pests and diseases before they spread, thereby aiding decision making in the field.
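To make the visible-band indices concrete, the sketch below computes two of them from RGB digital numbers. The formulas are the forms commonly published for these indices, MPRI = (G - R) / (G + R) and ExG = 2g - r - b with normalized chromatic coordinates, and are not quoted from the review. The pixel values, the threshold and the function names are hypothetical.

```python
def mpri(red, green):
    """Modified photochemical reflectance index: (G - R) / (G + R)."""
    total = green + red
    return (green - red) / total if total else 0.0


def excess_green(red, green, blue):
    """Excess green index, ExG = 2g - r - b, on chromatic coordinates."""
    total = red + green + blue
    if total == 0:
        return 0.0
    r, g, b = red / total, green / total, blue / total
    return 2 * g - r - b


def vegetation_mask(pixels, threshold):
    """Flag each (R, G, B) pixel whose ExG exceeds an assumed threshold."""
    return [excess_green(*pixel) > threshold for pixel in pixels]


if __name__ == "__main__":
    # Hypothetical digital numbers: a green canopy pixel and a soil pixel.
    pixels = [(60, 120, 40), (120, 100, 80)]
    for red, green, blue in pixels:
        print(round(mpri(red, green), 3), round(excess_green(red, green, blue), 3))
    print(vegetation_mask(pixels, threshold=0.1))
```

In practice the threshold would be chosen per image, for example with an automatic thresholding method; the fixed value here is only for illustration.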

2.3. Flight planning and image acquisition

Flight planning can be performed with software in the office, after which the flight plan is sent to the RPA. Another possibility is to plan the flight through applications installed on smartphones or tablets connected to the remote control of the RPA, which allows the mission to be planned minutes before the flight. For each aircraft, whether fixed or rotary wing, there is compatible software or an application to plan and execute missions, as shown by [36], who used eMotion 2 for the eBee, Mission Planner for the X8 and the DJI-Phantom application for the Phantom 2.

Some items must be evaluated before the flight: the area, potential hazards from and to the flight, the flight plan, the preparation and configuration of equipment, the equipment check, and the execution of the flight and data collection.

Before the flight, it is necessary to evaluate the area and observe safety factors for the aircraft operation, for the operator and for the people around the operation, including: weather conditions; wind speed; the presence of objects, poles, trees and electric transmission towers; appropriate flight locations away from airports and areas with high population density; landing and take-off places; and ground conditions. Limiting factors related to the specific laws of each city, state or country should also be observed.

Flight planning is a fundamental preliminary step towards obtaining quality products that meet the objectives. The planning is performed with software or free applications available on the market, which act as a ground control station for the RPA. Once the flight plan is complete, the route can be sent from the software to the RPA, which is called uplink in the literature [57]. The captured images can then be transmitted (downlink) to the ground station or stored in the memory on board the RPA.

Generally, software or applications allow the flight to be planned and executed, and some flight definitions can be set before the mission. In compatible software and applications, a new project can be created for each RPA, in which the user enters project settings such as the coordinate system, area, flight direction and camera settings, which are important for correct mission operation. This information is stored in a database and then sent to the aircraft for execution [14].

After the project is activated, the area of interest must be delimited and the image overlaps, flight altitude and aircraft speed must be defined. Some software does not offer the option of setting forward and lateral overlap.

Investigations performed by [27] show that overlap is a factor that affects the accuracy and quality of the final product. The authors tested two forward and lateral overlap settings (80%-50% and 70%-40%) and found that the greater overlap (forward 80% and lateral 50%) was the most recommended for orthomosaic preparation. However, depending on the purpose and product, higher overlaps increase image capture time and produce a larger point cloud, and hence a longer processing time. Whether the application needs a greater overlap should therefore be studied and evaluated.

In [39], sufficient longitudinal and transverse overlap is recommended, at least 70% and 40%, respectively.
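The trade-off between overlap and acquisition effort can be sketched numerically. The example below estimates the number of flight lines and photos for a rectangular field under the two overlap settings tested by [27]. The field size, image size and GSD are hypothetical, and the counting rule (one extra line and one extra photo to close each edge) is a simplifying assumption, not the method of any particular planning software.

```python
import math


def flight_grid(width_m, length_m, gsd_cm, image_px, forward_pct, lateral_pct):
    """Estimate flight lines and photos for a rectangular area.

    image_px is (across_track_px, along_track_px); overlaps are percentages.
    """
    if not (0 <= forward_pct < 100 and 0 <= lateral_pct < 100):
        raise ValueError("overlaps must be in the range [0, 100)")
    # Ground footprint of a single image, in metres.
    across_m = image_px[0] * gsd_cm / 100
    along_m = image_px[1] * gsd_cm / 100
    # Distance between adjacent flight lines and between exposures.
    line_spacing_m = across_m * (100 - lateral_pct) / 100
    photo_spacing_m = along_m * (100 - forward_pct) / 100
    lines = math.ceil(width_m / line_spacing_m) + 1
    photos_per_line = math.ceil(length_m / photo_spacing_m) + 1
    return {
        "line_spacing_m": line_spacing_m,
        "photo_spacing_m": photo_spacing_m,
        "lines": lines,
        "photos": lines * photos_per_line,
    }


if __name__ == "__main__":
    # Hypothetical 600 m x 900 m field, 5 cm GSD, 4000 x 3000 px images.
    for forward, lateral in [(80, 50), (70, 40)]:
        print(forward, lateral, flight_grid(600, 900, 5, (4000, 3000), forward, lateral))
```

With these assumed values, the 80%-50% setting requires 217 photos against 126 for 70%-40%, which illustrates why higher overlap increases capture and processing time.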

Once the flight plan is set, the mission must be saved. To execute the mission, the smartphone, tablet or computer must be connected to the aircraft’s remote control via USB, and the remote control and the aircraft must be turned on. After the desired mission is selected and sent to the aircraft, a pre-flight checklist appears. Once the checklist is completed, the aircraft is ready to take off.

2.4. Processing and software used

The processing of images acquired by RPA follows a semi-automatic workflow that is similar in most software packages: the camera is calibrated, the images are aligned and point clouds are generated, from which the Digital Surface Model (DSM) and Digital Elevation Model (DEM) are derived. These can be used to produce orthomosaics and 3D models and to obtain metric information such as area, volume and height [17,29].

After obtaining the products, image analysis can be performed, such as land use classification based on the spectral bands of the images, or object-oriented image analysis using supervised classification techniques, as in the studies performed by [33] and [23]. Maps can also be produced using vegetation indexes, as in the studies performed by [32] and [42].

Because RPA fly at low altitude, spatial resolution increases; however, they cannot cover areas as large as orbital platforms, and a large number of images must be captured to cover larger areas of interest. Thus, mosaicking these images is a necessary preprocessing step [57]. In this regard, [51] developed a completely automated method for mosaic preparation, which is an advance for the preprocessing of these images because it reduces processing time.

An automatic georeferencing method for small areas was proposed by [54], reaching an accuracy of 0.90 m. This procedure reduces data processing time, but errors of 0.90 m in operations that require accuracy, such as nutrient application, spraying and planting, may cause application errors and thus compromise the crop. Therefore, studies that improve the accuracy of automatic processing remain relevant in the current application scenario of this technology.

Accurate ground control points (GCPs) are important for the geometric correction of products obtained by remote sensing and can significantly increase the accuracy of maps. These control points are targets marked on the ground or characteristic points of the terrain, such as road crossings and corners of buildings, that can be identified in the image [50]. For good accuracy, their coordinates must be collected with a global positioning system (GPS) receiver operating in Real Time Kinematic (RTK) or Post-Processed Kinematic (PPK) mode. Research using RPA images has shown good results with a minimum number of points spread over the study area. For example, [49] used 24 targets as GCPs, collected with dual-frequency GPS in RTK mode in a 30 x 50 m area, and obtained a position error of around ± 0.05 m horizontally and ± 0.20 m vertically, while [44] collected 23 GCPs with dual-frequency GPS RTK in an area of 125 x 60 m and obtained Root-Mean-Square Error (RMSE) values of around 0.04-0.05 m horizontally and 0.03-0.04 m vertically.

Several software packages already facilitate and automate the assembly of the orthomosaic. Such software allows a high degree of automation, enabling people without expertise to produce accurate DSM, DEM and orthomosaic products in a shorter time than conventional photogrammetry [52].
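The RMSE values reported above summarize the differences between coordinates measured in the final product and the coordinates surveyed in the field. A minimal sketch of this calculation, assuming check points given as (x, y, z) tuples in metres, is shown below. The function names and the coordinates are hypothetical and do not reproduce the data of [44] or [49].

```python
import math


def rmse(errors):
    """Root-mean-square of a sequence of error values."""
    if not errors:
        raise ValueError("at least one error value is required")
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def horizontal_vertical_rmse(measured, reference):
    """Return (horizontal RMSE, vertical RMSE) for paired (x, y, z) points."""
    horizontal = [
        math.hypot(m[0] - r[0], m[1] - r[1]) for m, r in zip(measured, reference)
    ]
    vertical = [m[2] - r[2] for m, r in zip(measured, reference)]
    return rmse(horizontal), rmse(vertical)


if __name__ == "__main__":
    # Hypothetical check points: surveyed (reference) vs. read from the mosaic.
    reference = [(100.00, 200.00, 50.00), (130.00, 250.00, 51.00)]
    measured = [(100.03, 200.04, 50.02), (130.00, 250.00, 50.98)]
    h_rmse, v_rmse = horizontal_vertical_rmse(measured, reference)
    print(round(h_rmse, 3), round(v_rmse, 3))
```

Separating the horizontal and vertical components mirrors how the cited studies report accuracy, since vertical errors in photogrammetric products are often larger than horizontal ones.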

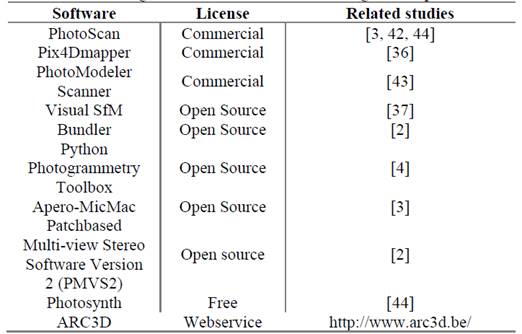

The open source and free software packages currently available (Table 1) are less reliable and accurate than commercial ones such as PhotoScan in the case of large blocks and complex images [6,29]. For image acquisition in small and occasional areas, such software meets the demand; however, obtaining the products (orthomosaics, DEM, DSM) requires processing time, which is still a problem for this technology. According to [29], approximately 25% of the total time is spent on flight planning and RPA image collection, 15% on obtaining ground control points (GCPs) in the field and 60% on photogrammetric processing. Even with automatic software, considerable computing power is needed to complete the processing and generate the products.

2.5. Applications and potentialities

RPA have been applied to obtain data in agriculture for crops ranging from wheat [16,19,42], grape [32], canola [38] and maize [5] to perennial crops such as apple [10], palm [36] and coffee [15,22]. These studies used several platforms, including fixed wing [23,36] and rotary wing [31,32,38,54], carrying RGB [23], multispectral [31] and thermal cameras [10], and applied several forms of image processing for each purpose.

However, the applications of RPA in agriculture are still at an early stage. Studies related to agriculture are still limited, and several topics can be developed further, such as: reducing the image processing time needed to obtain products; sharing products in real time; increasing the capacity of embedded sensors; improving RPA flight endurance; and making the technology available to farmers.

Within this context, these aerial platforms can be studied in combination with sensors, mainly in the visible spectrum, for applications within PA. Examples include vegetation indices in the visible range, observation of planting failures, image segmentation and classification, quantification of planted areas, analysis of crop uniformity, detection of plant height and crown diameter through images, monitoring of invasive plants, plant lodging, monitoring of crop anomalies, construction of historical series for crops and growth monitoring through seasonal flights, among other applications. Multispectral and thermal sensors can also be embedded to correlate image data with water stress, nutritional stress, pests and diseases and other factors related to plant health, which wider ranges of the electromagnetic spectrum can capture more efficiently. Another potential use of these aircraft is the application of agrochemicals (RPA sprayers), in which the aircraft identifies the site that needs pesticide or fertilizer and applies it automatically. This procedure can be useful for small areas and sensitive crops, such as strawberry and tomato, which need specific and localized care in the search for quality and sustainability. It is worth mentioning that studies and developments that seek to integrate image capture with real-time processing, providing the farmer with a fast and accurate crop diagnosis, will contribute significantly to the advance of PA in the field.

3. Considerations

During the last decade, RPA technologies have evolved and expanded their range of applications and uses. RPA-based remote sensing imagery in precision agriculture covers not only weed mapping, but also vigor mapping, mapping and detection of nutrient deficiency, susceptibility to pests, biomass estimation and pasture monitoring.

However, there are still shortcomings in the use of this technology, such as sensor capacity and low spectral resolution. Even with these limitations, it is possible to perform RPA-based remote sensing studies using conventional cameras for agricultural applications, which may lead to better management in the field through images of high temporal and spatial resolution.