1. Introduction

Distributed reasoning is a central aspect required by many applications proposed in the last decades, including mobile and ubiquitous systems, smart spaces, ambient intelligence systems [1] and the semantic web [2]. In these types of systems, distributed entities are able to capture or receive information from their environment and are often able to derive new knowledge or make decisions based on the available knowledge. Given their ability to sense the environment, reason, act upon, and communicate with other entities, they are generically referred to as agents. Therefore, we consider distributed reasoning as the reasoning of agents in a multi-agent system (MAS) situated in an environment, whether physical or virtual.

We consider such an MAS in environments in which agents may have incomplete and inconsistent knowledge. Incomplete knowledge is the possibility that agents do not have complete knowledge about the environment, thereby requiring knowledge imported from other agents to reach conclusions. For example, assume a robot agent a that can feel the wind but is blind, and whose knowledge is expressed by the following rule: “I conclude that it is going to rain (conclusion) if it is windy (premise 1) and there are clouds in the sky (premise 2)”. Agent a cannot conclude that it is going to rain by itself because it cannot find the truth value of premise 2 by itself. However, suppose that agent b is known to agent a. Then, a can ask b whether there are clouds in the sky. If b answers positively, then a can conclude that it is going to rain. Inconsistent knowledge is the possibility of agents having inaccurate or erroneous knowledge about the environment owing to imperfect sensing capabilities or badly intentioned or unreliable agents. For example, suppose that agent c has some defects in its visual sensor. If a asks c whether there are clouds in the sky, it is possible that c cannot see the sky perfectly, thus answering erroneously.

For such scenarios, the concept of contextual reasoning was proposed in the literature. Benerecetti et al. [3] defined contextual reasoning as that which operates on three fundamental dimensions along which a context-dependent knowledge representation may vary. The fundamental dimensions are partiality (the portion of the world that is considered), approximation (the level of detail at which a portion of the world is represented), and perspective (the point of view from which the world is observed). Contextual defeasible logic (CDL), introduced by Bikakis and Antoniou [1], was proposed as an argumentation-based contextual reasoning model based on the multi-context systems (MCS) paradigm, in which the local knowledge of agents is encoded in rule theories and information flow between agents is achieved through mapping rules that associate different knowledge bases. To resolve potential conflicts that may arise from the interaction of agents, a preference order for known agents combined with defeasible argumentation semantics was proposed.

However, the approximation dimension was not well studied in [1]. The idea of approximation is related to the idea of focus, in which the closer one is to an object of interest, the more one is aware of knowledge that is relevant to that object. For example, suppose agent d, which is located in another city distant from a, looks at the sky, sees the clouds, and is also able to feel the wind, but it does not know if this means that it is going to rain (it does not have the same rule as a). It then asks the other agents it knows, but the only agent it can talk to at the moment is a. However, a, living in another city, is totally unaware of the weather conditions at d's location. In this case, it is necessary for d to send some knowledge to other agents to help them reason properly. Thus, d sends two rules without premises (i.e., facts), stating that there are clouds in the sky and that it is windy. When a receives the query, it concludes that it is going to rain, and sends this answer to d.

In this study, we call focus the relevant knowledge at a given moment for a particular reasoning context. All the agents cooperating in the reasoning must be aware of this focus to reach reasonable conclusions in a fully decentralized manner. We are also concerned with the way agents can handle scenarios with multiple foci from different interactions.

Furthermore, this work allows mapping rules that reference the knowledge held by any known agent instead of only enabling references to specific agents. This enables the framework to be used in dynamic and open environments, in which the agents and their internal knowledge are not known a priori and where agents come in and out of the system at any time. We also demonstrate that the proposed approach can model a greater variety of scenarios than related work.

In this paper, we present a structured argumentation framework that includes argumentation semantics, which enables distributed contextual reasoning in dynamic environments, including the possibility of agents sharing focus knowledge when cooperating. This is an extension of a previous paper [4], furthering and developing the formalization details and adding new important features, namely: (1) a partial order relation between the agents using a preference function, which enables us to deal with more dynamic scenarios where not all agents are known by each agent and where agents can have equivalent trust in one another; (2) a similarity-based matching of schematic terms to concrete terms, which improves the range of supported scenarios involving approximate reasoning; and (3) an argument strength calculation that considers a chain of arguments to reach a conclusion when comparing arguments, and takes more advantage of the argument-based reasoning to reach more social-based conclusions. We also demonstrate the applicability of our approach using a more detailed example scenario that incrementally illustrates its modeling and behavior.

The remainder of the paper is organized as follows. In Section 2, we present a background on CDL. In Section 3, we present our contributions in the representational formalization of the framework, as well as the problem we are solving. In Section 4, we present a scenario that illustrates this problem. In Section 5, we present both argument construction and semantics, along with running examples. In Section 6, we present related studies. Finally, we discuss the conclusions and future work.

2. Preliminaries

In this section, we present some basic definitions and a fundamental background on CDL with slightly different nomenclature to facilitate the introduction of the contribution we make in this paper.

Definition 1: A contextual defeasible logic system is composed of a set of agents. Each agent a_i is defined as a tuple of the form (KB_i, P_i) such that

KB_i is the knowledge base (or belief base) of the agent;

P_i is a preference relation, a total order over the other agents in the system of the form P_i = [a_2, a_j, a_3, …, a_1], indicating that, for a_i, a_2 is preferable to a_j, a_j to a_3, and so on, and a_1 is the least preferred agent.

In this framework, knowledge is represented by sets of rules, referred to as knowledge bases, which comprise three different types of rules: local strict rules, local defeasible rules, and mapping defeasible rules. The rules are composed of terms of the form (a_i, x), where a_i is an agent called the definer of the term and x is a literal. A literal represents atomic information (x) or the negation of atomic information (¬x). For a literal x, its complementary literal is the literal corresponding to the strong negation of x, denoted as ~x. More precisely, ~x ≡ ¬x and ~(¬x) ≡ x. For any term p = (a_i, x), the complementary term is denoted as ~p = (a_i, ~x).

The preference order 𝑃𝑖 is useful for resolving conflicts that may arise from interaction among agents because we assume that the global knowledge base (the union of the knowledge bases of all the agents in the system) can be inconsistent.

Definitions 2-4 define the types of rules.

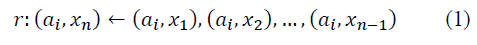

Definition 2: A local strict rule is a rule of the form

A strict rule with empty body denotes non-defeasible factual knowledge.

Note that the rule is local because the definer of each term in the body (premises), as well as of the term in the head (conclusion), is the agent that owns the rule (a_i). Local strict rules are interpreted by classical logic: whenever all the terms in the body of the rule are logical consequences of the set of local strict rules, then the term in the head of the rule is also a logical consequence.

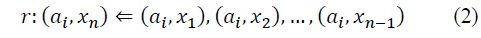

Definition 3: A local defeasible rule is a rule of the form

A defeasible rule with empty body denotes local factual knowledge that can be defeated.

A defeasible rule is similar to a strict rule in the sense that all terms in the body are defined by the same agent that defines the head. However, a defeasible rule cannot be applied to support its conclusion if there is adequate (not inferior) contrary evidence.

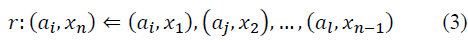

Definition 4: A mapping defeasible rule is a rule of the form

A mapping defeasible rule has at least one term in its body that is a foreign term, that is, a term whose definer is an agent different from the agent that defines the head of the rule, e.g., (a_j, x_2), which is a term defined by a_j.

A mapping rule denoted by r: (a_i, x_n) ⇐ (a_j, x_1) has the following intuition: “If a_i knows that agent a_j concludes x_1, then a_i considers it a valid premise to conclude x_n if there is no adequate contrary evidence.”

For convenience, given any rule r, Head(r) denotes the head (conclusion) of the rule, which is a term, and Body(r) denotes the body (premises) of the rule, which is a set of terms.
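As an illustration only, the notions of term, complementary term, and rule type introduced above can be sketched in Python as follows. The names Term, Rule, complement, and rule_kind are hypothetical choices made for this sketch and are not part of CDL; strong negation is encoded here with a leading "~" on the literal.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Term:
    definer: str  # agent that defines the term, e.g. "a"
    literal: str  # atomic information "x" or its negation "~x"


def complement(term: Term) -> Term:
    """Return the complementary term ~p: same definer, complementary literal."""
    lit = term.literal
    flipped = lit[1:] if lit.startswith("~") else "~" + lit
    return Term(term.definer, flipped)


@dataclass(frozen=True)
class Rule:
    name: str
    head: Term                 # Head(r)
    body: FrozenSet[Term]      # Body(r); empty for factual knowledge
    strict: bool = False       # True for strict rules, False for defeasible ones


def rule_kind(rule: Rule) -> str:
    """Classify a rule following Definitions 2-4."""
    has_foreign = any(t.definer != rule.head.definer for t in rule.body)
    if has_foreign:
        if rule.strict:
            # Mapping rules are always defeasible in the framework.
            raise ValueError("a rule with foreign terms cannot be strict")
        return "mapping defeasible"
    return "local strict" if rule.strict else "local defeasible"


# The rain rule of agent a from the Introduction, with the cloud premise
# imported from agent b.
rain = Rule("r", Term("a", "rain"),
            frozenset({Term("a", "windy"), Term("b", "clouds")}))
print(rule_kind(rain))
```

Keeping terms and rules immutable (frozen) allows them to be stored in sets, which matches the view of a knowledge base as a set of rules.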

Based on the definitions of such agents with their respective rules, CDL proposes an argumentation framework that extends the argumentation semantics of defeasible logic presented in [5], which in turn is based on the grounded semantics of Dung’s abstract argumentation framework [6], with arguments constructed in a distributed manner. The framework uses arguments of local range, that is, they are built based on the rules of a single agent only, which are then interrelated by means of an interdependent set of arguments constructed with respect to mapping rules. Further details concerning the argumentation semantics are presented in Section 5.

3. Architecture and problem formalization

In this section, we present some contributions to CDL’s formalization with new elements to enable its use in a dynamic environment where agents are not known a priori and to enable contextual reasoning considering focus. The first difference lies in the definition of an agent.

Definition 5: An agent a_i is defined as a tuple of the form (KB_i, AK_i, P_i) such that

KB_i is the knowledge base (or belief base) of the agent;

AK_i = {a_1, a_2, …, a_j, …, a_k} is a set of agents known to a_i; and

P_i: AK_i ∪ {a_i} → [0,1] is a preference function such that, given two agents a_j and a_k in AK_i, if P_i(a_j) > P_i(a_k), then a_j is preferred to a_k by a_i. If P_i(a_j) = P_i(a_k), then they are equally preferred by a_i. By default, P_i(a_i) = 1.

The differences between Definition 1 and Definition 5 are the set of known agents and the preference relation, now represented as a function over the known agents, to support scenarios in which not every agent in the system is known by a given agent as well as when an agent has equivalent trust in two or more agents. Such a preference function, combined with the argument strength calculation and the strength comparison function (Definitions 17-22), enables a partial order relation over the known agents of an agent. The preference function can be derived from some degree of trust an agent has in another agent, which can result from various mechanisms, such as those presented in works on trust theory [7] and dynamic preferences [8].
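A minimal sketch of the preference function of Definition 5 is given below, under the assumption that agents are identified by name and that the preference values are stored in a dictionary. The class Agent, its methods, and the numeric values are hypothetical and serve only to show how equal preferences lead to incomparable agents.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Agent:
    name: str
    # P_i restricted to the known agents; the keys play the role of the
    # set of known agents (hypothetical encoding).
    pref: Dict[str, float] = field(default_factory=dict)

    def preference(self, other: str) -> float:
        """Return P_i(other); by default P_i(a_i) = 1."""
        if other == self.name:
            return 1.0
        return self.pref[other]  # raises KeyError for unknown agents

    def preferred(self, x: str, y: str) -> Optional[str]:
        """Return the preferred agent, or None if both are equally preferred."""
        px, py = self.preference(x), self.preference(y)
        if px == py:
            return None
        return x if px > py else y


# Illustrative values only.
a1 = Agent("a1", {"a2": 0.8, "a3": 0.8, "a4": 0.2})
print(a1.preferred("a2", "a4"))  # a2 is preferred to a4
print(a1.preferred("a2", "a3"))  # equally preferred
```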

To enable contextual defeasible reasoning in dynamic and open environments where agents are not known a priori, a new type of rule is necessary, which we call schematic mapping defeasible rule (called schematic rule in the rest of the paper for simplicity), as defined in Definition 6. These rules enable agents to import knowledge from arbitrary known agents without knowing a priori whether such agents have the required knowledge or not. This is a key feature that enables distributed reasoning in a dynamic and open environment where agents’ knowledge about other agents’ knowledge may be incomplete or non-existent. For example, suppose an agent a_1 with a rule whose premise x is not a logical consequence of a_1’s local knowledge, and suppose that a few moments earlier, an agent a_2, which knows that x holds in the environment, entered the system. In this case, without schematic rules, a_1 would not ask a_2 about x.

The body of the schematic rules can be composed of a different type of term called schematic term, which has the form (𝑋,𝑥), where X is a variable that can be bound to any agent in the system and 𝑥 is a literal.

We also consider the possibility of schematic terms being bound (or instantiated) to any term that is sufficiently similar to it. This means that terms whose literals have some similarity between them can be considered when matching schematic terms with concrete terms (not schematic). This enables us to support an even broader range of scenarios, especially those involving approximate reasoning. For example, suppose a scenario in which knowledge is represented through OWL (Web Ontology Language) ontologies [9]. In this case, a similarity function could be based on axioms that use the owl:sameAs property. Other examples include lexical similarity, such as WordNet [10], which could be used in scenarios involving speech or text recognition, and syntactic and semantic agent matchmaking processes, such as Larks [11].

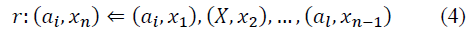

Definition 6: A schematic rule is a rule of the form

A schematic rule has at least one schematic term in its body.

A schematic rule whose body consists of a single schematic term, denoted r: (a_i, x_n) ⇐ (X, x_1), has the following intuition: “If a_i knows that some agent concludes a literal sufficiently similar to x_1, then a_i considers it a valid premise to conclude x_n if there is no adequate contrary evidence.”

The following presents concepts related to the similarity between terms and the concept of instantiated terms.

Definition 7: A similarity function between terms is a function Θ: T × T → [0,1], where T is the set of all possible terms, such that a greater similarity between two terms p and q results in a greater Θ(p, q) value. A similarity threshold st ∈ [0,1] is the minimum value required for the similarity between two terms to be accepted. Two terms p and q are sufficiently similar if Θ(p, q) ≥ st. If p = (X, x) is a schematic term, q = (a_i, y) is a concrete term, and p and q are sufficiently similar, then there exists an instantiated term of the form p_inst = (a_i, y, θ), where θ = Θ(p, q) is called the similarity degree between both terms.

An agent 𝑎𝑖 (which we call the emitter agent) is able to issue queries about the truth of a term to itself or to other agents. When an agent emits an initial query, which results in subsequent queries to other agents (which we call cooperating agents), it is necessary that every agent become aware of the specific knowledge related to the focus (or situation) of the initial query. This is a key feature that enables effective contextual reasoning because it nourishes the reasoning ability of the cooperating agents with the possibility of considering relevant - and possibly unknown a priori by these agents - information in the context of the emitter agent, which can be related to its current activity and/or location. We now define a query context as well as the concept of focus knowledge base.

Definition 8: A query context for a term p is a tuple c_Q = (α, p, a_i, KB_α^F) in which α is a unique identifier of the query context, a_i is the agent that created the query, and KB_α^F is the focus knowledge base of the query context α.

Definition 9: A focus knowledge base KB_α^F of query context α is a set of defeasible rules whose concrete terms can also be represented as focus terms. A focus term is a tuple (F, x) where F indicates that the term must be interpreted temporarily as a local concrete term.

Given these definitions, it is possible to define a MAS composed of agents with contextual defeasible reasoning capabilities, with their own knowledge bases comprising the types of rules presented. Furthermore, such agents have the capability of issuing queries and sharing focus knowledge bases.

Definition 10: A contextual defeasible multi-agent system (CDMAS) is defined as a tuple M = (A, C_Q, Θ, st) in which A = {a_1, a_2, …, a_n} is a set of agents at a given moment situated in the environment, C_Q is a set of query contexts at a given moment, Θ is a similarity function, and st is a similarity threshold.

For convenience, we also introduce some additional notions. The global knowledge base KB_M of a system M is the union of the knowledge bases of all the agents in the system, that is, KB_M = ⋃_{a_i ∈ A} KB_i. An extended global knowledge base KB_Mα = KB_M ∪ KB_α^F includes the focus knowledge of a query context α. We also refer to the extended global knowledge base as context, given that it includes all the relevant knowledge for a given situation.
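The relation between a query context, the global knowledge base, and the extended global knowledge base can be sketched as follows. This is only an illustration under simplifying assumptions: rules are represented by opaque string identifiers, and the names QueryContext, global_kb, and extended_global_kb are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Tuple

Rule = str  # placeholder: a rule is represented only by its identifier


@dataclass(frozen=True)
class QueryContext:
    ident: str                  # unique identifier of the query context
    term: Tuple[str, str]       # queried term p
    creator: str                # agent that created the query
    focus_kb: FrozenSet[Rule]   # focus knowledge base of the query context


def global_kb(kbs: Dict[str, FrozenSet[Rule]]) -> FrozenSet[Rule]:
    """Union of the knowledge bases of all agents in the system."""
    return frozenset().union(*kbs.values())


def extended_global_kb(kbs: Dict[str, FrozenSet[Rule]],
                       ctx: QueryContext) -> FrozenSet[Rule]:
    """Global knowledge base extended with the focus knowledge of ctx."""
    return global_kb(kbs) | ctx.focus_kb


# Hypothetical rule identifiers.
kbs = {"a1": frozenset({"r11"}), "a2": frozenset({"r21", "r22"})}
ctx = QueryContext("alpha", ("a1", "x"), "a1", frozenset({"rF1"}))
print(sorted(extended_global_kb(kbs, ctx)))
```

Because the focus knowledge is attached to the query context rather than stored in any agent, two simultaneous query contexts yield two different extended knowledge bases over the same agents.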

Based on these definitions, it is possible to formalize the problem at hand: “Given an agent a_i with a query context c_Q = (α, p, a, KB_α^F) for a term p in a multi-agent system M, a_i has to answer whether p is a logical consequence of the context KB_Mα or not”. This is a sub-problem of the following problem: “Given a multi-agent system M considering a query context c_Q = (α, p, a, KB_α^F), which terms in the context KB_Mα are its logical consequences?”. As explained in Section 5.3, it is possible that p in KB_Mα is either a logical consequence of the context (KB_Mα ⊨ p) or not (KB_Mα ⊭ p). However, it may not be possible to state whether p is a logical consequence or not when the accepted argument that concludes p is supported by an infinite argumentation tree, as explained in Section 5.2.

4. Example scenarios

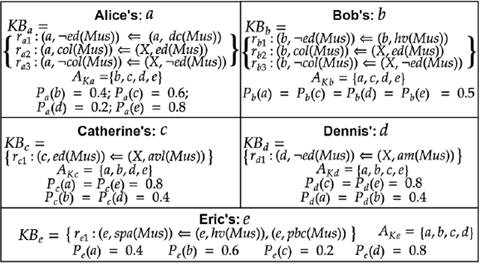

This scenario takes place in an ambient intelligence environment of mushroom hunters in a natural park, who are able to communicate with one another through a wireless network, with the goal of collecting edible mushrooms. Suppose that, at a given moment, there are five agents in the system: Alice (a), Bob (b), Catherine (c), Dennis (d), and Eric (𝑒). The knowledge bases for each agent are presented in Fig. 1. It is interesting to note here that this scenario cannot be modeled by the original CDL presented in [1], given the need to use schematic rules.

Alice has some knowledge about some species, such as the death cap, which she knows is not edible (rule r_a1). Suppose also that Alice and Bob share the same knowledge that, if a mushroom is edible, then they can collect it, and if it is not edible, they should not collect it. They are also willing to consider the opinion of any known agent about the edibleness of a mushroom, that is, if any agent states that a mushroom is edible, then they are willing to accept it to be true if there is no adequate contrary evidence. Such knowledge is thus presented in both a (rules r_a2 and r_a3) and b (rules r_b2 and r_b3). Bob also believes that an object is not edible if it has a volva (rule r_b1).

Catherine believes that a mushroom is edible if it is an Amanita velosa (rule 𝑟𝑐1). However, she is unable to describe a mushroom of this species; thus, the rule is a schematic rule, meaning that she is willing to accept that the mushroom is an Amanita velosa if any other agent states that a mushroom is sufficiently similar to Amanita velosa.

Dennis believes that an object is not edible if it is an Amanita (rule 𝑟𝑑1). However, he does not know anything about the characteristics of Amanita; thus, a schematic term is used in the body of the rule.

Finally, Eric believes that a mushroom is a springtime amanita if it has some properties, such as having a volva and a pale brownish cap (r_e1). Springtime amanita is the same type of mushroom as Amanita velosa, but we assume that the agents in the system are not so certain about it. Therefore, all the agents consider a similarity degree of 0.8 between them. Similarly, springtime amanita is a type of Amanita, and the agents consider a similarity degree of 0.5 between them. Hence, we consider the similarity function to have the value 1 for all lexically identical literals, and specifically the value 0.8 between springtime amanita and Amanita velosa and 0.5 between Amanita and any specific type of Amanita. Suppose also that the similarity threshold for the entire system is 0.4.
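The similarity function and threshold of this scenario can be sketched as follows, applying Definition 7 to literals only. The literal encodings (e.g., "springtime_amanita"), the function names, and the value 0 for unrelated literals are assumptions of this sketch; the text only fixes the values 1, 0.8, 0.5, and the threshold 0.4.

```python
from typing import Optional, Tuple

Term = Tuple[str, str]  # (definer, or "X" for a schematic term, literal)

ST = 0.4  # similarity threshold of the scenario
SPECIFIC_AMANITAS = {"springtime_amanita", "amanita_velosa"}


def literal_similarity(x: str, y: str) -> float:
    """Similarity between two literals in the mushroom scenario."""
    if x == y:
        return 1.0  # lexically identical literals
    if {x, y} == SPECIFIC_AMANITAS:
        return 0.8  # springtime amanita vs. Amanita velosa
    if "amanita" in (x, y) and (x in SPECIFIC_AMANITAS or y in SPECIFIC_AMANITAS):
        return 0.5  # Amanita vs. a specific type of Amanita
    return 0.0      # assumption: unrelated literals are not similar


def instantiate(schematic: Term, concrete: Term,
                st: float = ST) -> Optional[Tuple[str, str, float]]:
    """Return the instantiated term (agent, literal, theta), or None if the
    two terms are not sufficiently similar."""
    theta = literal_similarity(schematic[1], concrete[1])
    if theta >= st:
        return (concrete[0], concrete[1], theta)
    return None


# Catherine's and Dennis's schematic terms matched against Eric's conclusion.
print(instantiate(("X", "amanita_velosa"), ("e", "springtime_amanita")))
print(instantiate(("X", "amanita"), ("e", "springtime_amanita")))
```

With the threshold at 0.4, both schematic terms can be instantiated with Eric's conclusion, but with different similarity degrees.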

Each agent has different levels of confidence in the other agents, as shown in Fig. 1. For example, Alice trusts Eric more (𝑃𝑎(𝑒) = 0.8), followed by Catherine (𝑃𝑎(𝑐) = 0.6), Bob (𝑃𝑎(𝑏) = 0.4) and Dennis (𝑃𝑎(𝑑) = 0.2). In contrast, Bob trusts all the agents equally.

Based on this scenario, some possible queries are illustrated as follows.

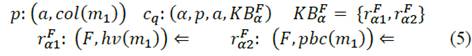

Example 1: Suppose that, at some moment, Alice finds mushroom 𝑚1 that has a volva and a pale brownish cap. Thus, she asks herself a query:

Where 𝑐𝑞 is a new query context whose focus knowledge base contains rules that represent the mushroom’s characteristics.

When receiving her own query, Alice cannot reach a conclusion based only on locally available knowledge. Thus, she must send new queries to other agents, including, in each query, the same 𝑐𝑞 created previously to enable the agents to reason effectively about the mushroom perceived.

Given this context, Alice concludes that she can collect mushroom m1 because of the argument generated from Catherine with the help of Eric (r_c1 and r_e1), although Bob alone (r_b1) and Dennis with Eric (r_d1 and r_e1) state that it is not edible. The reason for this decision is based mainly on the preference function of Alice, and is formally presented in Section 5.

Example 2: Suppose that, simultaneously with the query context 𝛼 of Example 1, Bob finds a mushroom (𝑚2) that he knows is a death cap, and he wants to know if he can collect it or not. He then issues the following query:

In this case, we have a new query context, β, originating from Bob. It is important to note that the context in this case does not include the focus knowledge bound to query context α. As presented in Section 5, Bob will decide not to collect 𝑚2, given that Alice will answer the query stating that 𝑚2 is not edible.

Example 3: Suppose that at a later moment, Eric leaves the system, and Alice finds another mushroom m3 with the same characteristics as m1. Suppose also that Alice did not learn the reasons for collecting m1 in query α (such an ability will be studied in future work). In this case, when issuing a new query γ, which is identical to α, she only receives answers from Bob and Dennis. Bob still states that the mushroom is not edible, and Dennis cannot answer because his conclusion also depends on the knowledge held by Eric. This will result in Alice making the decision to not collect m3. This example demonstrates how the framework supports reasoning in dynamic environments where agents leave and enter the environment continuously.

5. Argument construction and semantics

The CDMAS framework is based on the reasoning of agents according to a specific model of argument construction and defeasible argumentation semantics. In this section, we present the underlying argumentation theory on which this reasoning is based. The formalism for the construction of an interdependent set of arguments is presented in Section 5.1. In Section 5.2, we present the argumentation semantics, which, given an interdependent set of arguments, defines the arguments that are justified and those that are rejected, followed by examples in Section 5.3.

5.1 Argument construction

In this section, we present how arguments are constructed from the rules of each agent, together with focus knowledge from a given query context. The framework uses arguments of local range, in the sense that each argument is built upon the rules of a single agent only, which enables reasoning to be distributed among agents. The arguments of different agents are interrelated by means of an interdependent set of arguments Args_Mα, which is formed from an extended global knowledge base KB_Mα, given a CDMAS M with a query context α. Args_Mα is a set of arguments that are either base arguments, that is, arguments based on rules with empty bodies, or arguments that depend on the existence of other arguments, according to Definition 11.

Definition 11: Given an interdependent set of arguments Args_Mα based on an extended global knowledge base KB_Mα, an argument for a term p = (a_i, x), based on a rule r_i ∈ KB_Mα such that Head(r_i) = p, is an n-ary tree with root p. Given n = |Body(r_i)|, if n = 0, then the tree has a single child 𝜏, which makes the tree a base argument. If n > 0, then the tree has n children, each one corresponding to a term q = (d, y) such that q ∈ Body(r_i), and each child is either:

a local concrete term q' = (a_i, y), if d ∈ {a_i, F} (i.e., q is either a local or a focus term) and q' is the root of a sub-argument arg_i' ∈ Args_Mα based on a rule r_i' ∈ KB_Mα such that Head(r_i') = q', or

an instantiated term q_inst = (a_j, y, 1), with a_j ≠ a_i, if d = a_j (i.e., q is a foreign concrete term) and there exists an argument arg_j ∈ Args_Mα for a term q' = (a_j, y) based on a rule r_j ∈ KB_Mα such that Head(r_j) = q', or

an instantiated term q_inst = (a_j, y', θ), if d = X (i.e., q is a schematic term) and there exists an argument arg_j ∈ Args_Mα for a term q' = (a_j, y') based on a rule r_j ∈ KB_Mα such that Head(r_j) = q' and θ = Θ(q, q') ≥ st (i.e., q and q' are sufficiently similar).

ForeignLeaves(arg) is a function that returns all instantiated terms in the leaves of an argument. If ForeignLeaves(arg)=∅, then arg is an independent argument because it does not depend on the terms concluded by other arguments. A base argument is an independent argument.
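To make the tree structure of arguments and the ForeignLeaves function more concrete, a minimal sketch is given below. It assumes that local concrete terms are inner nodes and that instantiated terms are plain tuples; a base argument is represented here simply as a node without children, which is a simplification of the single child mentioned in Definition 11. The names Node and foreign_leaves, as well as the literal "spa(m1)" used for Eric's conclusion, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple, Union

Instantiated = Tuple[str, str, float]  # (agent, literal, similarity degree)


@dataclass
class Node:
    term: Tuple[str, str]  # local concrete term (agent, literal)
    children: List[Union["Node", Instantiated]] = field(default_factory=list)


def foreign_leaves(arg: Node) -> Set[Instantiated]:
    """Collect all instantiated terms found in the leaves of an argument."""
    leaves: Set[Instantiated] = set()
    for child in arg.children:
        if isinstance(child, Node):
            leaves |= foreign_leaves(child)  # descend into local sub-arguments
        else:
            leaves.add(child)                # instantiated terms are leaves
    return leaves


def is_independent(arg: Node) -> bool:
    """An argument is independent if it has no foreign leaves."""
    return not foreign_leaves(arg)


# Alice's argument depends on a conclusion of Catherine.
arg_a1 = Node(("a", "col(m1)"), [("c", "ed(m1)", 1.0)])
# Eric's argument uses only focus terms, interpreted as local terms.
arg_e1 = Node(("e", "spa(m1)"),
              [Node(("e", "hv(m1)")), Node(("e", "pbc(m1)"))])
print(foreign_leaves(arg_a1), is_independent(arg_e1))
```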

A strict argument is derived only through strict rules. An argument derived using at least one defeasible rule is a defeasible argument.

An important definition for defining argumentation semantics is that of sub-arguments, which is defined as a relation over arguments. It is also important to define the concept of conclusions of arguments.

Definition 12: A sub-argument relation S ⊂ Args_Mα × Args_Mα is such that arg' S arg indicates that arg' is a sub-argument of arg, which occurs when arg' = arg, that is, an argument is always a sub-argument of itself, or when the root of arg' is a descendant of the root of arg. A descendant of the root of arg is either a child of the root or a descendant of one of its children. Therefore, a sub-argument arg'' of argument arg' is also a sub-argument of arg. Thus, S is a reflexive and transitive relation.

Definition 13: Given an argument arg ∈ Args_Mα with root p, p is called the conclusion of arg. The conclusion of any sub-argument arg' of arg is also called a conclusion of arg.

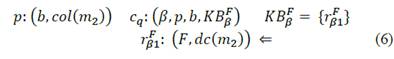

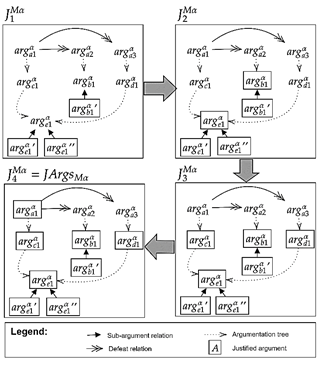

Example 1 - Continuation: The Args_Mα constructed for the query context α is shown in Fig. 2. To emphasize some properties, ForeignLeaves(arg_a1^α) = {(c, ed(m1), 1)} and ForeignLeaves(arg_e1^α) = ∅. This also means that arg_e1^α is an independent argument because it depends only on the knowledge locally available in agent e, given the query context α.

Note that from the schematic rule r_a2, the argument arg_a1^α is formed by instantiating the schematic term (X, ed(m1)) with the conclusion of arg_c1^α. Similarly, arg_a2^α and arg_a3^α are formed by rule r_a3, having their schematic terms instantiated with the conclusions of arg_b1^α and arg_d1^α, respectively. Arguments arg_b1^α and arg_e1^α simply originate from their local rules. Note that the literals originating from the focus rules received in the query are considered to be incorporated by each agent, e.g., (F, hv(m1)) in arg_b1^α and arg_e1^α, and (F, pbc(m1)) in arg_e1^α. arg_c1^α has its schematic term instantiated with the conclusion of argument arg_e1^α with a similarity degree of 0.8, as does arg_d1^α with a similarity degree of 0.5.

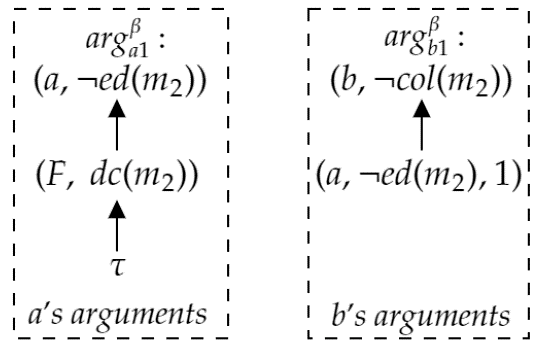

Example 2 - Continuation: From the query context β, a different interdependent set of arguments Args_Mβ is created (Fig. 3).

It is interesting to note that Args_Mβ is quite different from Args_Mα and that the two queries do not interfere with each other. This exemplifies how different foci or contexts lead to different interdependent sets of arguments.

Example 3 - Continuation: The interdependent set of arguments for the query context γ is simply a subset of Args_Mα containing only the arguments equivalent to arg_a2^α and arg_b1^α. The argument equivalent to arg_a1^α is not included because it depends on the existence of arg_c1^α, which in turn depends on the existence of arg_e1^α. Similarly, the argument equivalent to arg_a3^α is not included because it depends on the existence of arg_d1^α, which in turn depends on the existence of arg_e1^α.

5.2 Argumentation semantics

Once the interdependent set of arguments has been constructed, we need a way to determine which arguments (and consequently terms) will be accepted as logical consequences of the context. Argumentation semantics aim to define which arguments are accepted, which arguments are rejected, and which arguments are neither accepted nor rejected.

The definitions of attack, defeat, argumentation tree, support, undercut, acceptable arguments, justified arguments, and rejected arguments represent the core of the argumentation semantics, and are all equivalent to the definitions given in [1]. These definitions are summarized in Section 5.2.1. Our contributions in this work deal more specifically with the definition of the strength comparison function (Stronger), which also depends on a new definition of strength of arguments, an evolution of the concept of rank given in [1]. Such specific contributions are developed in Section 5.2.2. For a more detailed description of the core definitions, we refer the reader to [1] for the original semantics and to [12] for some variants of the semantics. Such works also present theorems and proofs regarding the consistency of the original CDL framework, which also apply to our work because we use the same core semantics.

5.2.1 Core semantics

Arguments with conflicting conclusions are said to attack each other. Therefore, there must be a way to resolve such conflicts to enable the choice of only one of the conflicting arguments. This is formalized by a defeat relation between the arguments, which depends on the definition of a strength comparison function Stronger, presented in Definition 22. The following defines the attack and defeat between arguments.

Definition 14: An argument arg_1 attacks a defeasible argument arg_2 in p if p is a conclusion of arg_2, ~p is a conclusion of arg_1, and the sub-argument of arg_2 with conclusion p is not a strict argument.

Definition 15: Given a function Stronger: Args_Mα × Args_Mα × A → Args_Mα, where A is the set of agents in the system, an argument arg_1 defeats a defeasible argument arg_2 in p from the point of view of an agent a_i ∈ A if arg_1 attacks arg_2 and, given the sub-argument arg_1' of arg_1 with conclusion ~p and the sub-argument arg_2' of arg_2 with conclusion p, Stronger(arg_1', arg_2', a_i) ≠ arg_2'.
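The attack and defeat relations can be sketched as follows, with the strength comparison function passed in as a parameter. This is only an illustration: the class Arg, the helper functions, and in particular toy_stronger with its numeric scores are hypothetical stand-ins and do not reproduce the Stronger function defined later in the paper.

```python
from dataclasses import dataclass
from typing import Callable, Iterator, Optional, Tuple

Term = Tuple[str, str]  # (definer, literal); a leading "~" marks negation


@dataclass(frozen=True)
class Arg:
    name: str
    conclusion: Term                 # root of the argument
    strict: bool = False
    subargs: Tuple["Arg", ...] = ()  # direct proper sub-arguments


def neg(term: Term) -> Term:
    agent, lit = term
    return (agent, lit[1:] if lit.startswith("~") else "~" + lit)


def all_subargs(arg: Arg) -> Iterator[Arg]:
    """An argument and all of its sub-arguments (reflexive, transitive)."""
    yield arg
    for sub in arg.subargs:
        yield from all_subargs(sub)


def sub_with_conclusion(arg: Arg, p: Term) -> Optional[Arg]:
    return next((s for s in all_subargs(arg) if s.conclusion == p), None)


def attacks(arg1: Arg, arg2: Arg, p: Term) -> bool:
    """arg1 attacks the defeasible argument arg2 in p (Definition 14)."""
    target = sub_with_conclusion(arg2, p)
    attacker = sub_with_conclusion(arg1, neg(p))
    return (not arg2.strict and target is not None
            and attacker is not None and not target.strict)


def defeats(arg1: Arg, arg2: Arg, p: Term, agent: str,
            stronger: Callable[[Arg, Arg, str], Arg]) -> bool:
    """arg1 defeats arg2 in p from the point of view of agent (Definition 15)."""
    if not attacks(arg1, arg2, p):
        return False
    sub1 = sub_with_conclusion(arg1, neg(p))
    sub2 = sub_with_conclusion(arg2, p)
    return stronger(sub1, sub2, agent) != sub2


# Hypothetical strengths, used only to exercise the functions.
SCORE = {"arg_a1": 2, "arg_a2": 1}


def toy_stronger(x: Arg, y: Arg, agent: str) -> Arg:
    return x if SCORE[x.name] >= SCORE[y.name] else y


arg_a1 = Arg("arg_a1", ("a", "col(m1)"))
arg_a2 = Arg("arg_a2", ("a", "~col(m1)"))
print(defeats(arg_a1, arg_a2, ("a", "~col(m1)"), "a", toy_stronger))
```

Note that attack is symmetric for two defeasible arguments with complementary conclusions, whereas defeat depends on the outcome of the strength comparison.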

To connect different arguments, we introduce the concept of argumentation tree, which enables us to consider the chain of arguments used to support a conclusion.

Definition 16: An argumentation tree $at$ for a literal $p$ is a tree in which each node is an argument, built using the following steps:

1. In the first step, add an argument $arg_1 \in Args_{M_\alpha}$ for $p$ as the root of $at$;

2. In the next steps, for each distinct term $(a_j, x, \theta) \in ForeignLeaves(arg_1)$, add an argument $arg_2$ with conclusion $(a_j, x)$ as a child of $arg_1$, under the following condition: $arg_2$ can be added in $at$ only if there is no argument $arg_3$ for $(a_j, x)$ that is already a child of $arg_1$ in $at$;

3. For each argument $arg_2$ added as a child in step 2, repeat step 2 for $arg_2$.

The argument for $p$ added in the first step is called the head argument of $at$, and its conclusion $p$ is also the conclusion of $at$. Each node in $at$ is the head of an argumentation tree, which is also a subtree of $at$. If the number of steps required to build $at$ is finite, then $at$ is a finite argumentation tree. For example, in Example 1, a possible argumentation tree with conclusion $(a, col(m1))$ is given by $(arg^{\alpha}_{a1}\ (arg^{\alpha}_{c1}\ (arg^{\alpha}_{e1})))$ in tree parentheses notation. An infinite argumentation tree arises when there are cycles in the global knowledge base, which occurs when, during the building of the argumentation tree, an argument is added that is equal to one of its ancestors, that is, its parent or an ancestor of its parent. In an actual computational implementation, to be presented in future work, these cycles are detected at execution time using a history list passed between agents, and the interrupted argumentation tree is then marked with a Boolean flag indicating that it is infinite.
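The construction in Definition 16, together with the history-based cycle detection described above, can be sketched as follows. This is only an illustration under stated assumptions, not the distributed implementation mentioned in the text: arguments are identified by plain strings, `foreign_leaves` and `arguments_for` are hypothetical lookup tables, every foreign leaf is assumed to have at least one argument, and the first available argument is always chosen as the child.

```python
from typing import Dict, List, Tuple

Leaf = Tuple[str, str, float]   # (agent a_j, literal x, similarity theta)
Tree = Tuple[str, list]         # (argument name, list of child trees)


def build_tree(
    arg: str,
    foreign_leaves: Dict[str, List[Leaf]],
    arguments_for: Dict[Tuple[str, str], List[str]],
    history: Tuple[str, ...] = (),
) -> Tuple[Tree, bool]:
    """Build one argumentation tree headed by `arg`.

    Returns the tree and a Boolean flag that is True when construction was
    interrupted because an argument equal to one of its ancestors was reached.
    """
    if arg in history:                    # cycle: arg repeats an ancestor
        return (arg, []), True
    children, infinite, seen = [], False, set()
    for agent, literal, _theta in foreign_leaves.get(arg, []):
        if (agent, literal) in seen:      # at most one child per (a_j, x)
            continue
        seen.add((agent, literal))
        # Assumption: pick the first available argument for (a_j, x).
        child_arg = arguments_for[(agent, literal)][0]
        child, child_infinite = build_tree(
            child_arg, foreign_leaves, arguments_for, history + (arg,)
        )
        children.append(child)
        infinite = infinite or child_infinite
    return (arg, children), infinite


# Chain shaped like the tree of Example 1; the literals "x" and "y" are placeholders.
leaves = {"a1": [("c", "x", 1.0)], "c1": [("e", "y", 0.8)], "e1": []}
args_for = {("c", "x"): ["c1"], ("e", "y"): ["e1"]}
tree, infinite = build_tree("a1", leaves, args_for)
```

Here `tree` is the nested tuple `("a1", [("c1", [("e1", [])])])` and `infinite` is `False`; a knowledge base in which an argument depends on itself through other agents would set the flag to `True`.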

The set of justified arguments w.r.t. $KB_{M_\alpha}$ is defined as $JArgs_{M_\alpha} = \bigcup_{i=0}^{\infty} J^{i}_{M_\alpha}$, where $J^{i}_{M_\alpha}$ is a partial set of justified arguments, recursively defined as follows: (1) $J^{0}_{M_\alpha} = \emptyset$; (2) $J^{i+1}_{M_\alpha} = \{arg \in Args_{M_\alpha} \mid arg \text{ is acceptable w.r.t. } J^{i}_{M_\alpha}\}$. An argument $arg_1$ is acceptable w.r.t. a set of arguments $Acc$ if: (1) $arg_1$ is a strict argument, or (2) $arg_1$ is supported by $Acc$ and every argument in $Args_{M_\alpha}$ that defeats $arg_1$ is undercut by $Acc$. An argument $arg_1$ is supported by a set of arguments $Sup$ if: (1) every sub-argument of $arg_1$ is in $Sup$, and (2) there exists a finite argumentation tree $at$ with head $arg_1$ such that every argument in $at$, except $arg_1$, is in $Sup$. A defeasible argument $arg_1$ is undercut by a set of arguments $Und$ if there exists an argument $arg_2$ such that $arg_2$ is supported by $Und$, and $arg_2$ defeats a sub-argument of $arg_1$ or an argument in $at$ for every argumentation tree $at$ with head $arg_1$.
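The recursive definition of the partial sets of justified arguments can be read as a fixpoint iteration. The sketch below shows only that iteration: the `acceptable` predicate is supplied by the caller, and the toy predicate used in the example ignores defeat and undercut entirely, which is a deliberate simplification. The argument names loosely mirror the dependency chain of Example 2 and are otherwise hypothetical.

```python
from typing import Callable, FrozenSet, Iterable, List


def justified_stages(
    args: Iterable[str],
    acceptable: Callable[[str, FrozenSet[str]], bool],
) -> List[FrozenSet[str]]:
    """Return [J^0, J^1, ...] up to the first stage that adds nothing new."""
    all_args = list(args)
    stages: List[FrozenSet[str]] = [frozenset()]
    while True:
        current = stages[-1]
        # Accumulate with the previous stage so the sequence never shrinks
        # and the loop terminates on a finite set of arguments.
        nxt = current | frozenset(a for a in all_args if acceptable(a, current))
        if nxt == current:
            return stages
        stages.append(nxt)


# Toy acceptability: an argument is acceptable once everything it depends on
# is already justified (assumption: no argument is defeated).
deps = {"a1'": set(), "a1": {"a1'"}, "b1": {"a1"}}
stages = justified_stages(deps, lambda a, acc: deps[a] <= acc)
```

With these inputs the stages grow one argument at a time, from the empty set to `{"a1'"}`, then `{"a1'", "a1"}`, and finally all three arguments.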

It is important to note that an argument arg1 must be the head argument of a finite argumentation tree to be supported. Arguments that are the heads of infinite argumentation trees cannot be supported but they can be used to defeat other arguments. If the defeated argument is not supported by a set of arguments that undercuts the defeater, it prevents the defeated argument from being acceptable, leaving its conclusion undecided.

The set of rejected arguments w.r.t. $KB_{M_\alpha}$ is denoted as $RArgs_{M_\alpha} = \bigcup_{i=0}^{\infty} R^{i}_{M_\alpha}$, where $R^{i}_{M_\alpha}$ is a partial set of rejected arguments, recursively defined as follows: (1) $R^{0}_{M_\alpha} = \emptyset$; (2) $R^{i+1}_{M_\alpha} = \{arg \in Args_{M_\alpha} \mid arg \text{ is rejected w.r.t. } R^{i}_{M_\alpha}, JArgs_{M_\alpha}\}$. An argument $arg_1$ is rejected w.r.t. the sets of arguments $Rej$ (a set of already rejected arguments) and $Acc$ (a set of acceptable arguments) when: (1) $arg_1$ is not a strict argument; and either (2.1) a sub-argument of $arg_1$ is in $Rej$; or (2.2) $arg_1$ is defeated by an argument supported by $Acc$; or (2.3) for every argumentation tree $at$ with head $arg_1$, there exists an argument $arg_2$ in $at$ such that either (2.3.1) a sub-argument of $arg_2$ is in $Rej$, or (2.3.2) $arg_2$ is defeated by an argument supported by $Acc$.

An argument is justified when it resists every refutation. A term $p$ (and its literal) is justified in $KB_{M_\alpha}$ when there exists a justified argument for $p$, in which case $p$ is a logical consequence of $KB_{M_\alpha}$ ($KB_{M_\alpha} \models p$). The intuition behind a rejected argument is that it is not a strict argument and is either supported by already rejected arguments or defeated by an argument supported by acceptable arguments. A term $p$ is rejected in $KB_{M_\alpha}$ if no argument with conclusion $p$ exists in $Args_{M_\alpha} \setminus RArgs_{M_\alpha}$, in which case $p$ is not a logical consequence of $KB_{M_\alpha}$ ($KB_{M_\alpha} \not\models p$).

5.2.2 Strength of arguments and strength comparison

In this work, we propose that strength comparison between arguments must be based not only on the isolated arguments but also on the argumentation tree that supports each argument. Therefore, we denote by $AT_{arg}$ the set of all possible argumentation trees with head $arg$. Specifically, we are interested in the most favorable argumentation tree in $AT_{arg}$, which we call the chosen argumentation tree for an argument (denoted $at^{*}_{arg}$). To achieve this, we need a way to calculate the strength of an argumentation tree, which will also enable us to define the strength of an argument. However, these definitions first require the definitions of the support set of an argumentation tree and the strength of an instantiated term, as presented below.

Definition 17. The support set of an argumentation tree $at$, denoted $SS_{at}$, is the set of all instantiated terms in the leaves of every argument in the argumentation tree $at$, that is, $SS_{at} = \bigcup_{arg \in at} ForeignLeaves(arg)$.

Definition 18. The strength of an instantiated term $p = (a_j, l, \theta)$ from the point of view of agent $a_i$ is $St_p(p, a_i) = P_i(a_j) \times \theta$.

Definition 19. The strength of an argumentation tree $at$ with respect to its head argument $arg$, defined by agent $a_i$, is the sum of the strengths of all instantiated terms in the support set of the argumentation tree ($SS_{at}$), that is, $St_t(at, a_i) = \sum_{p \in SS_{at}} St_p(p, a_i)$.

An agent $a_i$ assigns a strength to an argumentation tree based on the strengths of the instantiated terms that compose its support set. For each term, the agent gives it a strength based on its preference function $P_i$ and the term's similarity degree $\theta$. For example, in Example 1, agent $a$ will assign a strength $P_a(e) \times 0.8 = 0.8 \times 0.8 = 0.64$ to the instantiated term $(e, spa(m1), 0.8)$ and a strength $P_a(b) \times 1 = 0.4 \times 1 = 0.4$ to the instantiated term $(b, ed(m1), 1)$.
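The calculation in Definitions 18 and 19 can be sketched in a few lines of Python. The preference values and the two instantiated terms are those quoted above for agent a in Example 1; the function names and the tuple encoding of terms are assumptions, and placing both terms in a single support set is purely hypothetical, done only to show the summation.

```python
import math
from typing import Dict, Iterable, Tuple

Term = Tuple[str, str, float]   # (agent a_j, literal, similarity degree theta)


def term_strength(term: Term, preference: Dict[str, float]) -> float:
    """Definition 18: preference for the term's agent times its similarity."""
    agent, _literal, theta = term
    return preference[agent] * theta


def tree_strength(support_set: Iterable[Term], preference: Dict[str, float]) -> float:
    """Definition 19: sum over the distinct terms of the support set."""
    return sum(term_strength(t, preference) for t in set(support_set))


P_a = {"e": 0.8, "b": 0.4}      # preference function of agent a (Example 1)
t_e = ("e", "spa(m1)", 0.8)
t_b = ("b", "ed(m1)", 1.0)

assert math.isclose(term_strength(t_e, P_a), 0.64)
assert math.isclose(term_strength(t_b, P_a), 0.4)
# Hypothetical support set containing both terms: 0.64 + 0.4.
assert math.isclose(tree_strength([t_e, t_b], P_a), 1.04)
```

The comparisons use `math.isclose` because the products are floating-point values.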

The similarity degree θ is considered in the strength calculation based on the intuition that less similarity to the actual term that was used to create the instantiated term implies less certainty about the usefulness of the term to support an argument.

It is also important to note that this is the default definition of strength. It is possible to define different strength calculations, as well as different definitions for the function Stronger (Definition 22), depending on the specificities of the application that will be implemented. For example, this default strength definition is based on the intuition that all arguments from all agents that contributed to reach a conclusion must be considered, including the total number of arguments and their respective strengths. In this way, a kind of social trust is implemented, similar to the skeptical agent proposed in [13]. Different formulas for strength calculation can also be used to implement more credulous or more skeptical agents. For example, a possible strength calculation could consider the “distance” of arguments in the argumentation tree to the head argument to demonstrate that an agent trusts less in arguments resulting from subsequent queries, e.g., as Alice asks Catherine, who then asks Eric, Alice could assign a lower value to Eric’s argument.
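One way to realize the distance-based variant mentioned above is to discount each argument's contribution by its depth in the argumentation tree. The sketch below is hypothetical throughout: the `decay` factor, the nested-tuple encoding of trees, and the preference values assigned to Catherine and Eric are all assumptions, and, unlike Definition 19, a term that appears in several arguments is counted once per occurrence.

```python
import math
from typing import Dict, List, Tuple

Term = Tuple[str, str, float]          # (agent, literal, similarity theta)
Node = Tuple[List[Term], list]         # (foreign leaves, child nodes)


def discounted_strength(
    node: Node,
    preference: Dict[str, float],
    decay: float = 0.5,
    depth: int = 0,
) -> float:
    """Sum term strengths, scaling those found at depth d by decay ** d."""
    leaves, children = node
    own = sum(preference[agent] * theta for agent, _lit, theta in leaves)
    below = sum(
        discounted_strength(child, preference, decay, depth + 1)
        for child in children
    )
    return (decay ** depth) * own + below


# Alice's argument relies on Catherine, whose argument relies on Eric.
alice_prefs = {"catherine": 0.6, "eric": 0.8}            # hypothetical values
eric_arg: Node = ([], [])
catherine_arg: Node = ([("eric", "y", 1.0)], [eric_arg])
alice_arg: Node = ([("catherine", "x", 1.0)], [catherine_arg])

# Eric's term is one step further from the head, so it is halved.
assert math.isclose(discounted_strength(alice_arg, alice_prefs, decay=0.5), 1.0)
# With decay = 1.0 no discount is applied.
assert math.isclose(discounted_strength(alice_arg, alice_prefs, decay=1.0), 1.4)
```

A decay below 1 yields an agent that places less trust in answers obtained through longer query chains.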

Still with respect to the definition of strength, considering the entire argumentation tree in the strength calculation avoids a strong bias toward a specific agent, as occurs with the default strategy proposed in the original work on CDL [1], which considers only the strengths of the foreign leaves in the head argument. The problem with that strategy is that an agent will always choose responses from its most preferred agent.

We are interested in the chosen argumentation tree ($at^{*}_{arg}$), that is, the argumentation tree with the most favorable strength among those with head $arg$, where the strength of each subtree is evaluated from the point of view of the agent that defines $arg$. The following presents the definitions of the chosen argumentation tree and the strength of an argument.

Definition 20. The chosen argumentation tree for an argument $arg$, denoted $at^{*}_{arg} \in AT_{arg}$, is the argumentation tree with head $arg$ such that each child $arg'$ is the head of the argumentation tree with the greatest strength among all argumentation trees in $AT_{arg'}$.

Definition 21. The strength of an argument $arg$ is given by the strength of the chosen argumentation tree $at^{*}_{arg}$, that is, $St_{arg}(arg, a_i) = St_t(at^{*}_{arg}, a_i)$.
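Definitions 20 and 21 suggest a recursive computation, sketched below under several assumptions: all argumentation trees are finite, every foreign leaf has at least one candidate argument, and, when several arguments are available for the same foreign leaf, the one whose own chosen tree is strongest is selected. The last point is one possible reading of "most favorable" rather than something stated by the definitions. The lookup tables, literals and preference values are hypothetical.

```python
import math
from typing import Dict, List, Set, Tuple

Term = Tuple[str, str, float]   # (agent a_j, literal, similarity theta)


def strength(support: Set[Term], preference: Dict[str, float]) -> float:
    """Definition 19 applied to an explicit support set."""
    return sum(preference[agent] * theta for agent, _lit, theta in support)


def chosen_support_set(
    arg: str,
    foreign_leaves: Dict[str, List[Term]],
    arguments_for: Dict[Tuple[str, str], List[str]],
    preference: Dict[str, float],
) -> Set[Term]:
    """Support set of the chosen argumentation tree headed by `arg`."""
    leaves = foreign_leaves.get(arg, [])
    support = set(leaves)
    for key in sorted({(agent, lit) for agent, lit, _theta in leaves}):
        candidates = [
            chosen_support_set(c, foreign_leaves, arguments_for, preference)
            for c in arguments_for[key]
        ]
        # Assumption: keep the candidate child whose chosen tree is strongest.
        support |= max(candidates, key=lambda s: strength(s, preference))
    return support


def argument_strength(arg, foreign_leaves, arguments_for, preference) -> float:
    """Definition 21: strength of the chosen argumentation tree."""
    return strength(
        chosen_support_set(arg, foreign_leaves, arguments_for, preference),
        preference,
    )


leaves = {"a1": [("c", "x", 1.0)], "c1": [("e", "y", 0.8)], "c2": [("b", "z", 1.0)]}
args_for = {("c", "x"): ["c1", "c2"], ("e", "y"): ["e1"], ("b", "z"): ["b1"]}
prefs = {"c": 0.6, "e": 0.8, "b": 0.4}   # preferences of the agent defining a1

# c1 (0.8 * 0.8 = 0.64) beats c2 (0.4 * 1.0 = 0.4), so a1 scores 0.6 + 0.64.
assert math.isclose(argument_strength("a1", leaves, args_for, prefs), 1.24)
```

All strengths are computed with the preference function of the agent that defines the head argument, following the text above.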

Given the definition of the strength of an argument, it is possible to define the function Stronger that compares the strengths of two arguments.

Definition 22. The function $Stronger$ is such that, given two arguments $arg_1$ and $arg_2$ and an agent $a_i$, $Stronger(arg_1, arg_2, a_i) = arg_1$ if $St_{arg}(arg_1, a_i) \geq St_{arg}(arg_2, a_i)$. Otherwise, $Stronger(arg_1, arg_2, a_i) = arg_2$.

It is important to note that there may be cases where the strengths of the two arguments are equal. In these cases, the first argument passed is chosen as the stronger argument.
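The tie-breaking behavior of Definition 22 is easy to see in code. In the sketch below, `st_arg` is a hypothetical callable standing in for the strength function of Definition 21, and arguments are represented by plain strings.

```python
from typing import Callable, Dict, TypeVar

Arg = TypeVar("Arg")


def stronger(
    arg1: Arg,
    arg2: Arg,
    agent: str,
    st_arg: Callable[[Arg, str], float],
) -> Arg:
    """Definition 22: arg1 is returned unless arg2 is strictly stronger."""
    return arg1 if st_arg(arg1, agent) >= st_arg(arg2, agent) else arg2


scores: Dict[str, float] = {"x": 1.0, "y": 1.0, "z": 2.0}   # hypothetical strengths


def lookup(arg: str, agent: str) -> float:
    return scores[arg]


assert stronger("x", "y", "a", lookup) == "x"   # tie: first argument wins
assert stronger("y", "x", "a", lookup) == "y"   # same tie, other order
assert stronger("x", "z", "a", lookup) == "z"   # strictly stronger second argument
```

Read together with Definition 15, this tie-breaking rule appears to imply that two attacking arguments of equal strength defeat each other, since in either order the function returns the attacker's sub-argument rather than the attacked one.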

5.3 Argumentation semantics example

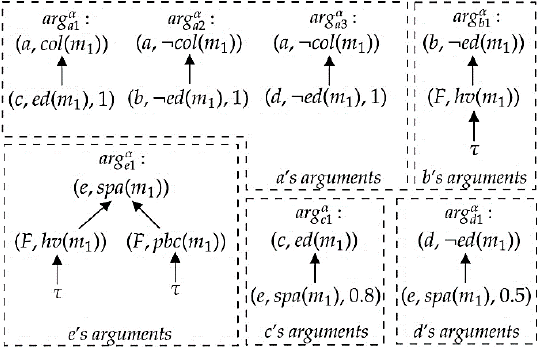

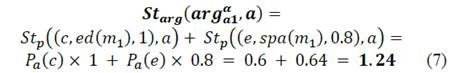

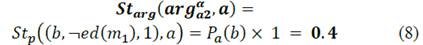

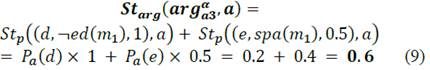

Example 1 - Continuation: From the interdependent set of arguments $Args_{M_\alpha}$ constructed in Section 5.1, the following are the strengths of the arguments $arg^{\alpha}_{a1}$, $arg^{\alpha}_{a2}$ and $arg^{\alpha}_{a3}$, which attack each other:

Therefore, as illustrated in Fig. 4, $arg^{\alpha}_{a1}$ defeats both $arg^{\alpha}_{a2}$ and $arg^{\alpha}_{a3}$. The following steps, also presented in Fig. 4, are used to create the set of justified arguments, starting from $J^{0}_{M_\alpha} = \emptyset$: (1) the arguments $arg^{\alpha\prime}_{b1}$, $arg^{\alpha\prime}_{e1}$ and $arg^{\alpha\prime\prime}_{e1}$ are justified since they are base arguments and no other argument defeats them; thus, $J^{1}_{M_\alpha} = J^{0}_{M_\alpha} \cup \{arg^{\alpha\prime}_{b1}, arg^{\alpha\prime}_{e1}, arg^{\alpha\prime\prime}_{e1}\}$; (2) the arguments $arg^{\alpha}_{b1}$ and $arg^{\alpha}_{e1}$ are justified since their respective sub-arguments are supported by $J^{1}_{M_\alpha}$ and no other argument defeats them; thus, $J^{2}_{M_\alpha} = J^{1}_{M_\alpha} \cup \{arg^{\alpha}_{b1}, arg^{\alpha}_{e1}\}$; (3) $arg^{\alpha}_{c1}$ and $arg^{\alpha}_{d1}$ are justified, since they are supported by $J^{2}_{M_\alpha}$ (because all the other arguments in their respective argumentation trees are in $J^{2}_{M_\alpha}$) and no other argument defeats them; although $arg^{\alpha}_{a2}$ is also supported by $J^{2}_{M_\alpha}$, it is defeated by $arg^{\alpha}_{a1}$ and $J^{2}_{M_\alpha}$ does not undercut $arg^{\alpha}_{a1}$, so $arg^{\alpha}_{a2}$ is not acceptable; therefore, $J^{3}_{M_\alpha} = J^{2}_{M_\alpha} \cup \{arg^{\alpha}_{c1}, arg^{\alpha}_{d1}\}$; (4) $arg^{\alpha}_{a1}$ is justified because it is supported by $J^{2}_{M_\alpha}$ and, although it is attacked by $arg^{\alpha}_{a2}$ and $arg^{\alpha}_{a3}$, it is not defeated by them; $arg^{\alpha}_{a3}$ is not justified for the same reason as $arg^{\alpha}_{a2}$ in step 3; thus, $J^{4}_{M_\alpha} = J^{3}_{M_\alpha} \cup \{arg^{\alpha}_{a1}\}$. As there are no more arguments to justify, $JArgs_{M_\alpha} = J^{4}_{M_\alpha}$.

Example 2 - Continuation: From the interdependent set of arguments $Args_{M_\beta}$ constructed in Section 5.2, the following steps are used to create the set of justified arguments, starting from $J^{0}_{M_\beta} = \emptyset$: (1) the argument $arg^{\beta\prime}_{a1}$ is justified since it is a base argument and no argument defeats it; thus, $J^{1}_{M_\beta} = J^{0}_{M_\beta} \cup \{arg^{\beta\prime}_{a1}\}$; (2) $arg^{\beta}_{a1}$ is justified since its only sub-argument is supported by $J^{1}_{M_\beta}$; thus, $J^{2}_{M_\beta} = J^{1}_{M_\beta} \cup \{arg^{\beta}_{a1}\}$; (3) $arg^{\beta}_{b1}$ is justified since the argument $arg^{\beta}_{a1}$, which is the only other argument in the argumentation tree with head argument $arg^{\beta}_{b1}$, is supported by $J^{2}_{M_\beta}$; thus, $J^{3}_{M_\beta} = J^{2}_{M_\beta} \cup \{arg^{\beta}_{b1}\}$. As there are no more arguments, $JArgs_{M_\beta} = J^{3}_{M_\beta}$.

Example 3 - Continuation: It is easy to visualize this case when considering the steps of Example 1, as illustrated in Fig. 4, but ignoring the existence of the arguments equivalent to $arg^{\alpha}_{a1}$, $arg^{\alpha}_{a3}$, $arg^{\alpha}_{c1}$, $arg^{\alpha}_{d1}$ and $arg^{\alpha}_{e1}$ and their sub-arguments. The main difference is that $arg^{\gamma}_{a2}$ (equivalent to $arg^{\alpha}_{a2}$ of Example 1) in this case is not attacked by any argument, and thus is accepted. Therefore, the set of justified arguments in this case is $\{arg^{\gamma}_{a2}, arg^{\gamma}_{b1}, arg^{\gamma\prime}_{b1}\}$.

6. Related work

The main related work is [1], which the model presented in this work extends. It enables decentralized distributed reasoning over a distributed knowledge base in which knowledge from different knowledge bases may conflict. However, that work still lacks generality, in the sense that there are many use case scenarios that cannot be represented in its system. One kind of such scenario requires agents to share relevant knowledge when issuing a query to others. Another kind involves bindings among agents through mapping rules that are not static, as in knowledge-intensive and dynamic environments.

There is also a proposed extension to the framework that uses a partial order relation by means of a graph structure combined with the possibility of using different semantics, such as ambiguity propagation semantics and team defeat [12]. Our work takes a different approach in defining a partial relation using a function over known agents, which enables a more efficient argument strength calculation. With regard to using the different reasoning semantics proposed, our work also supports them since it impacts only the definitions of undercut and acceptable arguments, which remain unchanged in such semantics.

Other works with similar goals and approaches are peer-to-peer inference systems, such as that proposed in [2], which also provides distributed reasoning based on distributed knowledge. The works in [14,15] provide ways of resolving inconsistent knowledge between peers, and [15] specifically uses argumentation to achieve non-monotonic reasoning. However, such systems do not deal with the idea of sharing relevant context knowledge when issuing a query, in addition to other disadvantages compared to CDL, such as considering a global priority relation over the agents instead of individual preference orders for each agent, which negatively affects the dimension of perspective in contextual reasoning.

Another relevant work is distributed argumentation with defeasible logic programming [16]. However, its reasoning is not fully distributed, and it depends on a moderator agent that has part of the responsibility of constructing arguments.

Jarraya et al. [17] adopted a different approach to enable agents to reason about shared and relevant knowledge. An agent defines a goal and initiates distributed reasoning by sending all its facts to a list of observer agents, thereby initiating a bottom-up approach that starts with facts and looks for rules to apply to the facts. Their approach is more specific than that presented in this work because it defines agents with specific roles. They also did not present an argumentation-based formalism.

Rakib and Haque [18] presented a logical model for resource-bounded context-aware MAS that handles inconsistent context information using non-monotonic reasoning. Their model also allows the use of communication primitives such as Ask and Tell in the rules, which enables the simulation of the role of mapping rules. However, they did not foresee the possibility of asking or telling in a broadcast manner, which could enable the simulation of the concept of schematic rules. Moreover, their approach was not based on argumentation.

Other works on MCS [19,20] proposed resolving conflicts by means of the concept of repair prior to the effective reasoning process to maintain a conflict-free (consistent) global state. However, this may be undesirable in systems where agents may have different points of view of the environment, which can be conflicting but may be valid in certain contexts.

7. Conclusion and future work

In this work, we presented a structured argumentation framework to enable fully distributed multi-agent contextual reasoning, including the idea of focus, which consists of sharing relevant information with cooperating agents when issuing a query. We also proposed a key feature to enable the application of the framework in open, dynamic, and knowledge-intensive multi-agent environments. The framework was built on CDL, and we demonstrated how it is more general than CDL, thereby enabling the modeling of a broader range of scenarios.

Our future work will include the presentation of a distributed query-answering algorithm that efficiently implements the argumentation framework presented. This algorithm has already been defined and has an agent-based implementation, but it was not presented in this work owing to lack of space. We also intend to explicitly define other strategies for rank calculation that may better reflect different scenarios. Another interesting idea is to take advantage of the exchange of arguments between agents to enable explanation and learning.