1. Introduction

Technological development associated with the fourth industrial revolution has brought significant advances in Intelligence, Surveillance and Reconnaissance (ISR) activities in the military sector. These activities aim to understand, predict, adapt to, and exploit objects and people in complex scenarios, where many devices are connected through a mix of sensors that provide information about terrain, infrastructure, weapons, intelligence, logistics, communications, and soldier health [1]. The data can be obtained with radar, image, radio frequency, or audio sensors and processed with artificial intelligence algorithms to obtain accurate information for identifying security threats, such as drones that attackers use to carry explosives or to transport illicit or dangerous materials [2].

These applications, initially developed for the military industry, can also be used in mining, surveillance of critical infrastructure, and the protection and conservation of the environment [3,4]. Artificial intelligence provides very low-cost solutions for the recognition of radio frequency [5] and audio signals, which have been developed with different objectives, such as the detection of drones [6] or the measurement of noise pollution [3]. The study of deep learning models in environmental settings is presented here as a tool for monitoring infrastructure of environmental and material value.

The studies and tests carried out by other researchers focus on the capacity to detect natural sounds produced in urban and domestic environments. The audio sources for these detections are common data sets published on the internet [7], which contain general soundscapes but are not designed for joint application in scenarios of war, deforestation, mining, and drone flights. There are also works on each particular scenario, with multiple classification methodologies, such as principal component analysis (PCA) of acoustic components [8] or Haar filters [9].

Based on the above, the objective of this research is to propose an artificial intelligence model for the classification and identification of acoustic signals, using unconventional mechanisms [5], for environmental sounds [8] of events that represent risks to ecosystems and productive facilities. The identification of sounds associated with deforestation, armed combat, the use of drones, and fires is proposed. Sound files of individual elements and of soundstages are collected from open-access web platforms to create two databases standardized in sound format. The reading characteristics that give the best results on soundstages are then presented, varying the sampling frequency, reading time, and type of training. The sound elements for which the best response is obtained are also identified.

2. Artificial intelligence for military uses

Artificial intelligence (AI) has been widely used in the military field to automate intelligence, surveillance, and reconnaissance (ISR) activities. Information is obtained in risk environments through radar, electro-optical, or acoustic sensors, and the data are then processed with machine learning to distinguish battlefield features, identify dangerous objects, or describe targets that are difficult to access [1,4].

These information gathering, transmission, and processing activities are organized as disciplines of military intelligence. The most recognized are image intelligence (IMINT) and signal intelligence (SIGINT), which were established between 1940 and 1965. Later, in 1970, the term measurement and signature intelligence (MASINT) was coined to cover more advanced processes for detection and analysis, but it was not until 1986 that the United States classified it as a formal intelligence discipline [10].

MASINT is defined as technically derived intelligence for the purpose of detecting, locating, tracking, identifying, and describing the unique characteristic signatures of fixed or dynamic objects. MASINT capabilities group various technological activities that are classified into several sub-disciplines, the most common being: acoustic intelligence (ACOUSINT), radar intelligence (RADINT), infrared intelligence (IRINT), nuclear intelligence (NUCLINT), electro-optic intelligence (ELECTRO-OPTINT) and materials intelligence [11,12].

For the present research, the MASINT subdiscipline ACOUSINT was adopted. It uses the distinctive signatures of acoustic signals to identify objects with characteristic sounds or to detect the source of remote vibrations [12]. This technology has been widely used to detect drones in natural settings because of its acceptable versatility and precision at a low cost compared to radar or electro-optic sensors [13,14].

3. Methodology

For the automated signal processing proposal, different mathematical transformations that change the representation and domain of the information are studied: the Fast Fourier Transform (FFT), which gives the power of the signal with respect to frequency [8]; the wavelet transform, which represents a signal in terms of scales and time-limited wave fractions [15]; and the Mel Frequency Cepstral Coefficients (MFCC), which describe the behavior of the frequency dynamics [16]. Finally, the preprocessing of audio signals with the MFCC transformation is addressed, for the training, validation, and testing of the convolutional neural network model.
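As an illustration of the first of these transformations, the following minimal sketch computes the power of a signal with respect to frequency using NumPy. The function name `power_spectrum` and the 440 Hz test tone are assumptions made for the example and do not come from the study.

```python
import numpy as np

def power_spectrum(signal, sample_rate):
    """Return (frequencies in Hz, power) of a real-valued signal via the FFT."""
    spectrum = np.fft.rfft(signal)                      # one-sided FFT of a real signal
    power = (np.abs(spectrum) ** 2) / len(signal)       # power per frequency bin
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    return freqs, power

# Hypothetical test signal: a 1-second, 440 Hz tone sampled at 48 kHz.
sample_rate = 48_000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440.0 * t)

freqs, power = power_spectrum(tone, sample_rate)
peak_hz = freqs[np.argmax(power)]                       # frequency bin with the most power
print(peak_hz)
```

With one second of audio the frequency resolution is 1 Hz, so the strongest bin falls on the frequency of the tone.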

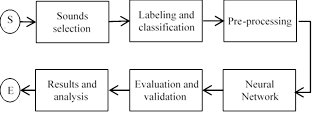

The methodological design is experimental. First, the sounds are selected, labeled, and classified. The audio signals are then preprocessed with MFCC for the training, validation, and testing of a convolutional neural network model. The results are analyzed to verify the operation of the model and to compare its efficiency with that of other conventional methods, as shown in Fig. 1.

The model used comes from the community on the Kaggle platform and was designed for the classification of environmental sounds [17] without a specific application objective. Training for the identification of threats to infrastructure is proposed using data segmented into clear and independent sounds of objects or categories, with variations. The performance tests of the model are carried out on the sound scenarios, where the accuracy of the predictions is evaluated against variations in the sampling frequency, the duration of the reading, and the type of training of the model. Finally, the sound reading configurations and the type of training that give the best classification results by sound scenario are presented.

Table 1 Sound types preselected for training

| Soundstages | Object Type |
|---|---|
| Natural environment | Tropical environment (sounds of animals like frogs and birds), rain, thunder, forest (sounds of wind and tree movements), river |
| Associated with ecosystem destruction | Forest fire, chainsaw, bulldozers, shipyard, metal chains, crane, sawmills, engines |
| Associated with terrorism or crime | Fire weapon shots, Drone/UAV, tree fall |

Source: Own.

4. Collection and tagging of sounds by scenario

The sounds are selected from the Freesound and YouTube platforms, where users publish audio with different formats and characteristics and indicate the type of use or license that applies to the data. The sounds are then searched, filtered, classified, and ordered so that soundstages can be detected with an artificial intelligence model. The soundstages are classified as shown in Table 1.

The data are structured so that all files share the same duration, number of audio channels, file format, and sample rate; in addition, the file name must be related to the sound class. The parameters shown in Table 2 are established as a template for conditioning all the stored and labeled signals. Since not all the sounds have this format, a script was written in Python 3.7 to condition the signals.
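The conditioning step can be sketched as follows. This is not the script used in the study: the helper names `to_mono` and `split_fixed`, the 5-second segment length, and the zero-padding of the last segment are assumptions chosen only to illustrate how recordings can be brought to a common number of channels and a common duration.

```python
import numpy as np

def to_mono(samples):
    """Average the channels of a (n_samples, n_channels) array; 1-D input is kept."""
    samples = np.asarray(samples, dtype=np.float64)
    return samples if samples.ndim == 1 else samples.mean(axis=1)

def split_fixed(samples, sample_rate, seconds):
    """Cut a mono signal into equal-length segments, zero-padding the last one."""
    seg_len = int(round(sample_rate * seconds))
    n_segments = max(1, -(-len(samples) // seg_len))    # ceiling division
    padded = np.zeros(n_segments * seg_len)
    padded[: len(samples)] = samples
    return padded.reshape(n_segments, seg_len)

# Hypothetical input: 12 seconds of stereo noise at 48 kHz.
rng = np.random.default_rng(0)
stereo = rng.uniform(-1.0, 1.0, size=(48_000 * 12, 2))

segments = split_fixed(to_mono(stereo), sample_rate=48_000, seconds=5)
print(segments.shape)   # three 5-second segments, the last one partly padded
```

Resampling to a common sample rate and writing the segments to a common file format would complete the conditioning; both are omitted here because they depend on the audio library chosen.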

The classification, cutting, and storage of the audio yields a total of 1447 audio files separated into 16 categories, with an average of about 90 sounds per label. The distribution is shown in Table 3, and the final report of these sounds is stored in a .csv file, which makes it possible to train, validate, and test artificial intelligence models.
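A report of this kind can be produced with the standard library alone. The sketch below assumes a hypothetical naming convention, `<category>_<index>.wav`, since the text only states that the file name is related to the sound class; the column names `file` and `label` are likewise illustrative.

```python
import csv
import io
from collections import Counter

def label_from_name(file_name):
    """Assumed convention: '<category>_<index>.wav', e.g. 'chainsaw_012.wav'."""
    stem = file_name.rsplit(".", 1)[0]      # drop the extension
    return stem.rsplit("_", 1)[0]           # drop the trailing index

def build_report(file_names):
    """Return CSV text with one 'file,label' row per audio file, plus label counts."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["file", "label"])
    counts = Counter()
    for name in sorted(file_names):
        label = label_from_name(name)
        writer.writerow([name, label])
        counts[label] += 1
    return buffer.getvalue(), counts

# Hypothetical file names, for illustration only.
names = ["drone_001.wav", "drone_002.wav", "forest_fire_001.wav", "chainsaw_001.wav"]
report, counts = build_report(names)
print(report)
print(dict(counts))
```

The per-label counts make it easy to check the class balance before training.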

Table 3 Number of audio files per category

| Category | Quantity | Category | Quantity |
|---|---|---|---|
| Drone/UAV | 178 | Chainsaw | 93 |
| Forest fire | 164 | Thunder | 76 |
| Rain | 110 | Forest | 65 |
| Tropical environment | 110 | Crane | 60 |
| Bulldozer | 98 | Sawmills | 59 |
| Fire weapon shots | 97 | Tree fall | 58 |
| River | 96 | Metal chains | 48 |
| Shipyard | 96 | Engines | 39 |

Source: Own.

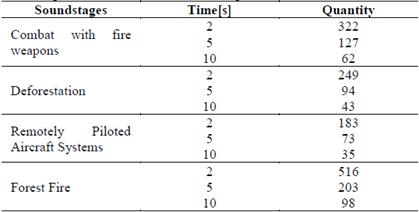

Although the data are segmented for training, validation, and testing when developing artificial intelligence models, a second audio data set is needed. The sounds divided by category are usually clean and focused only on the element in question, which makes the selection homogeneous and unrealistic. A second selection is therefore made with audio that contains auditory contamination; instead of being separated by category, each recording represents a soundstage, which allows the performance of artificial intelligence models to be tested in realistic situations. The same methodology of extraction, cutting, and preparation of sounds is followed to create an audio data set divided by soundstages, as shown in Table 4.

For this data selection, the same system of division, classification, and storage is implemented, but the duration of the fragments is a variable for testing purposes. The remaining parameters, such as the format, sampling frequency, and number of channels, stay as defined in Table 2. The stored soundstages are reported in Table 5.

5. Pre-processing

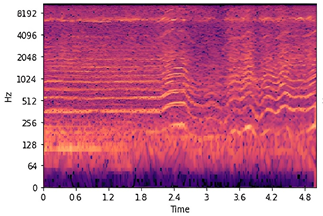

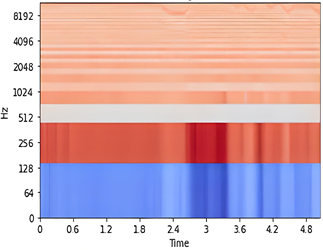

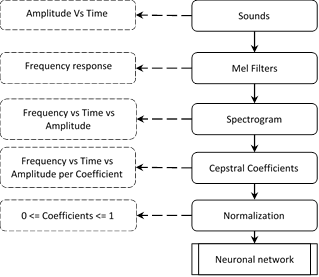

In this phase, the information is processed so that it can be fed into the training and classification model. Different transforms are combined to obtain a matrix representation of the data that the artificial intelligence model can interpret. The sound signal is transformed from the domain of intensity and time [s] to the domain of power [dB] and Mel frequency [Hz], giving a spectrogram of cepstral coefficients. This process is shown in Fig. 2.

Table 4 Soundstages and Classification categories

| Soundstages | Classification categories |
|---|---|
| Combat with fire weapons | Crane, metal chains, engines, fire weapon shots |
| Deforestation | Sawmills, chainsaw, shipyard, tree fall, crane, metal chains, engines |
| Remotely Piloted Aircraft Systems | Drone, UAV |
| Forest Fire | Chainsaw, tree fall, engines, metal chains, wind |

Source: Own.

Source: Own

Figure 2 Signal pre-processing diagram for input to the neural network in training and application.

Mel filter banks are applied to the sounds of interest to convert the perceived frequency to a logarithmic scale. The aim is to bring the way in which a computer receives the signal closer to the way in which a human being perceives it, by establishing filters over regions of interest. Once the perception of frequency has been corrected, the spectrogram of the signal can be extracted through the fast Fourier transform (FFT), showing the frequency and power of the signal over time; the signal can also be analyzed using its acoustic properties [8]. The Mel frequency cepstral coefficient spectrogram, in turn, is a transformation applied to the spectrum to identify relevant harmonics, frequencies, or pitch in a signal. It is used to eliminate high-frequency noise while maintaining the dynamics of the sounds, and it is common in speech processing and in the classification of environmental sounds [16,18,19], since it allows the behavior of the signal to be interpreted in the frequency bands where the signal has patterns of interest.
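A Mel filter bank can be sketched with NumPy as a set of triangular filters spaced evenly on the Mel scale. The conversion formula used here, m = 2595 log10(1 + f/700), is one common convention and is an assumption, as are the 40 filters and the 2048-point FFT; the text does not state which values were used in the study.

```python
import numpy as np

def hz_to_mel(hz):
    """Convert frequency in Hz to the Mel scale (one common convention)."""
    return 2595.0 * np.log10(1.0 + np.asarray(hz, dtype=np.float64) / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (np.asarray(mel, dtype=np.float64) / 2595.0) - 1.0)

def mel_filter_bank(n_filters, n_fft, sample_rate):
    """Triangular filters of shape (n_filters, n_fft // 2 + 1), evenly spaced in Mel."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    hz_points = mel_to_hz(mel_points)                    # filter edges and centers in Hz
    fft_freqs = np.linspace(0.0, sample_rate / 2.0, n_fft // 2 + 1)
    bank = np.zeros((n_filters, len(fft_freqs)))
    for i in range(n_filters):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (center - left)    # slope up to the center
        falling = (right - fft_freqs) / (right - center) # slope down from the center
        bank[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank

# Illustrative values: 40 filters, 2048-point FFT, 48 kHz sample rate.
bank = mel_filter_bank(n_filters=40, n_fft=2048, sample_rate=48_000)
print(bank.shape)
```

Multiplying this matrix by a power spectrum gives one energy value per Mel band; the filters are narrow at low frequencies and wide at high frequencies, which is what produces the approximately logarithmic resolution described above.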

In speech analysis applications, 13 to 20 MFCC coefficients are commonly used, since the interest is in identifying the mechanics of the vocal tract. For this exercise, 39 are used, since the sounds of interest (chainsaws, drones, gunshots) have characteristics that go beyond human capacity. Once the coefficients are extracted, they are normalized by standardizing the maximum and minimum values of the signals, so that the coefficients fall within a common range for training and for input to the neural network model and are easier to manipulate. An example of this process can be seen in Figs. 3 and 4.
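The normalization step can be illustrated with a min-max scaling of the coefficient matrix. This is a sketch under assumptions: the scaling is applied over the whole matrix rather than per coefficient, the target range is [0, 1], and the random matrix with 39 rows and 188 frames is only a stand-in for real MFCC output.

```python
import numpy as np

def min_max_normalize(mfcc, eps=1e-12):
    """Scale a (n_coefficients, n_frames) matrix to the range [0, 1]."""
    lo, hi = mfcc.min(), mfcc.max()
    return (mfcc - lo) / max(hi - lo, eps)   # eps guards against a constant matrix

# Stand-in for real coefficients: 39 coefficients over an arbitrary 188 frames.
rng = np.random.default_rng(1)
mfcc = rng.normal(loc=-20.0, scale=35.0, size=(39, 188))

scaled = min_max_normalize(mfcc)
print(scaled.shape, scaled.min(), scaled.max())
```

Scaling per coefficient (row by row) is an equally plausible reading of the text; the choice affects how much the first, energy-dominated coefficients weigh on the input.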

6. Neural Network

For development, the base model published on the Kaggle platform by Nikunj Dobariya in September 2020 [20] is taken as the starting point. This model was developed to analyze 5-second audio clips from the "Environmental Sound Classification 50" database [17], on which it obtains an accuracy of 85%.

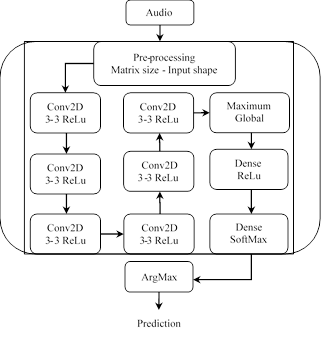

The convolutional network model published by Dobariya is illustrated in Fig. 5. After the audio signal is input and pre-processed to MFCC, the data are passed through the 6-layer convolutional neural network, where the matrix is decomposed with kernels (filters) of size 3x3 to identify the characteristics of each category in the MFCC. The activation function used for the layers of neurons is the rectified linear unit (ReLU). Once the characteristics are decomposed, the resulting information is fed to two layers of fully connected neurons that deliver the probability of a match for each category.
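To give a sense of how 3x3 kernels and fully connected layers contribute to the size of such a network, the following sketch counts trainable parameters layer by layer. The channel widths, the hidden layer of 64 neurons, and the global pooling before the fully connected layers are hypothetical; the text does not list them, so the total below is not expected to match the published model.

```python
def conv2d_params(in_channels, out_channels, kernel=(3, 3)):
    """Kernel weights plus one bias per output channel."""
    return (kernel[0] * kernel[1] * in_channels + 1) * out_channels

def dense_params(in_features, out_features):
    """Weights plus one bias per output neuron."""
    return (in_features + 1) * out_features

# Hypothetical widths: one input channel (the MFCC matrix) and six conv layers.
conv_widths = [1, 8, 8, 16, 16, 32, 32]
conv_total = sum(conv2d_params(a, b) for a, b in zip(conv_widths, conv_widths[1:]))

# Two fully connected layers, assuming global pooling leaves 32 features
# and that the output has one neuron per category (16 categories).
dense_total = dense_params(32, 64) + dense_params(64, 16)

total = conv_total + dense_total
print(conv_total, dense_total, total)
```

The count shows why small kernels keep convolutional models compact: each 3x3 layer costs only nine weights per pair of input and output channels, regardless of the size of the MFCC matrix.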

Data is entered from the category data set, separated into 3 groups as shown in Table 6, to perform training, validation, and testing of the models.

Table 6 Quantity and proportion of the groups for the model

| Group | Proportion | Quantity |
|---|---|---|
| Training | 72% | 1042 |
| Validation | 18% | 260 |
| Testing | 10% | 145 |

Source: Own.
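The proportions in Table 6 are consistent with a two-step split: 10% of the files are held out for testing and the remaining 90% are divided 80/20 into training and validation. The sketch below reproduces the group sizes under that assumption; the random shuffle, the seed, and the absence of stratification by category are choices made for the example and are not described in the text.

```python
import random

def split_dataset(items, seed=0):
    """Shuffle, hold out 10% for testing, then split the rest 80/20."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_test = round(len(items) * 0.10)
    n_val = round((len(items) - n_test) * 0.20)
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]
    return train, val, test

# 1447 placeholder indices standing in for the audio files.
train, val, test = split_dataset(range(1447))
print(len(train), len(val), len(test))
```

With categories as unbalanced as those in Table 3, a stratified split would keep the proportion of each category equal across the three groups.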

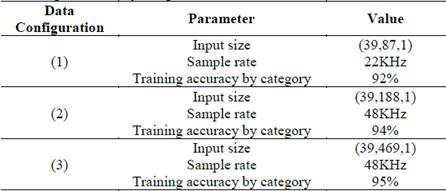

The model is trained with 3 variants of the model input, in order to determine how the time interval and the sampling frequency affect training on the category data and testing. The training is performed with data separated by categories, as shown in Table 7.

7. Results

With the distribution shown in Table 6, the accuracy of the models, which output categories, can be related to and quantified against the sound scenarios. Tests were carried out for each model under different data configurations, taking the sampling frequency, the model, and the reading time as variables; the number of successful scenario selections is compared with the total number of audio files per scenario. The most relevant results are presented in Table 8, where data configuration 2 shows the best performance in terms of the average of the accuracies over the 4 scenarios evaluated. In addition, the average time that the model takes to make a prediction is reported to compare the efficiency of the configurations.
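The metric described above, an average of per-scenario accuracies, can be sketched as follows. The scenario names and counts are made up for illustration and are not results of the study.

```python
def scenario_accuracy(results):
    """results maps scenario -> (successful selections, total audio files)."""
    per_scenario = {name: ok / total for name, (ok, total) in results.items()}
    average = sum(per_scenario.values()) / len(per_scenario)
    return per_scenario, average

def pooled_accuracy(results):
    """Accuracy over all audio files, ignoring scenario boundaries."""
    ok = sum(c for c, _ in results.values())
    total = sum(t for _, t in results.values())
    return ok / total

# Made-up counts, for illustration only.
results = {
    "combat": (30, 50),
    "deforestation": (45, 90),
    "rpas": (12, 40),
    "forest_fire": (108, 120),
}
per_scenario, average = scenario_accuracy(results)
print(per_scenario)
print(round(average, 3), round(pooled_accuracy(results), 3))
```

Averaging per scenario gives each scenario the same weight, so a scenario with many test files does not dominate the figure as it would in the pooled accuracy.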

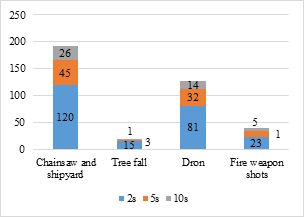

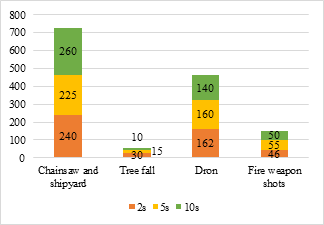

Among the selected data configurations, the differentiating factor is the effect of the reading interval on the detections. In long time intervals, environmental sounds or sounds with a greater overall presence can overshadow other events, such as the fall of a tree against the sound of a chainsaw. This case is shown in Fig. 6, where the 2-second configuration detects up to 5 times more cases than the 5-second configuration and shows 300% in detection time compared to the 10-second configuration. This is explained by the greater presence of chainsaws and chippers in the long segments, where the fall of trees is masked.

The same figure shows other critical cases, such as shots and drone flights, where the overall response of the three data configurations is similar, since the difference in the accumulated detection times (Fig. 7) is minimal. The 5-second configuration stands out in these two categories by a margin of 5% to 9%.

Another observable result is that the 2-second detection configuration is less sensitive to the loss of information, since it yields a greater number of detections, separates the events better, and accumulates the times of the categories.
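The masking effect of long reading intervals can be illustrated with simple arithmetic. The sketch below assumes a hypothetical 1.5-second event (for example, a tree fall) inside a 60-second recording and non-overlapping windows; both values are illustrative and are not taken from the data set.

```python
def windows_per_recording(recording_seconds, window_seconds):
    """Number of whole, non-overlapping windows that fit in a recording."""
    return int(recording_seconds // window_seconds)

def event_share(event_seconds, window_seconds):
    """Largest fraction of one window that a single event can occupy."""
    return min(event_seconds, window_seconds) / window_seconds

# Hypothetical 1.5 s event in a 60 s recording, for the three window lengths.
for window in (2, 5, 10):
    n_windows = windows_per_recording(60, window)
    share = event_share(1.5, window)
    print(window, n_windows, share)
```

A short event fills most of a 2-second window but only a small fraction of a 10-second one, where a continuous source such as a chainsaw occupies the rest of the window; shorter windows also give more predictions per recording.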

Table 8 Model configurations with best average results per scenario.

| Data configuration | Time [s] | Sample rate [kHz] | Average scenario accuracy | Average time per prediction [ms] |
|---|---|---|---|---|
| 2 | 2 | 48 | 57.4% | 48 |
| 2 | 5 | 48 | 59.6% | 113 |
| 2 | 10 | 48 | 62.3% | 227 |

Source: Own.

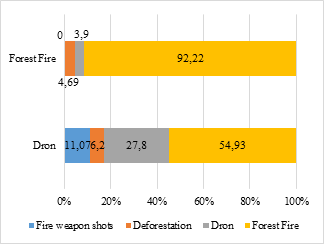

In addition, the category with the most confusion for all configurations is drone flights: only 27.8% of the cases identified by the model correspond to the correct category, while 72.2% are false detections, and of these 54.9% come from fire cases, as shown in Fig. 8. This behavior can be explained by the fact that the sound of a moving drone is identified as ambient noise in the samples and is then erroneously identified in recordings of all types. On the other hand, the category with the least confusion is fire, with an effective detection of 92%, although it must be considered that it accounts for the largest share of the test data, with 40%.

Source: Own.

Figure 8 Comparison of the percentage of detections with respect to the Drone and Forest Fire categories
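The share of correct detections among all the cases assigned to a category corresponds to the precision of that category, which can be read from a confusion matrix. The matrix below is made up for illustration; its values are not the results reported in Fig. 8.

```python
def precision_per_class(confusion, labels):
    """confusion[i][j] = audio files of true class i predicted as class j."""
    precision = {}
    for j, label in enumerate(labels):
        predicted = sum(row[j] for row in confusion)   # everything assigned to class j
        precision[label] = confusion[j][j] / predicted if predicted else 0.0
    return precision

# Made-up counts, for illustration only.
labels = ["drone", "forest_fire", "other"]
confusion = [
    [10, 2, 8],    # true drone
    [15, 80, 5],   # true forest fire
    [5, 18, 77],   # true other
]
precision = precision_per_class(confusion, labels)
print(precision)
```

In this made-up example, most of the false drone detections come from the forest fire row, which is the kind of pattern the column of a confusion matrix makes visible.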

On the other hand, the prediction speed across the test groups is a valuable factor: in no configuration does a prediction take more than 1 second, with times varying between 14 ms and 324 ms per element analyzed. Execution speed is an important aspect of a system that performs pre-processing and identification in real time. Therefore, the use of time slots between 2 and 5 seconds should be studied further in order to obtain a fast response in operation.
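Prediction time can be measured and related to the length of the audio window as in the following sketch. The function names are illustrative, the placeholder `predict` stands in for a trained model, and the latencies in the loop are the averages reported in Table 8; pre-processing time is not included.

```python
import time

def mean_latency_ms(predict, inputs):
    """Average wall-clock time per call to predict, in milliseconds."""
    inputs = list(inputs)
    start = time.perf_counter()
    for item in inputs:
        predict(item)
    return (time.perf_counter() - start) * 1000.0 / len(inputs)

def real_time_factor(latency_ms, window_seconds):
    """Processing time divided by the audio duration it covers."""
    return latency_ms / (window_seconds * 1000.0)

# Placeholder model: any callable can be timed the same way.
latency = mean_latency_ms(lambda x: x * 2, range(1000))
print(latency >= 0.0)

# Average prediction times from Table 8 for the 2, 5 and 10 second windows.
for window, latency_ms in ((2, 48), (5, 113), (10, 227)):
    print(window, round(real_time_factor(latency_ms, window), 4))
```

A factor well below 1 means that predictions finish much faster than new audio arrives, which leaves headroom for pre-processing on the same device.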

Finally, the model presented, with 54,000 parameters, is more efficient than other convolutional network architectures that have been implemented for the recognition of common environmental sounds [3,17], which have between 25,636,712 and 143,667,240 parameters, since it reported satisfactory results in accuracy and prediction speed for its size.
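The difference in size can be made concrete with a short calculation. The sketch assumes that every parameter is stored as a 32-bit float, which is common but is not stated in the text; the dictionary keys are descriptive labels only.

```python
BYTES_PER_FLOAT32 = 4   # assumption: parameters stored as 32-bit floats

def size_megabytes(n_params):
    """Approximate storage for the parameters alone, in megabytes (10^6 bytes)."""
    return n_params * BYTES_PER_FLOAT32 / 1e6

proposed = 54_000
references = {"smallest cited": 25_636_712, "largest cited": 143_667_240}

for name, n_params in references.items():
    ratio = n_params / proposed
    print(name, round(ratio), round(size_megabytes(n_params), 1))
print("proposed", round(size_megabytes(proposed), 3))
```

Under this assumption the reference architectures are hundreds to thousands of times larger than the proposed model, whose parameters occupy well under one megabyte.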

8. Discussion

The study demonstrated that the concepts of the MASINT discipline used in military ISR activities for the detection of weapons, armed vehicles, or explosives are applicable to recognizing the characteristic signatures of objects or non-war scenarios that are not covered by the activities of the ACOUSINT subdiscipline [10,12]. Threats to security and defense have shifted towards the use of typically non-warlike objects such as drones, which are already considered a threat to privacy and public safety [13,14].

In this way, the inclusion of soundstages with acoustic traces of motors, saws, cranes, or fires, as well as war objects such as firearms, expands the knowledge of these systems so that they can be applied to environmental protection, using the procedures of the MASINT military intelligence discipline to react to and prevent, more effectively, acts of deforestation, violence in protected areas, or illegal exploitation of mining deposits. The protection of the environment is a dimension of security and defense that is very relevant for armies today [21,22].

Finally, the results show that artificial intelligence with unconventional algorithms is applicable to projects related to environmental sustainability, and that there is interest in the study of military ISR methods to identify objects in complex sound scenarios [23,24]. Applying military knowledge to sustainable projects can help develop more efficient systems at a lower cost.

9. Conclusions

A convolutional neural network model, of the kind used as a tool for ISR in the military field, was proposed for the processing and classification of environmental sounds, with special interest in its use for the development of sustainable projects related to the environment, mining, and critical infrastructure. Its main advantage is its low computational cost in hardware and software: the execution time per sample does not exceed 500 ms, and the number of parameters to load is much lower than in other systems, which is an advantage for operation on processing equipment such as a Jetson Nano or Raspberry Pi.

This makes the deployment of the trained model in the field economically viable and very efficient for the protection of the environment and the sustainable exploitation of ecological and human infrastructure, as an alternative, low-cost technology. In this sense, the most convenient configuration and model for this task is the training of test 2, with time slots of 2 to 5 seconds and a sampling frequency of 48 kHz.

Finally, testing the models by operation scenario provides a perspective on operation in the field, since soundscapes are usually made up of various audio elements, as occurs in deforestation, fires, or violent acts. Field tests must therefore be carried out to evaluate the behavior of the model in the presence of noise generated by rain and wind.