High-quality academic activity requires impact channels of a similar level. Monitoring the productivity and impact of scientific output is carried out worldwide because it is a necessary input for education, funding, and public policy (Heberger, Christie, & Alkin, 2010; Vargas-Quesada & Moya-Anegón, 2007). One approach to assessing the impact and dissemination of academic output is the use of bibliometric indicators, which allow the course of a discipline or area to be analyzed over time through publication patterns. These methods have been accepted in multiple areas of knowledge as valid approaches to evaluating research (Garfield, Malin, & Small, 1978; Krampen, 2008; Long, Plucker, Yu, Ding, & Kaufman, 2014; Zurita, Merigó, & Lobos-Ossandón, 2016).

Several aspects beyond the number of articles published can be analyzed with this bibliometric approach. For example, knowledge accumulated in a given area tends to be closely related to knowledge produced in other disciplines, and technological developments require the integration of multiple sources. Accordingly, productivity analyses using a bibliometric approach allow areas of greater or lesser proximity to be identified and, thus, reveal the interdisciplinarity of knowledge (Heberger et al., 2010); similar analyses have also been conducted on cooperation between authors and on types of research (Garcia, López-López, Acevedo-Triana, & Nogueira Pereira, 2017; Robayo-Castro, Rico, Hurtado-Parrado, & Ortega, 2016). This cooperation can be understood as joint efforts towards the common goal of scientific productivity (Garcia, López-López, Acevedo-Triana, & Bucher-Maluschke, 2016). One form of cooperation is publication co-authorship, which has been used to assess collaboration between researchers and differs from other forms of cooperation, such as joint research projects, the development of regional associations, the creation of academic events, and scholar exchanges (Garcia, Acevedo-Triana, & López-López, 2014). Overall, bibliometric indicators are the input for analyses at multiple levels (collaboration, productivity, or internationalization), all of which are relevant to educational institutions, governments, collegiate units, and researchers.

Despite agreement on the need for periodic productivity assessments, there is an ongoing debate about their outcomes and purpose (Butler, 2008; Hicks, 1999; Moed, 2008). The discussion goes beyond limitations related to the scope, data, or methodology used; it also concerns whether measurements can be compared across areas, how these assessments influence institutions and faculty, and whether bibliometric information actually reflects how research is used and what impact it has, given that publications of other kinds, which are typically not indexed in traditional repositories, are left out (Bar-Ilan, 2008; Davidson et al., 2014; Thelwall, Haustein, Larivière, & Sugimoto, 2013). Part of the problem is that analyses draw on information from heterogeneous sources and are, therefore, inherently limited in scope. The impact of Latin American publications has frequently been underestimated for multiple reasons (Alperin et al., 2015), but especially because traditional analyses only use journals indexed in large databases, and the main indicators considered are based solely on citation information. Fortunately, a promising field in information studies now assesses the dissemination of knowledge not only through citations in indexed journals, but also through academic social networks (e.g., altmetric.com). Results from these analyses have shown an increased circulation of knowledge and, more generally, support the notion that this comprehensive approach is relevant and should therefore be considered in the assessment of academic productivity (Alperin, 2015).

In psychology, the study of several fields of application has been supplemented with bibliometric information, used as an input for reformulating historical content, identifying optimal communication channels, and analyzing production and research trends. The outcomes of this approach have been used to guide policy on research and productivity (Allik, 2013; Mori & Nakayama, 2013; Navarrete-Cortés et al., 2010; Nederhof, Zwaan, De Bruin, & Dekker, 1989; Nederhof, 2006; Schui & Krampen, 2010; Yeung, Goto, & Leung, 2017).

Scientific productivity in Colombia has increased over the past few decades, creating a context in which assessing scientific output across different areas is increasingly needed (Alperin et al., 2015; López-López, Silva, García-Cepero, Aguilar-Bustamante, & Aguado, 2010; Salazar-Acosta, Lucio-Arias, López-López, & Aguado-López, 2013). Given its rapid growth in productivity over recent decades, psychology is an area of special relevance (López-López, García-Cepero, et al., 2010; López-López, Silva, et al., 2010). Part of this growth seems to be explained by growing interest in, and improvement of, editorial processes. The emergence of academic and editorial networks, which have increased the exchange of experiences and the possibility of overcoming common difficulties, also seems to have contributed (López-López et al., 2010).

Colombian psychology journals have emerged under the Open Access (OA) model and have been supported mostly by educational institutions rather than by academic organizations (Alperin et al., 2015; López-López, Silva, et al., 2010; Van Noorden, 2012a). These journals were created without explicit reflection on why the OA model became the standard for Colombian psychology publications. They were developed on the assumption that the OA model would improve access to content without the intervention of commercial publishers and would address the need to disseminate local research without imposing payment barriers (Suber, 2015). It is worth noting that these journals publish high-quality content, a result of their double-blind peer-review processes, international editorial committees, and compliance with the high standards that international databases impose for inclusion (Alperin et al., 2015). This model also challenges the traditional paradigm of knowledge access and the role of publishers, not only in terms of indexing but also regarding visibility. The "mega-journals" have driven the OA movement forward (Aguado-López, Becerril-García, & Aguilar Bustamante, 2016; Björk, 2015) by developing a high-quality OA model that aims to counter the control that academic elites exert over some top-tier journals that follow the paid-access model. Several high-quality OA outlets with correspondingly high citation and visibility have emerged (e.g., PLOS ONE, BioMed Central, and the Frontiers journals), inspired by the debate on increasing access to information (Laakso et al., 2011; Piwowar et al., 2018).
Although OA has been useful in promoting global coverage and reach, it has recently been associated with cases of dubious quality; however, these isolated cases are not representative of the general status of the OA model, which is currently estimated to cover 30% of world production (Laakso et al., 2011; Piwowar, 2013; Piwowar et al., 2018). In high-productivity Latin American countries such as Brazil, the journals with the best reputation and quality follow the OA model, which suggests that strengthening this type of journal is a worthwhile effort (Neto, Willinsky, & Alperin, 2016). Several journals in Colombia have followed this model.

There have been previous efforts to analyze the increasing productivity of Colombian psychology journals (Guerrero & Jaraba, 2009; Morales, Jaraba-Barrios, Guerrero-Castro, & López-López, 2012; Quevedo-Blasco & López-López, 2011). These studies identified different transformation periods in recent decades. Only some journals have survived these changes to date, owing to their efforts to adjust their editorial, communication, collaboration, and impact practices to international standards (Chi & Young, 2013; Koch, 1992; Leydesdorff, 2004). The observed increases in productivity, coupled with the disappearance of several journals, suggest not only an increase in the number of articles submitted and published, but also a push towards higher-quality publications. These analyses also reported an increase in collaboration indicators.

Because several years have elapsed since the last relevant analyses were conducted (2008), this paper provides an updated assessment for the period 2000-2016. We analyzed the productivity and impact of Colombian psychology journals using multiple international indexing systems (Scopus and Journal Scholar Metrics) and regional ones (Redalyc and Scielo), and compared them across the period of observation. By showing the evolution and success of the journals indexed in systems that report citation information, we expect to guide the decisions of other journals currently working towards inclusion in these systems.

Method

Materials and Procedure

We selected 13 Colombian psychology journals (Table 2) for analysis using the following criteria: (a) the journal was active between 2000 and 2016; (b) the journal's contents for that timeframe were accessible; (c) the journal was covered by at least two of the following databases: Scopus, Scielo, Journal Scholar Metrics, or Redalyc; and (d) a regional (Scielo) or international (Scopus or Journal Scholar Metrics) citation indicator was available for the journal. All the Colombian journals analyzed in this paper use a double-blind peer-review model. Full-text articles published during the selected period were downloaded from the journals' webpages or repositories. Each paper was independently classified by one of the authors as theoretical, empirical, or bibliometric. Book reviews, editorials, and conference papers were excluded from the analyses. For quality control, a different author reassessed a random sample of 20% of all papers, yielding 95% inter-rater agreement. The definitions of the indicators obtained and analyzed in the present study are presented in Table 1 (including equations where applicable). Both indicators and analyses followed those described by Salas et al. (2017). For productivity, the unit of analysis was the paper (article). The number of authors and their affiliations were used to calculate cooperation and collaboration indices. Papers with authors from different countries were counted towards each country's production. The thematic content of the articles was not considered because of the diversity of the fields covered.
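The quality-control step can be sketched as follows. This is a minimal illustration assuming that "inter-rater agreement" means simple percent agreement (the share of reassessed papers given the same category by both raters); the function names, the fixed random seed, and the dictionary layout are hypothetical and not taken from the study.

```python
import random

CATEGORIES = ("theoretical", "empirical", "bibliometric")


def draw_reassessment_sample(paper_ids, fraction=0.20, seed=0):
    """Randomly select a fraction of papers for reassessment by a second rater."""
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    ids = list(paper_ids)
    k = round(len(ids) * fraction)
    return rng.sample(ids, k)


def percent_agreement(rater_a, rater_b, sample):
    """Percentage of sampled papers that both raters assigned to the same category.

    rater_a, rater_b: dicts mapping paper id -> category label
    """
    matches = sum(1 for pid in sample if rater_a[pid] == rater_b[pid])
    return 100.0 * matches / len(sample)
```

With 3,915 papers, a 20% sample contains 783 papers; percent agreement does not correct for chance agreement, which is why chance-corrected statistics such as Cohen's kappa are sometimes reported alongside it.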

The number of citations is the standard measure of a publication's impact (Buela-Casal, Medina, Viedma, Godoy, Lozano, & Torres, 2004; Buela-Casal & López, 2005). However, because this measure is volatile and heterogeneous, citation information was examined across the available sources (Lluch, 2005), i.e., citations reported in Scielo, Scopus, and Journal Scholar Metrics (http://www.journal-scholar-metrics.infoec3.es). Beyond impact assessment, citation also serves as an indicator of cohesion in academic communities through so-called citation networks (Chi & Young, 2013). In the present study, citation was analyzed using the information provided by the different databases.

Indicators solely provided by Scopus, such as the SCImago Journal Rank (SJR) and the Source Normalized Impact per Paper (SNIP), were used to compare the relevant journals.

We used the total and normalized H-impact indicators (excluding self-citations) provided by Journal Scholar Metrics for journals indexed only in the regional databases Scielo or Redalyc, and not in Scopus. These indicators can also reveal details about the trajectory of these publications and allow comparisons with journals included in Scopus. This approach has not been implemented in previous studies, but it has the potential to provide a more standardized picture of the journals reviewed here.

Results

Productivity

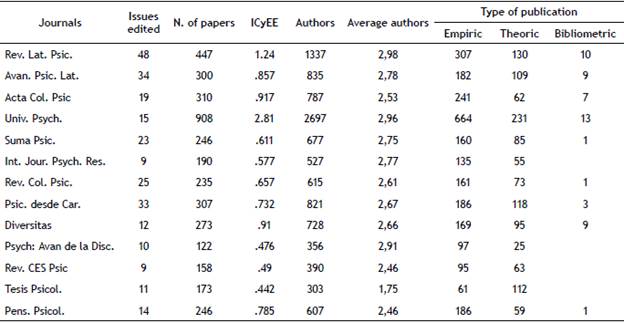

Table 2 presents the journals' productivity across the period considered (2000-2016). The data show an increase in the number of papers published in recent years and a strengthening of their overall impact. A total of 3,915 papers written by 10,687 authors were examined. These articles represent 19% of all psychology papers indexed in Redalyc (20,587) and 11.5% of the Colombian papers indexed in the same database, which indicates the weight of Colombian journals as channels for publishing psychology research.

Table 2 Results of productivity of the analyzed journals.

Note: Rev. Lat. Psic. (Revista Latinoamericana de Psicología); Avan. Psic. Lat. (Avances en Psicología Latinoamericana); Acta Col. Psic. (Acta Colombiana de Psicología); Univ. Psych. (Universitas Psychologica); Suma Psic. (Suma Psicológica); Int. Jour. Psych. Res. (International Journal of Psychological Research); Rev. Col. Psic. (Revista Colombiana de Psicología); Psic. desde Car. (Psicología desde el Caribe); Diversitas (Diversitas: Perspectivas en Psicología); Psych: Avan. de la Disc. (Psychologia: Avances de la Disciplina); Rev. CES Psic. (Revista CES de Psicología); Tesis Psicol. (Tesis Psicológica); Pens. Psicol. (Pensamiento Psicológico); ICyEE (Índice de Contribución y Esfuerzo Editorial).

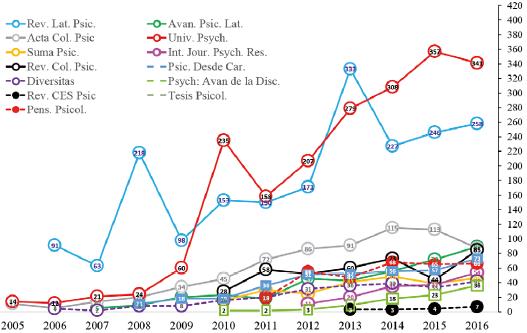

It is worth noting that, in general, the journals publish more empirical papers (research reporting direct or indirect observation or experience) than theoretical papers (non-experimental work that combines and incorporates existing theories), which suggests a preference for using journals as a channel to disseminate empirical research outcomes in psychology. Bibliometric publications (i.e., manuscripts based on empirical research that analyzes publications, research outputs, and/or researchers) also had an important presence in the journals reviewed; they generally aim to assess the production and impact of the discipline. Figure 1 shows the number of papers published per year across the period of observation (2000-2016) and per journal. These data indicate a dramatic rise in the number of publications, from 60 papers in 2000 to more than 370 in 2016, a more than sixfold increase. Even though many journals were founded after 2000, or their contents were unavailable for the entire period of observation, the data indicate an increase in articles published for all journals, regardless of their trajectory.

Figure 1 Number of papers published in Colombian journals between 2000 and 2016. Panel (a) number of documents per year; panel (b) number of papers per journal.

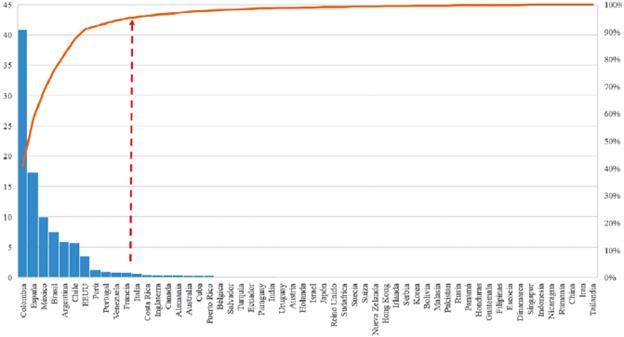

Figure 2 shows the geographical distribution of the reviewed papers. Eleven countries (Colombia, Spain, Mexico, Brazil, Argentina, Chile, USA, Peru, Portugal, Venezuela, and France) concentrate 95% of the production of psychology publications. To a lesser extent, some non-Hispanic countries contribute mainly in English.
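The country distribution combines the full-counting rule stated in the Method (a paper with authors from several countries counts towards each of them) with a cumulative cut-off like the 95% marker in Figure 2. The sketch below is a hypothetical illustration of that logic: the input format (one list of author countries per paper) and the choice to compute the 95% share over the fully counted total are assumptions, not the study's documented procedure.

```python
from collections import Counter


def full_count_by_country(papers):
    """Full counting: each paper counts once for every distinct country among its authors.

    papers: iterable of lists, one list of author countries per paper
    """
    counts = Counter()
    for countries in papers:
        counts.update(set(countries))  # de-duplicate countries within a paper
    return counts


def countries_covering(counts, share=0.95):
    """Smallest list of top countries whose cumulative count reaches `share` of the total."""
    total = sum(counts.values())
    selected, cumulative = [], 0
    for country, n in counts.most_common():
        selected.append(country)
        cumulative += n
        if cumulative >= share * total:
            break
    return selected
```

Because of full counting, the country totals can exceed the number of papers, so a share defined this way is a share of country contributions rather than of papers.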

Figure 2 Distribution of authors' country of origin for the papers published in the Colombian journals during 2008-2016. Note: the red arrow indicates 95% of all items. The data per journal can be found in Supplementary Table 1 (Table S1).

Regarding the number of papers, some journals publish special issues on specific subjects, with specialized academic units directing and editing their contents. As discussed later, these issues could have a specific effect on citations because they focus on a single topic.

Finally, Redalyc offers an Index of Contribution and Editorial Effort (ICyEE), which relates the number of papers a journal publishes to the average number of articles published by journals in its discipline. As shown in Table 2, only two journals score above 1.0, the average for psychology journals: Revista Latinoamericana de Psicología (1.24) and Universitas Psychologica (2.41); the latter publishes more than twice the average number of papers and thus reflects more than twice the average editorial effort. Journals with close-to-average ICyEE scores include Avances en Psicología Latinoamericana, Diversitas, and Acta Colombiana de Psicología. The journal with the lowest score is Tesis Psicológica, whose index is below half the average (.442). Notably, this growth and contribution to the discipline have been achieved without changes in the journals' economic models, by optimizing editorial processes and economic resources instead, with the aim of increasing dissemination, collaboration, and impact.
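The ratio described above can be sketched as a journal's paper count divided by the mean paper count of journals in the same discipline, so that 1.0 marks the discipline average. This is a simplified reading of the verbal definition, not Redalyc's published formula; the function name and the toy journals "A", "B", and "C" are hypothetical, and Redalyc may define the reference period or journal set differently.

```python
def contribution_indices(papers_by_journal):
    """Ratio of each journal's paper count to the mean count across the discipline.

    papers_by_journal: dict mapping journal name -> number of papers in the period
    A value of 1.0 means the journal publishes exactly the discipline average.
    """
    mean_output = sum(papers_by_journal.values()) / len(papers_by_journal)
    return {journal: n / mean_output for journal, n in papers_by_journal.items()}


# Toy example: three hypothetical journals with a mean output of 100 papers.
print(contribution_indices({"A": 100, "B": 50, "C": 150}))
# {'A': 1.0, 'B': 0.5, 'C': 1.5}
```

Read this way, a score of 2.41 means publishing about 2.4 times the average output, and a score below .5 means less than half of it.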

Collaboration

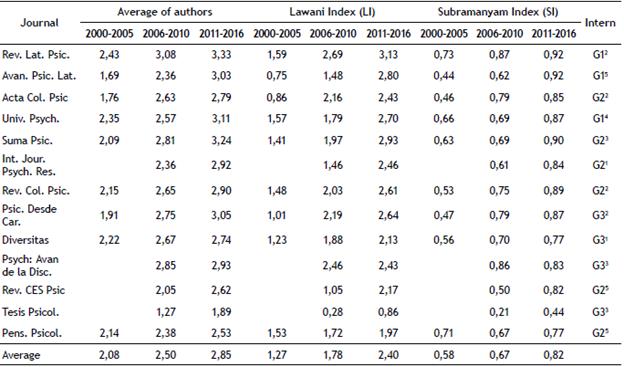

Data on collaboration are presented in Table 3, which indicates an overall growing trend across time in the related indicators (average number of authors, Lawani Index, and Subramanyam Index). The average number of authors per paper grew from 2.5 in 2006-2010 to 2.8 in 2011-2016, and some journals averaged more than 3 authors.

Although the average number of authors and the Lawani (LI) and Subramanyam (SI) indices all show the evolution of collaboration, the LI calculates the weighted average of authors per article in each period, which makes it a more accurate index than the simple average of authors per article. The SI is the proportion of papers with two or more authors; that is, it indicates the percentage of papers written in collaboration. In the first years of the analyzed period (2000-2005), about 58% of the articles were written by two or more authors; between 2006 and 2010 this indicator increased to 67%, and the value for 2011-2016 is reported in Table 3. This growth suggests a change in the research process. The fact that this change seems to be occurring not only in Colombia but across the region signals a change in the culture of research and publication.
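Both collaboration indices can be sketched from a list of author counts per paper, assuming their commonly cited textbook forms: Lawani's collaborative index CI = Σ j·f_j / N, where f_j is the number of papers with j authors and N the number of papers, and Subramanyam's degree of collaboration C = N_m / (N_m + N_s), where N_m and N_s are the numbers of multi-authored and single-authored papers. The exact equations used in this study are those in Table 1; the function names below are hypothetical.

```python
from collections import Counter


def lawani_index(author_counts):
    """Collaborative index: sum over j of j * f_j, divided by the number of papers.

    author_counts: list with the number of authors of each paper
    f_j is the number of papers that have exactly j authors.
    """
    freq = Counter(author_counts)
    n_papers = sum(freq.values())
    return sum(j * f for j, f in freq.items()) / n_papers


def subramanyam_index(author_counts):
    """Degree of collaboration: multi-authored papers / (multi- + single-authored papers)."""
    multi = sum(1 for a in author_counts if a >= 2)
    single = sum(1 for a in author_counts if a == 1)
    return multi / (multi + single)


# Toy period with five papers having 1, 2, 3, 2, and 1 authors.
period = [1, 2, 3, 2, 1]
print(lawani_index(period))       # 1.8
print(subramanyam_index(period))  # 0.6, i.e., 60% of papers written in collaboration
```

Computing both indices separately for each period (2000-2005, 2006-2010, 2011-2016) gives the kind of time series summarized in Table 3.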

Finally, Redalyc's internationalization index (Table 3) shows that the highest-ranked journals in Colombia, that is, those with more citations and coverage, have a higher level of internationalization. However, journals not covered by Scopus (Revista CES de Psicología and Pensamiento Psicológico) showed higher internationalization scores than some indexed journals such as Suma Psicológica or Revista Colombiana de Psicología, which could suggest that indexing reflects administrative editorial work more than the impact, quality, or visibility of the journals. This finding could be related to the number of citations generated in different databases, which would suggest that indexation has an impact on regional ranking.

Although the countries and institutions of affiliation involved in these collaborations were not analyzed, Figure 2 shows that Colombian journals are mainly a regional communication channel, because most authors are affiliated with institutions in Ibero-American countries. In this sense, Redalyc's effort to promote collaboration indicators, both between institutions and between countries, is worth highlighting.

Impact

One of the objectives of this work was to assess the impact of the chosen publications. Traditionally, the number of citations and the indices derived from it have helped identify the impact a publication may have on a given academic community. However, citation is only one form of journal impact, and more detailed analyses with more inputs may provide a broader assessment. We agree with most criticisms of citation information as an indicator of quality and impact; therefore, we combined several citation systems to control for single-source bias. We also included indices provided by Scopus that were developed as supplementary sources of information, namely CiteScore, SJR, and SNIP.

We pooled citation information from three main sources (see Figures 3, 4, and 5). These were Scopus, an international indexing database that covers seven Colombian psychology journals (Universitas Psychologica, Revista Latinoamericana de Psicología, Acta Colombiana de Psicología, Avances en Psicología Latinoamericana, Revista Colombiana de Psicología, Suma Psicológica, and International Journal of Psychological Research); Journal Scholar Metrics, an international indicator based on data extracted from Google Scholar Metrics that compiles information beyond Scopus-indexed journals (covering 13 Colombian journals); and Scielo citation indices, which offer regional information and cover six Colombian journals.

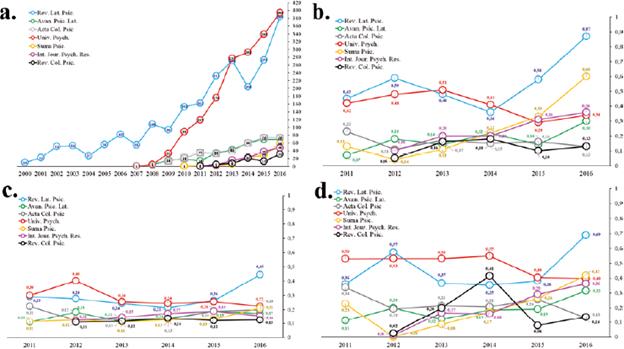

Figure 3 Impact indicators of Colombian journals in Scopus. Panel (a) number of citations per year; panel (b) Scopus CiteScore, which relates the citations received in a year to the documents published in the three immediately preceding years; panel (c) SCImago Journal Rank (SJR) of each journal, which evaluates its impact relative to other journals; panel (d) Source Normalized Impact per Paper (SNIP), which measures citation impact relative to the citation potential of the subject area in which the journal is classified. Values close to 1 mean that the impact of the journal is consistent with the development of its area.

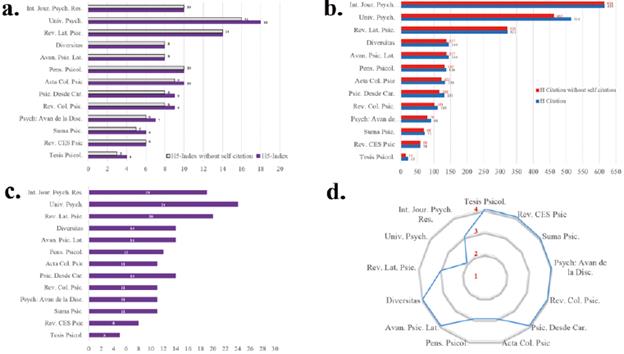

Figure 4 Impact indicators of Colombian journals in Journal Scholar Metrics. Panel (a) h5-index for the period 2010-2014, i.e., the largest number h such that h papers published in that period have received at least h citations each; panel (b) h-citation, the sum of citations received by the papers that make up the journal's h5-index; panel (c) h-median, the median number of citations of the papers included in the h5-index; panel (d) quartile of the journal in the Journal Scholar Metrics classification. Journals in higher quartiles (Q1 being the highest) have greater prestige in the social science assessment.

Figure 3a shows the annual number of citations per journal registered by Scopus. These data indicate that Revista Latinoamericana de Psicología and Universitas Psychologica stand out from the other journals analyzed, especially during the last part of the observation period (2010-2016), with an accelerated increase in yearly citations that reached nearly 400 in 2016. The increase is steeper for Universitas Psychologica than for Revista Latinoamericana de Psicología, which is all the more notable given the former's more recent foundation (2007) and suggests that Universitas has grown faster. The five remaining journals behave very similarly: their yearly citations grow but do not exceed 80 by the end of the observation period (2016). Figure 3b shows the CiteScore of each journal across the period of observation, which compares yearly citations with 3-year blocks of published documents. These data show that the two most cited journals, Revista Latinoamericana de Psicología and Universitas Psychologica, behave differently. Revista Latinoamericana has progressively increased its CiteScore, which was close to 1.0 by the end of the observation period. This suggests that, in a given year, the journal receives about as many citations as the number of documents it published in the previous three years. This could reflect a stable number of articles published per year, or a reduction in that number; both a lower number of articles and the journal's more than 50-year history affect the citation-to-article ratio. The opposite pattern is observed in Universitas Psychologica, whose CiteScore values decrease across the last years of the observation period. This results from a steady increase in the number of articles each year, in line with the journal's strategy of growing into a broader communication channel.
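The interplay between citations and output in the CiteScore discussion can be illustrated with a small sketch of the three-year definition given in the Figure 3 caption: citations received in year Y by documents published in the three preceding years, divided by the number of documents published in those years. Scopus has since revised its calculation, so this reflects only the three-year version described here; the function name, the dictionary layout, and the toy numbers are hypothetical.

```python
def citescore(citations, docs_per_year, year, window=3):
    """Three-year CiteScore-style ratio for `year`.

    citations: dict mapping (citing_year, publication_year) -> citation count
    docs_per_year: dict mapping publication_year -> number of documents published
    """
    pub_years = range(year - window, year)  # e.g. 2013, 2014, 2015 for 2016
    cites = sum(citations.get((year, y), 0) for y in pub_years)
    docs = sum(docs_per_year.get(y, 0) for y in pub_years)
    return cites / docs if docs else 0.0


# Same 180 citations in 2016, two hypothetical output levels for 2013-2015.
cites_2016 = {(2016, 2013): 60, (2016, 2014): 70, (2016, 2015): 50}
print(citescore(cites_2016, {2013: 50, 2014: 60, 2015: 70}, 2016))    # 1.0
print(citescore(cites_2016, {2013: 100, 2014: 120, 2015: 140}, 2016))  # 0.5
```

The second call shows the mechanism described for Universitas Psychologica: if the number of published documents grows faster than the citations they attract, the ratio falls even though absolute citations stay the same or rise.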

Data on the SJR are shown in Figure 3c. In the last year of observation (2016), six journals fall in a similar range (between .1 and .3: Avances en Psicología Latinoamericana, Acta Colombiana de Psicología, Universitas Psychologica, Suma Psicológica, International Journal of Psychological Research, and Revista Colombiana de Psicología), whereas only Revista Latinoamericana de Psicología is clearly at a higher level (between .4 and .5). Contrasting this finding with the previous indicators (citations per year and CiteScore) suggests that Scopus-indexed journals have larger citation networks.

Finally, data on SNIP, the potential citation impact index, are shown in Figure 3d. These data separate Revista Latinoamericana, whose profile resembles that of leading journals in the region (Salas, Ponce, Méndez-Bustos, Vega-Arce, Pérez, López-López, & Cárcamo-Vásquez, 2017), from the rest: four are mid-level journals (Avances en Psicología Latinoamericana, Universitas Psychologica, Suma Psicológica, and International Journal of Psychological Research), and Acta Colombiana de Psicología and Revista Colombiana de Psicología are low-level journals. Overall, these SNIP data show how Revista Latinoamericana de Psicología has grown in recent years, whereas several indicators for the other journals have remained nearly unchanged, apart from growth in the number of citations.

Figure 4 shows the Journal Scholar Metrics indicators. As Figure 4a indicates, Universitas Psychologica has the highest 5-year h-index, suggesting an important position outside Scopus; the corresponding median number of citations is shown in Figure 4c. As shown in Figure 4b, International Journal of Psychological Research has the highest h-citation index, followed by Universitas Psychologica and Revista Latinoamericana de Psicología. This result suggests that the standing of some journals can be measured outside the Scopus system. It is also striking that, setting aside these three journals, there is no difference in impact between journals that are in Scopus and those that are not. This again suggests that indexing does not ensure greater impact; rather, it reflects regional placement, which in turn reflects international placement.
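The three Journal Scholar Metrics indicators defined in the Figure 4 caption can be computed from a single list of citation counts. The sketch below assumes the input holds the citation counts of a journal's papers published in the five-year window (self-citations already removed, if desired); the function names are hypothetical, and the real service works from Google Scholar data with its own coverage rules.

```python
from statistics import median


def h_core(citations):
    """Return the h-index and the citation counts of the papers in the h-core."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, c in enumerate(ranked, start=1):
        if c >= rank:  # the paper at this rank still has at least `rank` citations
            h = rank
        else:
            break
    return h, ranked[:h]


def h5_indicators(citations):
    """h5-index, h-citation (sum over the h-core), and h-median (median over the h-core)."""
    h, core = h_core(citations)
    return {
        "h5_index": h,
        "h5_citations": sum(core),
        "h5_median": median(core) if core else 0,
    }


# Six hypothetical papers from the window with these citation counts.
print(h5_indicators([10, 8, 5, 4, 3, 0]))
# {'h5_index': 4, 'h5_citations': 27, 'h5_median': 6.5}
```

The example shows why the indicators can rank journals differently: two journals with the same h5-index may differ widely in h-citation and h-median depending on how heavily their most cited papers are cited.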

Data from Journal Scholar Metrics for the social sciences (Figure 4d) show that only Universitas Psychologica is located in a high quartile (Q2). Four other journals are in Q3 (International Journal of Psychological Research, Acta Colombiana de Psicología, Pensamiento Psicológico, and Revista Latinoamericana de Psicología), and the remaining eight are in Q4 (Tesis Psicológica, Revista CES de Psicología, Suma Psicológica, Psychologia: Avances de la Disciplina, Revista Colombiana de Psicología, Psicología desde el Caribe, Avances en Psicología Latinoamericana, and Diversitas). These quartiles differ from those established by Scopus and Web of Science, which illustrates how indicators differ depending on the source. However, the similarities between the two quartile classifications are related to the number of documents, citations, and the contribution each publication makes to the growth of a given area. The discrepancy indicates the need to gather several measures to overcome database biases. An important correction in these indicators is the exclusion of self-citations (Pérez-Acosta & Parra Alfonso, 2018); although this correction may raise some reservations, it does not substantially change the positions of the journals (Figures 4a and 4b).

Lastly, regional impact was evaluated through Scielo and the number of citations it reports (see Figure 5). Although Universitas Psychologica and Revista Latinoamericana de Psicología showed high citation levels, with a 100-citation difference between them in 2016, the remaining journals show very similar citation levels, whether or not they are included in Scopus. This result could reflect similar regional and international impact across journals. The fact that this relationship is not reflected in the journals indexed in Scopus suggests that Colombian journals' visibility in Scopus is determined by the position of Latin American journals, with no relative difference between those indexed in Scopus and those that are not.

The contribution and editorial effort index that Redalyc calculates supplements the regional information that has just been analyzed. This index represents the contribution of a journal to its area, and thus can be taken as an impact indicator. Several of the regional indicators suggest that journals in Colombia lag behind, but two have an above-average level, which indicates a leading role in the development of psychology at the regional level and supports the use of these indicators to generate growth strategies (Garfield, 2003).

Discussion

We analyzed the development and growth of Colombian Psychology journals between 2000 and 2016. Overall, we found increased author collaboration rates and visibility during the period of observation, together with a growing impact and positioning of these journals in Latin American and international psychology communities. The comparison between the chosen databases shows that the behavior of the reviewed journals is similar in Scopus and Scielo, but not in Google Scholar Metrics.

Regarding the documents published during the period analyzed, beyond the observed growth, an improvement in Colombian journals' editorial policies can be inferred. Their presence in international databases suggests an overall implementation of better editorial practices and publication processes. As previously noted, all the analyzed journals except Revista Latinoamericana de Psicología are open access (OA), and their publishing costs are covered by the institutions that own them. Changes in the journals' output throughout the examined period may be explained by changes in editorial practices linked to OA and/or a transition to digital publication. Another consequence of this economic model is an increase in papers written in languages other than Spanish, which can be measured indirectly through the authors' countries of origin.

In terms of collaboration, the patterns of the authors-per-paper indicator and the scores on the Lawani and Subramanyam indices are similar to those reported in other studies; namely, collaboration tended to increase over time (Lawani, 1986; Polanco-Carrasco, Gallegos, Salas, & López-López, 2017; Salas et al., 2017; Subramanyam, 1983). Kliegl and Bates (2011) indicate that collaboration levels in the top psychology journals peaked in the 1990s. Although the present observation window began in the 2000s, there is a similar increasing trend in collaboration, which has been pointed out in other studies and is consistent with previous reports on cooperation in Colombian psychology journals (Ávila-Toscano, Marenco-Escuderos, & Madariaga Orozco, 2013; Garcia et al., 2016, 2017; Guerrero & Jaraba, 2009; López-López, de Moya Anegón, Acevedo-Triana, Garcia, & Silva, 2015). Although the data are not presented in this study, this collaboration has been found to be changing, moving from intra-institutional to national and international collaborations; such collaboration is necessary to optimize various kinds of resources. This increase in the number of authors may, in turn, reflect policies by academic institutions that promote collaborative research and facilitate access to funding when proposals come from several research groups or institutions. In Colombia, for example, COLCIENCIAS (the state entity that provides the most funding for science and technology projects) scores proposals higher when they are formulated jointly, combining researchers, research groups, and/or participating institutions.

We reported collaboration levels that are consistent with the growth of regional productivity and, in turn, aligned with those based on traditional databases (Buela-Casal & López, 2005; Vera-Villarroel, López-López, Lillo, Silva, López-López, & Silva, 2011). The observed collaboration is not limited to the co-authorship of papers, which has been the standard bibliometric measure; it could also result from the establishment of collaborative efforts with a longer-term focus (Guerrero Bote, Olmeda-Gómez, & de Moya-Anegón, 2013; López-López, de Moya Anegón, Acevedo-Triana, Garcia, & Silva, 2015).

An aspect that indirectly reflects author collaboration, which was not considered here, is the publication of special issues, in contrast to regular issues. In a recent study, Salas et al. (2017) showed that special issues perform better than regular issues in terms of impact, measured by citations and publication times, but have fewer authors per article. Since some Colombian journals have made special issues part of their editorial policy, it seems plausible that this practice has had a double effect: greater cooperation on the same topic, but lower collaboration rates per article.

Psychology journals in Colombia have emerged under the OA model; thus, authors do not pay publication fees, with the exception of Revista Latinoamericana de Psicología. Consequently, editorial teams have had to do more with limited economic resources, a challenge partially solved by devising new tools aimed at decreasing response times (Neto et al., 2016; Piwowar et al., 2018). Research has demonstrated that the OA model has been successful in terms of dissemination and quality, despite opposition from large publishers that have dominated the field and restricted access to content. However, publishers with international influence have slowly begun to integrate OA models (Alperin & Rozemblum, 2017; Laakso et al., 2011; Mukherjee, 2009; Neto et al., 2016; Piwowar et al., 2018).

There have been substantial changes in the patterns of production, dissemination, and impact of academic publications (Gallegos, Berra, Benito, & López-López, 2014). Most of these changes relate to the way in which informal dissemination of publications is conducted today (e.g., dissertations, theses, non-academic publications, or social media; Alperin, 2015). Some studies propose that psychology, because of its nature, requires increasingly broader co-citation systems than other fields (Chi & Young, 2013), suggesting the need for enhancements in the way impact is measured across disciplines (Krampen, 2010).

Citation in psychology depends heavily on dissemination, and its contemporary relevance is related to efforts to address the problem of replicability and reproducibility of psychological phenomena; as discussed elsewhere, citation practices can become more beneficial and less dogmatic (Acevedo-Triana, López-López, & Cardenas, 2014; Begley & Ioannidis, 2015; Open Science Collaboration, 2015). The use of citation as a measure of research dissemination and of the cohesion of academic communities is consistent with other measurement strategies, such as those based on cooperation (Alperin & Rozemblum, 2017). Furthermore, across academic, research, and professional systems, citation has been used to establish incentive systems for researchers (Gingras, Larivière, Macaluso, & Robitaille, 2008).

It is worth noting that we did not analyze the so-called top-cited papers, or top articles, which generate a chain of influence among researchers and define what is known as mainstream psychology (Bornmann, Wagner, & Leydesdorff, 2017). Some of the debates on citation as an indicator of quality, dissemination, and impact can be reviewed elsewhere, and we share some of the criticisms raised there, although they are beyond the scope of this study (Delgado López-Cózar, Robinson-García, & Torres-Salinas, 2012; Fetscherin & Heinrich, 2015; Garfield, 2007; Garfield et al., 1978; Nicolaisen, 2009; Seglen, 1997).

New efforts are currently being undertaken to increase the quality and visibility of publications through rankings that follow diverse methodologies. These efforts have resulted from acknowledging the need to review and update measurement and impact systems for publications (Aguado López et al., 2013; López-López, 2014, 2015; Zou & Peterson, 2016), and to optimize indicators that allow different types of journals to be compared using a homogeneous measurement system (Romero-Torres, Acosta-Moreno, & Tejada-Gómez, 2013). Likewise, these efforts are aimed at optimizing research resources, shifting from a paradigm of individual knowledge-possessing researchers to one in which cooperation and collaborative networks determine the level and quality of research (Duffy, Jadidian, Webster, & Sandell, 2011). In this regard, Alperin and Rozemblum (2017) have pointed out the need to assess Latin American journals within their historical, teleological, and conceptual region-specific contexts as a way to foster technological and scientific development.

One of the explicit assumptions of this study was the recognition of the diversity of academic activity in psychology and its subareas (clinical, social, organizational, and educational psychology, and neuropsychology). Although our analysis did not intend to homogenize this activity (Krampen, 2008; Krampen, von Eye, & Schui, 2011), the bibliometric data presented here could be used to improve editorial practices that aim to better position and disseminate subdisciplines (Lluch, 2005).

Additionally, the use of Google Scholar Metrics (Ayllón Millán, Ruiz-Pérez, & Delgado López-Cózar, 2013) in the present study aimed to overcome potential biases in data extraction and analyses based on the Scopus and Web of Science databases, which is the traditional approach (Gorraiz & Schloegl, 2008; Hernández-González, Sans-Rosell, Jové-Deltell, & Reverter-Masia, 2016; Salvador-Oliván & Agustín-Lacruz, 2015).

One possible limitation of this study is that journal inclusion was based on the availability of impact indicators from at least two indexing systems. This decision was based on the notion that a lack of standardized impact indicators across all journals would have precluded comparisons among them; instead, new arbitrary categories would have been needed. Although we acknowledge that these indicators are not indispensable, avoiding their use entails the cost of assessing impact without widely accepted indicators. Accordingly, there is a need to develop alternative approaches to assess impact via emerging academic and social networks, options that have been underestimated (Alperin, 2015).

Conclusions

Psychology journals in Colombia are a reference point for publications across Latin America in terms of dissemination and impact. Coverage in international databases does not ensure greater regional visibility; instead, it optimizes growth in terms of journal networking and co-citation. An increase in productivity has also increased the pressure on the journals' editorial teams, which has led to improvements in response times and quality. An increase in the number of documents per journal seems to be a feature of most of the journals. Analyses of journals' productivity, collaboration, and impact should draw on data and samples from different sources to avoid relying on partial information. Although some of the reviewed journals seem to have higher scores across the different indicators we analyzed, it is evident that, depending on the indicator or the database used, journals may change their position relative to the others (de Araújo & Sardinha, 2011).