Introduction

Education systems have changed dramatically over the past 20 years. The growing technological orientation of science, the influx of information, and the influence of economics on scientific development have changed not only institutions but also ways of thinking. This shift is reflected in new teaching and assessment methods, which stem from a better understanding of the differences in how children and adults learn.

Performance assessment should be a dynamic, systematic, and structured process that involves identifying the assessment objectives, selecting and using multiple tools and instruments according to those objectives, and applying the actions derived from this process to optimize and guide learning.1,2

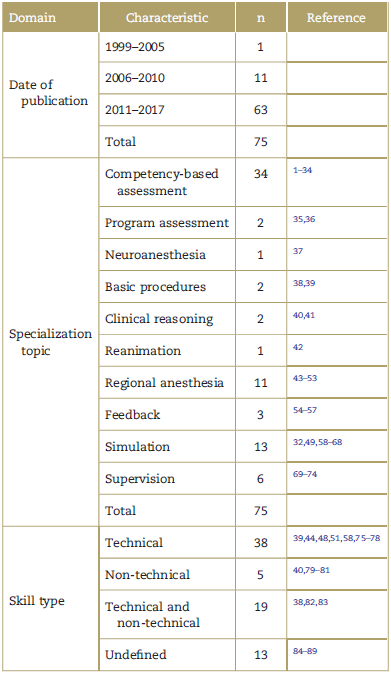

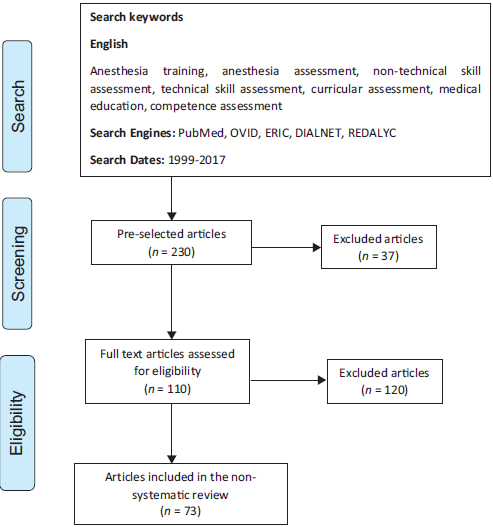

A literature search covering 1999 to 2017 was conducted on the assessment of anesthesiology students' performance. It sought to describe the theoretical and pedagogical foundations, educational principles, assessment tools, and implementation strategies of this process from the perspective of programmatic assessment and assessment for learning in this practical knowledge area. A total of 110 articles were reviewed, of which 73 were considered relevant for the review (Table 1, Fig. 1).

This article presents the most relevant results of this narrative (non-systematic) literature review. It first explains how the concept of competency has modified assessment in anesthesiology over the past two decades, through a brief description of the domains and learning theories applied in the specialty. It then describes the assessment instruments and systems currently recommended for assessing the performance of anesthesiology graduate students.

Anesthesiology assessment

Over their professional lives, anesthesiologists develop a number of complex skills that must be learned during training and honed with practice. Teachers have a responsibility to know which skills to teach, how to teach them, when to delegate responsibilities, and when a resident is able to deal with the real world in unsupervised conditions.58

Some authors propose working from the classification developed by Gaba et al,59 based on the concept of "situation awareness", which describes three basic abilities that anesthesiologists should develop during training for conscious decision-making: interpretation of subtle signals, interpretation and management of evolving situations, and application of special knowledge.58,59,83

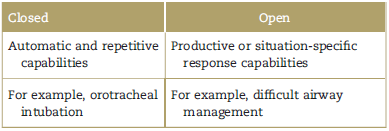

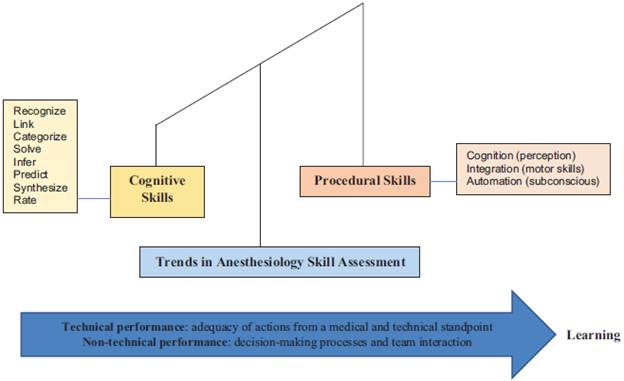

Gaba et al classify the competencies in which anesthesiologists should be trained into technical and non-technical skills.6,7,58,59 The term "technical skills" refers to the execution of actions based on medical knowledge and a technical perspective, focused on the control of the body and thought (Table 2).38 The most studied are orotracheal intubation, vascular catheterization, regional anesthesia, crisis management, pain management, patient assessment, and critical care management.60,71

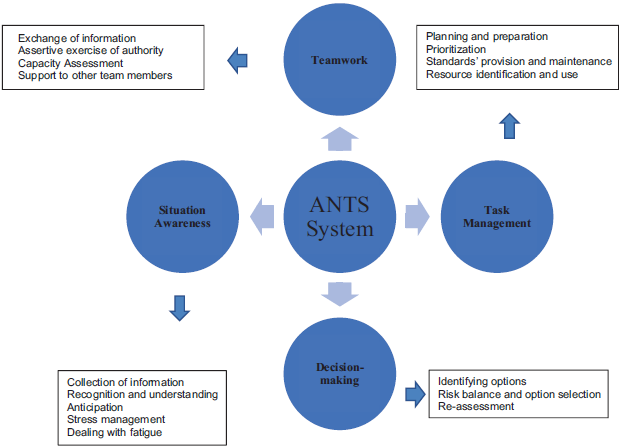

The concept of non-technical skills refers to the development of cognitive and social skills and personal resources that enable safe and efficient task performance.6,80 The acquisition of these types of skills40 is what decreases the possibility of error and adverse events in patient care7 (Fig. 2).

Source: Adapted from non-technical skills for anaesthetists: developing and applying ANTS.80 Authorized by Rhona Flin.

Figure 2 Non-technical skills based on the ANTS system (Anaesthetists' non-technical skills).

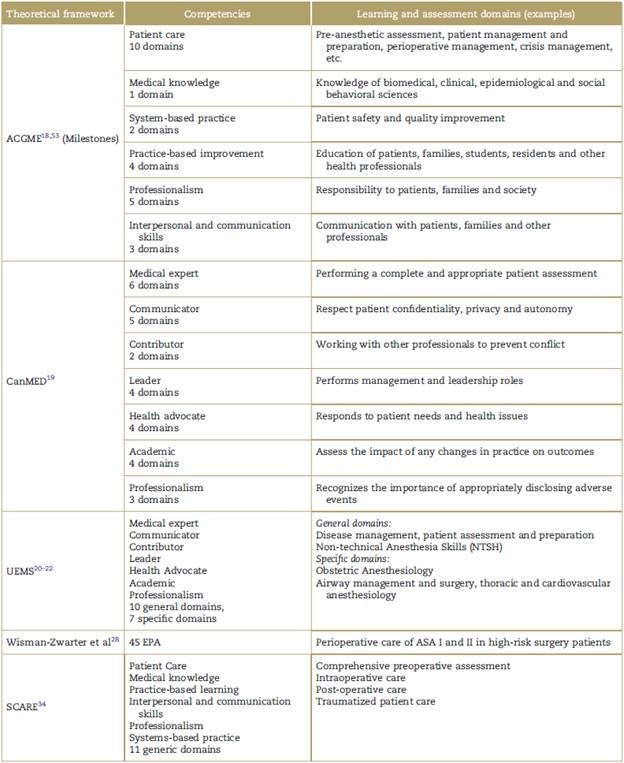

Currently, there are multiple theoretical frameworks focused on the application of different competency-based models (ACGME, CanMEDS, Union of European Medical Specialists [UEMS], SCARE, etc.), which have reached different levels of development and research (Table 3).18-22,30 The most current vision is perhaps the approach based on entrustable professional activities, advanced by Ten Cate since 2010 and still under in-depth study in this area of medicine.27-29

Figure 2 labels (recovered from the image): exchange of information; assertive exercise of authority; capacity assessment; support to other team members.

How is it assessed in anesthesiology?

Purpose of the assessment

For years, assessment in anesthesia has focused on summative competency assessment related to clinical practice, patient interaction, and critical situation analysis, often at the end of rotations. Current proposals instead emphasize real-time, sequential, and progressive assessment of the learning process, the individualization of learning, and the central role of feedback within this process.4-6

Content of the assessment

The assessment of technical and non-technical performance should carry equal weight when forming a judgment.59,70,75 Traditionally, assessment in anesthesia has been limited to theoretical knowledge tests as the main source of information, coupled with unstructured direct observation of daily work and isolated logs of information without feedback, focused on technical skill acquisition (Fig. 3).

A study by Ross et al found that most assessments were related to the "patient care" and "medical knowledge" competencies (patient care, anesthetic plan and behavior [35%], followed by use and interpretation of monitoring and equipment [8.5%]). A further 10.2% were related to practice-based learning and improvement, most commonly self-directed learning (6.8%), and 9.7% were related to the systems-based practice competency.11

Assessment tools

Although the educational literature supports the usefulness of multiple tools to assess performance, habit leads to using a single tool to define performance (Global Rotation Assessment and multiple-choice tests).4 This type of assessment suffers from known limitations: inadequate use and interpretation of scales, subjective performance assessments, and the "halo" effect, where the result is determined by what is known to have occurred in the past.4-6

Despite the interest of the European Society of Anaesthesiology (the UEMS/European Board of Anaesthesia) in harmonizing assessment and certification tools for anesthesia programs in Europe,20-22,24 a recent study in the European Union10 found that the assessment and certification processes for anesthesia specialist training were diverse. In many countries the traditional time-based learning model remains in use, with an average duration of 5 years (range 2.75-7). Competency-based programs used a greater number of assessment tools (mean 9.1 [SD 2.97] vs. 7.0 [SD 1.97]; p = 0.03). The most frequently mentioned tools were direct clinical observation, feedback, oral questions and/or multiple-choice tests, procedure logs, and portfolios. Most countries had a certification process at the national level.

Some competency-based anesthesia programs, such as that of the University of Ottawa in Canada,7 suggest simplified assessment tools like those used in the oral exams of the Royal College of Physicians and Surgeons of Canada, which serve to evaluate residents' medical knowledge and critical thinking associated with questions intended to guide their learning.

Simulation-based assessment is perhaps one of the most evidence-based tools to acquire competencies in the management of simulated intraoperative events; however, further studies are needed to determine its validity in terms of clinical performance and knowledge transfer.60,62

Some studies focused on measuring the effectiveness and validity of methods such as Script, OSCE, Mini-CEX, and DOPS, among others, have shown their usefulness for assessing graduate anesthesiology students, but at a higher cost.4-6,65

In parallel with the difficulties of applying these "novel" assessment methods within anesthesia practice, Tetzlaff demonstrates the virtues of problem-based assessment for the acquisition and assessment of both technical and non-technical competencies at a more reasonable cost.4-6

To summarize, anesthesiology assessment is characterized in most cases by a gap between what must be evaluated and what is ultimately evaluated, with greater weight given to the assessment of theoretical knowledge, procedural skills, and clinical judgment when assessing residents. This prevents a proper assessment of the competency level reached by the student, because the assessment is not comprehensive.

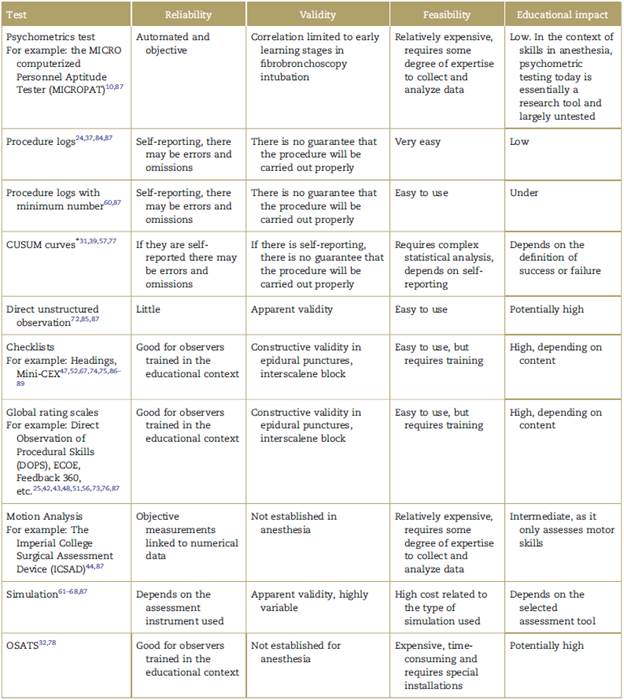

The advent of multiple assessment instruments designed under the precept of "evaluating to learn" and the assessment usefulness formula proposed by Van der Vleuten et al36 show that anesthesiology has a significant gap between the application of such instruments in specific teaching situations and competency-based assessment in this specialty10-17,25-32,37-46,56,57,60-67,71-78,82-89 (Table 4).

Table 4 Anesthesia assessment instruments according to the Van der Vleuten Equation.

* CUSUM (cumulative sum) curves, or cumulative learning curves. CUSUM charts model the success rate in performing a task over time. They take into account the assessment method's tolerance for type 1 and type 2 errors and, for the skill being evaluated, the acceptable and unacceptable probabilities of failure. In anesthesiology, CUSUM charts have been used not only to assess psychomotor learning but also to describe its evolution over time in both trained and untrained individuals. The most frequently assessed procedures are orotracheal intubation (OTI), vascular catheterization, and regional anesthesia.39

Source: Authors.
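The CUSUM description in the note to Table 4 can be illustrated with a short calculation. The review gives no formula, so the following is only a minimal sketch that assumes the log-likelihood-ratio formulation commonly used for procedural learning curves. The function names (`cusum_params`, `cusum_curve`) and the example rates (10% acceptable and 20% unacceptable failure, with type 1 and type 2 error probabilities of 0.10) are illustrative assumptions and are not taken from the reviewed studies.

```python
import math
from typing import Iterable, List, NamedTuple


class CusumParams(NamedTuple):
    s: float   # amount subtracted from the running sum after each success
    h0: float  # lower decision limit (negative)
    h1: float  # upper decision limit (positive)


def cusum_params(p0: float, p1: float, alpha: float, beta: float) -> CusumParams:
    """Derive CUSUM constants (assumed formulation, not from the review).

    p0: acceptable failure rate, p1: unacceptable failure rate (p1 > p0),
    alpha: type 1 error probability, beta: type 2 error probability.
    """
    P = math.log(p1 / p0)
    Q = math.log((1 - p0) / (1 - p1))
    a = math.log((1 - beta) / alpha)
    b = math.log((1 - alpha) / beta)
    return CusumParams(s=Q / (P + Q), h0=-b / (P + Q), h1=a / (P + Q))


def cusum_curve(failures: Iterable[bool], params: CusumParams) -> List[float]:
    """Running CUSUM: add (1 - s) after a failure, subtract s after a success."""
    total = 0.0
    curve = []
    for failed in failures:
        total += (1 - params.s) if failed else -params.s
        curve.append(total)
    return curve


# Hypothetical sequence of attempts by one trainee (True = failed attempt).
params = cusum_params(p0=0.10, p1=0.20, alpha=0.10, beta=0.10)
attempts = [True, False, False, True, False, False, False, False]
curve = cusum_curve(attempts, params)
```

Under these assumptions, a curve that trends downward reflects a failure rate at or below the acceptable level, whereas a sustained rise reflects a failure rate closer to the unacceptable level; `h0` and `h1` are the decision limits against which such excursions are usually compared.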

The need to assess anesthesiology residents in the clinical setting is evident; however, there is no consensus on which strategies to use, let alone on which is best, to assess their performance and learning. Although assessment tends to focus on the "patient care" and "medical knowledge" competencies, there is great interest in tools and instruments for other competencies within the ACGME and CanMEDS theoretical frameworks. For example, to assess non-technical skills, the University of Aberdeen in Scotland designed the Anaesthetists' Non-Technical Skills (ANTS) tool, currently incorporated by the UK's Royal College of Anaesthetists for the routine assessment of anesthesia residents and as a possible national selection tool for future anesthesiologists.80,87

The development of an appropriate programmatic assessment system must start from the fact that no single assessment method or tool is intrinsically superior or sufficient to assess all competencies. Regardless of the proposed curricular model, programs should therefore ensure the design and implementation of assessment methods that are consistent with the curricular philosophy and aligned with their priorities and learning objectives.

Conclusion

The analysis of assessment from its educational impact and historical development indicates that the way assessment is carried out substantially influences the change in students' learning styles; hence the importance of not making assessment an isolated measure of student performance.

Competency is content- or context-specific, and therefore more than one method or measurement, appropriate to the learning level, is required to assess it.13 This highlights the importance of an assessment program that includes, in a structured manner and in line with the curriculum philosophy, the use of multiple instruments to obtain the greatest amount of data and attributes related to student performance.

It is easier to recognize a competency when it has been developed than when it is absent,33 so it is important to assess all aspects of training, particularly in areas where procedural skill acquisition appears to be of most importance and less attention is paid to the acquisition of the trainees' other professional skills.

Programs and teachers have the responsibility to define the complex competencies and skills to be learned35,36 and how to teach and evaluate them, to recognize when to delegate responsibilities and when the resident can face the real world in unsupervised conditions.

Every day there are more resources to turn assessment into a transformative tool for learning. Today there are multiple competency measurement instruments based on the traditional Miller pyramid, which make it possible to assess both technical and non-technical skills based on residents' process and progress, and to apply the often-discussed concept of student individualization more broadly.13,33,90

Although there is still a long way to go in the area of anesthesia, there is great concern for perfecting and studying the impact of other types of tools and instruments in specific scenarios of the specialty. Curricular reforms, a change of vision and the professionalization of the medical discipline have expanded the room for improvement in the teaching area, as well as the application of new assessment strategies and instruments that could be positive and increase the likelihood of "significant learning" in anesthesiology residents.

Ethical responsibilities

The article is based on and follows the "Scientific, technical and administrative standards for health research" established in Resolution 8430 of 1993 of the Ministry of Health of the Republic of Colombia. The published study was deemed low-risk research, which required written informed consent because the study used private documents, as well as opinions and personal data, the use of which may cause psychological and/or social changes or modifications in human behavior.
