I. Introduction

Ocular imaging has evolved continuously and is a useful tool in the clinical care of patients with retinal diseases. Over the last few decades, different imaging techniques have provided very detailed descriptions of several retinal diseases. The different ocular imaging modalities provide high-resolution information about anatomical and functional changes in the retina [1].

In addition, ocular images are essential for the prognosis, diagnosis, and follow-up of patients with retinal diseases. Currently, two modalities commonly used by ophthalmologists are fundus photography (FP) and optical coherence tomography (OCT). FP produces a 2D representation of the 3D semitransparent retinal tissues, projected onto the image plane using reflected light. OCT, on the other hand, uses low-coherence light interferometry to create a detailed image of the retinal and choroidal layers [2]. Both are widely used for the detection and treatment of diabetes-related eye diseases, such as diabetic retinopathy (DR) and diabetic macular edema (DME).

DME and DR are complications responsible for an estimated 37 million cases of blindness worldwide; they are also a major cause of vision loss among people of working age [3].

Around the world, the budget dedicated to vision-related diseases has increased exponentially in recent years. In addition, growing life expectancy increases the demand for eye care services, pushing health care systems to bring adequate care to rural and remote populations [4].

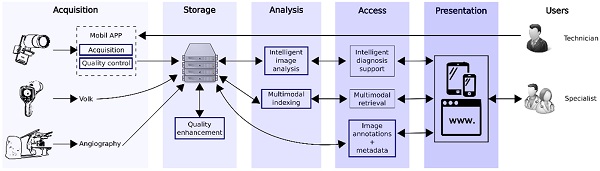

Deep learning-based methods for automatic analysis of eye images have proven to be a valuable tool to support medical decision making [5, 6, 7, 8]. Moreover, the synergy of teleophthalmology and deep learning algorithms is considered a solution that offers an appropriate and efficient alternative, especially in diseases of the retina, where images are useful for diagnosis and follow-up procedures [9]. This article presents the general architecture of a system based on deep learning techniques, called SOPHIA, for the diagnosis of eye diseases. SOPHIA comprises five blocks: acquisition, storage, analysis, access, and presentation. The system supports different types of acquisition devices, in particular portable and low-cost devices based on a conventional smartphone.

The article is organized as follows: Section II describes the general architecture of the proposed system. Results are presented in Section III. Discussion, conclusions, and future work are covered in Section IV. Finally, Section V contains the acknowledgments and funding of the study.

II. Methodology

SOPHIA (System for OPHthalmic image acquisition, transmission, and Intelligent Analysis) is a deep learning-based system for managing ophthalmic medical images. Its general architecture corresponds to a picture archiving and communication system (PACS), i.e. a system that provides storage and access to medical images [10]. However, SOPHIA's design is driven by particular objectives and constraints. First, the solution must be low cost, relying on free software tools, open-source code, and low hardware requirements. Second, it must support images from both conventional ophthalmic imaging devices and low-cost acquisition devices (3D printing). Third, access to images should support different mechanisms, from conventional text search of image metadata to retrieval based on visual content. Finally, it must provide an interface that supports different types of users and platforms (both a web-based and a mobile front-end).

The overall SOPHIA architecture is depicted in Figure 1. The architecture is organized in five different blocks: acquisition, storage, analysis, access, and presentation.
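The block ordering can be illustrated with a minimal sketch. Only the five block names and their order come from the text; the `BlockPipeline` class, the record fields, and the example stages are hypothetical and do not describe the actual SOPHIA implementation.

```python
from typing import Callable, Dict, List

# Block names and their order are taken from the text; everything else is hypothetical.
BLOCKS: List[str] = ["acquisition", "storage", "analysis", "access", "presentation"]

Stage = Callable[[dict], dict]


class BlockPipeline:
    """Runs registered stages in the fixed block order."""

    def __init__(self) -> None:
        self._stages: Dict[str, Stage] = {}

    def register(self, block: str, stage: Stage) -> None:
        if block not in BLOCKS:
            raise ValueError(f"unknown block: {block}")
        self._stages[block] = stage

    def run(self, record: dict) -> dict:
        record = dict(record)  # work on a copy so the caller's dict is untouched
        record["trace"] = []
        for block in BLOCKS:
            stage = self._stages.get(block)
            if stage is not None:
                record = stage(record)
                record["trace"].append(block)
        return record


pipeline = BlockPipeline()
pipeline.register("storage", lambda r: {**r, "stored": True})
pipeline.register("acquisition", lambda r: {**r, "image_id": "img-001"})
result = pipeline.run({"device": "smartphone"})
print(result["trace"])  # blocks run in architecture order, not registration order
```

Keeping the order in a single list means a stage can be swapped (for example, a different acquisition device) without touching the other blocks.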

A. Acquisition

The main static devices used in ophthalmology facilities come from the following brands: Zeiss, Optovue, Canon, Topcon, and Heidelberg. These devices are limited by their high cost, their lack of portability, and the manufacturers' restrictions on post-acquisition image analysis. On the other hand, some fundus cameras are portable and allow easy acquisition and subsequent analysis, but they are considerably expensive. The most widely used include the Volk Pictor Plus, Horus Scope, and Welch Allyn RetinaVue 100 Imager [10].

B. Storage

The storage block corresponds to a cloud database that stores images and metadata. In addition, images acquired with mobile devices are evaluated with automatic methods that correct illumination, improve contrast, and enhance edges according to clinical criteria.
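As an illustration of what a contrast improvement step can look like, the sketch below applies a linear min-max contrast stretch to a grayscale image stored as nested lists. This is a deliberately simple stand-in chosen for the example; the text does not specify which enhancement methods SOPHIA uses, and the function name `stretch_contrast` is an assumption.

```python
def stretch_contrast(image, low=0, high=255):
    """Linearly rescale the pixel intensities of a grayscale image to [low, high]."""
    flat = [pixel for row in image for pixel in row]
    darkest, brightest = min(flat), max(flat)
    if brightest == darkest:
        # A perfectly flat image has no contrast to stretch; return a copy.
        return [row[:] for row in image]
    scale = (high - low) / (brightest - darkest)
    return [[round(low + (pixel - darkest) * scale) for pixel in row] for row in image]


# A dim 2x2 image whose intensities only span 50..100.
dim_image = [[50, 60], [70, 100]]
print(stretch_contrast(dim_image))  # [[0, 51], [102, 255]]
```

Real fundus images would normally be handled per channel with an image library; nested lists are used here only to keep the example dependency-free.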

C. Analysis

This block uses methods for two main purposes: diagnostic support and visual/textual content analysis to improve image retrieval [11, 12, 13, 14]. The intelligent image analysis module includes different models of machine learning and deep learning aimed at specific disease diagnosis. The multimodal indexing module includes models that allow the visual content of the image, and the textual content of the metadata to be analyzed jointly to extract useful patterns for content-based image information retrieval.
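A minimal sketch of joint visual and textual retrieval is given below. It assumes that each image is already described by a visual feature vector and a textual feature vector, fuses them by plain concatenation, and ranks stored images by cosine similarity. The fusion strategy, the function names, and the toy vectors are assumptions made for illustration, not the indexing module described above.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def joint_vector(visual, textual):
    """Fuse both modalities by concatenation (the simplest possible fusion)."""
    return list(visual) + list(textual)


def retrieve(query, index, top_k=3):
    """Return the ids of the top_k indexed images most similar to the query vector."""
    scored = [(cosine(query, vector), image_id) for image_id, vector in index.items()]
    scored.sort(reverse=True)
    return [image_id for _, image_id in scored[:top_k]]


# Hypothetical toy index: two visual features followed by two textual features.
index = {
    "img_a": joint_vector([0.9, 0.1], [1.0, 0.0]),
    "img_b": joint_vector([0.1, 0.9], [0.0, 1.0]),
    "img_c": joint_vector([0.8, 0.2], [0.0, 1.0]),
}
query = joint_vector([0.9, 0.1], [1.0, 0.0])
print(retrieve(query, index, top_k=2))
```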

D. Access

The access block provides several modules that implement different system functionalities. The intelligent diagnostic support stage provides additional interpretable clinical information for disease prediction. The retrieval stage, in turn, uses algorithms to recover clinically meaningful information from large medical databases in support of diagnosis and decision making. Finally, the image annotation and metadata editing stages allow new clinical data, as well as local and global characteristics, to be added to the image dataset.

III. Results

This section presents the main results obtained for quality assessment, DR, DME, and clinical findings detection. The databases, clinical criteria, and results obtained were validated by professionals from the Fundación Oftalmológica Nacional.

A. Automatic Evaluation of Ophthalmic Image Quality

The automatic quality assessment was developed using the eye fundus image set provided by the California Healthcare Foundation and Kaggle for the detection of diabetic retinopathy [15]. Experts labeled the images from this database for a binary problem (acceptable quality versus rejected) [16] and for a multi-class problem (good, usable, and poor quality) [17].

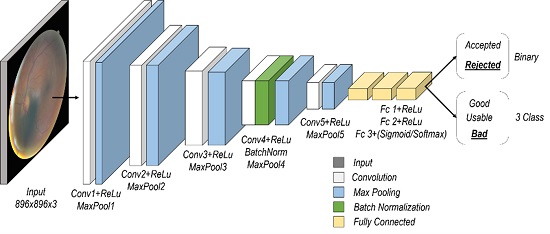

The architecture of the proposed model contains a series of 5 blocks of convolutional and max-pooling layers with 16, 32, 64, 64, and 64 filters and kernel sizes of 11x11, 9x9, 7x7, 6x6, and 6x6, respectively. These are followed by three dense layers: the first two have 256 and 64 neurons, and the last has as many neurons as there are classes, as presented in Figure 2.
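To give a sense of the size of the convolutional part, the following sketch counts the trainable parameters of the five convolutional layers from the filter counts and kernel sizes listed above. It assumes a 3-channel (RGB) input and one bias per filter, neither of which is stated in the text. The dense layers are left out because their size depends on the input resolution, which is not given.

```python
FILTERS = [16, 32, 64, 64, 64]   # from the text
KERNELS = [11, 9, 7, 6, 6]       # square kernel sizes, from the text
INPUT_CHANNELS = 3               # assumption: RGB fundus images


def conv_params(kernel, in_channels, out_channels):
    """Weights (kernel x kernel x in x out) plus one bias per output filter."""
    return kernel * kernel * in_channels * out_channels + out_channels


def count_conv_params(filters, kernels, in_channels):
    """Per-layer parameter counts; max-pooling layers add no parameters."""
    counts = []
    for out_channels, kernel in zip(filters, kernels):
        counts.append(conv_params(kernel, in_channels, out_channels))
        in_channels = out_channels  # the next layer consumes this layer's filters
    return counts


per_layer = count_conv_params(FILTERS, KERNELS, INPUT_CHANNELS)
print(per_layer)       # [5824, 41504, 100416, 147520, 147520]
print(sum(per_layer))  # 442784
```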

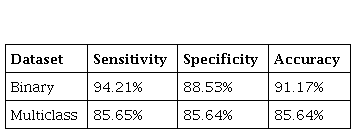

The results obtained on the test sets of these databases are presented in Table 1.

B. Models for Diabetic Retinopathy Detection

Models for diabetic retinopathy detection were developed using two datasets. The first is the Kaggle challenge dataset, which consists of about 88,000 fundus images graded numerically: 0 for healthy individuals and 1-4 for mild, moderate, severe, and proliferative DR, respectively [15]. The second is Messidor-2 [18], with 1748 eye fundus images from a French research program and binary labels for referable and non-referable DR.

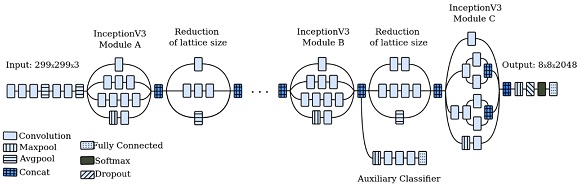

The InceptionV3 architecture was trained and fine-tuned using the pre-trained weights from the ImageNet dataset [20], as shown in Figure 3.

The model uses a low learning rate (LR) to perform fine-tuning with a particular partition of the Kaggle public dataset. Each image was associated with a label of 0 for healthy or non-referable patients, and 1 for patients with any DR or a referable diagnosis. Initially, features are extracted using InceptionV3 pre-trained on the ImageNet dataset. These features are used as input to the classification model, on which a systematic exploration was performed to determine the best hyperparameters; in our case, LR = 1 × 10⁻³ and a batch size of 32. Finally, the pre-trained weights of the last two blocks of the InceptionV3 architecture were fine-tuned for 300 epochs using stochastic gradient descent (SGD) as the optimization algorithm, with LR = 1 × 10⁻⁵, a momentum of 0.9, categorical cross-entropy as the loss, and a batch size of 32. With this configuration, an AUC of 0.92, a sensitivity of 89.74%, a specificity of 92.44%, and an accuracy of 90.10% were obtained on the Messidor-2 dataset.
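The labeling rule and the reported metrics can be made concrete with a short sketch. It maps a DR severity grade to the binary label described above (0 for healthy, 1 for any DR) and computes sensitivity, specificity, and accuracy from predicted labels. The function names and the toy grades and predictions are illustrative assumptions; they are not the study's data.

```python
def binarize_grade(grade):
    """Map a DR severity grade to a binary label: 0 = healthy, 1 = any DR."""
    return 0 if grade == 0 else 1


def binary_metrics(y_true, y_pred):
    """Sensitivity, specificity, and accuracy for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "sensitivity": tp / (tp + fn) if (tp + fn) else 0.0,  # true positive rate
        "specificity": tn / (tn + fp) if (tn + fp) else 0.0,  # true negative rate
        "accuracy": (tp + tn) / len(pairs) if pairs else 0.0,
    }


# Toy example with made-up grades and predictions.
grades = [0, 2, 0, 4, 1, 0]
y_true = [binarize_grade(g) for g in grades]
y_pred = [0, 1, 1, 1, 0, 0]
metrics = binary_metrics(y_true, y_pred)
print(y_true)   # [0, 1, 0, 1, 1, 0]
print(metrics)
```

Sensitivity and specificity are reported separately because screening datasets are usually imbalanced, so accuracy alone can hide a poor detection rate for diseased eyes.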

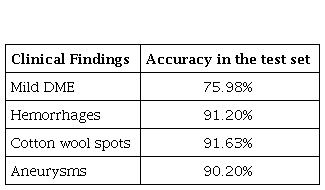

C. Results in the Detection of DME and Clinical Findings

The proposed model uses a VGG architecture to classify images with moderate DME. It was trained with the Adam adaptive optimizer, LR = 1 × 10⁻⁵, and a batch size of 2. The number of dense layers and nodes per layer for the classifier were explored in a systematic search, using 25 epochs and binary cross-entropy as the loss function. The configuration with 2 dense layers of 4096 and 512 units gave the best results in training and validation. The best model was evaluated on the test set; the results are presented in Table 2.
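For reference, the binary cross-entropy loss mentioned above can be written out in a few lines. The sketch below computes the mean loss over a batch of predicted probabilities; the clipping constant `eps` and the function name are assumptions added to keep the logarithm finite, and the inputs are toy values.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean of -[t*log(p) + (1 - t)*log(1 - p)] over all samples."""
    total = 0.0
    for target, prob in zip(y_true, y_prob):
        prob = min(max(prob, eps), 1.0 - eps)  # clip so log() never sees 0 or 1
        total += -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))
    return total / len(y_true)


# An uninformed prediction of 0.5 costs ln(2) per sample.
uninformed = binary_cross_entropy([1, 0], [0.5, 0.5])
# Confident and correct predictions cost much less.
confident = binary_cross_entropy([1, 0], [0.95, 0.05])
print(uninformed, confident)
```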

IV. Discussion and conclusions

The implemented deep learning methods have achieved good performance for eye fundus image quality assessment or improvement; however, the enhancement of images taken with mobile devices is still a challenge [21]. In addition, deep learning offers the best performance for the description of visual content compared to other methods [22]; therefore, its application in ophthalmic information retrieval is a promising research opportunity [23].

Ophthalmological databases have additional information that is not fully exploited, such as clinical data, diagnostic reports, or other data that could be included to improve the models' performance. In this aspect, there is a need for multimodal systems that allow the effective exploitation of joint information between different information sources.

There is great potential for the design and implementation of low-cost systems based on machine and deep learning techniques in low-income and developing countries. The proposed system combines low-cost image acquisition and computer vision methods with the highest-quality clinical requirements to ensure accurate medical diagnosis in remote and hard-to-reach areas. The implementation and future validation of such systems, in support of clinical staff, represent major challenges; meeting them could help to mitigate the low coverage of public health systems, the lack of specialized services, and the high cost of specialized medical examinations.