I. INTRODUCTION

Empirical research in Software Engineering (SE) is a form of experimentation or observation based on evidence. Between 2004 and 2005, Kitchenham, Dybå, and Jørgensen wrote three influential works proposing the concept of Evidence-Based Software Engineering (EBSE) [1,2,3]. The concept builds on earlier work in medicine, which was later taken up by other disciplines, including economics, psychology, the social sciences, and SE. EBSE was adopted in research processes to give methodological rigor to the results of systematic literature studies and to make these results impartial and more reliable [3]. The mechanism that medicine uses to identify and contribute evidence through systematic literature studies was adapted to SE in several stages [4]. It was structured in six steps organized into four methodological phases: (i) set out a research question; (ii) search for the evidence to answer the question; (iii) evaluate the evidence critically; and (iv) use the evidence to address the question.

Systematic literature studies in EBSE are secondary studies classified into Systematic Mapping Studies (SMS), which are broad studies of a topic that seek to find the available evidence on it [4, 5], and Systematic Literature Reviews (SLR), which aim to identify, evaluate, and combine the evidence from primary studies to answer a research question in detail [6]. These studies are supported by research guidelines or protocols that guide researchers toward results of greater or lesser depth. Examples of the application of these protocols and their results can be found in [5, 7, 8, 9, 10, 11, 12, 13, 14, 15].

The protocols indicate how to proceed in a way that facilitates the analysis of results, the drawing of conclusions, and the replication of the study. K. Petersen et al. [6] describe the essential steps for carrying out an SMS, from the definition of the research questions to the outcome of the process. In an updated version of the protocol, Petersen et al. [16] added the PICO (Population, Intervention, Comparison, and Outcomes) criteria to identify keywords and formulate search strings from the research questions, as well as a validity assessment covering descriptive validity, theoretical validity, generalizability, and interpretive validity.
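As an illustration of how PICO facets can be turned into a search string, the sketch below joins the synonyms within each facet with OR and the facets with AND. This is a minimal sketch under our own assumptions: the function names and keywords are hypothetical, and the Comparison facet is left empty because a study may have no comparison. The exact boolean syntax accepted by each digital library varies and should be checked before use.

```python
# Minimal sketch: build a boolean search string from PICO facets.
# Function names and keywords are hypothetical, chosen for illustration only.


def or_group(terms: list[str]) -> str:
    """Join the synonyms of one facet with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_search_string(pico: dict[str, list[str]]) -> str:
    """AND together the OR-groups of every non-empty PICO facet."""
    groups = [or_group(terms) for terms in pico.values() if terms]
    return " AND ".join(groups)


pico = {
    "population": ["students", "adult learners"],
    "intervention": ["serious games", "game-based learning"],
    "comparison": [],  # may be empty when the study has no comparison
    "outcomes": ["learning outcomes", "engagement"],
}

if __name__ == "__main__":
    print(build_search_string(pico))
```

With these hypothetical keywords, the script prints `(students OR "adult learners") AND ("serious games" OR "game-based learning") AND ("learning outcomes" OR engagement)`.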

Additionally, strategies to include grey literature have emerged [17, 18, 19, 20]. Strategies have also been suggested to complement the protocols of systematic literature studies or to design a search strategy that appropriately balances result quality and review effort [21, 22, 23, 24]. One example is the strategy presented in [25, 26], better known as Snowballing, which extends and details the searches by using the reference lists or the citations of papers to identify additional works. Snowballing can also be used to update studies [27, 28, 29, 30, 31] or to maintain and manage traceability [32], making studies more reproducible [33, 34]. Henceforth, we use the term Snowballing, which is the most widely used in the scientific community.
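To make the backward (reference list) and forward (citations) directions of Snowballing concrete, the following sketch runs iterative Snowballing over a small, hand-made citation graph. It is an illustrative sketch, not the procedure of [25, 26]: the paper identifiers are hypothetical, the `is_relevant` callback stands in for the researcher's screening decision, and a real study would obtain references and citations from a digital library rather than from a dictionary.

```python
from collections import deque
from typing import Callable

# Hypothetical citation data: REFERENCES[p] lists the works that paper p cites.
REFERENCES: dict[str, list[str]] = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": [],
    "D": [],
    "E": ["A"],
    "F": ["E", "C"],
}


def invert(refs: dict[str, list[str]]) -> dict[str, list[str]]:
    """Build the 'cited by' mapping needed for forward Snowballing."""
    cited_by: dict[str, list[str]] = {p: [] for p in refs}
    for paper, cited in refs.items():
        for target in cited:
            cited_by.setdefault(target, []).append(paper)
    return cited_by


def snowball(
    start_set: set[str],
    refs: dict[str, list[str]],
    backward: bool = True,
    forward: bool = True,
    is_relevant: Callable[[str], bool] = lambda p: True,
) -> set[str]:
    """Repeat Snowballing iterations until no new relevant paper is found."""
    cited_by = invert(refs)
    included = set(start_set)
    queue = deque(start_set)
    while queue:
        paper = queue.popleft()
        candidates: list[str] = []
        if backward:  # papers in this paper's reference list
            candidates += refs.get(paper, [])
        if forward:  # papers that cite this paper
            candidates += cited_by.get(paper, [])
        for candidate in candidates:
            if candidate not in included and is_relevant(candidate):
                included.add(candidate)
                queue.append(candidate)
    return included


if __name__ == "__main__":
    print(sorted(snowball({"A"}, REFERENCES, backward=False)))  # forward only
    print(sorted(snowball({"A"}, REFERENCES, forward=False)))  # backward only
```

Starting from paper A, forward-only Snowballing reaches E and then F, while backward-only Snowballing reaches B, C, and D. A stricter `is_relevant`, for example one that applies the inclusion criteria, prevents the search from expanding through excluded papers.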

Systematic literature studies clearly benefit the researcher's work [35, 36, 37]; however, executing the protocols can be a repetitive, slow, laborious, and error-prone activity, because there are many steps to follow, many sources of information to consider, and various tasks to manage. It is also time-consuming [38], and it can suffer from problems such as unreproducible research [39] or plagiarism [40]. Thus, systematic literature studies in SE require much more effort than traditional review techniques. Given the many advantages of conducting systematic literature studies and the additional effort they require, this work presents a semi-automatic process that supports researchers in executing research protocols for literature searches, particularly when massive searches for information are needed. The process uses the number of citations of the papers in a list to find new papers to include. It is intended to complement traditional search processes and to cover more studies, especially those that may be overlooked when the researcher processes the information manually. In this way, the protocol can become more agile and precise, and it can be replicated by implementing the proposal presented here, bearing in mind that citation counts change over time.

The article is organized as follows: Section II presents the related work, the proposed process, and its comparison with the study by Connolly et al. [52]. Section III presents and discusses the results. Finally, Section IV presents the conclusions and recommendations for future work.

II. MATERIALS AND METHODS

A. Related Work

The following works on producing and consuming evidence were identified. Wohlin and Rainer [41] argue that mistakes can be made that result in the production, consumption, and dissemination of invalid evidence; they propose a framework and a set of recommendations to help the community produce and consume credible evidence. Pizard et al. [42] proposed improvements to this practice to obtain better results. Guidance for producers and consumers of software analytics papers, in the form of “bad smells”, is provided in [43]. A process for selecting studies based on statistics, in which the selection criteria are refined until the researchers reach agreement, is presented in [44]; once the researchers share an interpretation of the criteria, both bias and the time spent are reduced. Similarly, [45] presents an approach based on robust statistical methods for empirical SE that could be applied to systematic literature studies. Finally, [46] reports several problems, identified during the authors' research, that threaten any type of literature study and hinder adequate tool support, and recommends solutions to mitigate these threats.

Other works that propose ways to support researchers conducting systematic literature studies were also identified. Bezerra et al. [47] propose an algorithm to perform forward and backward Snowballing, but do not identify the sources needed to replicate the study. Tsafnat et al. [48] carry out a literature review to identify tools that support or automate the processes or tasks of systematic literature studies. Most of their findings focus on automating and/or simplifying specific protocol tasks; some tasks are already fully automated, while others remain largely manual. Some studies also describe the effect of automation on the entire process, summarize tool support for each task, and highlight which areas require more research before they can be automated. As a research opportunity, the authors highlight the importance of integrating tools in literature reviews. In [49], a tool to support the implementation of a protocol for SMS-type studies is proposed. Finally, Marshall and Brereton [50] identify and classify 14 tools that can help automate part or all of the process defined in the protocols. In summary, the works found focus mainly on automating certain points of the protocols that guide systematic literature studies or suggest integrating several tools into a more robust or unified proposal; others focus on analyzing the protocols. None of them focuses specifically on forward Snowballing with citation counts as a strategy to include works. We highlight the statement in [51] that the quality of the conclusions depends entirely on the quality of the selected literature.

B. Proposed Process Steps

The process automates some steps of the Snowballing technique presented by Wohlin [26]. It is also intended to extend and deepen the search to identify additional documents, that is, to complement the search by examining the references and citations of each work, with the number of citations acting as the inclusion or exclusion factor. This evaluation is based on the information provided by Google Scholar, a freely available search engine for academic literature that indexes digital libraries and open scientific works and allows researchers to quickly obtain works that may be relevant to an investigation. In addition, Google Scholar provides information useful to researchers, e.g., the number of citations, related works, versions of a work, and citation formats. The number of citations is the main element of this proposal.

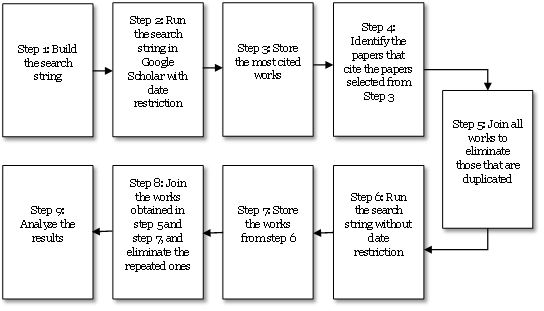

The proposed process is summarized in Figure 1 as a protocol with a series of steps to be executed sequentially. It is semi-automatic because, in the first instance, it relies on a reference management tool: we use Zotero (https://www.zotero.org) as the bibliographic manager and Microsoft Excel spreadsheets to manage and store the results. In addition, the process depends on the researcher's criteria, since the researcher ultimately decides which works to include in or exclude from the research. The steps shown in Figure 1 are detailed in Table 1. The suggested steps, as well as the number of works selected in each, can be adjusted at the researcher's discretion. The selection of the example and the application of the proposed process are described in detail at https://bit.ly/3qWUfXd.

Table 1. Steps of the process proposed in the present work.

| Id. | Name | Description |
|---|---|---|
| 1 | Build the search string. | Keywords are identified and search strings are formulated to answer the research questions of the study. |
| 2 | Execute the search string in Google Scholar with a date restriction. | Executing the string may return many works; therefore, the search is restricted by date and the results are filtered to keep the most cited ones. |
| 3 | Store the most cited works. | The selected documents are stored so that they can be processed in later steps. How many of the most cited works to select is left to the researchers' discretion. |
| 4 | Identify the papers that cite the papers selected in Step 3. | Forward Snowballing is applied to the works selected in Step 3; that is, the works citing them are identified and filtered to keep the most cited ones. |
| 5 | Join all works and eliminate duplicates. | Repeated works are eliminated to obtain a unified list, and from this point on only the selected works are managed. |
| 6 | Run the search string without a date restriction. | This step aims to find works that have historically been highly cited or are benchmarks in the research area. |
| 7 | Store the works from Step 6. | The data are stored for use in subsequent steps. |
| 8 | Join the works obtained in Step 5 and Step 7, and eliminate duplicates. | Both lists are unified and duplicates are eliminated to obtain a single list for processing. |
| 9 | Analyze the results. | The results can be analyzed to compare them or to determine whether they meet the research questions and quality criteria. |
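The data handling in Steps 2 to 8 of Table 1 can be sketched as follows. This is a minimal sketch under stated assumptions, not our implementation: in the process described here, Google Scholar results are stored with Zotero and Excel, whereas the sketch uses hard-coded, hypothetical records; `top_n` and `top_citing` are hypothetical parameters standing for the researcher's choice of how many of the most cited works to keep; and duplicates are detected by a normalized title, which is only one possible matching rule. Step 9, the analysis, remains a task for the researcher.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Work:
    title: str
    year: int
    citations: int


def normalize(title: str) -> str:
    """Case- and punctuation-insensitive key used to detect duplicates."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def most_cited(works, top_n, min_year=None, max_year=None):
    """Optionally restrict by year, then keep the top_n most cited works."""
    pool = [
        w for w in works
        if (min_year is None or w.year >= min_year)
        and (max_year is None or w.year <= max_year)
    ]
    return sorted(pool, key=lambda w: w.citations, reverse=True)[:top_n]


def merge_unique(*lists):
    """Join lists and drop duplicates, keeping the first occurrence."""
    seen, merged = set(), []
    for works in lists:
        for w in works:
            key = normalize(w.title)
            if key not in seen:
                seen.add(key)
                merged.append(w)
    return merged


def run_process(results, cited_by, years, top_n=2, top_citing=1):
    seeds = most_cited(results, top_n, *years)  # Steps 2-3: date-restricted search
    citing = [  # Step 4: forward Snowballing on each seed
        c for s in seeds for c in most_cited(cited_by.get(s.title, []), top_citing)
    ]
    step5 = merge_unique(seeds, citing)  # Step 5
    step7 = most_cited(results, top_n)  # Steps 6-7: no date restriction
    return merge_unique(step5, step7)  # Step 8


# Hypothetical search results and "cited by" lists.
RESULTS = [
    Work("Games and learning: a review", 2010, 900),
    Work("Serious games in class", 2011, 300),
    Work("A small pilot study", 2012, 3),
    Work("Classic study of play", 1995, 2000),
]
CITED_BY = {
    "Games and learning: a review": [
        Work("Follow-up on games and learning", 2013, 150),
        Work("Minor note", 2014, 1),
    ],
    "Serious games in class": [Work("Follow-Up on Games and Learning.", 2013, 150)],
}

if __name__ == "__main__":
    for w in run_process(RESULTS, CITED_BY, years=(2009, 2013)):
        print(w.title, w.citations)
```

With the hypothetical data, the date-restricted search keeps the two most cited works from 2009 to 2013, forward Snowballing adds one citing work (its duplicate with different capitalization and punctuation is dropped in Step 5), and the unrestricted search contributes the highly cited 1995 work, giving four works for analysis.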

C. Analysis of the Results Obtained by Both Proposals

At this point, it is important to consider the research questions and the purpose of the work and to make any necessary adjustments, because the proposal does not seek to replace existing protocols for systematic literature reviews; on the contrary, its objective is to complement the strategy the researcher is already following. Therefore, the first step was to compare the data obtained by executing the proposed process with the Coded Papers of Connolly et al. [52] (more information at https://n9.cl/d3t70 and https://n9.cl/yx8p). We observed that: (i) the number of citations was not a requirement or inclusion criterion for the Coded Papers, which is why the works found by the two approaches differ; and (ii) some of the digital libraries used by Connolly et al. [52] can only be accessed by subscription, and some of them cannot be tracked by Google Scholar. Some works in the Coded Papers have no citations, yet their authors indicate that these works answer the research questions. Under our proposal, works with few or no citations would not be considered.
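The comparison against the Coded Papers amounts to set operations on the two lists of works. The sketch below is illustrative only: the titles and citation counts are hypothetical, titles are assumed to be already normalized, and `min_citations` is an assumed threshold standing in for the citation-based selection of the proposal. The last entry shows how works without citations, such as some of the Coded Papers, would be left out by a citation criterion alone.

```python
def compare(proposal, coded, citations, min_citations=1):
    """Compare the proposal's list with a reference list of (normalized) titles."""
    proposal, coded = set(proposal), set(coded)
    return {
        "in_both": sorted(proposal & coded),
        "only_proposal": sorted(proposal - coded),
        "only_coded": sorted(coded - proposal),
        # Reference works that a citation threshold alone would have excluded.
        "coded_below_threshold": sorted(
            t for t in coded if citations.get(t, 0) < min_citations
        ),
    }


if __name__ == "__main__":
    report = compare(
        proposal=["paper a", "paper b", "paper c"],
        coded=["paper b", "paper c", "paper d", "paper e"],
        citations={"paper a": 50, "paper b": 120, "paper c": 40, "paper d": 0, "paper e": 7},
    )
    for key, titles in report.items():
        print(key, titles)
```

In this toy example, "paper d" appears only in the reference list and has no citations, so a citation threshold of one would never have selected it, which mirrors observation (i) above.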

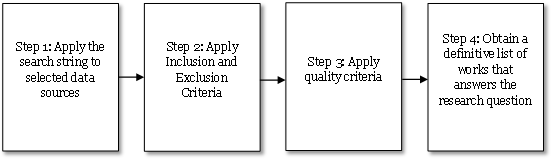

On the other hand, in the process followed by Connolly et al. [52], represented in Figure 2, the inclusion and exclusion criteria are decisive in arriving at the definitive works. The following steps are highlighted:

Step 1. The search string was applied to the selected data sources. According to the authors, 7,932 papers were found in ACM, ASSIA, BioMed Central, Cambridge Journals Online, ChildData, Index to Theses, Oxford University Press (journals), Science Direct, EBSCO, PsycINFO, SocINDEX, Library, Information Science and Technology Abstracts, CINAHL, ERIC, Ingenta Connect, InfoTrack, Emerald, IEEE Computer Society, and Digital Library.

Step 2. Inclusion and exclusion criteria were applied, reducing the list to 129 works. According to the authors, the works had to: (i) include empirical evidence related to the impacts and outcomes of the use of games; (ii) fall within a time window of only five years; (iii) include an abstract; and (iv) include participants older than 14 years of age.

Step 3. Quality criteria were applied to the 129 works obtained after the selection criteria: (i) game category; (ii) categorization of game effects; and (iii) coding methods. The works were then read and assigned a grade between 1 and 3 on each of the following dimensions: research design, method and analysis, research results, relevance of the study focus, and whether the study could be extended.

Step 4. One further step applied to the 129 Coded Papers produced the definitive list of 70 works that answered the research question and that the authors considered to be of the best quality for the investigation. The final analysis was elaborated and presented on the basis of these works.
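The funnel followed by Connolly et al. [52], inclusion criteria followed by quality grading, can be expressed as two successive filters. The sketch below is a simplification under our own assumptions: the field names are hypothetical, the five-year window is taken as the five years ending in a hypothetical `window_end`, and the grades are assumed to be summed and compared with a hypothetical `min_score`, since the aggregation rule is not described here. The candidates are invented for illustration.

```python
from dataclasses import dataclass, field

# The five quality dimensions, each graded from 1 to 3.
DIMENSIONS = (
    "research design",
    "method and analysis",
    "research results",
    "relevance of focus",
    "extensibility",
)


@dataclass
class Candidate:
    title: str
    year: int
    empirical_evidence: bool
    has_abstract: bool
    min_participant_age: int
    grades: dict = field(default_factory=dict)  # dimension -> grade (1..3)


def passes_inclusion(c: Candidate, window_end: int, window_years: int = 5) -> bool:
    """Step 2: the four inclusion criteria, with an assumed window convention."""
    return (
        c.empirical_evidence
        and window_end - window_years < c.year <= window_end
        and c.has_abstract
        and c.min_participant_age > 14
    )


def quality_score(c: Candidate) -> int:
    """Step 3: assumed aggregation (sum of the 1-3 grades on all dimensions)."""
    grades = [c.grades[d] for d in DIMENSIONS]
    if any(not 1 <= g <= 3 for g in grades):
        raise ValueError(f"grades must be between 1 and 3: {c.title}")
    return sum(grades)


def funnel(candidates, window_end, min_score):
    """Return the works surviving the inclusion criteria and the final list."""
    included = [c for c in candidates if passes_inclusion(c, window_end)]
    final = [c for c in included if quality_score(c) >= min_score]
    return included, final


def graded(value: int) -> dict:
    """Helper for the example: the same grade on every dimension."""
    return {d: value for d in DIMENSIONS}


CANDIDATES = [
    Candidate("w1", 2008, True, True, 16, graded(3)),
    Candidate("w2", 2007, True, True, 18, graded(1)),
    Candidate("w3", 2000, True, True, 20, graded(3)),  # outside the window
    Candidate("w4", 2008, True, True, 10, graded(3)),  # participants too young
]

if __name__ == "__main__":
    included, final = funnel(CANDIDATES, window_end=2009, min_score=10)
    print([c.title for c in included], [c.title for c in final])
```

Here two of the four invented candidates pass the inclusion criteria, and only one of them reaches the assumed quality threshold, reproducing in miniature the narrowing from 7,932 to 129 to 70 works.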

III. RESULTS AND DISCUSSION

The results show that the number of citations can be used as a criterion to measure the importance of a work, since the authors citing it find it useful; the most cited works can therefore be taken to have an impact on their field. The proposal presented in this article helps to identify the most cited papers and to iterate on those results. It does not aim to replace established protocols; rather, it is a support strategy. Other criteria are also needed to determine whether the results of applying the proposal answer the research questions, as described below.

Following the process proposed by Connolly et al. [52], the final quality criteria used as a new filter were: (i) empirical evidence on outcomes and impacts related to the use of games; (ii) effects of games, focusing mainly on positive ones; and (iii) the method used to evaluate the games, e.g., studies with qualitative and/or quantitative results, examples, samples, sampling, data collection, data analysis, results, and conclusions.

In the application example of our proposal, the quality criteria described by Connolly et al. [52] were then applied, and from that point on the number of citations was no longer considered as a criterion. As a result, 58 works were discarded and a final list of 54 works was obtained (more information at https://n9.cl/3ek5i). These works enabled a final discussion (more information at https://n9.cl/jywvb and https://n9.cl/w8e3) and the analysis of the subject. Regarding this last step, it should be noted that: (i) all the selected works are in the context of serious games and computer games; (ii) studies evaluating negative aspects of entertainment games were discarded; (iii) works at the design stage were discarded; (iv) works addressing pedagogy were valued more highly; (v) many discarded papers studied user behavior while playing games, which was outside the scope of this study; and (vi) the 54 works answered the research question, while the use of the number of citations rests on the assumption that important works are usually cited (more information at https://n9.cl/3ek5i).
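The final selection in the application example can be read as a rule-based filter in which the citation count plays no part. In the sketch below, the tags are hypothetical labels that a researcher would assign while reading each work; the rules mirror items (i), (ii), (iii), and (v) above, and works addressing pedagogy are simply ranked first as a stand-in for being valued more highly. In the study, 58 discarded works plus 54 retained ones imply that 112 works reached this stage; the records below are invented.

```python
from dataclasses import dataclass

CONTEXT_TAGS = {"serious-games", "computer-games"}  # item (i)
EXCLUDE_TAGS = {"negative-entertainment", "design-stage", "user-behavior"}  # (ii), (iii), (v)


@dataclass
class Work:
    title: str
    citations: int  # kept for reference but deliberately not used below
    tags: frozenset  # hypothetical labels assigned while reading


def final_filter(works):
    """Keep in-context works without exclusion tags; rank pedagogy first."""
    kept = [
        w for w in works
        if (w.tags & CONTEXT_TAGS) and not (w.tags & EXCLUDE_TAGS)
    ]
    # False sorts before True, so works tagged "pedagogy" come first (stable sort).
    kept.sort(key=lambda w: "pedagogy" not in w.tags)
    return kept, len(works) - len(kept)


WORKS = [
    Work("w1", 500, frozenset({"serious-games"})),
    Work("w2", 3, frozenset({"computer-games", "pedagogy"})),
    Work("w3", 900, frozenset({"computer-games", "design-stage"})),
    Work("w4", 0, frozenset({"serious-games", "user-behavior"})),
    Work("w5", 40, frozenset({"board-games"})),
]

if __name__ == "__main__":
    kept, discarded = final_filter(WORKS)
    print([w.title for w in kept], discarded)
```

Note that w2, with only three citations, is kept while w3, the most cited record, is discarded, which reflects that citations were no longer a criterion at this stage.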

IV. CONCLUSIONS

To support the protocols used in systematic literature studies, forward Snowballing with the number of citations as an inclusion criterion is an alternative that makes it possible to cover considerable volumes of information when used together with Google Scholar. The number of citations, which counts how many times other authors have cited a work, indicates the impact the work has had and, to some extent, its quality.

The work of Connolly et al. [52] was used for the comparative analysis. New works were found that also answered the research questions and that the researcher may eventually consider including to expand the study. Once identified and their citations quantified, these works can be considered important in the explored area of knowledge. The proposal does not necessarily reduce time or effort, but it does reveal works that previous strategies may have missed and that, given their number of citations, may be influential in the research area. The success of applying the semi-automatic Snowballing-based process to support research protocols in massive literature searches depends fundamentally on the researcher's continuous validation of the procedure and its steps.

As future work, we expect to fully automate the process, ideally through a dedicated tool, and to carry out further comparisons. However, bulk searches on Google Scholar may be limited, since the platform can detect and block the queries used. Other works have shown that massive queries can be flagged by the platform as a security threat. Additionally, Google Scholar does not index some digital libraries that require a subscription or payment; works from those libraries must be added manually if the researcher considers it necessary. Moreover, some works may be left out of the search if Google Scholar is used without prior configuration. The different standards of the scientific journals in certain digital databases must also be considered when automating the process that supports the proposal established in this work.