I. INTRODUCTION

Software development companies currently face the challenge of deploying high-quality solutions in short time intervals [1]. To meet it, companies seek to improve their processes by implementing approaches and/or frameworks that enhance the quality of their products [1]. In this sense, proposals related to the software product implementation life cycle (Dev) have been made, and they can be classified as traditional or agile. Some of the most used traditional solutions are CMMI [2], RUP [3], the Waterfall model [4], the Spiral model [5], and Rapid Application Development (RAD) [6]. Some common agile solutions are Scrum [7], Lean Software Development [8], Test-Driven Development (TDD) [9], Extreme Programming (XP) [10], [11], Crystal Clear [12], Adaptive Software Development [13], and the Dynamic Systems Development Method [14]. Moreover, hybrid solutions that take advantage of both approaches have been proposed, e.g., Scrum & XP [15], Scrumban [16], and Scrum & CMMI [17]. Software companies have also paid special attention to the processes related to operations management in Information Technology (Ops), which establish strategies for defining and implementing a set of best practices that guarantee the stability and reliability of solutions in production environments. Software development life cycle management brings multiple benefits to companies, including continuously reducing development, integration, and deployment times; delegating repetitive tasks to automated processes; and reducing errors caused by human intervention [18], [19]. To support operations management, solutions such as ITIL [20], COBIT [21], the ISO/IEC 20000 standard [22], and the ISO/IEC 27000 standard [23] have been proposed. Debois [24] introduced the term DevOps in 2009 with the aim of integrating the best practices proposed for development and operations (Dev and Ops).
Over the years, DevOps has proven to bring multiple benefits related to the improvement of project life cycle activities, especially in the productivity, quality, and competitiveness of software development companies [25], [26]. In general, DevOps focuses on defining practices that enhance tasks related to continuous integration [27], change management [28], automated testing [29], continuous deployment [30], and continuous maintenance [31], among others. According to the global survey report on the state of agility in 2021 [32], 75% of the participants mentioned that a transformation towards a culture supported by DevOps brings multiple benefits for companies in terms of reduced effort, cost, and time. However, adopting DevOps in software companies is not a simple task [33]. To minimize the risk of error in its adoption, companies must establish mechanisms that quantify how DevOps is applied in their projects and identify improvement opportunities to fine-tune their practices and improve their internal processes [34]. The efforts and proposals related to the evaluation of DevOps in software companies were identified through the systematic mapping of the literature carried out in [35]. Two kinds of mechanisms were found: methodological solutions (models, metrics, certification standards) and tools developed by active players in the industry that seek to assess DevOps in multiple ways. However, the results show a high degree of heterogeneity in the proposed solutions, since there is no consensus on the definitions, relationships, and concepts related to DevOps [36]. Consequently, each solution identified in the literature was proposed in accordance with the set of values, principles, activities, roles, practices, and tasks that its author considered relevant. Although the analyzed solutions pursue the same objective, namely to “assess the degree of DevOps capacity, maturity and/or competence”, they differ in perception and scope and, in some cases, are ambiguous.
Likewise, the solutions described in [35] establish "what" to do; however, they do not define "how" to implement the proposed practices, which can cause confusion when applying DevOps in software companies. In addition, although there are studies on the evaluation of DevOps in companies of different sizes, most of them focus on large and medium-sized companies and leave aside small and micro software companies. According to the 2021 digital transformation report of the Economic Commission for Latin America and the Caribbean (CEPAL) [37], such companies account for approximately 99% of the legally constituted companies in Latin America and have gradually become active industry players looking to apply DevOps in their projects.

Hence, there are solutions and tools to evaluate DevOps; however, each author suggests their own terminology, evaluation criteria, concepts, practices, and process elements. This results in a high degree of heterogeneity that can generate confusion, inconsistency, and terminological conflicts during the adoption of DevOps practices. This article presents a metrics model that was defined following the Goal, Question, Metric (GQM) approach [38] and aims to complement the evaluation of DevOps. The model organizes its elements around four dimensions (people, culture, technology, and processes) and aims to define what to evaluate and how to evaluate DevOps compliance in the software industry. The paper is structured as follows: Section II analyzes the state of the art of solutions to evaluate DevOps in software companies and describes how their process elements were harmonized; Section III presents a metrics model to evaluate DevOps according to the practices, dimensions, and values found, analyzed, and harmonized from the literature; Section IV describes the protocol to form a focus group as an evaluation method. Finally, Section V presents the conclusions and future work.

II. MATERIALS AND METHODS

A. Background

A systematic mapping of the literature (SML), reported in [35], was analyzed to identify the solutions proposed by different authors for defining processes, models, techniques, and/or tools to evaluate DevOps in software companies. Three types of studies were identified: (i) exploratory studies, (ii) methodological solutions, and (iii) tools. The results obtained are presented below.

1) Exploratory Studies. In [39], an exploratory study analyzed different tools to evaluate DevOps in small and medium software companies. In [11], [36], [40], [41], SMLs were conducted to identify the process elements that must be considered to certify that a company applies DevOps appropriately. In [42]-[45], studies examined the use of maturity models to evaluate DevOps.

2) Methodological Solutions. In [46]-[49], metrics to evaluate the construction, integration, and continuous deployment practices in software companies are proposed; [50], [51] propose competency models; [42]-[45], [52]-[57], maturity models; [50], a model to evaluate DevOps collaboration; [57], a DevOps evaluation model based on the Scrum Maturity Method (SMM); [58], a method to certify the use of best DevOps practices; [59], a model to evaluate development, security, and operations (DevSecOps); and [60], a standard to adopt DevOps in software companies.

3) Tools. The studies in [39], [50], [61] mention the following tools: DevOps Maturity Assessment [62], Microsoft DevOps Self-Assessment [63], IBM DevOps Self-Assessment [64], and IVI's DevOps Assessment [65]. However, those studies did not assess the tools exhaustively. To expand the knowledge on tools to evaluate DevOps, an exploratory study was carried out based on the methodology proposed in [66]. As a result, 13 tools were identified; they are presented in Table 1. The analysis considers three attributes: accessibility (A1), i.e., whether the tool is free, offers a trial period, or is paid; evaluation method (A2), i.e., surveys, frameworks, consulting, or another mechanism; and objective or scope of the evaluation (A3), i.e., whether the tool evaluates the process, practices, activities, tasks, or other aspects. Regarding accessibility (A1), 7 tools (53.8%) ([62]-[64], [67]-[70]) are free, 5 tools (38.5%) ([65], [71]-[74]) are paid, and 1 tool (7.7%) [75] offers a 30-day trial period. Regarding the evaluation method (A2), 6 tools (46.2%) ([62], [63], [67]-[70]) evaluate DevOps through surveys, 5 tools (38.5%) ([71]-[75]) through consulting processes, and 2 tools (15.4%) ([64], [65]) through methodological guides and frameworks. Regarding the objective or scope of the evaluation (A3), 6 tools (46.2%) ([65], [71]-[75]) evaluate DevOps according to the set of principles, values, activities, and roles applied by a company; 5 tools (38.5%) ([62], [64], [67]-[69]) evaluate continuous integration and deployment practices; and 2 tools (15.4%) ([63], [70]) evaluate DevOps according to compliance with the Culture, Automation, Lean, Measurement, and Sharing (CALMS) principles.

Table 1 Tools to evaluate DevOps.

| No | Ref. | Company | A1 (accessibility) | A2 (evaluation method) | A3 (scope) |
|---|---|---|---|---|---|
| 1 | [62] | ATOS | Free | Survey | Practices |
| 2 | [63] | Microsoft | Free | Survey | Principles |
| 3 | [67] | Infostretch | Free | Survey | Practices |
| 4 | [68] | InCycle | Free | Survey | Practices |
| 5 | [64] | IBM | Free | Guide | Practices |
| 6 | [69] | Xmatters | Free | Survey | Practices |
| 7 | [70] | Atlassian | Free | Survey | Principles |
| 8 | [65] | IVI | Paid | Framework | Process |
| 9 | [71] | Veritis | Paid | Consulting | Process |
| 10 | [72] | Boxboat | Paid | Consulting | Process |
| 11 | [73] | Humanitec | Paid | Consulting | Process |
| 12 | [74] | Atlassian | Paid | Consulting | Process |
| 13 | [75] | Eficode | Free demo | Consulting | Process |
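The shares reported for A1, A2, and A3 can be recomputed directly from the rows of Table 1. The following minimal sketch transcribes the table and tallies each attribute; the names `TOOLS` and `shares` are illustrative and not part of the study, paid tools are labeled "Paid", and "Guide" and "Framework" are kept as separate categories even though the text groups them together.

```python
from collections import Counter

# Rows transcribed from Table 1: (reference, A1 accessibility, A2 method, A3 scope).
TOOLS = [
    ("[62]", "Free", "Survey", "Practices"),
    ("[63]", "Free", "Survey", "Principles"),
    ("[67]", "Free", "Survey", "Practices"),
    ("[68]", "Free", "Survey", "Practices"),
    ("[64]", "Free", "Guide", "Practices"),
    ("[69]", "Free", "Survey", "Practices"),
    ("[70]", "Free", "Survey", "Principles"),
    ("[65]", "Paid", "Framework", "Process"),
    ("[71]", "Paid", "Consulting", "Process"),
    ("[72]", "Paid", "Consulting", "Process"),
    ("[73]", "Paid", "Consulting", "Process"),
    ("[74]", "Paid", "Consulting", "Process"),
    ("[75]", "Free demo", "Consulting", "Process"),
]


def shares(column: int) -> dict[str, tuple[int, float]]:
    """Return {category: (count, percentage rounded to one decimal)} for a column.

    column 1 = A1 (accessibility), 2 = A2 (method), 3 = A3 (scope).
    """
    counts = Counter(row[column] for row in TOOLS)
    total = len(TOOLS)
    return {cat: (n, round(100 * n / total, 1)) for cat, n in counts.items()}


if __name__ == "__main__":
    for label, column in (("A1", 1), ("A2", 2), ("A3", 3)):
        print(label, shares(column))
```

Keeping the raw rows in one place makes it easy to re-derive the percentages if a tool is added to or removed from the analysis.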

B. Protocol to Harmonize DevOps Process Elements

A harmonization process was necessary to identify the elements of a generic model to evaluate DevOps, because each model and tool has its own structure, concepts, and characteristics. To establish a homogeneous solution, HPROCESS [76] was used to harmonize the models through the following activities: identification, carried out during the SML; homogenization; comparison; and integration.

1) Homogenization Method. This method compares the general information of each solution and tool in a common structure that shows the characteristics of each study in relation to the rest [77]. It was defined from the process elements established in the PrMO ontology [78]. The characterization is available at https://bit.ly/3QDJOT9.

2) Comparison Method. The comparison was made by applying the set of activities proposed by MaMethod [79], adapted to compare the dimensions, values, and practices identified in the homogenization stage: (i) analyze the solutions, (ii) design the comparison, and (iii) make the comparison. To do so, it was necessary to establish a base model that was cross-referenced with all the solutions through a matrix relating the set of practices, dimensions, and values proposed by each solution. The base model was chosen according to three selection criteria: C1, the solution is generic; C2, the solution has a clearly defined set of dimensions, values, and practices; and C3, the solution was peer-reviewed by experts. After the analysis, it was determined that the reference model proposed in [80] meets all the criteria. The base model was compared with 23 solutions and 3 tools. The details of all the comparisons can be consulted at https://bit.ly/3c4nzaa.

3) Integration Method. IMethod [81] was applied to carry out the integration. It proposes five activities: design the integration, define an integration criterion, execute the integration, analyze the results, and present the integrated model. After the integration, 12 practices were considered fundamental and 6 complementary. In addition, 4 dimensions and 4 values were obtained, which represent the state of knowledge across all the solutions. Table 2 summarizes the practices, dimensions, and values resulting from the integration process.

The detailed results can be consulted at https://bit.ly/3dItx0M. Finally, an activity was conducted to identify the relationships between practices, dimensions, and values. They can be consulted at https://bit.ly/3T05Q45.

Table 2 Integrated process elements.

Note: The acronyms are in Spanish.

III. RESULTS AND DISCUSSION

The goal of metrics in software engineering is to identify the essential parameters present in projects [82]. The harmonization process yielded 12 fundamental practices, 6 complementary practices, 4 dimensions, and 4 values. The model follows a hierarchical structure in which the values are the aspects that must be considered to ensure that DevOps culture is applied properly, the dimensions describe each of the activities required to implement the values proposed for DevOps effectively, and the practices represent what must be applied to comply with each of the dimensions.

A. Purpose of the Model

The metrics model seeks to evaluate the implementation of DevOps based on a set of questions that reveal the degree of compliance with DevOps practices, dimensions, and values. Through a set of clearly defined metrics, the model aims to support a consultant's evaluation of the implementation of DevOps in software companies. It also aims to identify areas for improvement in the mechanisms that companies use to adopt and/or apply DevOps. The metrics model was defined following the guidelines described by the GQM approach [38]: a conceptual level (Goal), an operational level (Question), and a quantitative level (Metric). At the conceptual level, the dimensions, practices, and values proposed by DevOps were identified. At the operational level, the questions associated with each DevOps practice were defined according to the set of goals associated with that practice. Finally, at the quantitative level, a set of metrics was defined to measure the degree of implementation of DevOps practices, dimensions, and values.

B. Goals

First, a set of goals was defined for each practice obtained in the harmonization stage. As a result, 42 goals and 63 questions were set for the fundamental practices, and 19 goals and 29 questions for the complementary practices. Table 3 shows the goals related to the continuous deployment (DC) practice. The rest of the goals can be consulted at https://bit.ly/3SZQSuZ.

C. Questions

Each goal is associated with one or more questions that relate the aspects to be evaluated quantitatively. The questions use a nominal scale with two possible values (YES: 100%, NO: 0%). They were defined following the criteria proposed in [83], which seek to avoid ambiguous questions, vague terms, and cognitive overload, among other problems. Table 4 presents the questions associated with DC. The rest of the questions can be consulted at https://bit.ly/3ChM1zi.

Table 4 Proposed questions for complementary practices

Note: The acronyms are in Spanish.

A questionnaire-type evaluation instrument was designed with two possible answers (“YES”, “NO”). The template used to answer the questions can be found at https://bit.ly/3LDgfik. A question is answered “YES” when compliance is supported by (i) opinions collected from the roles involved in the practice or (ii) consistent historical records that evidence compliance. It is answered “NO” when the company does not present evidence of compliance with the practice.
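The goal, question, and answer structure described in this section can be sketched as a small data model. The example below is only an illustration under stated assumptions: the class names, field names, and the sample goal and question texts are hypothetical and do not come from the published instrument; only the YES/NO rule and the 100%/0% scale are taken from the text.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    """One yes/no question of the instrument (field names are hypothetical)."""
    text: str
    role_opinions_confirm: bool = False       # evidence type (i)
    historical_records_confirm: bool = False  # evidence type (ii)

    def answer(self) -> str:
        # "YES" requires at least one kind of evidence; otherwise "NO".
        if self.role_opinions_confirm or self.historical_records_confirm:
            return "YES"
        return "NO"

    def percentage(self) -> int:
        # Nominal scale from the text: YES = 100%, NO = 0%.
        return 100 if self.answer() == "YES" else 0


@dataclass
class Goal:
    description: str
    questions: list[Question] = field(default_factory=list)


@dataclass
class Practice:
    acronym: str
    goals: list[Goal] = field(default_factory=list)

    def all_questions(self) -> list[Question]:
        return [q for goal in self.goals for q in goal.questions]


if __name__ == "__main__":
    # Hypothetical content, used only to exercise the structure.
    dc = Practice("DC", [Goal("Illustrative goal", [
        Question("Illustrative question 1", historical_records_confirm=True),
        Question("Illustrative question 2"),
    ])])
    print([q.answer() for q in dc.all_questions()])
```

Recording which kind of evidence supports each answer keeps the evaluation auditable, since a "YES" can always be traced back to opinions or records.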

D. Metrics

Table 5 shows the scale to assess the degree of implementation (gi) of practices, dimensions, and values. It was defined following the formalism proposed in [80]. Weights were assigned to each practice, dimension, and value by applying the linear weighting method [84], and the metrics were defined using the GQM approach [38].

Table 5 Scale to measure the implementation level.

| Acronym | Implementation level | Scale |
|---|---|---|
| NA | Not achieved | 0% ≤ gi ≤ 15% |
| PA | Partially achieved | 15% < gi ≤ 50% |
| AA | Widely achieved | 50% < gi ≤ 85% |
| CA | Completely achieved | 85% < gi ≤ 100% |

Note: The acronyms are in Spanish.
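The scale in Table 5 maps any degree of implementation gi to one of four levels. A minimal sketch of that mapping follows; the function name `implementation_level` is an illustrative choice, while the interval boundaries are those of Table 5.

```python
def implementation_level(gi: float) -> str:
    """Map a degree of implementation gi (in percent) to its Table 5 acronym."""
    if not 0 <= gi <= 100:
        raise ValueError("gi must be a percentage between 0 and 100")
    if gi <= 15:
        return "NA"  # Not achieved: 0% <= gi <= 15%
    if gi <= 50:
        return "PA"  # Partially achieved: 15% < gi <= 50%
    if gi <= 85:
        return "AA"  # Widely achieved: 50% < gi <= 85%
    return "CA"      # Completely achieved: 85% < gi <= 100%


if __name__ == "__main__":
    for gi in (0, 15, 15.1, 50, 85, 85.5, 100):
        print(gi, implementation_level(gi))
```

Because the upper bound of each interval is inclusive, a result of exactly 15%, 50%, or 85% falls in the lower of the two adjacent levels.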

1) Metrics to Evaluate Practices. As a result of the weighting process [84], each practice was assigned an associated weighted percentage (%𝑃𝑃𝐴). The combined weighted percentage (%𝑃𝑃𝐶) corresponds to the weight of each group of practices (fundamental or complementary) in the total evaluation. Table 6 shows the weights of each fundamental and complementary practice.

Table 6 Weights of the fundamental and complementary practices.

Note: The acronyms are in Spanish.

Table 7 describes the metrics that relate the degree of individual, combined, weighted and total implementation of the fundamental and complementary practices.

Table 7 Metrics to assess the degree of implementation of practices.

| Id | Scale [%] | Equation | Variables |

|---|---|---|---|

| %𝑃𝐹𝐼 | [0,100] | 𝒏: Number of questions; %𝑷i: Question percentage (YES: 100%, NO: 0%). | |

| %𝑃𝐹𝐼𝑃 | [0,%𝑃𝑃𝐴] | %𝑷𝑭𝑰: Percentage of individual compliance with a fundamental practice; %𝐏𝐏𝐀: Associated weighted percentage (see Table 6). | |

| %𝑃𝐹𝑇 | [0,100] | 𝒏: Number of fundamental practices; %𝑷𝑭𝑰𝑷𝒊: Percentage of weighted compliance with a fundamental practice. | |

| %𝑃𝐶𝐼 | [0,100] | 𝒏: Number of questions associated with the practice; %𝑷𝒊: Percentage obtained by a specific question (YES: 100%, NO: 0%). | |

| %𝑃𝐶𝐼?? | [0,%𝑃𝑃𝐴] | %𝑷𝑪𝑰: Percentage of individual compliance with a complementary practice; %𝐏??𝐀: Associated weighted percentage (see Table 11). | |

| %𝑃𝐶𝑇 | [0,100] | 𝒏: Number of practices; %𝑷𝑪𝑰𝑷𝒊: Percentage of weighted compliance with a complementary practice. | |

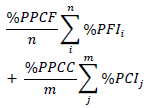

| %𝑃𝑇𝑃 | [0,100] | %𝑷𝑷𝑪𝑭: 70% (see Table 6); %𝑷𝑷𝑪𝑪: 30% (see Table 6); %𝑷𝑭𝑻: percentage of compliance with fundamental practices; %𝑷𝑪𝑻: percentage of compliance with complementary practices. |

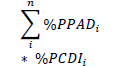
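The equations of Table 7 are not available in this text, so the sketch below illustrates one reading that is consistent with the stated scales and variable definitions. It is an assumption, not the authors' confirmed formulas: individual compliance is taken as the mean of the question percentages, weighted compliance as that mean scaled by %PPA, the group total as the sum of the weighted values (which requires the %PPA weights of each group to add up to 100), and %PTP as the 70/30 combination of both groups. All function names are illustrative.

```python
def individual_compliance(answers: list[bool]) -> float:
    """Assumed %PFI / %PCI: mean of the question percentages (YES=100, NO=0)."""
    if not answers:
        raise ValueError("a practice needs at least one question")
    return 100.0 * sum(answers) / len(answers)


def weighted_compliance(individual: float, ppa: float) -> float:
    """Assumed %PFIP / %PCIP: individual compliance scaled into [0, %PPA]."""
    return individual * ppa / 100.0


def group_total(weighted_values: list[float]) -> float:
    """Assumed %PFT / %PCT: sum of weighted compliance over a group.

    Stays within [0, 100] only if the group's %PPA weights sum to 100.
    """
    return sum(weighted_values)


def total_practices(pft: float, pct: float,
                    ppcf: float = 70.0, ppcc: float = 30.0) -> float:
    """Assumed %PTP: 70% fundamental plus 30% complementary compliance."""
    return (ppcf * pft + ppcc * pct) / 100.0


if __name__ == "__main__":
    # Hypothetical practice with four questions and a 10% weight.
    pfi = individual_compliance([True, True, False, True])
    print(pfi, weighted_compliance(pfi, ppa=10.0))
    print(total_practices(pft=80.0, pct=50.0))
```

The per-practice weights themselves come from Table 6 and are not reproduced here, so the values used above are placeholders.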

2) Metrics to Evaluate Dimensions. A total of 4 dimensions were obtained: (i) tools, (ii) processes, (iii) culture, and (iv) people. Each dimension has a set of associated practices and a weighted percentage (%𝑃𝑃𝐴𝐷 = 25%). Table 8 shows the metrics to evaluate the degree of implementation of the dimensions.

Table 8 Metrics to assess the degree of implementation of dimensions.

| Id | Scale [%] | Equation | Variables |
|---|---|---|---|
| %𝑃𝐶𝐷𝐼 | [0,100] | | 𝒏: Number of fundamental practices; 𝒎: Number of complementary practices; %𝑷𝑷𝑪𝑭: 70% (see Table 6); %𝑷𝑷𝑪𝑪: 30% (see Table 6); %𝑷𝑭𝑰𝒊: Percentage of individual compliance with a specific fundamental practice (see Table 7); %𝑷𝑪𝑰𝒊: Percentage of individual compliance with a specific complementary practice (see Table 7). |
| %𝑃𝐶𝐷𝑇 | [0,100] | | 𝒏: Number of dimensions; %𝑷𝑷𝑨𝑫𝒊: Weighted percentage associated with a specific dimension; %𝑷𝑪𝑫𝑰𝒊: Percentage of individual compliance with a dimension. |
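As with the practice metrics, the equations of Table 8 are not available in this text. The following sketch shows one plausible reading, offered as an assumption only: the compliance of a dimension combines the mean compliance of its fundamental practices (70%) with the mean compliance of its complementary practices (30%), and the total is the sum of the four dimensions weighted at 25% each. The function names and the sample figures are hypothetical.

```python
def _mean(values: list[float]) -> float:
    if not values:
        raise ValueError("at least one practice is required")
    return sum(values) / len(values)


def dimension_compliance(pfi: list[float], pci: list[float],
                         ppcf: float = 70.0, ppcc: float = 30.0) -> float:
    """Assumed %PCDI: weighted mix of fundamental and complementary means.

    pfi: %PFI of the n fundamental practices linked to the dimension.
    pci: %PCI of the m complementary practices linked to the dimension.
    """
    return (ppcf * _mean(pfi) + ppcc * _mean(pci)) / 100.0


def total_dimension_compliance(pcdi: dict[str, float],
                               ppad: float = 25.0) -> float:
    """Assumed %PCDT: sum of each dimension's compliance times its weight."""
    return sum(ppad * value for value in pcdi.values()) / 100.0


if __name__ == "__main__":
    # Hypothetical compliance figures for one dimension.
    print(dimension_compliance(pfi=[100.0, 50.0], pci=[0.0, 100.0]))
    print(total_dimension_compliance(
        {"tools": 80.0, "processes": 60.0, "culture": 40.0, "people": 20.0}))
```

This sketch raises an error when a dimension has no fundamental or no complementary practices; how the model treats that case is not stated in the text.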

3) Metrics to Evaluate Values. A total of 4 values were obtained: (i) automation, (ii) collaboration, (iii) measurement, and (iv) communication. Each value has a set of associated dimensions and a weighted percentage (%𝑃𝑃𝐴𝑉 = 25%). Table 9 presents the metrics to evaluate the degree of implementation of the values.

Table 9 Metrics to assess the degree of implementation of values.

| Id | Scale [%] | Equation | Variables |
|---|---|---|---|
| %𝑃𝐶𝑉𝐼 | [0,100] | | 𝐧: Number of dimensions associated with the value; %𝐏𝐂𝐃𝐈𝐢: Percentage of compliance with a dimension associated with a value (see Table 8). |

4) Metrics to Evaluate DevOps. Table 10 presents the metric that measures the degree of implementation of DevOps in a software development company.

Table 10 Total degree of implementation of DevOps (own elaboration)

| Id | Scale [%] | Equation | Variables |
|---|---|---|---|
| %𝑃𝐶𝐷𝑒𝑣 | [0,100] | | 𝐧: Number of values; %𝐏𝐏𝐀𝐕𝐢: Weighted percentage associated with a specific value; %𝐏𝐂𝐕𝐈𝐢: Percentage of individual compliance with a value (see Table 9). |
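The equations of Tables 9 and 10 are likewise not available in this text. Under the assumption that the compliance of a value is the mean compliance of its associated dimensions and that the overall DevOps result is the sum of the four values weighted at 25% each, the top of the hierarchy can be sketched as follows. The function names and the sample numbers are hypothetical, and the actual association between values and dimensions is published separately by the authors.

```python
def value_compliance(pcdi_of_dimensions: list[float]) -> float:
    """Assumed %PCVI: mean %PCDI of the dimensions associated with a value."""
    if not pcdi_of_dimensions:
        raise ValueError("a value needs at least one associated dimension")
    return sum(pcdi_of_dimensions) / len(pcdi_of_dimensions)


def devops_compliance(pcvi: dict[str, float], ppav: float = 25.0) -> float:
    """Assumed %PCDev: sum of each value's compliance times its 25% weight."""
    return sum(ppav * value for value in pcvi.values()) / 100.0


if __name__ == "__main__":
    # Hypothetical compliance per value, only to exercise the functions.
    pcvi = {
        "automation": value_compliance([90.0, 90.0]),
        "collaboration": value_compliance([80.0, 60.0]),
        "measurement": 50.0,
        "communication": 30.0,
    }
    print(devops_compliance(pcvi))
```

The final percentage can then be classified with the scale of Table 5 to report an overall implementation level.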

IV. EVALUATION

A. Focus Group Protocol

The procedure to form the focus group followed the guidelines defined in [85], which proposes 5 phases: (i) planning, (ii) recruitment, (iii) moderation, (iv) analysis and report of results, and (v) limitations. To conduct the focus group, a questionnaire that aimed to assess the suitability, completeness, ease of understanding, and applicability of the metrics model was designed.

1) Planning. During this phase, the general goal of the focus group and the research objective were defined. Subsequently, the materials and procedures necessary to carry out the discussion session were identified. The general goal was to learn the perceptions, opinions, and suggestions of the focus group participants. The research objective was to identify possible improvement actions or suggestions from DevOps experts regarding the degree of acceptance or rejection of the suitability, completeness, ease of comprehension, and applicability of the model in software development companies. The materials were a questionnaire, a work agenda, a protocol structure, and the proposal to be evaluated.

2) Recruitment. The research group defined the profile of the attendees with the aim of choosing people with the necessary experience and knowledge of DevOps. As a result, the participants had to meet the following criteria: be an active professional in industry or academia, have knowledge and experience in the definition or application of agile approaches, and have at least one year of experience working with DevOps. Based on these criteria, 15 potential participants were invited, of whom 14 accepted.

3) Moderation. The debate session took an hour and a half, and the agenda was: (i) thanks to the participants for attending; (ii) presentation of the goals of the focus group; (iii) presentation of the metrics model; (iv) discussion of the observations and suggestions identified by each of the participants; and (v) completion of an online form to collect the opinion of each participant. The activities were coordinated by a moderator, who ensured that the interventions of the participants stayed within the objectives and scope of the focus group, and a rapporteur, who recorded the perceptions, suggestions, and comments of each participant. At the end of the discussion session, the participants were asked to fill in a form with 17 questions answered on a Likert scale [86] with 5 possible values: (1) Very bad, very dissatisfied; (2) Bad, slightly satisfied; (3) Good, sufficient, adequate, somewhat satisfied; (4) Fairly good, adequate, satisfied; and (5) Very good, very adequate, very satisfied. Additionally, there were two open questions that allowed the participants to propose adjustments to the process and make additional comments. Each question is related to one or more of the following criteria: comprehensibility, applicability, suitability, and completeness. According to the distribution of the questions, P1-P3 evaluate the comprehensibility of the proposal; P4-P5 and P16, its applicability; P5-P14, its suitability; P8-P17, its completeness; and the open questions P18 and P19 evaluate all aspects. Table 12 presents the details of the questions.

Table 12 Focus group results.

Note: The acronyms are in Spanish.
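The distribution of closed questions across criteria described above can be used to summarize the Likert answers per criterion. The sketch below is an illustration only: the question ranges are those stated in the text (including their overlaps), whereas the use of the median as the summary statistic, the function name, and the sample responses are assumptions and do not reproduce the focus group data.

```python
from statistics import median

# Question ranges as stated in the text; some questions count for two criteria.
CRITERIA = {
    "comprehensibility": [1, 2, 3],
    "applicability": [4, 5, 16],
    "suitability": list(range(5, 15)),   # P5-P14
    "completeness": list(range(8, 18)),  # P8-P17
}


def median_by_criterion(responses: list[dict[int, int]]) -> dict[str, float]:
    """Median Likert score (1-5) per criterion over all participants.

    Each response maps a question number (1-17) to that participant's score.
    """
    summary = {}
    for criterion, question_ids in CRITERIA.items():
        scores = [r[q] for r in responses for q in question_ids]
        if any(not 1 <= s <= 5 for s in scores):
            raise ValueError("Likert scores must be between 1 and 5")
        summary[criterion] = median(scores)
    return summary


if __name__ == "__main__":
    # Three hypothetical participants answering every question with 3, 4, and 5.
    sample = [{q: score for q in range(1, 18)} for score in (3, 4, 5)]
    print(median_by_criterion(sample))
```

The median is used here because Likert answers are ordinal; a different summary, such as the share of answers at 4 or above, would fit the same structure.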

4) Analysis and Report of Results. According to the results, the participants had a positive perception of the practices, dimensions, and values proposed in the model. They considered that the practices are sufficient and necessary to guarantee the evaluation of DevOps and that the proposed dimensions and values are coherent because they approach DevOps including aspects related to soft skills such as communication, cooperation, transparency, and teamwork. A high degree of agreement was observed regarding the applicability of the proposal. The participants stated that the elements in the model provide value to companies and open opportunities for improvement after an evaluation. They also stated that the defined weights are consistent and adequate according to the distribution of fundamental and complementary practices. In addition, a high degree of agreement was observed regarding the suitability of the proposal. According to the participants, the model has a solid mathematical basis that is consistent with the goals proposed for each practice. In this sense, the participants stated that the metrics can offer a result that allows companies to identify possible aspects to be improved. Finally, the participants expressed a favorable opinion of the mathematical rigor and the usefulness of the proposed metrics; however, improvement opportunities related to the applicability of the proposal in small and medium-sized companies were identified. These were considered and applied to produce a refined version of the proposal. The details of the improvement actions can be consulted at the following link: https://bit.ly/3pw659y.

B. Limitations

The limitations found during the focus group and the measures applied to mitigate them are presented below. Although all the participants met the selection criteria, they did not have the same level of knowledge and experience with DevOps; therefore, the metrics model was sent to all participants three weeks in advance so that they were aware of the context of the proposal. According to [85], a focus group should have at least 6 participants; therefore, 15 people were invited to reduce the possibility of not reaching the minimum number of attendees. At the beginning of the session, participation was low; the rapporteur and the moderator corrected this by asking questions and encouraging the participants to express their comments. Due to the number of participants, some of the comments made during the discussion were outside the scope of the proposed evaluation objectives; each such comment was clarified quickly so that the discussion could continue. The focus group followed biosafety protocols to avoid crowds: the session was held remotely, and permission was requested to record it in order to analyze observations and comments that could have been missed during the session.

V. CONCLUSIONS

The metrics model was the result of several stages executed in a structured and organized manner: (i) an SML on the evaluation of DevOps in software companies, (ii) the harmonization of the methodological solutions and tools identified in the SML, and (iii) the definition of a metrics model applying the GQM approach, which established the set of process elements to evaluate DevOps. The harmonization of the solutions and tools identified in the systematic mapping provided a much broader and clearer picture of the set of practices, dimensions, and values associated with DevOps through an organized, clear, and generic structure. The metrics model proposed in this article supports expert DevOps professionals and consultants who seek to assess the degree of implementation of DevOps practices, dimensions, and values. As a result, a company can quickly understand the degree of DevOps implementation at general and specific levels. The evaluation of the proposal through a focus group confirmed that the model is consistent and defines a set of clear metrics that evaluate aspects vital to the application of DevOps. Likewise, the focus group provided feedback through the recommendations of software engineering experts with experience in the definition, adoption, and application of DevOps processes, and helped identify aspects to be improved. Those aspects were analyzed to obtain a refined version of the proposal. Finally, the future work currently being addressed includes the execution of multiple case studies to evaluate the metrics model in operational environments, the construction of a tool to automate the application of the metrics, and the execution of additional exploratory studies to identify new proposals that can be integrated into the model.