In the context of metacognition research, at least three historical perspectives are recognized. They are distinguished by the theoretical positions that researchers take on whether metacognition corresponds to a set of general metacognitive skills or whether these skills develop as a function of the domain of the learning task. The first perspective postulates metacognition as a set of domain-general skills, closer to a metacapacity for the agency and regulation of one's own learning, that serves to understand and regulate one's own metacognitive activity regardless of the domain under investigation. Within this perspective lies the classical theory of general metacognitive awareness (Schraw, 2002), which posits a general metacognitive capacity that allows individuals to know and regulate their own learning process, regardless of the domain.

Historical Approaches to the Study of Metacognition

This section focuses on three historical approaches to research on metacognition. The first approach endeavors to explain the nuances of the micro-processes involved in metacognition while the second one attempts to understand how domain generality and specificity influence these micro-processes. Finally, the third approach employs more sophisticated statistical modeling techniques for a better measurement of various aspects of metacognition.

Classical conceptual approaches to metacognition are understood as the capacity that allows students to know themselves and consciously inspect their own cognition and its attributes such as how they think and with what information they think, then charting and then mentally following a path of action to achieve a learning goal (Winne & Marzouk, 2019). In this sense, Schraw (2002) has argued that cognition and metacognition differ in that cognitive skills are necessary to perform a task while metacognition is necessary to understand how the task was executed (Garner, 1987; Schraw, 2002). Similarly, Schraw's (2002) theory about general metacognitive awareness states that knowledge and metacognitive regulation are recognized as components of a multidimensional general domain that are teachable and malleable. Thus, metacognitive knowledge and regulation span a wide variety of subject areas and domains, with empirical evidence to support the conclusion that students demonstrate a general monitoring skill that evolves from tacit to informal, to formal actions of metacognition (Schraw & Moshman, 1995). Schraw (2002) postulated that cognitive skills were encapsulated within domains or subject areas, while metacognitive skills covered multiple domains, multiple tasks and exhibited flexibility in new learning tasks, including in domains that may have little in common (Schraw & Moshman, 1995).

Schraw (2002) initially considered that metacognitive knowledge was in principle domain or task specific and that, as students acquired more metacognitive knowledge in various domains, they might become more capable of constructing general metacognitive knowledge, such as understanding memory limitations (i.e., metamemory), and general regulatory skills, like selecting appropriate learning strategies (Schraw & Moshman, 1995). In a similar vein, Dunlosky and Tauber (2012), building on the approaches of Nelson and Narens (1990) and Schraw (2002), argued in their isomechanism theory for the existence of a general capacity for metamemory, according to which all metacognitive judgments made in different activities are based on the same cognitive processes. In general, this perspective has been more closely aligned with researchers working in the fields of experimental psychology and educational psychology. More recently, this position has been recognized in some applications within education, especially in relation to the uses of procedural knowledge. In this regard, for example, Winne and Azevedo (2014) define procedural knowledge as a set of skills that the student has about how to execute cognitive work to perform tasks effectively.

Consistent with this logic, a student can know how to build a first-letter mnemonic or how to optimize an internet search by forming literal strings such as phrases between quotation marks; all of these are procedures and strategies for learning that can be domain specific or, if they span multiple domains, domain general (Winne & Azevedo, 2014; Sawyer, 2014).

Some researchers in the field of metacognition examine differences in domain-general vs. domain-specific skills. These investigations have included samples from different domains of study such as psychology, education, medicine, and mathematics (Ariel, Dunlosky, & Bailey, 2009; Dunlosky & Rawson, 2012; de Bruin, Dunlosky & Cavalcanti, 2017; Hacker, Bol, Horgan, & Rakow, 2000; Hacker, Bol, & Bahbahani, 2008; Nietfeld, Cao, & Osborne, 2006; Rutherford, 2014). However, many of these studies explore the bases of metacognitive judgments, the capacity of metamemory, or the effects of different metacognitive interventions on monitoring accuracy. They do not focus on differences in the metacognitive abilities of knowledge and regulation across disciplines or domains, nor do they elucidate the implications that these purported differences may have for learning.

Finally, in a third approach, there are studies in which metacognition is treated as a complex, sophisticated, and hierarchical macroprocess, involving both universal mechanisms more related to general cognition and structural and functional differences within the specific domains of knowledge. These findings have allowed the formulation of new theoretical models of metacognitive monitoring, among which is the proposed general monitoring model (Gutierrez et al., 2016; Schraw et al., 2013; Schraw et al., 2014).

Theoretical Considerations

In this section, theoretical underpinnings to the study of metacognition are discussed. In general, the metacognitive monitoring literature groups different investigations that compare the metacognitive awareness that students have about their actual performance in relation to the expected performance in a criterion task (i.e., confidence in performance judgments). In this way, monitoring accuracy is understood as the degree that individuals judge their performance and how this judgment or belief compares with their actual performance (Nelson, 1996; Gutierrez & Schraw, 2015; Gutierrez & Price, 2017; Gutierrez de Blume, 2017).

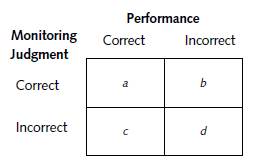

Previous investigations of metacognitive monitoring have used different measures to determine monitoring accuracy. Among them are absolute accuracy (e.g., G Index or raw frequencies in the 2 x 2 performance/judgment array in Table 1), which evaluates the differences between metacognitive judgments and actual performance, and relative accuracy (e.g., Gamma or d'), which measures the degree that judgments differentiate or discriminate performance (Serra & Metcalfe, 2009). In turn, the level of agreement between judgments and actual performance has also been evaluated using more sophisticated measures like sensitivity and specificity (Schraw et al., 2013; Schraw et al., 2014; van Stralen et al., 2009).

The prototypical format in metacognitive monitoring studies involves answering a test item and judging whether one's answer is correct or incorrect. Monitoring accuracy and error (bias) are calculated with different computational formulas that use the frequencies of two or more of the four mutually exclusive cells in a 2 x 2 data matrix like the one presented in Table 1.

According to research, cell a corresponds to correct performance that is judged to be correct, cell b corresponds to incorrect performance that is judged to be correct (more commonly referred to as overconfidence), cell c corresponds to correct performance that is judged to be incorrect (more commonly known as underconfidence), and cell d corresponds to incorrect performance that is judged to be incorrect (Gutierrez et al., 2016; Schraw et al., 2013; Schraw et al., 2014). Different statistical measures combine the four types of information from cells a to d in various ways to obtain an estimate of the accuracy or error of metacognitive monitoring in a set of test items (locally) or tests as a whole (one holistic global judgment). Thus, cells a and d correspond to accurate metacognitive monitoring whereas cells b and c are aligned with erroneous monitoring.
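As a minimal sketch of how the four cells can be combined, the Python function below computes the proportion of accurate judgments, the over- and underconfidence rates, and two of the measures named earlier. The formulas for the G index and for gamma in a 2 x 2 table are the commonly used definitions and are an assumption here, since the text does not state them; the function name and the example frequencies are hypothetical.

```python
def monitoring_measures(a, b, c, d):
    """Summarize a 2 x 2 performance/judgment table.

    a: correct performance judged correct
    b: incorrect performance judged correct (overconfidence)
    c: correct performance judged incorrect (underconfidence)
    d: incorrect performance judged incorrect
    """
    n = a + b + c + d
    if n == 0:
        raise ValueError("at least one judged item is required")
    concordant, discordant = a * d, b * c
    pairs = concordant + discordant
    return {
        "accurate": (a + d) / n,
        "overconfident": b / n,
        "underconfident": c / n,
        # Assumed definition: agreements minus disagreements over all items.
        "g_index": ((a + d) - (b + c)) / n,
        # Assumed definition: gamma for a 2 x 2 table; undefined when ad + bc = 0.
        "gamma": (concordant - discordant) / pairs if pairs else None,
    }


# Hypothetical frequencies for a 20-item test.
result = monitoring_measures(a=12, b=3, c=2, d=3)
print(result["accurate"], result["g_index"])  # 0.75 0.5
```

Returning `None` for gamma when no concordant or discordant pairs exist is a design choice of this sketch; it makes the undefined case explicit rather than raising a division error.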

Three metacognitive monitoring models are recognized according to the way that monitoring has been investigated. The first is a classic model derived from the double information flow theory of Nelson and Narens (1990), that typically employs the statistical measure Gamma (Goodman & Kruskal, 1954). In this model, monitoring is considered the link to the available information on the object level with the information that the individuals have about their own cognitive resources at a meta level. In this way, the information obtained through precise monitoring can be used at the meta level to control the subsequent performance of the learner. This model has been called by different researchers as the one factor model, given that its entire research tradition is based on the use of the gamma statistic (Gutierrez et al., 2016; Schraw et al., 2013; Schraw et al., 2014).

A second monitoring model is derived from the medical diagnosis process where the concepts of sensitivity (degree that a test detects disease) and specificity (degree that a test detects the absence of disease) are adapted (Mayer, 2010; Schraw et al., 2013). Thus, these measures have been employed in studies on metacognitive monitoring in educational settings, such that sensitivity assesses the accuracy of judgments on correct performance, while specificity measures the accuracy of judgments on incorrect performance (Schraw et al., 2013); this monitoring model has been named as two-factor because judgments about correct versus incorrect performance constitute two separate and independent aspects of the metacognitive monitoring process (Gutierrez et al., 2016; Schraw et al., 2013; Schraw et al., 2014).
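The two-factor idea can be sketched in Python using the cell labels introduced earlier (a and c hold correct performance, b and d hold incorrect performance). The ratios below follow the usual diagnostic-testing definitions and are an assumption about how the measures are operationalized; the function name and frequencies are hypothetical.

```python
def sensitivity_specificity(a, b, c, d):
    """Return (sensitivity, specificity) for a 2 x 2 performance/judgment table.

    sensitivity: share of correct answers that were judged correct, a / (a + c)
    specificity: share of incorrect answers that were judged incorrect, d / (b + d)
    """
    correct_items = a + c
    incorrect_items = b + d
    sensitivity = a / correct_items if correct_items else None
    specificity = d / incorrect_items if incorrect_items else None
    return sensitivity, specificity


# Hypothetical frequencies: 14 correct answers, 6 incorrect answers.
sens, spec = sensitivity_specificity(a=12, b=3, c=2, d=3)
print(round(sens, 3), spec)  # 0.857 0.5
```

In this hypothetical case the learner recognizes correct answers far better than incorrect ones, which is the kind of asymmetry a single combined index would hide.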

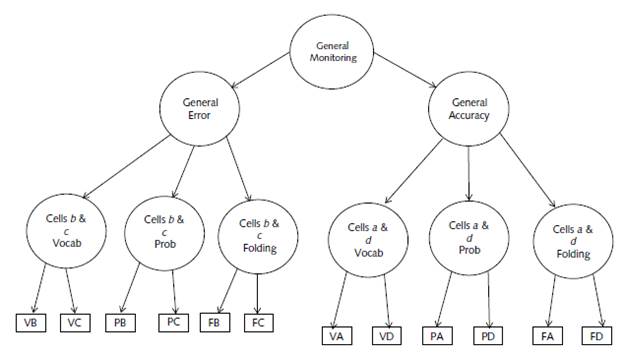

The third model is referred to as the general monitoring model. It assumes that monitoring occurs through two different, although inversely related, processes of accuracy and error, and that individuals arrive at metacognitive judgments in different ways. More specifically, the processes related to accurate monitoring judgments are different from those related to erroneous judgments and, as an equally important aspect, judgment errors are not unidimensional but rather divided into discordant judgments in relation to actual performance that lead to overconfidence (e.g., arrogance) and those that lead to underconfidence (e.g., insecurity) (Gutierrez et al., 2016; Schraw et al., 2013; Schraw et al., 2014).

Gutierrez et al. (2016) suggest that metacognitive monitoring can be better understood using this more nuanced general monitoring model, where cognitive performance would be the foundation (e.g., performance on specific vocabulary, probability, and paper folding tests). At the same time, this primary and domain-specific level can be included in a higher level of monitoring for correct performance and incorrect performance, which are assumed to be two different routes to mentally process correct and incorrect metacognitive judgments, regardless of the domain of study. These two levels are in turn included in a third level that represents general metacognitive monitoring (Gutierrez et al., 2016; Schraw et al., 2013; Schraw et al., 2014). The general monitoring model is depicted in Figure 1.

Figure 1 Hypothesized third-order CFA model of the 2 x 2 matrix of raw frequencies for vocabulary, probability, and paper folding tests. The first-order factors represent domain-specific accuracy (i.e., accurate judgments respectively) and domain specific error judgments (i.e., over- and under-confidence). The first letter in each of the manifest variables represents the name of the test (i.e., V = Vocabulary, S = Probability, and F = Paper Folding) and the second letter represents the specific cell in the 2 x 2 matrix.

This notion of a hierarchical framework of metacognitive processes has also been developed from findings in neurophysiology and the cognitive neurosciences by researchers who have studied the anatomo-functional correlates of metacognitive judgments in various domains such as memory and perception (e.g., Fleming et al., 2016; Morales et al., 2018). In this regard, Morales et al. (2018), for example, conducted an experiment examining the neural substrates involved when people made metacognitive judgments during perception and memory tasks, combined with performance and stimulus characteristics. When comparing patterns of functional magnetic resonance imaging (fMRI) activity while people evaluated their performance, they found evidence for both domain-specific and domain-general metacognitive processes. Multi-voxel activity patterns in the anterior prefrontal cortex predicted confidence levels in a domain-specific manner, while domain-general signals predicting confidence and accuracy were found in a widespread network in the frontal and posterior midline (Morales et al., 2018).

The Present Study

Research question and hypotheses. Based on the reviewed literature, the following research question guided the conduct of this study: What is the effect of domain (in undergraduate programs in education, psychology, and medicine) on the metacognitive skills (knowledge of cognition: declarative, procedural, and conditional; and regulation of cognition: planning, information management, debugging, comprehension monitoring, and evaluation) of undergraduate Colombian students?

Hypotheses: (1a) Like previous research (Gutiérrez et al., 2016; Schraw et al., 2014) that supported both the domain-general hypothesis and the domain-specific hypothesis, in the present study metacognitive awareness was expected to differ between students studying medicine and education and between those studying medicine and psychology. However, (1b) no significant difference was expected between undergraduates in education and those in psychology because these domains are more likely to draw on similar metacognitive skill sets compared to the metacognitive skills required in a domain such as medicine, a program of study traditionally recognized as one of the highest regarding academic demand and burden.

Method

Participants and Sample

In the present study, a convenience sampling approach was used. The research involved 507 Colombian university students who during the year 2019 were studying undergraduate programs in education (n = 156), psychology (n = 166), and medicine (n = 185) in two Colombian universities. Of the 507 students, 297 identified as female and 210 identified as male. All students met the following inclusion criteria: age between 20 and 30 years; enrollment as an undergraduate during either of the two semesters of the year 2019; absence of repetition of coursework; and signed informed consent for their involvement in the research study. The distribution of gender and academic program of study is typical of each of the two participating universities.

Materials and Instruments

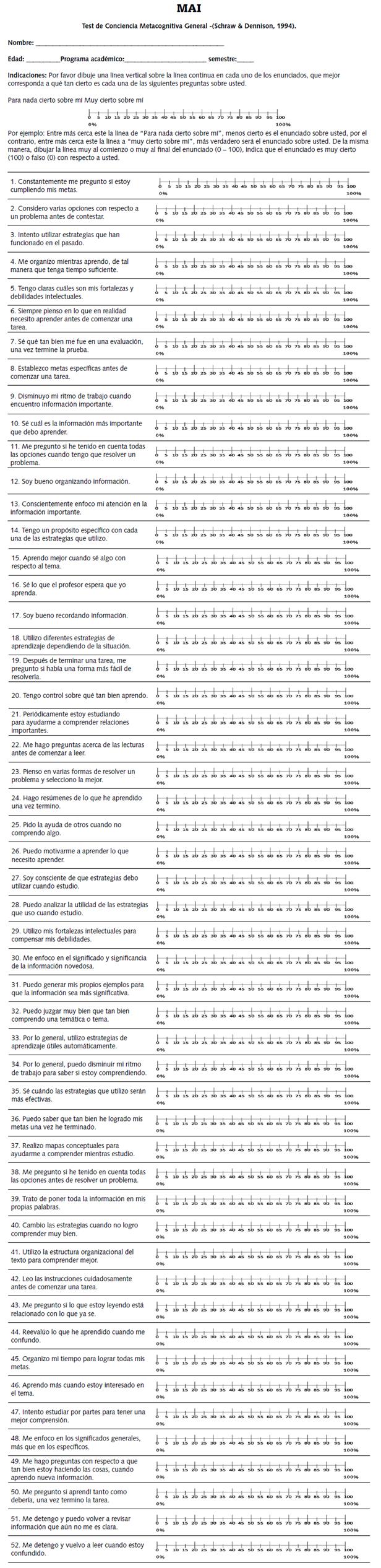

Metacognitive awareness inventory. The eight components of metacognition (that is, knowledge of cognition: declarative, procedural, and conditional; and regulation of cognition: planning, information management, monitoring, debugging, and evaluation) were measured using the Metacognitive Awareness Inventory (Spanish version; MAI, see Appendix). The MAI was originally developed and validated in English by Schraw and Dennison (1994), and was adapted for use with Spanish speakers in Colombian samples by Huertas, Vesga, and Galindo (2014) and by Gutierrez de Blume and Montoya Londoño (2020). The MAI is a 52-item instrument that measures metacognition through its constituent components. Sample items include: "I constantly wonder if I am meeting my goals" (monitoring); "I try to use strategies that have worked in the past" (procedural knowledge); "I reevaluate what I have learned when I get confused" (debugging strategies); and "I know how well I did in an assessment once the test is over" (evaluation).

The rating for each item was marked with a vertical bar on a continuous bipolar line from 0 to 100 (i.e., not at all true of me representing 0 and very true of me representing 100) that is 10 cm (approximately 4 inches) in length. This scoring scheme is superior to an ordinal Likert scale because it improves the reliability of the instrument by increasing the variability of the responses (Schraw & Dennison, 1994; Weaver, 1990). Each participant's score on an individual scale was obtained by summing the items that comprise that scale and dividing by the number of items (i.e., taking the average). Therefore, each participant had eight composite scores, one for each of the metacognition components.
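The composite scoring described above can be sketched in a few lines of Python. The item-to-scale key shown here is hypothetical and deliberately tiny (the actual MAI key assigns 52 items to eight scales and is not reproduced in this section), so the sketch illustrates only the averaging step.

```python
from statistics import mean

# Hypothetical item-to-scale key, for illustration only.
SCALE_ITEMS = {
    "declarative": [1, 2, 3],
    "planning": [4, 5],
}


def composite_scores(responses, scale_items=SCALE_ITEMS):
    """Average one participant's 0-100 item ratings within each scale."""
    for item, rating in responses.items():
        if not 0 <= rating <= 100:
            raise ValueError(f"item {item} has rating {rating}, outside 0-100")
    return {
        scale: mean(responses[item] for item in items)
        for scale, items in scale_items.items()
    }


# Hypothetical ratings for one participant.
participant = {1: 80, 2: 65, 3: 95, 4: 40, 5: 70}
scores = composite_scores(participant)
print(scores["declarative"], scores["planning"])  # 80 55
```

Averaging rather than summing keeps every composite on the same 0-100 metric even when scales contain different numbers of items.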

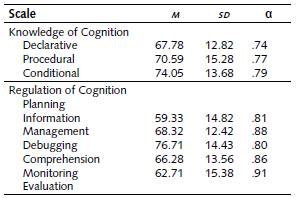

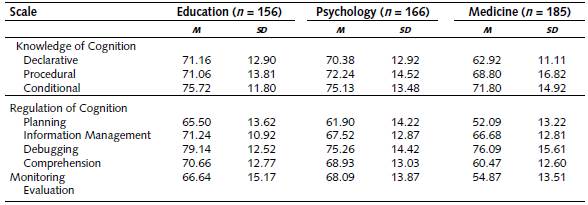

The Spanish version of the MAI employed in the present study was the version validated by Gutierrez de Blume and Montoya Londoño (2020). Their construct validation study with a sample of 528 undergraduate students demonstrated excellent psychometric properties. For the present study, the eight MAI scales demonstrated appropriate internal consistency reliability coefficients, ranging from .74 to .91 (see Table 2 for descriptive statistics of the sample and internal consistency of each individual scale). This indicates that participants provided consistent responses across the various dimensions of metacognition as measured by the MAI (Spanish version), suggesting low measurement error in the hypothesized constructs.
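For readers who want to see how an internal consistency coefficient of this kind is obtained, the sketch below computes Cronbach's alpha for one scale. It assumes that the reported α is Cronbach's alpha, which the text does not state explicitly, and the ratings are made-up values rather than study data.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for one scale.

    item_scores: list of participants, each a list of item ratings
    (same items, same order) for that scale.
    """
    n_items = len(item_scores[0])
    if n_items < 2 or len(item_scores) < 2:
        raise ValueError("need at least two items and two participants")
    # Sample variance of each item across participants.
    item_variances = [
        variance([row[j] for row in item_scores]) for j in range(n_items)
    ]
    # Sample variance of the participants' total scores.
    total_variance = variance([sum(row) for row in item_scores])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Made-up ratings: three participants answering a two-item scale.
ratings = [[1, 2], [2, 1], [3, 3]]
print(round(cronbach_alpha(ratings), 3))  # 0.667
```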

Table 2 Descriptive Statistics for the Sample and Internal Consistency Reliability Coefficients for the Eight MAI Scales

Key. M = mean; SD = standard deviation; α = internal consistency reliability coefficient. N = 507

Procedure

Students from the three undergraduate programs included in the study were contacted to solicit their participation. A separate meeting was held for each of the three academic programs during the year 2019, with the collaboration of the instructors in charge of the participants of each of these programs. This provided the space to contact the prospective participants and administer the instrument. In the meeting, students first learned the specifics of the research, and they read and signed the corresponding informed consent under the advice of one of the instructors. Subsequently, those who expressed interest and agreed to participate in the study completed the MAI (Spanish version) as a group. Administration of the instrument lasted approximately half an hour, and the research process adhered to the ethical guidelines for minimum-risk studies with human beings in the country where the data were collected (Ministerio de Salud República de Colombia, 1993).

Data Analysis

Before performing data analyses, data were tested for the necessary statistical assumptions and tested for univariate and multivariate outliers. Univariate outliers were reviewed for each group relevant to the present study separately. Inspection of box and whisker plots indicated that, of the original 509 complete cases, two cases were detected that were considered extreme univariate outliers in the education group (standard residuals > 3 standard deviations). Therefore, to avoid biases due to extreme scores that would otherwise undermine the reliability of the data, these two cases were removed from any subsequent analysis. Next, Mahalanobis Distance and Cook's D were used as metrics to evaluate multivariate outliers. None of the 507 remaining cases in the sample were considered multivariate outliers. Therefore, all main analyses continued with these remaining 507 cases with complete data. The data met the assumption of univariate normality (all skewness and kurtosis values were less than the absolute value of 2) and multivariate normality. The data also met the assumption of homogeneity of the error variance (the Levene test p-values were all greater than .12) at the univariate level and the homogeneity of the variance-covariance matrices (p-value of Box's M=.19) at the multivariate level.
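The univariate part of this screening can be illustrated with a short Python sketch. It flags values more than 3 standard deviations from the mean and checks that skewness and excess kurtosis fall within ±2. The use of simple z-scores (rather than standardized residuals from a fitted model), of population-based moment formulas, and of excess kurtosis are all assumptions of this sketch; the data are made up.

```python
from statistics import mean, pstdev


def standardized(values):
    """z-scores using the population standard deviation."""
    m, s = mean(values), pstdev(values)
    if s == 0:
        raise ValueError("values have zero variance")
    return [(v - m) / s for v in values]


def skewness(values):
    z = standardized(values)
    return sum(x ** 3 for x in z) / len(z)


def excess_kurtosis(values):
    z = standardized(values)
    return sum(x ** 4 for x in z) / len(z) - 3


def screen(values, z_cutoff=3.0, shape_cutoff=2.0):
    """Flag extreme cases and check the |skewness|, |kurtosis| < 2 rule."""
    z = standardized(values)
    return {
        "outlier_indices": [i for i, x in enumerate(z) if abs(x) > z_cutoff],
        "within_shape_limits": (
            abs(skewness(values)) < shape_cutoff
            and abs(excess_kurtosis(values)) < shape_cutoff
        ),
    }


# Made-up scale scores: twenty identical values and one extreme case.
print(screen([50] * 20 + [100])["outlier_indices"])  # [20]
```

Multivariate checks such as Mahalanobis distance require the covariance matrix of all eight scales and are outside the scope of this sketch.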

A one-way (domain: education, psychology, medicine) multivariate analysis of variance (MANOVA) was performed. The various metacognitive skills (knowledge of cognition: declarative, procedural, and conditional; and regulation of cognition: planning, information management, monitoring, debugging, and evaluation) served as dependent variables. Bonferroni's adjustment to statistical significance was used to control familywise Type I error rate inflation for univariate omnibus results (.05 / 8 = .006) and all post hoc comparisons. All effect sizes for the MANOVA results were reported as η². Cohen (1988) specified the following interpretive guidelines for η²: .010-.059 as small; .060-.139 as medium; and ≥ .140 as large.
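The two decision rules in this paragraph are simple enough to express directly. The Python sketch below computes the Bonferroni-adjusted threshold and maps an η² value onto the interpretive bands cited above; the function names and the "negligible" label for values below .010 are assumptions added for illustration.

```python
def bonferroni_alpha(alpha, n_tests):
    """Per-test significance threshold after Bonferroni adjustment."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return alpha / n_tests


def eta_squared_label(eta_sq):
    """Classify eta squared using the bands cited in the text."""
    if eta_sq >= 0.140:
        return "large"
    if eta_sq >= 0.060:
        return "medium"
    if eta_sq >= 0.010:
        return "small"
    return "negligible"  # label assumed; the text gives no name for this band


print(bonferroni_alpha(0.05, 8))  # 0.00625, reported as .006 after rounding
print(eta_squared_label(0.101))   # medium
```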

Descriptive

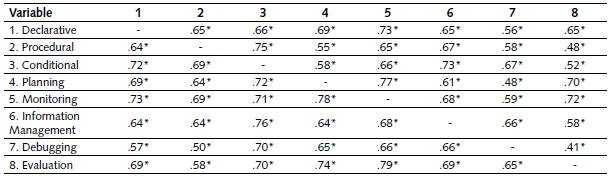

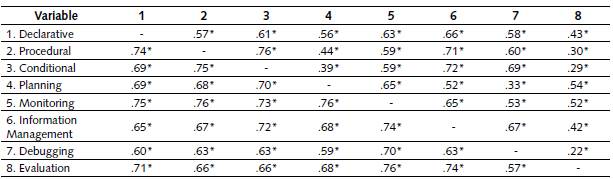

Descriptive statistics by group for the eight MAI scales are presented in Table 3, while Tables 4 and 5 present the zero-order bivariate correlations for the sample and by group, respectively.

Table 3 Descriptive Statistics by Group for the Eight MAI scales

Key. M = mean; SD = standard deviation. n = 507

Table 4 Zero-Order Correlation Matrix of Metacognitive Skills for the Sample and Education Students

* p < .01

Note. Correlations above the diagonal are for the sample and those below the diagonal are for the education students.

Table 5 Zero-Order Correlation Matrix of Metacognitive Skills for Psychology and Medical Students

* p < .01

Note. Correlations above the diagonal are for medical students and those below the diagonal are for psychology students.

Evidently, medical students consistently reported significantly lower scores on all eight components of metacognition compared to education and psychology students.

All correlation coefficients were statistically significant at the p<.01 level of significance, and all were in the theoretically expected (i.e., positive) direction. The correlation pattern between the three groups requires a more detailed examination, as they were different depending on domain of study. Interestingly, medical students consistently exhibited weaker correlation coefficients than education and psychology students, suggesting that further exploration of predictive patterns of metacognitive skills and cognitive and performance measures across domains is warranted.

Main Analyses

The MANOVA results revealed statistically significant differences between domains in the linear combination of dependent variables, multivariate F(16, 996) = 13.21, p < .001, η² = .175. The interpretation of the univariate results is presented below.

After controlling for inflation of the familywise Type I error rate using the Bonferroni adjustment to the p-value for multiple comparisons, the univariate findings were significant between groups for declarative knowledge, F(2, 504) = 28.31, p < .001, η² = .101; conditional knowledge, F(2, 504) = 5.00, p = .006, η² = .025; planning, F(2, 504) = 50.05, p < .001, η² = .166; monitoring, F(2, 504) = 34.88, p < .001, η² = .122; information management, F(2, 504) = 7.24, p = .001, η² = .028; and evaluation, F(2, 504) = 49.08, p < .001, η² = .163. The univariate results for procedural knowledge and debugging were not statistically significant, all p values ≥ .03.
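In a one-way between-groups design, η² can be recovered from the F statistic and its degrees of freedom, because F = (SS_between / df_effect) / (SS_within / df_error) and η² = SS_between / (SS_between + SS_within). The Python sketch below applies that identity to three of the reported univariate results; it is a reader-side check under the one-way assumption, not a description of how the values were originally computed, and small discrepancies are possible because the reported F values are rounded.

```python
def eta_squared_from_f(f_value, df_effect, df_error):
    """Eta squared for a one-way design, from F and its degrees of freedom."""
    effect = f_value * df_effect
    return effect / (effect + df_error)


# Reported univariate results: (F, reported eta squared), with df = (2, 504).
reported = {
    "declarative knowledge": (28.31, 0.101),
    "planning": (50.05, 0.166),
    "evaluation": (49.08, 0.163),
}
for scale, (f_value, eta_reported) in reported.items():
    eta = round(eta_squared_from_f(f_value, 2, 504), 3)
    print(scale, eta, eta == eta_reported)
# declarative knowledge 0.101 True
# planning 0.166 True
# evaluation 0.163 True
```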

Post hoc pairwise comparisons for statistically significant dependent variables are explained next. The pattern of pairwise differences was relatively consistent in that the mean differences were significant between students of medicine and education and students of medicine and psychology, but not between students of education and psychology. This was the case for declarative knowledge, conditional knowledge, comprehension monitoring, and evaluation. However, for planning and information management, there were also significant differences between education and psychology students, where the former group reported significantly higher mean scores than the latter group. Finally, overall, medical students reported the lowest mean scores on the eight metacognitive components compared to the other two groups (see Table 3 for descriptive statistics by group).

Discussion

All the correlations were statistically significant and in the theoretically expected direction, which supports the factorial structure of the classic components of metacognition, focused on knowledge and regulation, from Schraw's original studies through some of his latest research (Gutierrez et al., 2016; Schraw & Dennison, 1994; Schraw, 2002; Schraw et al., 2013; Schraw et al., 2014). In general, statistically significant between-groups differences were found for metacognitive knowledge (declarative and conditional) and regulatory skills (planning, monitoring, information management, and evaluation). However, it should be noted that no significant differences were found for procedural knowledge and debugging skills. The significant differences consistently separated medical students from education and psychology students. Likewise, no differences were found in declarative and conditional knowledge, monitoring, and evaluation between education and psychology students. This finding may be due to the way that the Western educational system is focused on the development of language skills, the basis of declarative knowledge, an aspect that seems common in the training for careers focused on the social sciences and different from what occurs in the health sciences, as is the case of the medical undergraduate program, which is focused on what has been considered the medical model.

In general, studies on metacognition have not delved into the exploration of differences in metacognitive knowledge and metacognitive regulation processes across domains of study, with a few exceptions. Exceptions include studies of specific experimental learning situations in certain disciplinary fields, such as those that have addressed metacognition and its relations with problem solving in mathematics, medicine, and history, at an individual level and in a shared perspective with intelligent tutors (Hurme, Järvelä, Merenluoto & Salonen, 2015; Lajoie, Poitras, Doleck & Jarrell, 2015; Poitras, 2015; Sáiz-Manzanares & Montero-García, 2015), and studies of the role of metacognitive awareness in the listening strategies typical of listening competence in language students (Rahimi & Abedi, 2015). Thus, the investigation of differences in the central components of metacognition, namely metacognitive knowledge and metacognitive regulation, constitutes a novel endeavor, as the existence of differences in metacognitive skills as a function of domain is shown to be a ripe avenue of investigation.

The present study contributes empirical evidence in the development of this conclusion by providing findings that support the metacognitive differences in specific domains (i.e., education, psychology, and medicine), a finding that is consistent with the metacognitive differences found in studies that used cognitive tasks of vocabulary, probability, and paper folding, previously reported since the initial formulation of the general monitoring model (Gutierrez et al., 2016; Schraw & Dennison, 1994; Schraw, 2002; Schraw et al., 2013; Schraw et al., 2014). In this series of studies, Schraw and colleagues examined the extent that metacognitive monitoring is consistent across domains (more commonly known as the domain-general hypothesis because metacognitive monitoring is not domain dependent and instead operates as a general set of monitoring skills that transfer and function across different domains) or is domain specific (more commonly known as the domain-specific or encapsulated hypothesis because metacognitive monitoring skills are domain specific and, therefore, do not transfer or function similarly across domains).

In their last two investigations, Schraw and his associates posit the argument that perhaps metacognitive monitoring is a combination of domain-specific and domain-general hypotheses (Gutiérrez et al., 2016; Schraw et al., 2014). They argue that metacognitive monitoring begins as a domain-specific skill early in development and progressively becomes more general in adolescence and, especially, in adulthood. This conjecture is certainly consistent with modern conceptualizations of the theory of self-regulated learning (Bandura, 2006; Boekaerts, 1999; Zimmerman, 2000). In the present study, medical students reported lower scores in the eight components of metacognition evaluated compared with students from other undergraduate programs such as education and psychology. This finding is interesting, given the high academic demand that training in the field of study of medicine represents in any country in the world. Medicine is a discipline of knowledge that some researchers recognize as a domain where students can demonstrate exhaustion as a result of the arduous demands of this domain (e.g., as a result of the stress related to academic work, the handling of a large volume of knowledge and information, the excess of tasks, as well as the exposure to the suffering of patients, which represents a very high cognitive and emotional demand), that may trigger feelings of incompetence in the student, with important consequences for learning (Dyrbye, et al., 2014; Phinder-Puente et al., 2014).

Like Schraw and colleagues (Gutiérrez et al., 2016; Schraw et al., 2014), this study also found support for both the domain-general hypothesis and the domain-specific hypothesis, albeit in more nuanced ways. Presumably, as social sciences, psychology and education share much more in common with each other than with medicine, and therefore metacognitive skills can be more easily transferred across those domains than in medicine. Although it has been documented worldwide that, in general, students with high intellectual capacity are admitted to study in medical programs, only a very small percentage of students are actually admitted. Once medical students begin their studies, their learning and performance possibilities vary widely, between profiles of students who achieve in-depth learning and high levels of competence in relation to their training, and those students who barely manage to pass and even experience academic decline and, ultimately, dropout (Abdulghani, 2009; Abdulghani, et al., 2014; Arulampalam, Naylor, & Smith, 2004).

The lower mean scores on all of the components of metacognition in medical students compared to the other two groups seem to demonstrate the need to incorporate the intentional and explicit teaching of metacognitive skills in undergraduate careers with such high academic demands, as would be the case for medical programs, to promote in medical students a more reflective attitude towards their own learning. This approach favors the development of a greater metacognitive awareness of the possibilities of knowing oneself well during learning episodes and of controlling the process of self-regulation of learning. In this regard, some researchers have argued that, although much of medical education involves immersion in clinical learning environments, students rarely, if at all, receive explicit instruction in their training on how to manage their own learning (Aukes et al., 2007; Westberg & Jason, 1994). In fact, some researchers have described the culture of teaching medicine as influenced by a formative action that can sometimes be unreflective, given that long hours of work and the burden of clinical service can limit the time practicing doctors have to reflect on their experience. This, in turn, limits the time that can be invested in training toward a new form of patient care, based on a process of constant self-evaluation and lifelong learning by the doctor in training (Aukes et al., 2007; Nothnagle, Goldman, Quirk, & Reis, 2010; Westberg & Jason, 1994).

In this sense, Quirk (2006) posits that, in the face of the challenges of medical training, medical educators should explicitly teach medical students metacognitive skills that allow them to: (a) define and prioritize their goals in relation to their studies; (b) anticipate and assess their specific learning needs relative to those goals; (c) organize (and reorganize) their experiences to meet their learning needs; (d) define their own perspective and recognize differences in the perspectives of others; and (e) continuously monitor their learning, to exercise control over their knowledge base, their problem solving, and their interactions with others, as well as over their own process of metacognitive reflection. Presumably, this should benefit their learning because real clinical practice is predicated on the ability to act quickly and decisively (Quirk, 2006; 2014).

It is logical that students in undergraduate psychology and education programs show higher scores on the eight components of metacognition, as these programs traditionally provide abundant training spaces for reflection on self and on practice, and for introspection in general, being disciplines focused on reflective thinking (Cooper & Wieckowski, 2017; Foong, Nor, & Nolan, 2018). In general, the development of reflective thinking, as the basis of metacognitive action, requires training spaces in which to make sense of experience in relation to oneself, to others, and to contextual conditions, as well as to develop the ability to monitor and plan future experiences and a greater awareness of learning (Fullana et al., 2016; Ryan, 2013).

Finally, differences were also found for planning and information management skills: education students reported significantly higher scores than psychology students on these measures, a finding that can plausibly be explained by the emphasis these skills are given in education programs. Preservice teachers, for instance, are trained from the very beginning in skills such as defining teaching objectives, searching for sources, planning, establishing didactic sequences, monitoring the state of their students' learning, and anticipating the evaluation process (Jiang et al., 2016; Stewart et al., 2007). At the same time, education students must plan and organize the sources and resources for their teaching, skills demanded by their teaching practice, which is conceived as reflective practice and which, depending on their particular undergraduate specialization, can begin in tandem with their academic preparation in the basic and initial training cycle. In this regard, some researchers have described how reflective practice has been included in initial teacher training programs since the 1980s as part of the international movement to reform teaching and improve the quality of education (Adams & Mabusela, 2014; Jiang et al., 2016; Lee, Irving, Pape, & Owens, 2015; OCDE, 1989; Robinson, Anderson-Harper, & Kochan, 2001). Currently, it is considered a compulsory competency in many preservice teacher training programs (Collin, Karsenti, & Komis, 2013; Richardson, 1990).

Implications for Theory, Research and Practice

The findings of the present study, although based on self-report measures, tentatively bear a meaningful relation to the theory of self-regulated learning and the general monitoring framework proposed by Gutiérrez et al. (2016). More specifically, they indicate that, insofar as the consistency of the theoretical statements depends on the task and domain, this relation is much more complex than initially hypothesized. The extent to which these theories generalize across all domains, are domain-specific, or reflect a combination of both hypotheses should be examined more fully by researchers. Therefore, research efforts should consider recruiting samples from a variety of domains (e.g., physics, biology, engineering, fine arts) and from different roles within those domains (e.g., clinician, teacher, researcher) to better understand to what extent metacognitive skills are domain-general or domain-specific. Finally, regarding practice, the findings suggest that additional applied experiments should be designed to examine the extent to which interventions aimed at improving metacognitive skills can be generalized across different learning domains.

Avenues for Future Research

The present study found partial support for both the domain-general hypothesis and the domain-specific hypothesis. Evidently, the results for education and psychology students (domain-general) were much more consistent than those found for medical students (domain-specific). However, the present study used only a self-report measure, albeit one whose psychometric properties have been supported across a wide range of languages and cultures. Future research should also employ cognitive or performance measures like those implemented by Gutiérrez et al. (2016) and Schraw et al. (2014) and explore how these work in the various domains under study. An interesting approach that could further support both hypotheses would be studies comparing blocks of domains (e.g., social sciences such as education and psychology compared to engineering or biology) to more fully determine the specificity and/or generality of metacognitive skills.

Likewise, it would be beneficial to investigate whether medical students who aspire to continue training in areas of clinical specialties (for example, family general practitioners, pediatricians, etc.) differ in their metacognitive ability compared to students who choose to become researchers (for example, epidemiologists, immunologists, etc.). Finally, additional research on the cross-cultural implications of our findings would also be useful.

Methodological Reflections and Limitations

It is important to be transparent and highlight the limitations of this research. First, the study employed only a self-report measure that, although it is considered the gold standard for measuring the construct and has been validated in different languages and cultures, including samples from the Colombian population, is only one measure. Second, it could be that, due to phenomena such as social desirability bias, participants were not completely open and honest about their true metacognitive abilities, a limitation that is most evident in self-report measures. Finally, some of the effect sizes reported in our MANOVA are considered small. Therefore, our findings and conclusions may not fully or adequately capture the true effects in the populations under study.

However, despite these limitations, it is important to highlight some strengths of the study. It employed a robust sample size, which increases the stability of the findings (i.e., they are not likely to be spurious). Likewise, the study was implemented in real classrooms, where these students carry out their learning process, rather than in the artificial environment of a laboratory, and it is therefore more ecologically valid. In sum, despite the limitations, the present study contributes to a better understanding of how metacognitive skills operate across different learning domains.

Conclusion

The results of the present investigation contribute to the empirical and, to some degree, cross-cultural validation of the multilevel metacognitive general monitoring model, to the extent that they support the perspective that metacognitive monitoring reflects a combination of the domain-specific hypothesis and the domain-general hypothesis (Gutiérrez et al., 2016; Schraw et al., 2014). In particular, the present study is of special relevance to the authors, as it constitutes the first research published in Spanish on the empirical support for the general monitoring model. It is certainly worth noting that psychology and education students reported similar mean scores on most of the eight metacognitive skills components, in support of the domain-general view of metacognitive monitoring. Equally important, medical students reported the lowest mean scores across all eight components of metacognition. The robust sample size lends credence to the findings of the present study, as they are not likely due to chance. It is hoped that the present study spurs additional debate and future research on the general monitoring model and on the domain-general versus domain-specific hypotheses.