Ingeniería y Desarrollo

Print version ISSN 0122-3461 / On-line version ISSN 2145-9371

Ing. Desarro. vol.29 no.1 Barranquilla Jan./June 2011

ARTÍCULO DE INVESTIGACIÓN / RESEARCH ARTICLE

A low cost touch-screen interface for image collage

Una interfaz touch-screen de bajo costo para collage de imágenes

Idanis Díaz Bolano*

Universidad del Magdalena, Colombia

* Assistant professor, Systems Engineering Program, Universidad del Magdalena (Unimagdalena). Doctoral student, University of Alberta (UofA). Master's degree in Systems Engineering, Universidad Nacional de Colombia, Medellín campus. idanis@ualberta.ca. Correspondence: telephone 1-780-4320401; address: 107 10805 79 Ave. T6E 1S6, Edmonton, Alberta, Canada.

Received: August 24, 2010

Accepted: February 28, 2011

Abstract

This paper presents the design of a low-cost interface based on touch-screen interaction. The interface was developed for rehabilitation purposes, such as stimulating cognitive development and motor skills as well as creativity and expressiveness. The touch-screen interaction design is based on a Wii Remote system. We compared two versions of the system: the first is based on touch-screen interaction and the second on mouse interaction. Although the Wii Remote has low resolution, the experimental results showed that users performed better with the touch-screen version when carrying out activities that require fine motor skills, such as delineating free-shape figures.

Keywords: touch-screen interaction, Wii Remote, image collage

Resumen

En este artículo se presenta el diseño de una interfaz de bajo costo basada en interacción touch-screen para crear collage de imágenes. La interfaz fue diseñada para propósitos de rehabilitación, tales como la estimulación de desarrollo cognitivo, habilidades motoras, creatividad y expresividad. La interacción touch-screen de la interfaz se basa en la utilización de un control remoto de Wii. Para evaluar la eficiencia de la interfaz, el diseño touch-screen fue comparado con un diseño basado en interacción con el mouse. A pesar de los problemas inherentes a la baja resolución de la cámara infrarroja del Wii Remote, los resultados experimentales obtenidos muestran que la versión del sistema touch-screen permitió un mejor desempeño para realizar tareas que requieren habilidades motoras finas, tales como delinear la forma libre de una figura.

Palabras clave: interacción basada en touch-screen, Wii Remote, collage de imágenes

1. INTRODUCTION

Touch-screen interaction offers several advantages over other common computer interfaces: a direct relationship between cursor and hand movement, intuitive and predictable interaction, and greater freedom of action than devices such as mice. It also reduces the ergonomic problems associated with mouse interaction and increases software accessibility. According to Paul Sherman [8]: "Software accessibility can be defined as a trait of software or other electronic information sources whereby it is usable by people with physical, cognitive or emotional disabilities." Mouse interaction requires fine motor control to press buttons while holding the mouse and dragging it across a flat surface. This operating mechanism entails ergonomic problems caused by the hand posture and the use of the thumb and little finger [9]. Touch-screen interaction, by contrast, is easier and requires a more natural hand motion from users.

Despite these advantages, touch-screen technology is not in wide demand. One of the main reasons is the relatively high initial cost of implementing such an interface. However, some alternatives that work on the basic principle of a touch-screen can be built with low-cost materials. One of them is the Nintendo® Wii remote control for Wii consoles. This device contains a low-resolution infrared (IR) camera that makes it possible to implement touch-screen interaction quickly and at low cost for the end user. This alternative has been explored in several projects with similar interaction [2], [5], [6].

Nevertheless, touch-screen interaction based on the Wii Remote IR camera presents some problems inherent to the camera's low resolution. A good combination of signal registration and programming is necessary to deal with noise and undesirable system behavior. In this paper we evaluate the performance of this low-cost touch-screen alternative, built with a Wii Remote IR camera and used to create image collages in environments for psycho-motor evaluation. The designed interface involves activities that require motor precision and eye-hand coordination, such as bordering the shape of a figure and dragging.

In Section 2 we illustrate the functionality of the designed interface. In Section 3 we describe hardware and software implementation details. In Section 4 we detail the experiments carried out to evaluate the interface performance. In Section 5 we analyze the data gathered during the experimental phase by applying statistical tests. Finally, in Section 6 we give some conclusions about this work.

2. INTERFACE FUNCTIONALITY

The purpose of the interface is to create image collages. Collage is the art of creating image compositions by arranging together pieces taken from a collection of images or photographs. In our interface, users can create pictures or scenes by segmenting figures from images and pasting them into a work area. Creating a collage is a task that requires imagination, creativity, visual acuity and visual perception, hand-eye coordination, and fine neuromotor skills. Collages are useful for assessing and treating learning disabilities and visual and neuromotor impairments [4], [10].

Creating scenes by cutting and pasting figures stimulates cognitive and motor development, creativity, and aesthetic and expressive attitudes through imagination and thinking.

3. INTERFACE DESIGN

The designed interface has two main characteristics: touch-screen interaction implemented with low-cost materials and the use of a segmentation technique. In this section, we give details about the implementation of the touch-screen interaction and describe the segmentation technique.

Touch-screen Interaction Design

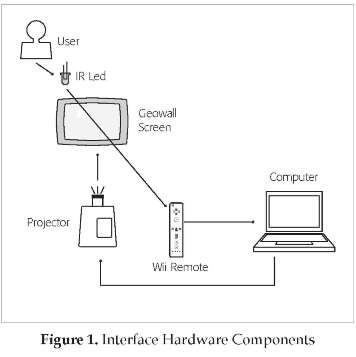

The hardware used to emulate touch-screen interaction consists of a Geowall screen, a DLP micro-mirror screen projector, a Wii remote, a computer, and a one-finger IR LED glove. Figure (1) illustrates the system's hardware components. The user interacts directly with the Geowall screen by using the one-finger glove. The glove contains the electronics needed to drive a 940-nm IR LED, which are illustrated in Figures (2) and (3).

The system depicted in Figure (1) works as follows: the Wii remote sensor receives the IR luminance signal when the LED is on and sends the position of the highest luminance peak to the computer over a Bluetooth® wireless link. The Wii Remote's primary sensor is a 940-nm IR pin-hole CCD camera whose low cost is due to high production volume. Unfortunately, the resolution specifications are not public because of proprietary agreements, but the suspected resolution is a (128 x 96)-pixel grid, with an integrated sub-pixel interpolation algorithm that increases the camera resolution to a (1024 x 768)-pixel grid [1]. A 940-nm infrared source in the camera's field of view is mapped onto the sensor's image coordinates. However, a further mapping from the device's image coordinates to the computer screen is necessary.

The IR signal position lies inside a rectangular area representing the IR camera image. The IR CCD integrates image-processing functionality that allows the Wii remote to send compact information about where the highest IR luminance peak occurs. Once the IR source position has been passed to the computer, the mapping from sensor image coordinates to computer screen coordinates is accomplished using a transformation obtained from a prior touch-screen calibration. In this way, the input signal position is transformed from relative coordinates on the Geowall screen to computer screen coordinates. The application interface then triggers an event according to the coordinate information.
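The text does not say which kind of transformation the calibration produces. A common choice in Wii Remote whiteboard setups is a four-point projective transform (homography), so the following is a minimal sketch under that assumption. The function names, the corner coordinates and the 1280 x 768 screen size are hypothetical and serve only as illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix, so row operations update both sides at once.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("calibration points are degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_homography(sensor: Sequence[Point], screen: Sequence[Point]) -> List[float]:
    """Return coefficients h0..h7 of the projective map from four point pairs."""
    if len(sensor) != 4 or len(screen) != 4:
        raise ValueError("exactly four calibration pairs are expected")
    a: List[List[float]] = []
    b: List[float] = []
    for (u, v), (x, y) in zip(sensor, screen):
        # x = (h0*u + h1*v + h2) / (h6*u + h7*v + 1), and likewise for y.
        a.append([u, v, 1.0, 0.0, 0.0, 0.0, -u * x, -v * x])
        b.append(x)
        a.append([0.0, 0.0, 0.0, u, v, 1.0, -u * y, -v * y])
        b.append(y)
    return solve_linear(a, b)


def sensor_to_screen(h: Sequence[float], u: float, v: float) -> Point:
    """Map one IR sensor coordinate to a screen coordinate."""
    w = h[6] * u + h[7] * v + 1.0
    return ((h[0] * u + h[1] * v + h[2]) / w,
            (h[3] * u + h[4] * v + h[5]) / w)


# Hypothetical calibration: where the four screen corners were seen by the sensor.
sensor_corners = [(100.0, 80.0), (900.0, 120.0), (880.0, 700.0), (120.0, 660.0)]
screen_corners = [(0.0, 0.0), (1280.0, 0.0), (1280.0, 768.0), (0.0, 768.0)]
h = fit_homography(sensor_corners, screen_corners)
```

During calibration the user would touch four known screen positions with the glove; afterwards every reported IR position is passed through `sensor_to_screen` before an interface event is triggered.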

The IR signals captured by the system are emitted by the user through the one-finger glove illustrated in Figures (2) and (3). The glove's design allows us to emulate a mouse right button: it contains a skin touch button that works as a switch to turn an IR LED on or off. When the user presses the skin touch button, it is interpreted as a single click. If the user presses it twice in succession, it is interpreted as a double click. When the button is released, it is interpreted as a mouse-up. When the user holds the button pressed and moves his/her finger, it is interpreted as a dragging action.
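The interpretation rules above can be sketched as a small state machine fed with one sample per sensor report. This is only an illustration: the class name, the event strings and the 0.4 s double-click window are assumptions that do not come from the paper, and a real implementation would also need to filter sensor noise.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

DOUBLE_CLICK_WINDOW = 0.4  # seconds; assumed threshold, not taken from the paper


@dataclass
class GloveInterpreter:
    """Turn IR-visible / IR-lost samples into mouse-like events."""
    pressed: bool = False
    last_press_time: Optional[float] = None
    last_pos: Optional[Tuple[int, int]] = None
    events: List[str] = field(default_factory=list)

    def update(self, t: float, pos: Optional[Tuple[int, int]]) -> None:
        """Feed one sample; pos is None while the LED is off."""
        if pos is not None and not self.pressed:
            # LED just turned on: the skin touch button was pressed.
            is_double = (self.last_press_time is not None
                         and t - self.last_press_time <= DOUBLE_CLICK_WINDOW)
            self.events.append("double-click" if is_double else "click")
            self.last_press_time = None if is_double else t
            self.pressed = True
        elif pos is not None and self.pressed:
            # LED still on: movement while pressed is a drag.
            if pos != self.last_pos:
                self.events.append("drag")
        elif pos is None and self.pressed:
            # LED turned off: the button was released.
            self.events.append("mouse-up")
            self.pressed = False
        self.last_pos = pos


glove = GloveInterpreter()
samples = [(0.0, (10, 10)), (0.05, (10, 10)), (0.1, None),
           (0.2, (10, 10)), (0.3, None),
           (2.0, (5, 5)), (2.1, (6, 5)), (2.2, None)]
for t, pos in samples:
    glove.update(t, pos)
```

Because the LED is only lit while the button is pressed, the cursor position is unknown between presses; the sketch therefore treats every reappearance of the IR source as a new press.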

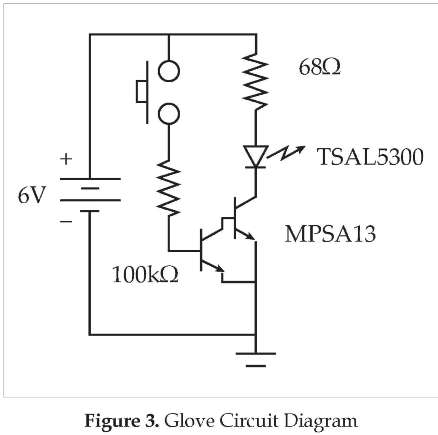

The glove's circuit diagram is shown in Figure (3). The IR source is driven by an NPN Darlington transistor (Fairchild Semiconductor MPSA13). The power supply consists of two 3 V alkaline batteries connected in series. The floating power supply poses no electric shock risk for the user. The purpose of the transistor is to simplify the switching mechanism that turns the IR source on and off. The high gain at the base-emitter input of the transistor allows us to use the natural skin resistance, about 1 MΩ, to turn on the IR LED when two contact points are pressed. The two contact points were integrated into the glove's design using a shirt button on the inner side of the first phalanx of the index finger. In this way the shirt button works as the skin touch button.

The driver resistor (68 Ω) was chosen because a rather high current must be driven through the IR LED to compensate for the light diffusion caused by the Geowall. Earlier tests with the IR source showed that lower IR luminance intensities are insufficient to be detected by the Wii sensor when the source light passes through the Geowall. A higher current in the IR LED increases the luminance level enough for the camera's sensor to detect it.
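As a rough order-of-magnitude check of the LED current, assume that the LED, the 68 Ω resistor and the transistor's collector-emitter path are in series across the 6 V supply. This topology is an assumption, since Figure (3) is not reproduced here. The forward voltage $V_F \approx 1.3$ V and the saturation voltage $V_{CE(\mathrm{sat})} \approx 1.0$ V are assumed typical values, not figures from the paper.

```latex
I_{\mathrm{LED}} \approx \frac{V_{\mathrm{supply}} - V_F - V_{CE(\mathrm{sat})}}{R}
  = \frac{6\,\mathrm{V} - 1.3\,\mathrm{V} - 1.0\,\mathrm{V}}{68\,\Omega}
  \approx 54\,\mathrm{mA},
\qquad
I_{\mathrm{LED}} < \frac{6\,\mathrm{V}}{68\,\Omega} \approx 88\,\mathrm{mA}.
```

Under these assumptions the resistor limits the current to a few tens of milliamperes, and the second expression is an upper bound that holds whatever the actual voltage drops are.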

Software Design

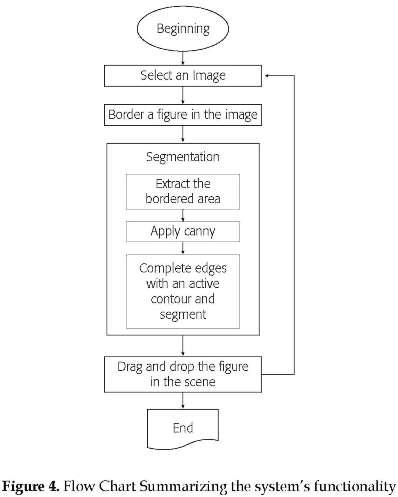

The system was designed for touch-screen interaction. The graphic interface consists of buttons and icons, each covering an area of the screen that the user clicks using the one-finger glove. The flow chart in Figure (4) summarizes the application's functionality. The Add Figure button opens a window showing the available images, from which the user can search and choose. Once an image has been chosen from the available database, the user has to perform a bordering task on it. This task requires the user to completely enclose a section of the image and demands fine motor coordination. Next, the image is segmented automatically by a segmentation algorithm based on active contours. In the following step, a drag-and-drop operation takes place: the figure is placed on the work area of the interface, and the user drags and drops it using the glove. The Wii remote is connected to the software component of this interface through the WiimoteLib API [7].

The segmentation algorithm that extracts sections from the images comprises three steps, as illustrated in the flowchart in Figure (4). In the first step, the region is defined by the user, who receives visual feedback from a bordering curve drawn over the image. Since the resolution of the Wii remote is very low, 128 x 96 pixels, the drawn border always contains gaps, which the algorithm closes automatically between the discrete (u, v) positions. In the second step, a Canny edge detector is applied to the extracted area in order to create the attractor space for an active contour. During the third step, a closed active contour approximates and improves on the edge map obtained in the previous step. Once the edge map has been fitted to the image section that the user wants to extract, we apply a region-growing technique and copy the result to a PictureBox control that the user can manipulate. The user is then able to drag, drop and resize the figures.
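The paper does not state how the gaps between discrete (u, v) samples are closed. One simple way, shown here purely as an assumption, is to join consecutive samples with Bresenham line segments and to connect the last sample back to the first. The function names are illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[int, int]


def bresenham(p0: Pixel, p1: Pixel) -> List[Pixel]:
    """Integer pixels on the segment from p0 to p1, endpoints included."""
    (x0, y0), (x1, y1) = p0, p1
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    pixels: List[Pixel] = []
    while True:
        pixels.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return pixels
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def close_border(samples: List[Pixel]) -> List[Pixel]:
    """Join consecutive samples, and the last sample back to the first."""
    closed: List[Pixel] = []
    for a, b in zip(samples, samples[1:] + samples[:1]):
        # Drop the end point: the next segment starts with it.
        closed.extend(bresenham(a, b)[:-1])
    return closed


# Four sparse samples of a square border become a gap-free closed curve.
border = close_border([(0, 0), (4, 0), (4, 4), (0, 4)])
```

The resulting pixel list is 8-connected, so it can serve as the closed initial region for the edge detection and active contour steps.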

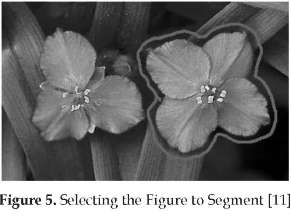

The active contour algorithm in this interface is based on the Chan-Vese implementation [3]. The working principle of contour algorithms is to evolve a parametric curve so that it fits the maxima of a gradient map, usually edges. Edges are the most informative feature for image segmentation. In this way, the edge-detector result is used to stop the evolving curve at the object contained in the region of interest marked by the user. Figures (5) and (6) show an original image with the border drawn by a user and the segmented result pasted into the work area.

4. EXPERIMENTAL DESIGN
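For reference, the model in [3] evolves a curve $C$ by minimizing the following energy, where $u_0$ is the image, $c_1$ and $c_2$ are the mean intensities inside and outside $C$, and $\mu, \nu, \lambda_1, \lambda_2$ are non-negative weights. The text does not detail how the interface couples this formulation with the Canny edge map, so the expression is given only as the base formulation of [3].

```latex
F(c_1, c_2, C) = \mu \cdot \mathrm{Length}(C)
  + \nu \cdot \mathrm{Area}(\mathrm{inside}(C))
  + \lambda_1 \int_{\mathrm{inside}(C)} \lvert u_0(x, y) - c_1 \rvert^2 \, dx \, dy
  + \lambda_2 \int_{\mathrm{outside}(C)} \lvert u_0(x, y) - c_2 \rvert^2 \, dx \, dy
```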

In this section we present the usability study carried out to evaluate whether the interface is learnable and whether its interaction design is efficient for image collage. In this study, we compared the performance of naive users against the developer's performance, and we also compared the interface's touch-screen interaction against mouse interaction. Three basic questions guided the experimental design:

i. Can users build a given scene in the same time that the interface developer does, without help?

ii. Do users need to consult the system manual more than once during the experiment in order to understand how the system works?

iii. Do users need more time to build a scene through the interface by interacting with their hands than by interacting with a mouse?

Apparatus

The interface was implemented on a Hewlett-Packard Pavilion dv6-series computer with a 2.20 GHz AMD Turion Ultra Dual-Core processor and 4 GB of RAM. Two versions of the system were run during the tests. The first version used the one-finger glove, as seen in Figure (2). The tests for this version were carried out in the Advanced Man-Machine Interface Laboratory at the Department of Computing Science at the University of Alberta. The interface was projected on a Geowall screen with an image area 50 cm high and 80 cm wide, preserving a 15:9 screen aspect ratio. The second version of the system used a V150 Laser Mouse (model number M-UAL120). The tests for the second version were carried out directly on the laptop display, which preserves the same 15:9 screen aspect ratio. We ensured that each participant was comfortably seated at a minimum distance of 20 cm from the screen and with enough arm space to allow mouse interaction.

Participants

A total of 10 adult volunteers between the ages of 18 and 45 were invited to participate in the experiments. None of the volunteers had any knowledge of the interface development process. The users were divided into two groups, corresponding to the two interaction devices (mouse and hand). The group that tested the touch-screen version of the system was composed mainly of students from the Department of Computing Science at the University of Alberta. The group that tested the mouse version was composed of people not related to Computing Science. All participants had normal or corrected-to-normal vision and no reported neuromotor impairment.

Procedure

At the beginning of each experiment we briefly explained to each participant how the system works and the purpose of the experiment. Then, we presented three goal-oriented tasks in the form of pre-elaborated collages containing different numbers of visual elements. Each collage had been prepared beforehand by cutting and pasting distinct figures using the mouse-interaction version of the interface. The three collages were given to each participant as samples on paper, ordered from first to third. The first collage contained five visual elements, the second contained eight, and the third contained thirteen.

During the experiment the users were asked to create the three scenes in the given order using the interface version under study. A set of images was organized in a folder accessible from the interface. Each user had to identify each visual element of the collage in the image set. The different collages contained distinct elements from the image set. While the user interacted with the system, we measured the time spent building each scene. For each scene, the time was counted from the moment the user added the first figure to the moment the user pressed the Save button. Since the users were unaware of the timing, we ensured with a gentle suggestion that the button was pressed immediately after each scene was completed. Additionally, we counted the number of times the user pressed the system's Help button.

Prior to the users' touch-screen experiments, the developer carried out the same experiment designed for the users. Its purpose was to provide a baseline against which to compare the naive users' performance. The time spent creating each scene was also recorded. This experiment was carried out in the same place and under the same conditions as the users' experiment.

5. RESULTS

To answer the three questions stated in Section 4, we analyzed the data gathered in each experiment. In this section we present this analysis.

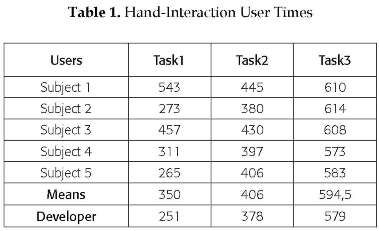

We applied the chi-square goodness-of-fit test. Our aim was to determine, at a 0.05 significance level, whether there is a difference between the times the developer took to perform the three goal-oriented tasks and the mean times of the users who tested the hand-interaction version of the interface. We took the users' mean times as the observed values and the developer's times as the expected values. Table 1 contains the times in seconds obtained by each user and, in the last row, by the developer, using hand interaction.

The critical value is $\chi^2_{0.05} = 5.99$ for two degrees of freedom (df = 2). The test statistic was $\chi^2 = 60.08$, which implies that there is a difference between the users' mean times and the developer's times. However, the largest difference is observed in the time spent on the first task. In fact, when only tasks 2 and 3 are compared, the test does not reject the hypothesis that the times are similar: omitting the first task, the critical value is $\chi^2_{0.05} = 3.84$ (df = 1) and the statistic is $\chi^2 = 2.43$.

According to these results, there were significant differences between the users and the developer in performing the first task. A possible reason is that, during the first task, users spent time familiarizing themselves with the set of images available to build the collages. Once the users knew where each figure was, their speed increased during the second and third tasks.
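The goodness-of-fit statistic used above is $\chi^2 = \sum_i (O_i - E_i)^2 / E_i$, with the users' mean time per task as $O_i$ and the developer's time as $E_i$. The sketch below shows the computation and the comparison against the critical values quoted in the text. The times in it are placeholders chosen for illustration and are NOT the values of Table 1.

```python
from typing import Sequence


def chi_square_statistic(observed: Sequence[float],
                         expected: Sequence[float]) -> float:
    """Pearson goodness-of-fit statistic: sum((O - E)^2 / E)."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Critical values at the 0.05 level, as quoted in the text (keyed by df).
CRITICAL_0_05 = {1: 3.84, 2: 5.99}

# Placeholder times in seconds for tasks 1..3; NOT the data of Table 1.
user_mean_times = [300.0, 260.0, 420.0]
developer_times = [200.0, 250.0, 400.0]

# All three tasks: df = 3 - 1 = 2.
stat_all = chi_square_statistic(user_mean_times, developer_times)
differs_all = stat_all > CRITICAL_0_05[2]

# Tasks 2 and 3 only: df = 2 - 1 = 1.
stat_last_two = chi_square_statistic(user_mean_times[1:], developer_times[1:])
differs_last_two = stat_last_two > CRITICAL_0_05[1]
```

With these placeholder numbers the pattern mirrors the one reported: the difference is significant when the first task is included and not significant when it is omitted.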

After evaluating the differences in performance between the users and the developer, we evaluated how many times the system manual was consulted during the experiment. In this evaluation we considered both versions of the interaction, touch-screen and mouse. We expected that a user would not consult the manual more than once, because a high number of consultations would imply that the user was having difficulty understanding how the system works. On average, users consulted the system manual 0.8 times, and each user consulted it between 0 and 2 times. Most consultations took place during the first task.

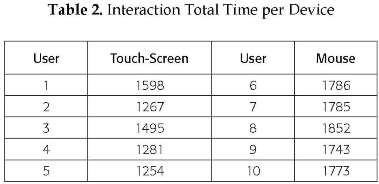

To compare user performance between the touch-screen version and the mouse version of the system, we used a single-factor, between-subjects ANOVA. The test was applied to two samples consisting of the total time that users spent accomplishing the three tasks with each of the two versions.

Before this analysis of variance, we applied the Shapiro-Wilk test to determine whether the data sets satisfy the required normality assumption. The p-values obtained in this test were 0.099 and 0.4012 for touch-screen interaction and mouse interaction respectively. These results indicate that the two small samples of recorded times satisfy the normality assumption.

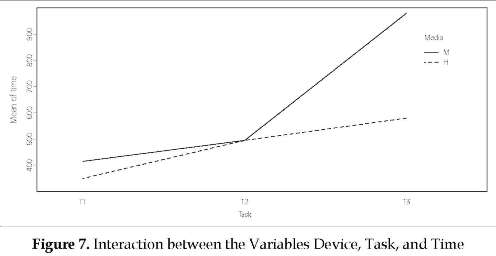

The analysis of variance gave a p-value of 0.0005, which indicates that the two user groups differ significantly in performance depending on the input device used. Figure (7) shows the interaction between the variables Device (H = hand, M = mouse), Tasks (T1 = Scene1, T2 = Scene2, T3 = Scene3) and the time spent by the users.

In Figure (7), we can observe that the users who interacted with the system using the glove and the screen were faster than the users who used the mouse. During the test we noticed that users of the mouse had some difficulty moving their hands while holding the right button pressed to enclose the figures.

However, the users who interacted with the touch-screen version had some problems enclosing the figures because of the low resolution of the Wii remote. Frequently, the drawn border line had large gaps. A possible reason for the time differences between the two groups is that the touch-screen version offered more freedom of motion and more precision for enclosing the figures, particularly figures with irregular shapes. Table 2 shows the differences between the total times spent by the users of the two system versions. The first column contains the time in seconds for the five users who tested the touch-screen version and the second column contains the time in seconds for the five users who tested the mouse version.
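A single-factor ANOVA compares the variance between group means with the variance within groups. The following sketch computes the F statistic and its degrees of freedom by hand for two groups of five users. The times are placeholders and are NOT the data of Table 2. Turning F into a p-value requires the F distribution; library routines such as `scipy.stats.f_oneway` (and `scipy.stats.shapiro` for the normality check) can be used for that.

```python
from typing import Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a single-factor design."""
    all_values = [x for g in groups for x in g]
    grand_mean = sum(all_values) / len(all_values)
    ss_between = 0.0
    ss_within = 0.0
    for g in groups:
        mean = sum(g) / len(g)
        # Variation of the group mean around the grand mean.
        ss_between += len(g) * (mean - grand_mean) ** 2
        # Variation of individual values around their group mean.
        ss_within += sum((x - mean) ** 2 for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Placeholder total times in seconds, five users per device; NOT Table 2.
hand_times = [900.0, 950.0, 1000.0, 1050.0, 1100.0]
mouse_times = [1400.0, 1450.0, 1500.0, 1550.0, 1600.0]
f_stat, df_b, df_w = one_way_anova([hand_times, mouse_times])
```

With two groups of five users each, the degrees of freedom are 1 (between) and 8 (within), which matches the design described in the text.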

6. CONCLUSION

In this work we evaluated the performance of an interface designed with touch-screen interaction using low-cost materials. In the application developed for this interface, users had to carry out tasks that require motor precision, such as bordering figures and dragging and dropping them in a work area. The system was developed to create image collages. The interface uses a Wii remote control as an IR sensor and a one-finger glove that emulates the function of the mouse's right button.

In spite of the problems that the low-resolution Wii remote introduces into our interface, we observed that users of the touch-screen version had more freedom of hand motion than users of the mouse version. By using the glove, users avoid the arm position and the use of the thumb and little finger required to move the mouse. Additionally, displaying the application on a bigger screen helps to obtain better performance times with the touch-screen version than with the mouse version.

The interface performance was evaluated by comparing the time users spent accomplishing three predefined tasks with the emulated touch-screen and with a version of the same system using the mouse. According to the results obtained, a touch-screen emulated with low-cost materials could be a good alternative for increasing the use of this technology, overcoming the ergonomic problems of the mouse and increasing software accessibility.

References

[1] Wiibrew wiki, 2010. http://wiibrew.org/wiki/Wiimote#IRCamera.

[2] Wiimote Project, 2010. http://www.wiimoteproject.com/index.php.

[3] T. Chan and L. Vese. "Active Contours without Edges". IEEE Transactions on Image Processing, vol. 10, pp. 266-277, 2001.

[4] M. Farrel, The effective teachers' guide to sensory impairment and physical disability, practical strategies, new directions in special educational needs, 1 ed., Oxon, Routledge, 2006, pp. 43-70.

[5] R. Francis. Wiimote Whiteboard Project for Beginners, 2009. http://www.wwp101.com/.

[6] T. Imbert. Liliesleaf Interactive Table- WiiFlash & Papervision3D, 2008. http://wiiflash.bytearray.org/.

[7] B. Peek. Managed Library for Nintendo's Wiimote, 2010. http://wiimotelib.codeplex.com/releases/view/21997.

[8] P. Sherman. Cost-Justifying Accessibility. Austin Usability, 2001, http://www.gslis.utexas.edu/~l385t21/AU_WP_Cost_fustifying_Accessibility.pdf.

[9] R. Whittle. In praise of thumb-operated optical trackballs with scroll wheels - and some warnings about the serious ergonomic problems of mice, 2001. http://www.firstpr.com.au/ergonormcs/.

[10] L. Willadino-Braga, A. Campos, The Child with Traumatic Brain Injury or Cerebral Palsy: A Context-Sensitive, Family-Based Approach to Development, United Kingdom, Taylor & Francis, 2006, pp. 55-98.

[11] Banco de Imágenes Gratuitas, 2010. http://joseluisavilaherrera.blogspot.com/2006/05/10-de-mayo-da-de-las-madres.html