1. Introduction

Laparoscopic surgery is a minimally invasive surgical technique in which small incisions are made in the abdomen and carbon dioxide is injected to create a working space into which the surgical instruments required for the procedure are inserted 1-2. Because of the risks involved, this type of procedure has been improved in different ways over the years. One of the most important improvements has been the introduction of robots, which gave rise to robot-assisted laparoscopic surgery, with notable gains in performance and precision compared with traditional laparoscopic surgery.

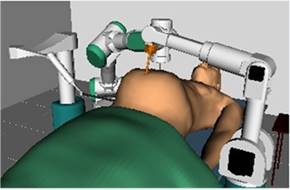

Given the advantages of adding robotic systems to surgical procedures, there has been growing interest in training surgeons to work in environments that deliver the highest possible performance with the latest advances in surgical technology. To meet this training need, surgical simulators have been used to train surgeons in the use of technological tools, routine practices and the most complex tasks of their profession in a safe, low-cost environment 4. Medical robotics is one of the most important lines of research of the Industrial Automation Group of the Universidad del Cauca, which has its own surgical simulator, the Surgical Robotics Virtual Simulator (SVRQ) 5. The SVRQ consists of three robots, two surgical robots and an endoscope holder, which make it possible to simulate a robot-assisted cholecystectomy under optimal conditions.

Although the inclusion of robots has brought significant advantages to laparoscopic surgery, it also has drawbacks, such as the need for a trained team to operate the complex systems, the high initial investment, the lack of tactile feedback during procedures, the occurrence of collisions between the robot arms, and the impossibility of changing the patient's position once the insertions have been made 3. Another problem inherent to these technologies is the limited field of view of endoscope-holder robots, in which the patient's abdominal cavity is observed through a camera attached to the robot's end-effector. This limitation is a major drawback because it entails a high risk of collisions between surgical instruments and/or injuries to the patient (damage to internal organs, nerves or major arteries), and it has therefore been the subject of several studies in this field.

One of the goals of greatest interest in the biomedical sciences, and by extension in medical robotics, has been to find a way to connect the human mind with machines. This now appears possible thanks to brain-computer interfaces (BCIs), which aim to streamline processes by minimizing the time a device takes to perform an action desired by the user. Although this type of interface was originally intended for other applications, it is of interest to test the results of implementing BCIs in surgical environments.

This project aims to assess the advantages and disadvantages of manipulating the endoscope-holder robot of the SVRQ surgical simulator with electroencephalographic (EEG) signals, compared with the conventional method based on a joystick (an input device for controlling the movement of a cursor on a computer), using usability as the evaluation parameter.

1.1 Related work

Over the last few years, different platforms have been developed for robot-assisted surgery. The most widely used is the Da Vinci surgical system, which allows surgeons to operate through small incisions in the patient's abdomen from a nearby console 6. Other options designed to facilitate the surgeon's work include Versius, a surgical robot inspired by the human arm that aims to reduce the surgeon's physical and mental effort 7; the SPORT single-port surgical robotic system, which has multi-articulated instruments and high-definition 3D visualization on a flat-screen monitor 8; and Raven, a system developed for teleoperation that allows the patient to be operated on from the same operating room or from anywhere in the world 9. Given the difficulty of practicing in real operating rooms, it is also important to take advantage of surgical simulators. Simulators for laparoscopic surgery can be of two types:

Physical simulators, which are closed boxes that emulate the patient, with ports for inserting instruments and cameras and monitors for viewing the interior of the box 10. These simulators are used to strengthen surgical skills and abilities.

Virtual simulators, in which the endoscopic camera, the surgical tools, and the organs and tissues with which the surgeon practices are digitally modeled, providing training that is closer to reality and, consequently, a faster learning curve 11. Examples of virtual simulators include SmartSIM 12, LapVR 13, LapSim® 14, Lap-X 15 and the SVRQ of the University of Cauca 5.

Regarding robotic endoscope holders in particular, examples include the EMARO (Endoscope Manipulator Robot), a pneumatically actuated robot with 4 DOF (degrees of freedom) controlled through horizontal and vertical movements of the surgeon's head, which are captured by a gyroscope placed on the surgeon's forehead 16; the Flex® system, used to visualize the pharyngeal region, rectum and colon 17; and the Monarch platform, which uses a joystick-controlled endoscope to explore hard-to-reach places inside the lungs 18. Researchers have also adapted techniques such as voice recognition 19, facial gestures 20, finger movements 21, foot movements 22, and eye movements and/or pupil variations 23 to medical robotics. Although all these developments aim to address the limited field of view and/or the manipulation of surgical assistant robots, each has had a different degree of success and its own drawbacks, so there is still no consensus on the method that best meets the current needs of this field. In this context, brain-machine interfaces, which belong to the group of so-called natural interfaces, may offer a solution to this problem. These interfaces allow applications and devices to be controlled by capturing the user's brain activity, without the use of mechanical devices.

Although different tools and equipment exist for establishing interfaces of this type, such as those shown in 24-27, one that stands out for its simplicity and low cost is the Emotiv EPOC headset, which captures electroencephalographic signals from which facial gestures and cognitive actions can be recognized. It has been used to control the movements of a virtual hand 28, to manipulate a prosthesis integrated with graphical environments such as LabVIEW 29, and in home automation applications such as the one shown in 30.

Because the Emotiv headset has been adapted to the needs of a variety of applications, it is an interesting alternative for manipulating a robotic endoscope holder in surgical environments. The present project set out to determine whether its use in a laparoscopic surgery application in a simulated environment is actually feasible.

2. Methodology

This section describes the procedure used to test the manipulation of the endoscope-holder robot with EEG signals: the simulated surgical procedure, the brain-computer interface and the headset used, and finally the integration of the headset as a command input for the endoscope-holder robot in the SVRQ surgical simulator.

2.1 The surgical procedure: cholecystectomy

The SVRQ (Surgical Robotics Virtual Simulator), developed at the University of Cauca, simulates a surgical environment in which a laparoscopic cholecystectomy is performed with three robots: a robotic endoscope holder (the Hibou robot) and two surgical robots (LapBot and PA-10) that manipulate the surgical instruments. All three robots are moved with a joystick. The scenario also simulates the collision and deformation of the stomach, liver and gallbladder when they come into contact with any of the instruments carried by the robots (Figure 1).

The SVRQ simulator uses Microsoft Visual Studio as the development environment, VTK (Visualization Toolkit, open-source software for processing and displaying 3D data) as the graphics engine, Qt (a cross-platform framework for creating graphical user interfaces) to design the graphical user interface, and CMake to configure the build of the software.

The surgical procedure simulated in the SVRQ environment is cholecystectomy, the surgical removal of the gallbladder. The gallbladder is a pear-shaped organ located below the liver that stores bile, which is produced in the liver and aids in the digestion of fats. Gallstones sometimes form inside the gallbladder, causing pain and leading to infections; this is what makes removal of the gallbladder necessary. Three surgical procedures are currently available to treat this condition: i) laparoscopic cholecystectomy, in which the gallbladder is removed with surgical instruments inserted through small incisions in the abdomen; ii) open cholecystectomy, in which the gallbladder is removed through an incision on the right side, below the rib cage; and iii) removal of the gallstones by endoscopy. The method used for simulation and experimentation in this project is laparoscopic cholecystectomy, whose phases are described below 31-32.

Phase 1: Exposure of the gallbladder and cystic pedicle.

A fundamental aspect of laparoscopic surgery is the placement of the incisions, for which different configurations exist.

After the incisions are made and the gas is injected to create the working space, the gallbladder and the cystic pedicle (formed by the cystic artery and the cystic duct) are exposed. Exposure is achieved by inserting a grasper through the incision in the right flank to retract the gallbladder.

Phase 2: Cystic pedicle dissection.

In this phase, a dissection is performed in which the cystic pedicle is separated from the tissues that cover it.

Phase 3: Cystic duct and cystic artery dissection.

Once the cystic pedicle is exposed, an endoclip (clip applier) is introduced through the trocar and used to place clips on the cystic pedicle to prevent leakage when it is cut. The cut is then made with scissors in the space between the clips.

Phase 4: Dissection of the gallbladder from the liver bed.

In this phase, the gallbladder is separated from the hepatic bed using an electrosurgical hook with cautery.

Phase 5: Gallbladder extraction.

Finally, using an endo-bag, the gallbladder is extracted.

2.2 Brain-computer interface

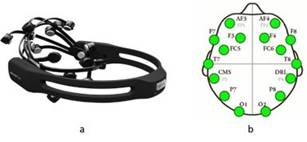

Brain-computer interfaces (BCIs) are computer-based systems that acquire brain signals, analyze them and translate them into output commands in real time; different acquisition methods can be used. This project used electroencephalographic (EEG) signals captured with the Emotiv EPOC headset 33, which has fourteen non-invasive electrodes plus two references, placed according to the 10-20 system defined by the IFES (International Federation of EEG Societies). Figure 2 shows the headset and the electrode distribution over the wearer's head.

To establish a BCI, software tools are also needed to manipulate and/or translate the signals captured by the headset. These tools are:

Emotiv Control Panel: the graphical user interface for managing user-specific settings, which also provides real-time information on the status of the headset. It has three environments for interacting with EEG readings: the Expressive Suite, whose avatar mimics the user's facial expressions; the Affective Suite, which reports changes in the emotions experienced by the user; and the Cognitiv Suite, which evaluates brain-wave activity in real time to detect the user's conscious intention to perform physical actions on a real or virtual object (e.g., moving an object forward, backward, left or right).

EmoKey: a tool that maps the EEG-based detections of the headset to keyboard input, according to rules previously defined in the EmoKey user interface 33.
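EmoKey rules are defined in its graphical interface, not in code. Purely as an illustration of the kind of rule-based mapping described above, the following Python sketch turns a detected cognitive action into a key press. It assumes that each detection arrives as an action label with an intensity between 0 and 1; the key bindings, the 0.3 threshold and the names `Rule` and `map_detection` are hypothetical and do not reproduce the EmoKey or SVRQ configuration used in this work.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    """One hypothetical mapping rule: emit `key` when `action` is detected
    with an intensity of at least `threshold` (0 to 1)."""
    action: str
    key: str
    threshold: float


# Illustrative bindings only; these are not the keys used by the SVRQ.
RULES: List[Rule] = [
    Rule("push", "w", 0.3),
    Rule("pull", "s", 0.3),
    Rule("left", "a", 0.3),
    Rule("right", "d", 0.3),
]


def map_detection(action: str, intensity: float,
                  rules: List[Rule] = RULES) -> Optional[str]:
    """Return the key to emit for a detection, or None if no rule fires."""
    for rule in rules:
        if rule.action == action and intensity >= rule.threshold:
            return rule.key
    # "neutral" and weak detections produce no key press.
    return None
```

With these assumed rules, a push detected at an intensity of 0.6 emits `w`, while the neutral state or a weak detection emits nothing, so the endoscope would stay still unless the detection is clear.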

These tools, and in particular the Cognitiv Suite, make it possible to carry out the training needed to control the robotic endoscope holder. For user training, the suite displays a 3D cube that serves as a visual aid, helping the user focus on the mental actions to be performed. Cognitive training follows a sequence of instructions that includes choosing the action to be trained, capturing the data and storing it.

2.3 Insertion of the Emotiv headset in the SVRQ environment

The robots in the SVRQ environment are normally manipulated with a joystick. However, the objective here is to control the endoscope holder with EEG signals from the Emotiv headset, so that it responds to the user's thoughts in real time. For this reason, both the Emotiv headset and the joystick are used in the simulation, and endoscope positioning is compared between the two devices. For joystick control, four buttons were assigned: perform the selected action (button 1), change the tool (button 2), change the robot (button 3) and delete a clip (button 4) (Figure 3).
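The four-button scheme can be modeled as a small state machine. The Python sketch below follows the button assignments used in the procedure (button 1 performs the action, button 2 changes the tool, button 3 changes the robot, button 4 removes the last clip), but the robot cycling order, the per-robot tool lists and the class name `ControlState` are assumptions made for illustration, not the SVRQ implementation.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical robot/tool inventory based on the instruments named in the
# procedure; the cycling order is an assumption, not the SVRQ's logic.
TOOLS = {
    "Hibou": ["camera"],
    "LapBot": ["grasper"],
    "PA-10": ["endoclip", "scissors", "retractor"],
}
ROBOTS = list(TOOLS)  # dict order: Hibou -> LapBot -> PA-10


@dataclass
class ControlState:
    robot_index: int = 0
    tool_index: int = 0
    clips: List[str] = field(default_factory=list)
    log: List[str] = field(default_factory=list)

    @property
    def robot(self) -> str:
        return ROBOTS[self.robot_index]

    @property
    def tool(self) -> str:
        return TOOLS[self.robot][self.tool_index]

    def press(self, button: int) -> None:
        if button == 1:    # perform the action of the selected tool
            if self.tool == "endoclip":
                self.clips.append(f"clip{len(self.clips) + 1}")
            self.log.append(f"{self.robot}:{self.tool}")
        elif button == 2:  # cycle to the next tool on the current robot
            self.tool_index = (self.tool_index + 1) % len(TOOLS[self.robot])
        elif button == 3:  # cycle to the next robot, starting at its first tool
            self.robot_index = (self.robot_index + 1) % len(ROBOTS)
            self.tool_index = 0
        elif button == 4:  # remove the most recently placed clip, if any
            if self.clips:
                self.clips.pop()
        else:
            raise ValueError(f"unmapped button: {button}")
```

Keeping the selected robot and tool as explicit state shows why the same button 1 can grasp, clip or cut depending on the current selection, which is consistent with the simulator displaying the selected instrument in its window.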

With the robot control mechanisms established and the simulation environment configured, the simulated cholecystectomy is carried out as described below. Apart from the positioning of the endoscope, all steps are performed with the joystick:

a) Exposure of the gallbladder, cystic duct and cystic artery

a.1. Position the endoscope over the gallbladder using the Hibou robot.

a.2. When using the headset, position the endoscope by mentally visualizing the trained translation actions until the gallbladder is located. Figure 4 shows the initial position of the camera when the simulation starts.

a.3. Once the camera is positioned, switch to the LapBot robot, whose surgical clamp is used to hold the gallbladder and move it to obtain an appropriate view of the gallbladder, the cystic duct and the cystic artery.

a.4. Change robot with button 3 of the joystick.

a.5. Position the LapBot robot over the gallbladder.

a.6. Grasp the gallbladder with button 1 and move the robot to reposition it.

b) Cystic duct and cystic artery dissection

b.1. Switch to the PA-10 robot, which carries the instruments needed to cut the cystic duct and artery, by pressing button 3 on the joystick.

b.2. Position the instrument on the duct, using the interface to select the appropriate position to place the endoclips.

b.3. Select the endoclip with button 2; the selected instrument and its name are shown in the upper right part of the simulator window.

b.4. Position the endoclip over the cystic duct; the endoclip rotates 90° when it is in the correct position to place the clips.

b.5. Place the clips in preparation for cutting the duct.

b.6. When the endoclip is in the indicated position, press button 1 to place the clip.

b.7. Once the first clip has been placed, the user can remove it by pressing button 4 if it has been placed incorrectly.

b.8. Place the second clip in the same way as the first. Figure 5 shows the two clips in place, ready for the cut.

b.9. To cut the cystic pedicle, manipulate the PA-10 robot and select the scissors with button 2.

b.10. Position the scissors between the two clips previously placed on the cystic pedicle.

b.11. Cut by pressing button 1. Once the cut has been made, the gallbladder can be dissected.

c) Gallbladder dissection

c.1. To separate the gallbladder from the liver bed with the integrated cautery, select the LapBot robot with button 3 on the joystick.

c.2. Move the LapBot forceps around the gallbladder to simulate the dissection.

d) Gallbladder extraction

d.1. To simulate gallbladder removal, select the PA-10 robot by pressing button 3.

d.2. Select the retractor from the list of instruments.

d.3. Place the retractor over the gallbladder and press button 1 to hold the gallbladder.

d.4. Move the joystick upwards to remove the gallbladder. Figure 6 shows the surgical environment after the gallbladder has been removed.

With the removal of the gallbladder, the basic steps of the laparoscopic surgery are complete, and the simulation ends at this point. In a real procedure, the surgeon would then withdraw the robots, manually or in a guided manner, and suture the incisions.

3. Results and discussion

This section describes the validation process and the results obtained after the cholecystectomy simulation was performed with the endoscope holder controlled by the headset and by the joystick.

3.1 Validation parameters

Since the work was carried out at the software level, the ISO/IEC 25000 family of standards, known as SQuaRE (System and Software Quality Requirements and Evaluation), was used; these standards provide a common framework for evaluating the quality of a software product. Specifically, the ISO/IEC 25010 standard (System and software quality models) was used. It describes the product quality model and the quality-in-use model, and defines several quality characteristics (functionality, performance, usability, reliability, security, maintainability, portability and compatibility). Of these, usability was used to validate this project, since the aim is to determine whether a system such as the proposed one can meet the needs of the surgical environment, complementing or replacing the existing command method. Usability comprises the following sub-characteristics: appropriateness recognizability (the ability to recognize its suitability), learnability, operability (the ability to be used), user error protection, user interface aesthetics and accessibility 34.

3.2 Test conditions

The objective of the test was to assess the potential of this EEG-based system as an interface for moving a virtual object, based on the users' perception of its use. The participants were selected to provide variety in age, sex and occupation (Table 1). Figure 7 shows one of the participants during the tests.

Table 1 Test parameters

| Parameter | Value |
|---|---|
| Participants | 10 |
| Average age | 29.7 years |
| Medical students | 2 |
| Engineering students | 6 |
| Non-students | 2 |
| Activity | Perform a cholecystectomy on the SVRQ simulator with the joystick and with the headset |
| Procedure | Training of cognitive actions with the headset, explanation of the procedure, performance of the procedure with each control, completion of a final survey |

Tests were conducted using a Dell Inspiron 620 computer with 4 GB of RAM and an Intel Core i5-2320 processor, a Trust GXT555 Predator joystick, and the Emotiv EPOC 16-channel headset.

The tests were carried out in two phases. The first was the training of the system with the user's cognitive signals, and the second was the test in the surgical simulator with the trained system. The simulator test itself also had two parts: one in which the procedure was performed with the headset (manipulating the robotic endoscope holder through the trained EEG signals), and another in which the same procedure was performed with the joystick, to provide a point of comparison for the validation based on the ISO/IEC 25010 standard.

3.3 Training protocol

The training was carried out taking into account the following steps:

Familiarizing the user with Emotiv technology: Briefly explain to the user the function of the headset and the purpose of the training.

Hardware and software setup for the test: Moisten the headset pads and place the headset on the user's head. On the test computer, open the Emotiv Control Panel application and create a new user profile with the user's name.

Train the cognitive actions in the following order: neutral, push, pull, right, left. For the expressive action of blinking, only the sensitivity is adjusted, since the software does not allow it to be trained. The first time each action is trained, it is performed with the animation, checking the movement of the cube; if the cube moves successfully, the training is repeated without the animation.

Evaluate the progress of the training: The Skill Rating function indicates how the user's skill develops during training and how well the system is learning from the user. A skill rating of at least 50 percent was chosen as the acceptance level, because at that level the system recognizes the signals acceptably; below it, the system is more likely to confuse the actions being trained.

End training.
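The acceptance rule of this protocol can be expressed as a simple check. The following Python sketch assumes that a skill rating in percent is recorded for each of the four movement actions, and treats neutral as a reference state that is not rated; the dictionary format and the function name `training_ready` are hypothetical, while the training order and the 50% threshold come from the protocol above.

```python
from typing import Dict, List, Tuple

# Training order from the protocol; blinking is not listed because only its
# sensitivity is adjusted in the software.
TRAINING_ORDER = ["neutral", "push", "pull", "right", "left"]
# Assumption: neutral is treated as the reference state and is not rated here.
RATED_ACTIONS = TRAINING_ORDER[1:]
SKILL_THRESHOLD = 50.0  # percent; acceptance level chosen in this study


def training_ready(skill_ratings: Dict[str, float]) -> Tuple[bool, List[str]]:
    """Return (ready, pending), where `pending` lists, in training order,
    the actions whose skill rating is still below the threshold.

    `skill_ratings` maps action name -> rating in percent, as read by the
    operator from the Skill Rating display (hypothetical record format).
    Actions with no recorded rating count as not yet trained.
    """
    pending = [a for a in RATED_ACTIONS
               if skill_ratings.get(a, 0.0) < SKILL_THRESHOLD]
    return len(pending) == 0, pending
```

Returning the pending actions in training order tells the operator which action to retrain next, rather than only whether the user is ready.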

3.4 Simulation protocol

Once training is completed, the second stage, the simulation of laparoscopic surgery, is carried out. It consists of:

Description and familiarization of the SVRQ simulator and the use of the joystick device.

Demonstration and practice of the following tasks: Placement of the endoscope (camera) visualizing the gallbladder; switching between the different robots; exposure of the gallbladder and cystic duct; placement of clips; cutting of the cystic duct; removal of the gallbladder.

Execution of the procedure by the participant, first with the joystick and then with the headset. The order was assumed not to influence the results; the joystick was tested first because the participant had just worn the headset for a long time during the training phase and was already showing signs of fatigue.

Completion of the survey.

3.5 Results obtained from the survey

The survey consisted of six questions considered adequate for validating the usability of the control method. Each participant completed it after performing the procedure with the joystick and with the Emotiv headset. The survey used to validate the method of manipulating the endoscope-holder robot was designed around the following subcategories:

Appropriateness recognizability (ability to recognize its suitability): Capability of the product that allows the user to understand whether the software is suitable for his or her needs.

Learnability: Capability of the product that allows the user to learn how to use it.

Operability (ability to be used): Capability of the product that allows the user to operate and control it with ease.

Accessibility: Capability of the product to be used by people with a range of characteristics, including disabilities.

The results obtained for each question are as follows:

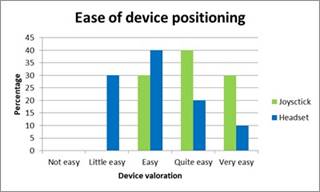

Question 1: How easy was it to position the endoscope to perform the surgery simulation?

This question aims to identify the method that best suits the user's needs, since a simpler method is more feasible to use. Figure 8 shows that most respondents found it easier to position the endoscope with the joystick.

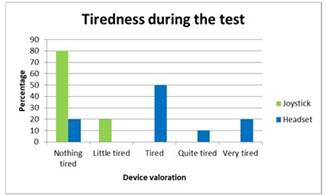

Question 2: How tired did you feel after doing the surgery simulation?

Ergonomics is very important in an environment such as this: given the duration of each procedure, the control system must keep the user as comfortable as possible. Figure 9 shows that the joystick produced the least fatigue, while half of the respondents reported a moderate level of fatigue after using the headset.

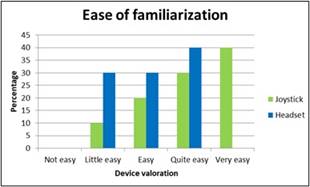

Question 3: How easy was it to become familiar with the endoscope's controls?

Ease of familiarization is related to the learning curve users must go through to operate the system correctly. Although there was no marked difference between the two tools (the joystick and the headset), and respondents became familiar with the headset quite easily, familiarization was still easier with the joystick because it is a more commonly used device (see Figure 10).

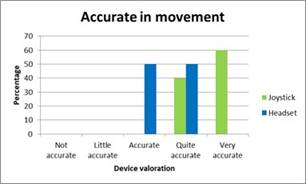

Question 4: How accurate did the movement of the endoscope feel when you positioned it?

Robotics in surgical environments seeks to increase the precision and accuracy of movements during surgical interventions, so the precision of the control should be as high as possible. In this respect, respondents considered the joystick the more precise control method (see Figure 11).

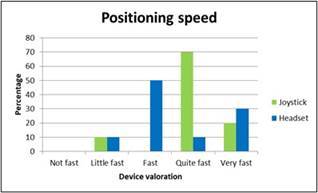

Question 5: How quickly were you able to reach the desired position of the endoscope?

The time between issuing a command with the joystick or the headset and seeing its effect reflects the response speed of the system, and this latency should be minimal. Both methods were considered fast, with no marked difference of opinion among users on this factor (see Figure 12).

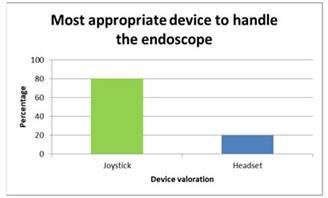

Question 6: In your opinion, which of the two controls is more appropriate for manipulating the endoscope in laparoscopic surgery?

Eighty percent of the participants considered the joystick the best alternative for manipulating the robotic endoscope holder in a surgical environment, while the headset was preferred by 20% (see Figure 13).

3.6 Analysis of the results obtained

The survey showed that 100% of the users rated positioning the endoscope with the joystick as easy, quite easy or very easy, compared with 70% for the headset. The headset control is therefore easy to use, but not to the same extent as the joystick. This may be because users could not remember exactly how a given action had been trained, so that imagining or thinking the action produced a delayed response, or because in some cases the system did not react since it did not recognize the way in which the user was mentally visualizing the action.
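With ten participants, each answer corresponds to 10 percentage points, so the percentages reported in this section are simple proportions of respondents. The Python sketch below shows the calculation; the positive categories are those named in the text, while the function name `positive_share` is hypothetical and no study data are included.

```python
from typing import Iterable

# Answer categories counted as positive in the analysis of the survey.
POSITIVE = {"easy", "quite easy", "very easy"}


def positive_share(responses: Iterable[str]) -> float:
    """Percentage of responses that fall in one of the positive categories.

    Matching ignores case and surrounding whitespace.
    """
    answers = [r.strip().lower() for r in responses]
    if not answers:
        raise ValueError("no responses to evaluate")
    positive = sum(1 for a in answers if a in POSITIVE)
    return 100.0 * positive / len(answers)
```

For example, seven positive answers out of ten give 70%, which is the kind of figure reported above for the headset.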

An important variable in this type of implementation is fatigue, which in the case of the headset may be due to several factors:

Some participants performed the training just before starting the cholecystectomy simulation, which may have caused them to feel fatigued with the headset from the start of the simulation.

Some participants became frustrated when they could not perform the training exercises; at times this frustration was great enough to prevent them from continuing to perform the tests correctly when these required long periods of time.

The headset adapts to the shape of each wearer's head, but for some participants the electrodes were observed to exert more pressure, which over long periods could cause discomfort.

These three factors lead to a loss of concentration and therefore reduce the objectivity of the respondents' answers, so their effects should be minimized as far as possible. Most participants had never seen or worn the headset and had no prior experience with it: when asked whether they were familiar with the Emotiv headset or similar devices, the majority said they were not, whereas the same majority said they were familiar with the joystick. Consequently, when asked how easy it was to become familiar with the controller, 90% of the participants chose easy, quite easy or very easy for the joystick, compared with 70% for the headset. Regarding accuracy, 40% of the participants rated the headset as quite accurate and 50% as accurate, which suggests that its accuracy of movement is acceptable but not the highest possible. In addition, poor training can lead to confusion between cognitive actions, which reduces the accuracy of action detection and, consequently, of the endoscope camera's movement. In contrast, most users clearly rated the joystick as very precise.

Finally, interpreting the respondents' answers also requires considering factors such as the participant's skill in performing the test (i.e., how quickly the user manages to position the endoscope), the quality of the cognitive-action training, and the speed of the system's response.

4. Conclusions

This article presented the integration of the Emotiv headset into a surgical simulator, with the aim of using electroencephalographic signals to move an endoscope during a simulated gallbladder removal. The simulator used is the SVRQ, built at the University of Cauca with the VTK graphics engine. It consists of three robots: a robotic endoscope holder and two surgical robots. The endoscope holder can be moved either with a joystick or by the user's thoughts. The steps of a cholecystectomy (gallbladder removal) were implemented, and the two endoscope controls were tested by a group of ten users.

Based on the results of this test, it was concluded that although the headset allows the endoscope to be manipulated, it is not yet the best option for this purpose. Even though it can correctly identify cognitive signals and, after adequate training, can be used as a remote control, it only works if it is used shortly after training, since after a few days the user is unlikely to remember exactly the thoughts used to train the system. In addition, the headset has shortcomings in ergonomics and practicality, which become apparent during long periods of use or when the signal readings are not clear; these can affect the user's concentration and/or cause undesired behavior of the controlled objects. In summary, as several studies point out, this technology is very promising but still has considerable room for improvement.

As future work, we propose using a headset with a larger number of channels to determine how much the performance of the BCI improves and what impact this has on the controlled system.