1. INTRODUCTION

Technology is present in various areas of society, which increases its relevance and use in different areas, including education [1]. We are in an evolutionary cycle in which school systems, institutions, and teaching should adapt to society’s demands. The role of teachers in the technological evolution of education, specifically in classrooms, is fundamental.

In addition, to adopt new educational models, areas where there are deficiencies can be identified [2]. This paper focuses on an educational technology called Pedagogical Conversational Agents (PCAs). PCAs are interactive systems that allow students to study in an entertaining and friendly manner [3].

Let us look at examples of agents classified by role:

Teacher: Autotutor, an intelligent tutoring system that makes use of an animated conversational agent with facial expressions, synthesized speech, and gestures [4]; Laura for learning Spanish [5], [6]; and Willow [7], [8].

Student: Betty [9], Challenging Teachable Agent (CTA) [10], Lucy [11], [12], [13], and The Teachable Agent Math Game [14].

In a previous study [18], we showed the benefits of using PCAs for education. However, methodologies for the design, integration, and evaluation of agents in the classroom are not found in the state of the art. For that reason, we proposed a methodology to design PCAs called MEDIE (which stands for Methodology to Design) [18]. MEDIE was used to create the agent named Dr. Roland [18], which was the first agent to be used in Pre-Primary Education (see Fig. 1).

This paper focuses on applying Data Analytics techniques to improve the design of Dr. Roland. Two procedures, KDDIAE (an application of KDD) and BIDAE, are used to obtain information about agents and students.

The fruitful relationship between learning analytics and learning design is illustrated with an experiment in which 72 children used the new Dr. Roland.

All of them reported that they enjoyed interacting with the agent (100 % satisfaction).

This article is organized into five sections. Section 2 reviews the related work.

Section 3 presents KDDIAE and BIDAE.

Section 4 focuses on the results.

Finally, Section 5 presents the conclusions and future work.

2. STATE OF THE ART

2.1 Pedagogical Conversational Agents

Pedagogical Conversational Agents (PCAs) are integrated into learning environments, and they can be defined as interactive systems that allow students to study in an entertaining and friendly manner [3].

(Fig. 1) shows a sample interface of an agent. Agents can be represented as people, animals, or things that can speak with sound or text. Agents are used in a wide range of applications, such as commercial enterprises, health, employee training, or education [19]. Due to the importance of technology in education, the integration of agents into learning environments is gaining increasing attention [20].

As the use of PCAs increases, understanding how to design these characters for teaching and learning becomes more relevant.

Designing Pedagogical Conversational Agents remains a challenge that has not been completely solved [21]. A bad design generates negative feelings in students, hinders communication and interaction, and, ultimately, the completion of learning tasks [20]. Therefore, design methodologies for PCAs should be improved.

2.2 Data Analytics

Data analytics can be defined as “high volume, high speed and/or wide variety of information assets that require new forms of processing to enable improved decision making, discovery of knowledge and optimization of processes” [22], [23].

The application of Data Analytics in education can be considered an excellent resource due to the possibilities it offers to analyze, visualize, understand, and even improve education. The traditional method of observation in the classroom is losing momentum as the most effective way to understand and improve the educational process. As a result, other options such as Data Analytics are incorporated.

From Data Analytics, and its integration with different technologies, a series of methods have been derived. Such methods are being applied in the educational field. They include adaptive learning, which is based on the modification of the contents and forms of teaching according to the particular needs of each student; competency-based education [24], where the learning process is adapted to the pace and needs of each student; inverted classroom and Blended Learning [25], based on independent study and classroom practice; and gamification, which consists of using game mechanics in learning environments to stimulate the teaching-learning process among members of a student community [26].

3. KDDIAE AND BIDAE

Two analysis proposals are applied to PCAs: KDDIAE, the application of KDD (Knowledge Discovery in Databases) to the Data of the Interaction between Agents and Students, and BIDAE, the use of Data Analytics to obtain information about agents and students (Estudiantes in Spanish).

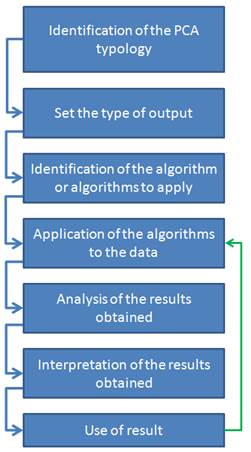

For KDDIAE (see Fig. 2), the following is an explanation of the steps applied to the interaction data between PCAs and students, following the practical approach of the process proposed by Brachman and Anand [25]:

Step 1. Understand the application domain and the relevant prior knowledge, and identify the goal of the process. At this point, it is essential to have people with experience in the application area of PCAs (and all the areas involved) and, if possible, to involve them in the process in order to better understand the context and the factors that may affect it, and to better interpret the results obtained.

Step 2. Selection of the dataset: identification of the target variables to be predicted, calculated, or inferred, and of the independent variables useful to calculate, process, or sample the available records, taking into account the conversational pedagogical agent and its characteristics, as well as those of the people with whom it interacts and the context in which the interaction occurs.

Step 3. Data cleaning and pre-processing. The data should present significant values, and decisions should be made regarding noise (missing, atypical, or incorrect values). Otherwise, introducing the raw data into a data mining algorithm would lead to difficult learning processes or to results that do not represent the real behavior.

Step 4. Reduction and projection of data. This step aims to identify the most significant characteristics to represent the data, depending on the purpose of the process. For this reason, transformation processes can be used to reduce the effective number of variables or to find other representations of the data.

Step 5. Match the goals of the KDD process (Step 1) to a particular data-mining method.

Step 6. Exploratory analysis and selection of hypotheses and model. Researchers should decide which models may be suitable according to the general aim of the process.

Step 7. Data mining. In this step, a search for patterns is performed over a given form of representation or set of representations.

Step 8. Pattern interpretation, with a possible return to any of Steps 1 through 7 for a further iteration. The process can feed back on itself, repeating from the beginning or from any of the steps, until a valid model is obtained.

Step 9. Acting on the discovered knowledge. The model is ready for operation when it is considered acceptable, with suitable outputs and/or permissible error margins.
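The data cleaning and reduction steps described above can be sketched as follows. This is only an illustration under stated assumptions: the records, field names, and values are hypothetical and are not taken from the study, and the two rules used (discard rows with missing or negative values, drop variables that never vary) are simple stand-ins for the decisions a real project would make.

```python
from statistics import pstdev

# Hypothetical interaction records; field names and values are illustrative only.
records = [
    {"exercise_duration": 120.0, "resolution_attempts": 1, "session": 1},
    {"exercise_duration": None, "resolution_attempts": 2, "session": 1},   # missing value
    {"exercise_duration": -5.0, "resolution_attempts": 1, "session": 1},   # incorrect value
    {"exercise_duration": 200.0, "resolution_attempts": 3, "session": 1},
]


def clean(rows):
    """Keep only rows with no missing values and a non-negative duration."""
    return [
        r for r in rows
        if all(v is not None for v in r.values()) and r["exercise_duration"] >= 0
    ]


def drop_constant_columns(rows):
    """Remove variables that take a single value and so carry no information."""
    keep = [k for k in rows[0] if pstdev([r[k] for r in rows]) > 0]
    return [{k: r[k] for k in keep} for r in rows]


cleaned = clean(records)                   # two valid rows remain
reduced = drop_constant_columns(cleaned)   # the constant "session" column is dropped
```

In practice, the thresholds for what counts as an atypical value would be agreed with the domain experts involved in the first step.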

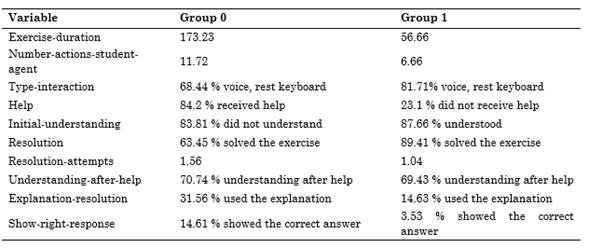

BIDAE (see Fig. 3) aims to provide a practical vision of a process that can be followed to obtain information about the interaction between PCAs and students based on the objective established at the beginning.

BIDAE can be used, for instance, (i) to find out whether variables (characteristics) that were initially thought to have a certain impact actually have it; (ii) to discover other variables that were not considered significant a priori; and (iii) to reveal behaviors that, based on the results, may be generalized, as well as relationships between variables or other aspects of interest.

A first set of data related to PCAs (e.g., questionnaires) or results of the interaction of the agent with the students in its area of application should be available to start the process, and the following steps are followed:

Identification of the type of Pedagogical Conversational Agent in question in order to determine which techniques may be better for the results to be obtained. Any existing taxonomy can be used for this, such as the proposal in Pérez-Marín [7], which classifies agents using ten criteria.

Selection of the type of output in order to define the group of algorithms that best fits the data. For instance, one of the types of algorithms that are grouped in a taxonomy according to their output can be selected.

Identification of the algorithm or algorithms to apply in the group of algorithms identified in the previous step.

Application of the corresponding procedure of the algorithms to the data depending on the algorithm in question.

Analysis of the results obtained.

Interpretation of the results obtained and actions to be taken as a result.

If the results are as expected, continue to work using that information to provide feedback to the agent.

If the results are not as expected, analyze what may be failing by focusing on the following aspects: if the data obtained are sufficient and adequate to obtain the result that is intended, if the appropriate algorithm set is being applied, if the particular algorithm or algorithms in that previously selected group is suitable, or if the algorithm is being applied properly.

If the data with which the analysis is conducted are not correct or insufficient, the following steps are needed: (i) re-analyze what data are needed to obtain the desired result, (ii) identify the data to be captured, (iii) analyze what changes should be made in the conversational agent to capture that data, (iv) modify the agent to correct the capture of the erroneous data and/or to capture the new ones that are necessary.

If the appropriate algorithm set is not being applied, the following steps are needed: (i) analyze which set of algorithms is best adapted by examining its characteristics and those of the conversational agent, (ii) select that set of algorithms to perform the analysis.

If the particular algorithm or algorithms in that previously selected group is not suitable, the following steps are needed: (i) analyze which algorithm might be appropriate according to its characteristics, (ii) select an algorithm, (iii) apply this algorithm.

If the algorithm is not being applied properly, the following steps are needed: (i) study how the algorithm is applied, (ii) repeat the process and apply the algorithm.

Analyze what additional or new knowledge should be included.

Identify what data is needed for it.

Analyze the agent and its characteristics to identify what modifications could be made to adapt it to the aim.

Make the modifications in the agent.

Repeat the experimental phase for data collection.

When sufficient data are available, return to step one of the process.

4. RESULTS

MEDIE has been implemented to design Dr. Roland for several education levels. In this study, Dr. Roland was used for Pre-Primary Education. (Fig. 1) shows a sample snapshot of Dr. Roland with big buttons, a colorful interface, and many multimedia elements such as images, videos, and sounds. Given that young children cannot read, all the written text is also spoken, and the keyboard is shown as requested by teachers, who want to introduce children aged 5-6 to reading and writing.

Dr. Roland was used by 72 children to learn about animals in three schools in Spain. In the first school, there were three sessions in which Dr. Roland was used by 24 children (ages 4-5 years). In the second school, there were two sessions in which Dr. Roland was used by 25 children (ages 2-3 years). In the third school, there were two sessions in which Dr. Roland was used by 23 children (ages 2-3 years). KDDIAE and BIDAE were used to design the agent.

The procedure to apply KDDIAE followed the steps described in Section 3.

Since the beginning, Pre-Primary Education teachers were involved in the design of Dr. Roland, providing their expertise and advice so that programmers could understand the learning goal (in this case in the field of natural sciences; in particular, animals).

The dataset in this study is composed of students’ answers, student-agent interaction, and student-agent conversations. The target variables are the influence of the agent’s help to improve student’s ability to solve and/or understand the exercises, the dialogue that should be used to get the best results, and average times. The independent variables are exercises, questions, type of questions, methods of interaction and solution, help, key dialogue structures to understand exercises, and interface features.

The data was processed to identify the most significant characteristics in this case. Thus, we found the most relevant ones: exercise duration, number of actions between the user and the agent, average time between actions, type of interaction (spoken or written), help used, exercise understanding, completed exercises, attempted exercises, solved exercises, number of attempts to solve an exercise, if once users have been given help they are able to solve an exercise, if once an exercise is completed students keep working with the agent, if the students receive some explanation at the end of an exercise, and if students see the correct answers to exercises. All the times were measured in seconds.
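As an illustration of how the timing characteristics listed above could be derived, the following sketch computes the exercise duration, the number of actions, and the average time between actions from a list of action timestamps. The log format (a non-empty, chronologically ordered list of seconds) is an assumption made for this example; the study does not describe how Dr. Roland stores its interaction data.

```python
def exercise_features(timestamps):
    """Derive timing features (in seconds) from the actions of one exercise.

    `timestamps` is a hypothetical, non-empty list of seconds since the start
    of the session, one entry per student-agent action, in chronological order.
    """
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    return {
        "exercise-duration": timestamps[-1] - timestamps[0],
        "number-actions-student-agent": len(timestamps),
        # With a single action there are no gaps, so the average is set to 0.
        "time-average-between-actions": sum(gaps) / len(gaps) if gaps else 0.0,
    }


# Illustrative values only.
features = exercise_features([0, 12, 30, 41, 60])
```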

The method adopted in this study is an Expectation-Maximization algorithm [27].

The goal is to establish if the agent captures the information as intended by the teachers, if this information is meaningful, if there is other information that should be included, or which information that has not been captured by the agent should be captured in the following design cycle of the agent. That information can be used to improve the design of the agents. In this way, the information provided by teachers and Data Analytics techniques would confirm the successful relationship between data analytics and the design of educational computer systems.

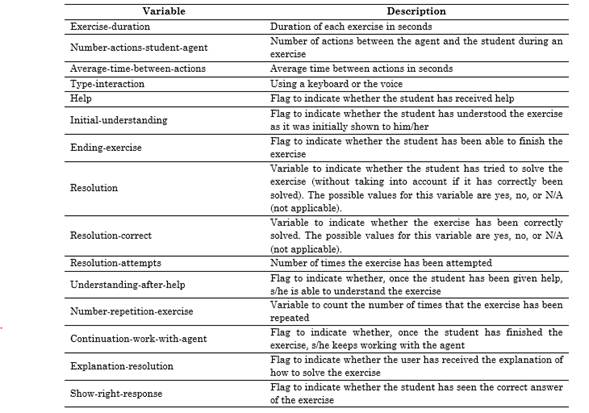

(Table 1) lists the variables defined to obtain information for the design of the agent, as explained in [27]. The focus of the previous paper was on gathering data, whereas the focus of this paper is on the fruitful relationship between learning analytics and learning design.

Two clusters (or groups), 0 and 1, were obtained using the variables in (Table 1). From the results, it can be deduced that group 1 is the largest, concentrating 78 % of the information. Regarding numerical attributes, the information provided focuses on the mean and standard deviation. (Table 2) presents the most significant results produced with both groups to find design rules.
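To make the clustering step more concrete, the sketch below fits a two-component Gaussian mixture to a single numerical variable with Expectation-Maximization and reports the weight, mean, and standard deviation of each cluster. It is not the implementation used in the study, which is not detailed here: the one-dimensional simplification, the initialization, the function names, and the sample durations are all assumptions made for illustration.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of a normal distribution at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def em_two_clusters(data, iterations=50, min_std=1e-3):
    """Fit a two-component 1-D Gaussian mixture with Expectation-Maximization."""
    # Deterministic start: one mean at each extreme, shared overall spread.
    means = [min(data), max(data)]
    overall_mean = sum(data) / len(data)
    spread = math.sqrt(sum((x - overall_mean) ** 2 for x in data) / len(data))
    stds = [spread, spread]
    weights = [0.5, 0.5]
    for _ in range(iterations):
        # E-step: responsibility of each cluster for each data point.
        resp = []
        for x in data:
            p = [weights[k] * gaussian_pdf(x, means[k], stds[k]) for k in (0, 1)]
            total = sum(p)
            resp.append([pk / total for pk in p])
        # M-step: re-estimate weight, mean, and standard deviation per cluster.
        for k in (0, 1):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / len(data)
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            stds[k] = max(math.sqrt(var), min_std)  # avoid a collapsed cluster
    return weights, means, stds


# Hypothetical exercise durations in seconds, not data from the study.
durations = [40, 45, 50, 55, 60, 180, 190, 200]
weights, means, stds = em_two_clusters(durations)
```

The returned weights play the role of the share of data concentrated in each group, and the per-cluster means and standard deviations correspond to the kind of summary reported for numerical attributes.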

As can be seen in (Table 2), there is a significant variability in the duration of the exercises of both groups. Regarding the number of attempts to resolve the exercise, there is a fairly homogeneous situation in both groups: it is close to 1 and there is a relatively small dispersion in each group.

In relation to the number of actions of the agent with the user, there are differences between the groups that represent the largest deviation.

With regard to interaction type, the voice predominates in both groups. Hence, this population should be characterized using their voices (which makes sense since young children cannot write well).

There is a difference in the initial understanding: group 1 is able to understand at the beginning, while group 0 is not. This is also related to reception of help since group 0 exhibits a higher value of reception of help, while group 1 did not receive as much help. As for the understanding after the help, group 0 has a relatively higher value, which makes sense since they initially did not understand, and the fact that they received the corresponding help implies an improvement in understanding. The children in both groups solved the exercises correctly in most cases; therefore, the options of explaining the solution and showing the correct response were used less.

The patterns were interpreted and the algorithms were applied in the previous step. Consequently, what was said in terms of interpretation in Step 7 is extrapolated to Step 8. In addition, in the light of the results, the provided information is considered sufficient for the initial aim; therefore, it is not necessary to go back.

With the information that can be obtained from the model, a detailed analysis of the variables is carried out to reveal which relationships should be maintained and/or strengthened, which changes can be made to the variables that are unrelated to the intended goal, and what new variables, information, or relationships should be obtained. Thus, taking into account the characteristics of the agent, the algorithm analyzes and identifies the necessary changes and how they would have to be applied to the agent in the next phase of the iterative and incremental development process.

BIDAE is applied to data from the agent Dr. Roland and children in Pre-Primary Education. In the taxonomy of roles of conversational pedagogical agents, Dr. Roland has a teacher role, since it tries to have students learn; that is, it teaches them.

The selection of the group of algorithms is supported because the conversational pedagogical agent uses the responses and reactions of students at each interaction to produce the following response; to adapt to students’ actions, reactions, needs, and responses; and to offer appropriate responses in the interaction. In this study, classification algorithms will be applied as a refinement strategy in the analysis.

The evaluation can be conducted using different options: a training set (on the same set on which the predictive model is built to determine the error), a supplied test set (on a separate set), cross-validation, or percentage split (dividing the data into two groups, according to the indicated percentage).

In this case, we used the cross-validation mode, dividing the instances into as many folds as the folds parameter indicates (10). In each evaluation, the data of one fold are taken as test data, and the rest of the data are used for training. The calculated errors are the average over all the executions. Among the possible algorithms, a C4.5 decision tree (J48) will be applied to predict attributes.
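The cross-validation procedure described above can be sketched as follows. The function names are hypothetical, and the sketch splits the instances in their original order, without the shuffling or stratification that a data mining tool may apply; the `evaluate` callback stands for training a model on the training indices and measuring its error on the test indices.

```python
def k_fold_indices(n_instances, k=10):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_instances))
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_instances // k + (1 if i < n_instances % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


def cross_validated_error(n_instances, evaluate, k=10):
    """Average the error returned by evaluate(train, test) over the k folds."""
    errors = [evaluate(train, test) for train, test in k_fold_indices(n_instances, k)]
    return sum(errors) / len(errors)


# Example with an assumed dataset size of 36 instances.
folds = list(k_fold_indices(36))
```

Each instance appears in exactly one test fold, so every record is used once for testing and nine times for training.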

For the following variables, no results were obtained: duration of the exercise (exercise-duration), number of agent actions with the user during interaction with an exercise (number-actions-student-agent), average time between actions (time-average-between-actions), number of times that the exercise is performed (number-repetition-exercise), and attempts to solve the exercise (resolution-attempts).

In relation to the analysis of the data obtained and the confusion matrices, the diagonal values are the successes and the others are errors. In this way, the percentage of the total number is known for each value (how many were well classified and how many with errors).
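Reading a confusion matrix in this way amounts to summing its diagonal. A minimal sketch, using a hypothetical 2x2 matrix whose values are not results from the study:

```python
def accuracy_from_confusion(matrix):
    """Return (correct, errors, accuracy) for a square confusion matrix."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))  # diagonal = successes
    return correct, total - correct, correct / total


# Hypothetical matrix (rows: actual class, columns: predicted class).
matrix = [[24, 1],
          [2, 9]]
correct, errors, accuracy = accuracy_from_confusion(matrix)
```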

As for the relations, their results and interpretation can be seen in the corresponding column. For example, all those who did not initially understand, except for one case of error, understood once they received the help (understanding-after-help = yes: no (11.0 / 1.0)). Moreover, no participant failed to understand after receiving the help (understanding-after-help = no: yes (0.0)). The comprehension question after help did not apply to those who initially understood (understanding-after-help = na: yes (25.0 / 2.0)). All those who requested help understood after the help (understanding-after-help = yes: yes (11.0)), and no student claimed not to understand the exercise after requesting help (understanding-after-help = no: no (0.0)).

Those who completed the exercise (ending-exercise), except one case of error, did so in two or fewer attempts to solve it (resolution-attempts <= 2: yes (34.0 / 1.0)).

The exercises of those who kept working with the agent (continuation-work-with-agent), except for three cases of error, lasted 196 seconds or less (exercise-duration <= 196: yes (34.0 / 3.0)); for the others, they lasted longer (exercise-duration > 196: no (2.0)). Those who understood after the help (understanding-after-help), having requested it (help = yes), except for one case of error, performed more than 7 actions with the agent (number-actions-user-agent > 7: yes (12.0 / 1.0)). Those who did not request to show the correct response (show-right-response) made 2 or fewer attempts to solve the exercise (resolution-attempts <= 2: no (34.0)). Therefore, in Steps 6 and 7, all of this will be incorporated into the agent.
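The rules quoted above follow a regular notation: a test on an attribute, the predicted label, and, in parentheses, the number of instances that reach the leaf, optionally followed by the number of those that are misclassified. The sketch below parses one such rule and computes the share of correctly classified instances. The pattern and the function name are assumptions written for this notation as it appears in the text, not part of any tool.

```python
import re

# attribute, comparison operator, threshold or value, predicted label,
# then "(reached)" or "(reached / errors)".
LEAF_PATTERN = re.compile(
    r"^(?P<attribute>[\w-]+)\s*(?P<op><=|>=|=|<|>)\s*(?P<value>[\w.]+)\s*:\s*"
    r"(?P<label>\w+)\s*\(\s*(?P<reached>[\d.]+)\s*(?:/\s*(?P<errors>[\d.]+)\s*)?\)$"
)


def parse_leaf(line):
    """Parse one decision-tree leaf and compute its share of correct cases."""
    m = LEAF_PATTERN.match(line.strip())
    if m is None:
        raise ValueError(f"unrecognized leaf: {line!r}")
    reached = float(m["reached"])
    errors = float(m["errors"]) if m["errors"] else 0.0
    return {
        "attribute": m["attribute"],
        "op": m["op"],
        "value": m["value"],
        "label": m["label"],
        "reached": reached,
        "errors": errors,
        # Undefined when no instance reaches the leaf.
        "accuracy": (reached - errors) / reached if reached else None,
    }


leaf = parse_leaf("resolution-attempts <= 2: yes (34.0 / 1.0)")
empty_leaf = parse_leaf("understanding-after-help = no: yes (0.0)")
```

For the first rule, 33 of the 34 instances that reach the leaf are classified correctly, which matches the reading "except one case of error" used in the text.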

Regarding the interpretation of the results and the actions to be performed accordingly, the algorithm will use those expected results to further consolidate and use that information in order to provide the agent with feedback.

As for the incorrect and insufficient data, the steps that should be taken are the following: analyze again what data is needed to obtain the desired result, identify the data to be captured, analyze what changes need to be made in the conversational agent to capture that data, modify the agent to correct the capture of the erroneous data, and/or capture new ones as necessary.

Regarding the use of the results, the following steps should be taken: analyze what additional or new knowledge should be included, identify what data is needed for it, analyze the agent and its characteristics to identify what modifications could be made to adapt it to the intended aim, make modifications to the agent, and repeat the experimental phase for data collection.

5. CONCLUSIONS

The use of Data Analytics techniques has allowed us to design a Pedagogical Conversational Agent for Pre-Primary Education following the MEDIE methodology. The use of KDDIAE and BIDAE has highlighted the fruitful relationship between Learning Analytics and Learning Design, as shown below:

-(Learning Analytics) Children who initially do not know how to solve the exercise are able to understand it and solve it after being given help → (Learning Design) An agent for young children should be able to provide help. Furthermore, help should be entertaining and adapted to their characteristics because it is a resource that children actually use.

-(Learning Analytics) Younger children use more voice interaction → (Learning Design) An agent interface for young children must incorporate voice commands.

-(Learning Analytics) Children who completed the exercise needed two or fewer attempts to solve it → (Learning Design) Students should be given the possibility of solving the exercises and trying again if they want to.

-(Learning Analytics) Most children who solved the exercises correctly used the option that explained how to solve the exercise, and fewer of them showed the correct answer → (Learning Design) Agents should allow a flexible use of the explanation and visualization of the correct response depending on the case.

-(Learning Analytics) Children who initially did not understand and, therefore, used more help and a greater number of interactions with the agent spent more time on each exercise than those who understood from the beginning → (Learning Design) Exercises should not be too long.

-(Learning Analytics) Children who initially understood used less help → (Learning Design) Agents should allow a flexible use of the help depending on the case.

-(Learning Analytics) Children who understood the exercise after the help, having requested it, performed more than 7 actions with the agent → (Learning Design) Providing help where and when it is necessary is an important part of the process.

-(Learning Analytics) Children who did not know how to solve the exercise used the option to show the correct answer → (Learning Design) It is important to include the correct answer in the exercises and, if possible, a visible explanation of it for children.

-(Learning Analytics) The duration of the exercise was 196 seconds or less → (Learning Design) The duration of each exercise should not be very long.

One hundred percent of the 72 Spanish children (aged 2-5 years) who used Dr. Roland claimed that they enjoyed interacting with the agent. Since MEDIE proposes an iterative and incremental process, the comments of teachers and students will be applied to future versions of the agent.

Nevertheless, these procedures suffer from a general disadvantage: their dependency on the data provided by teachers and students. This is because, in some cases, it may be difficult to obtain enough information to apply the procedures. This is another research line for future work.