Introduction

Foreign language assessment has been a field of challenges and controversies for teachers and students over the decades. Generally, foreign language classes are governed by summative assessment practices aimed at measuring learners’ mastery of discrete language points and linguistic accuracy rather than their communicative competence (Shaaban, 2005). However, although summative speaking assessment continues to provoke reluctance in students, teachers rarely approach this process differently, which may eventually lead learners to succeed, fail, or give up on the learning process (Green, 2013).

Therefore, a change of mindset in this regard would be welcome in teaching practice. Along these lines, Green (2013) claims that when teachers assess students’ speaking skill through a test, they may emphasize its role in improving teaching and learning processes rather than use it as a yardstick of control. In light of this problematic situation, I sought to characterize teachers’ approaches to assessing the speaking skill in an English language teaching (ELT) program. Furthermore, the study by García and Artunduaga (2016) conducted in this context, together with my own teaching experience, motivated me to explore teachers’ speaking assessment approaches and analyze how these relate to their actual classroom speaking assessment practices.

This exploratory and descriptive qualitative study focuses on characterizing teachers’ speaking assessment approaches and identifying the relationship between their stated assessment approaches and their actual classroom speaking assessment practices. I conducted the study with four in-service teachers from the ELT program at a public university in Florencia (Colombia). English as a foreign language (EFL) teachers should bear in mind that some learners may encounter great difficulties when participating in activities and examinations that assess their oral production. These difficulties are mainly reflected in students’ poor results, due to a lack of time for classroom speaking practice (Richards & Rodgers, 2001) or even to emotional factors students experience before, during, and immediately after oral production activities (Cook, 2002).

Theoretical Framework

Speaking is an essential means of daily communication and a primary instrument of interaction among human beings in a community (Coombe & Hubley, 2011; Lado, 1961; Mauranen, 2006). Bygate (2001) claims that speaking is reciprocal; that is, “interlocutors are normally all able to contribute simultaneously to the discourse, and to respond immediately to each other’s contributions” (p. 14). Furthermore, in oral interaction, people can participate in any spoken encounter by constructing meaning according to their intentions, their communicative goals, and the message they want to convey (Green, 2013); this makes speaking more unpredictable than writing, as ideas are not usually premeditated and flow according to the rhythm of the conversation (Mauranen, 2006).

Consequently, assessing the speaking skill is a complex process that requires special consideration from educators (Burns, 2012). For instance, teachers need to identify a suitable instrument or strategy that allows them to properly assess learners either “live” or through recorded performances (Ginther, 2012). Moreover, speaking assessment processes have to be closely related to teachers’ instruction, helping them make decisions based on students’ linguistic abilities and course goals in order to select appropriate speaking tasks (Fulcher, 2018; Ginther, 2012; Shaaban, 2005).

Summative Assessment of Speaking Skills

Direct tests are among the most common speaking assessment practices in foreign language learning. They assess students’ speaking skill through actual performance, for example, interviews with structured or semistructured interaction (Ginther, 2012). Testing practices are generally seen as summative assessment, which takes place at the end of a course cycle to determine and evaluate the knowledge and skills students have developed during that period (Lado, 1961).

Summative assessment practices need to be carefully considered in higher education contexts where grades are mainly influenced by test results. If test outcomes do not meet the educational standards established by the institution, this may in some cases result in sanctions for schools, educators, and even learners (O’Neil, 1992). Tests may be designed to address particular foreign language learning needs, such as spoken interaction, listening comprehension, and reading and writing. However, Carter and Nunan (2001) note that, regardless of a test’s scope and focus (which is mostly viewed as numerical), certain elements are needed for its administration. These elements include (a) validity, as tests should accurately measure what they are meant to measure; (b) reliability, as test results need to be consistent across test-takers; and (c) practicality, as tests should be designed to allow adequate time, available resources for their implementation, and ease of scoring and evaluation (Brown, 2004; Coombe & Hubley, 2011; Lado, 1961).

Formative Assessment

An alternative to testing methods may be a more humanistic approach that stresses alternative and formative assessment practices (Ginther, 2012; Irons, 2007; O’Neil as cited in Shaaban, 2005). According to Huerta-Macias (as cited in Brown & Hudson, 1998), alternative assessment involves journals, logs, videotapes and audiotapes, self-evaluation, and any other task that encourages learners to show their potential in performance-based tasks (Shaaban, 2005). Moreover, Yorke (2003) states that formative assessment may take place through formal (high-stakes) or informal (low-stakes) practices. Formal practices are planned and take into account students’ preparation and the assessment criteria for their completion. Informal practices comprise any in-class activity for which students do not need to follow specific instructions.

Similarly, in formative assessment, teachers go beyond assigning students a specific grade (Irons, 2007); grades “help them identify areas that require further explanation, more practice, and methodological changes” (Muñoz et al., 2012, p. 144) in order to overcome the difficulties students face in their learning process. Feedback is therefore essential to inform learners about their difficulties or strengths in certain topics (Green, 2013), and it helps teachers improve and adapt their teaching to meet learning needs. Feedback to learners has to be specific, focused on the task, and delivered while it is still relevant (Black & Wiliam, 1998).

In short, Coombe and Hubley (2011) claim that, whatever the assessment approach, assessment practices may reflect the intended course objectives that support the learning and teaching of the target language. Therefore, foreign language teachers should continuously analyze the effectiveness of their assessment procedures, especially in local contexts where tests are the only assessment method; in such contexts, teachers become “gatekeepers for higher education opportunities for many high school or college graduates” (Herrera & Macías, 2015, p. 306).

Related Studies

This section briefly describes some recent studies on the effectiveness of teachers’ approaches and strategies to assess students’ speaking skills and how this assessment process shapes teachers’ instruction.

The action research study conducted by De la Barra et al. (2018) aimed to identify the effects of integrated speaking assessment based on the content and language integrated learning (CLIL) approach on 32 third-semester students enrolled in the translation and English teacher training program at Universidad Chileno-Británica de Cultura. Data collection involved two rubrics used to assess students’ speaking skill and a questionnaire to gather students’ opinions about the assessment approach used. Findings showed that students took responsibility for improving both their language and their course content knowledge based on teachers’ feedback, which served as a guide to strengthen their speaking skill. This study highlights the importance, for both teachers and students, of familiarizing students with the speaking assessment instrument, because doing so makes explicit beforehand the terms under which teachers will judge students’ speaking performance.

Moreover, Köroğlu (2019) carried out an action research study to explore the efficacy of the interventionist model of dynamic assessment (DA) in speaking instruction and assessment with student-teachers. The study involved 29 participants enrolled in the English language teaching department of a public university in Turkey. The data gathering instruments were questionnaires and video recordings. Findings revealed that DA was meaningful for participants, who showed a significant improvement in self-confidence that enabled them to express their ideas clearly and smoothly in front of the teacher and peers. The study thus supports DA not only as a speaking assessment method but also as an ongoing source of formative feedback for learners, owing to the teacher mediation and scaffolding strategies used during students’ speaking assessment. These two strategies served as feedback that helped learners explore their potential, identify their weaknesses, and take control of their own speaking improvement.

Additionally, Namaziandost and Ahmadi (2019) conducted an action research study to explore the effects of holistic and analytic assessment approaches on the speaking skill of 70 students from an English language teaching program at Islamic Azad University of Abadan in Iran. The data gathering instruments were analytic and holistic rubrics, teacher’s notes, and audio recordings. The findings revealed that analytic and holistic approaches are suitable for obtaining reliable scores of students’ performance, as they help evaluators identify gaps in fluency, intonation, grammar, or vocabulary. In essence, the relevance of this study lies in its advocacy of holistic and analytic approaches to students’ speaking assessment. By implementing these two approaches, teachers can work on students’ weaknesses in mastering the target language, empowering their learning and the performance of their future speaking practices.

Finally, Liubashenko and Kavytska (2020) developed a case study to explore how assessing interactional competence contributes to the development of the speaking skill among 44 students from Taras Shevchenko National University of Kyiv and 36 students from Igor Sikorsky Kyiv Polytechnic Institute. The data gathering instruments were surveys, video recordings, and questionnaires. Findings showed a significant improvement in students’ speaking skill, which in turn promoted their interactional competence, since they were able to solve the task-based problems the instructors presented in their assessment. Moreover, learners worked in groups and co-constructed their knowledge, supporting each other in problem-solving. Hence, instructors should take into account the development of students’ interactional competence, since it enables students to convey their points of view in speaking assessments rather than focusing on well-structured utterances.

The related literature shows the benefits of various strategies and approaches for assessing students’ speaking skill across different samples. Overall, the assessment alternatives explored in these studies had a significant impact on participants’ speaking skill. Furthermore, it could be argued that the success of these assessment strategies depended mainly on one factor: teachers being consistent with the assessment practices they adopted. Thus, examining foreign language teachers’ stated and actual speaking assessment approaches is of great importance because, ultimately, these may be an indicator of students’ speaking achievements.

The Problem

English language teachers at Universidad de la Amazonia feel the need to reflect on their own teaching approaches so that they can carry out meaningful learning and assessment processes with students. Four EFL teachers from this university participated in the study, and my intention was to raise awareness of their speaking assessment approaches as a first step towards improving assessment practices at the university and towards changing both teachers’ and students’ perceptions of oral classroom assessment. The study was guided by these two questions:

Method

This is a qualitative, exploratory, and descriptive study (Glass & Hopkins, 1984; Hernández-Sampieri et al., 2007; Kumar, 2011), as it explored and described the speaking assessment approaches of the four participating teachers. Given the depth of description it requires, qualitative research works with data from few participants, mainly concerning their subjective opinions and experiences in their natural context (Mackey & Gass, 2005).

Descriptive studies describe a situation or problem in a sample about which little is known and which needs to be explored (Dörnyei, 2007; Kumar, 2011). Descriptive studies also involve gathering data that describe events and participants’ thinking, which are organized, tabulated, and depicted to provide a better understanding of the issue (Glass & Hopkins, 1984). On the other hand, given their specific nature, one disadvantage of descriptive studies is that they do not explain how or why an issue occurs among participants; therefore, rather than examining consequences or associations, I focused on describing the characteristics of the issue under exploration in the four participants (Kumar, 2011).

A purposeful sampling method was used to select the participants. This method aims to identify information related to the issue of interest (Patton, 2002) by selecting a sample considered knowledgeable about the phenomenon under study (Creswell & Plano Clark, 2011). Accordingly, the four participants were individually contacted at their workplace and invited to participate in the study. They accepted the invitation voluntarily and signed consent forms.

Context and Participants

The English teacher education program at Universidad de la Amazonia is the only undergraduate program in the Amazon region that trains EFL teachers. The program aims for its graduates to become qualified in English language teaching and learning processes at the regional and national levels.

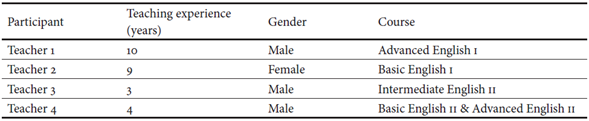

The participants in this qualitative study were four ELT program professors whose teaching experience ranged from two to ten years. They were three men and one woman; one of them holds a master’s degree and the other three hold bachelor’s degrees. All teachers except Teacher 4 were in charge of one English course. For ethical reasons, I have labelled participants by number: Teacher 1, Teacher 2, Teacher 3, and Teacher 4. Table 1 provides details about each participant.

Data Collection and Analysis

Creswell (2002) states that gathering data involves identifying and selecting individuals for a study, obtaining their permission to study them, and collecting information by asking them questions or observing their behavior in their natural settings. Accordingly, the instruments I used for gathering data were a semistructured individual interview (Harklau, 2011; Kumar, 2011) with each teacher about their speaking assessment approaches (see Appendix); observations (Creswell, 2002; Kumar, 2011) of their actual speaking assessment practices; and document analysis (Kumar, 2011) of the rubrics they used to conduct these speaking assessments. A voice recorder and a strategically placed video camera allowed me to capture all the information from the individual interviews and the speaking assessment practices, respectively.

For data analysis, I implemented a grounded theory approach (Charmaz & Belgrave, 2015). Every piece of data gathered was examined to identify commonalities across teachers’ stated approaches to assessing students’ speaking skill, their speaking assessment practices, and the rubrics they implemented. Data were labeled and organized according to the instrument used: IR = interview recordings, OVR = observation/video recordings, and RT = rubrics.

For validity purposes, data were reduced and coded (Male, 2016) through a process of triangulation (Carter et al., 2014; Patton, 1999; Polit & Beck, 2012). Thus, the major categories that emerged from the data were: (a) The Balance Between the Humanistic and the Technical Dimensions of Speaking Assessment and (b) Depositioning Speaking Assessment as an Essential Element for Enhancing the Teaching and Learning Process.

Findings

This section elaborates on the findings that emerged from the interpretation of the data. The first section presents findings related to the four teachers’ stated approaches and practices in speaking assessment. The second section reports findings on the relationship between teachers’ stated speaking assessment approaches and their actual speaking assessment practices.

The Balance Between the Humanistic and the Technical Dimensions of Speaking Assessment

Empowering the Teaching and Learning Process Through Speaking Assessment

This subcategory highlights three views shared by the participants regarding speaking assessment. The data come from sample interview answers that display participants’ commonalities. First, they commonly stated that speaking assessment is an ongoing process developed throughout the course, in which educators closely monitor students’ learning.

Speaking assessment...in fact is a process...it involves students learning and it is continuous. It has to be worked each week, each class. (Teacher 1, IR)

If we assess students’ speaking skill throughout the course, it enables us to identify the needs to tackle, what activities they prefer, and know how much the learner has improved. (Teacher 4, IR)

In this regard, the samples above show that speaking assessment and teaching are connected. As Teacher 4 describes, speaking assessment allows educators to identify learning gaps and thus implement activities that address students’ needs.

Similarly, the four teachers stated that feedback is an essential strategy for consolidating students’ learning, as it identifies the aspects they need to improve in future performances.

Feedback in speaking assessment is always essential but not only provided as a score; let’s say...individual comments are meaningful especially to motivate students who are beginning to learn a foreign language, they require feedback to notice the mistakes, or the things they have to enhance through the time. (Teacher 4, IR)

Feedback is important in assessment, but spontaneous or unplanned activities do not need feedback on students’ performance. When you know your students have prepared and have considered your instructions, feedback is essential then. (Teacher 3, IR)

These samples show the importance of feedback in speaking assessment. Teacher 4 states that feedback is not only given as scores but also as comments that encourage learners to improve their speaking performance. Conversely, Teacher 3 claims that whether feedback is provided on students’ speaking depends on the complexity of the activity: feedback is provided when the activity requires students’ preparation, and not otherwise.

The second stated view concerns the implementation of alternative speaking assessment practices aimed at fostering students’ participation and eliciting authentic interaction among learners in different contexts.

We sometimes use our WhatsApp group to talk about our day through voice notes. Also, we gather once a month in a restaurant to practice speaking by exchanging some ideas or ordering food in English…waiters don’t understand most of the time, and I have to translate what they said. However, it helps me to notice how much my students have improved regarding their speaking skill and they don’t even realize I’m assessing them. (Teacher 3, IR)

Class participation, video recordings, and role plays foster engagement among learners. They are useful for assessing speaking. However, speaking tests are always essential as they comprise an important part in measuring the learning process of all students. Basically, tests show you what students have learnt, and it is a strategy all teachers implement in certain moments of the courses. (Teacher 4, IR)

These excerpts highlight the use of alternative practices to create comfortable spaces in which learners interact and freely exchange ideas without even noticing they are being assessed. Nevertheless, although Teacher 4 recognizes the importance of alternative assessment, he also suggests that summative practices, such as direct tests, are the ones teachers mainly implement, as these provide an accurate view of learners’ speaking improvement.

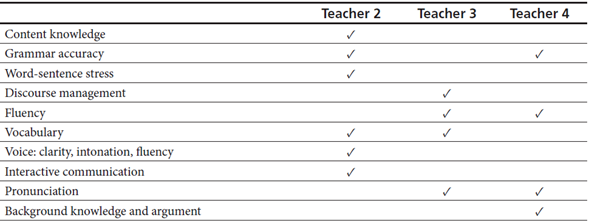

Third, participants mainly considered four assessment criteria when conducting their speaking assessment practices: pronunciation (Teachers 1, 2, and 4), accuracy (all), vocabulary (Teachers 2 and 4), and intelligibility (Teachers 2 and 3). However, a dichotomy emerged in how these criteria are applied to assess students’ speaking skill. While Teachers 2, 3, and 4 stated that they prioritize learners’ ability to exchange ideas in assessment practices, Teacher 1 stresses the accurate use of language in light of these criteria.

For assessing speaking you can pay attention to [students’] pronunciation, their vocabulary, and how ideas are structured, but you cannot ignore their level and the context they come from when you request them to use a foreign language…therefore…it is important to be flexible as long as they are able to communicate their ideas. (Teacher 2, IR)

The aim of communication must be to be understood...especially in our context where for no reasons sometimes we demand our students to adopt native idioms or expressions…therefore, I assess my students positively although they present mistakes in their speech because these mistakes do not hinder their understanding. (Teacher 3, IR)

Students can answer questions and more, but it must be very complete, the intonation, the accent. I do not like flat accents or local accents, because if we are speaking a foreign language then the idea is that we use the accents of that language as such. So, I think that intonation, obviously pronunciation, and grammar are absolutely important. (Teacher 1, IR)

Although Teachers 2 and 3 did not use the term intelligibility, both described the concept proposed by Munro and Derwing (2011) and supported its importance in students’ speaking assessment. Their answers reflect the idea that speaking assessment is more than just measuring learners’ speaking skill. However, Teacher 1’s view restricts flexibility in his assessment practice, as it rests on nativeness principles that do not acknowledge the variety of students’ accents and language proficiency levels in the program.

Finally, participants stated that they incorporate assessment criteria through rubrics in order to properly conduct their speaking assessment practices and determine students’ performance.

I try to design a rubric…so that they can see it earlier, and students know…let’s say…what I keep in mind to be assessed and thus report a fair score. (Teacher 4, IR)

Rubrics are used to integrate the elements considered for their speaking assessment. This instrument allows me to inform learners what is their expected performance. (Teacher 2, IR)

These samples also indicate the benefits of providing students with the rubrics and their evaluation criteria in advance, which allows them to recognize the elements that will be considered in their upcoming speaking performances.

Together, the sample data above suggest that these teachers’ stated approaches to assessing students’ speaking skill were beneficial for strengthening the instruction and learning process. Furthermore, these views positioned speaking assessment not only as a way to measure learners’ speaking skill but also as a way to engage them through alternative assessment activities supported by feedback.

Relying on Summative Practices to Measure Students’ Speaking Skill

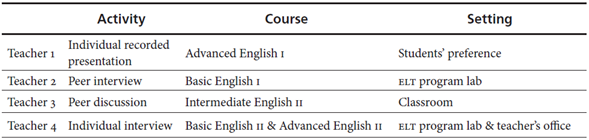

This subcategory covers what teachers actually did to assess learners’ speaking skill in their courses. The data come from observations of assessment practices and analysis of the instruments used during these activities. Summative assessment (Teachers 2, 3, and 4) and alternative assessment (Teacher 1) were the practices implemented by participants. Individual interviews, peer discussions, and peer interviews (also known as direct tests) were used for summative assessment; an individual recorded presentation was the alternative method (see Table 2).

Before conducting both the summative and the alternative assessment practices, the four teachers informed students about the guidelines, characteristics, and criteria considered for their assessment. Thus, learners understood precisely what they had to do in their examinations.

During the summative assessments, teachers elicited students’ insights and interaction using prewritten questions and by exchanging information at moments when they wanted to highlight the ideas students contributed.

Teacher 2: That’s wonderful…Would you try it again?

Student: Yes…of course!...Yes.

Teacher 2: Perfect…why?…Did you like that?…Was the experience nice?

Student: Definitely, I felt I could make some friends easier than before. I felt secure. (OVR)

The sample above stresses the importance of the teacher taking part in the assessment activity, which allows students to feel uninhibited and motivated to expand their oral contributions.

Conversely, given the nature of the activity, Teacher 1’s alternative method did not involve any teacher interaction or exchange of information with learners. Instead, it comprised a speech that sought to show learners’ use of language to strategically address a free topic that triggered reflection and critical thinking.

Additionally, analytic rubrics were used to assess students’ speaking skill during these direct tests. The rubrics comprised assessment criteria (given to learners in advance), their descriptors, and a corresponding scoring scale (see Table 3).

These assessment criteria appeared particularly suited to integrating a broader range of language features into students’ performance. In this sense, teachers considered a greater number of elements, which required students to demonstrate mastery of the course content as well as their communication skill.

Moreover, in these summative assessments, feedback was generally provided at the end. Teachers relied on notes taken on students’ utterances during the examinations, and their feedback sought to highlight strengths in students’ speaking performance:

Teacher 4: In general, you provided interesting ideas…you had a nice use of vocabulary…and…the mistakes observed did not hinder your performance.

Student: What kind of mistakes, teacher?...Pronunciation?

Teacher 4: No…well…there were minor aspects regarding pronunciation to improve…but precisely…the use of demonstratives with the use of plural and singular nouns needs to be revised, OK?

Student: OK. (OVR)

This sample shows that feedback did not focus only on pointing out students’ weaknesses; it also served formative purposes, as it highlighted learners’ ability to convey meaning and provide ideas despite some difficulties in their speech.

Similarly, during Teacher 3’s direct test, feedback was given immediately only when students repeatedly mispronounced a word or used an L1 word to support their answers:

Student:…and so the scientit may…

Teacher 3: SCIENTIST!

Student:...the scientist may! (OVR)

Student: How do you say adictos?

Teacher 3: You mean...addicted?

Student: Yes...addicted, OK. (OVR)

These samples show that feedback in Teacher 3’s direct test was corrective, indicating the correct form of a word the student had mispronounced. Teacher 3 was also willing to assist students when they asked for help during their performances, mainly by answering queries about unknown words.

It is important to mention that Teacher 1 did not incorporate any criteria or instrument to assess his students’ speaking skill. Interestingly, he orally informed students that the aspects to be assessed in his alternative method were the ones he had emphasized in his individual interview: pronunciation and accuracy. Furthermore, Teacher 1 only reported learners’ scores to convey their overall performance in his speaking assessment practice.

The last section of findings addresses Research Question 2: What is the relationship between teachers’ stated approaches to assessing the speaking skill and their actual classroom speaking assessment practices? The main relationships and discrepancies are reflected in three aspects: the type of assessment actually used, the speaking assessment criteria, and feedback.

Depositioning Speaking Assessment as an Essential Element for Enhancing the Teaching and Learning Process

Prevailing Measurement Over Engagement in Students’ Speaking Assessment

This subcategory shows teachers’ preference for summative over alternative practices in students’ speaking assessment. In this regard, data revealed that summative assessment (direct tests) is the default approach in the lessons of Teachers 2, 3, and 4. Consequently, these practices reinforced participants’ initial view of tests as an essential strategy to be used at certain moments in their courses but, at the same time, made them prone to overlook that speaking assessment should be an in-depth process if it is to contribute positively to students’ teaching and learning needs.

By contrast, Teacher 1’s alternative assessment emerged as an activity clearly aligned with a continuous speaking assessment process that benefited teaching and learning practices. This was because Teacher 1’s practice departed from the regular teacher-student interaction and transcended the limitations of the summative assessment methods implemented by the other three participants.

Seeking Accuracy in Speaking Assessment

The second subcategory in this section shows the integration of technical assessment criteria into students’ speaking assessment. Findings showed that the criteria incorporated into summative assessment activities required learners to deliver appropriate speaking performances. This indicates that Teachers 2, 3, and 4 not only expanded the range of linguistic elements assessed in students’ language use but also contradicted their initially stated views about implementing flexible approaches aimed primarily at assessing learners’ ability to deliver spoken messages rather than the accuracy of those messages.

Although rubrics were widely used in the summative assessment practices, Teacher 1 was the only participant who did not use any instrument to assess his students, despite having stated that instruments are important for conducting valid assessment. Instead, he orally informed his learners of the elements to consider in his activity and evaluated their individual performances according to how well they integrated these criteria (pronunciation and accuracy) into their presentations.

The Need for In-Depth Teacher Feedback on Students’ Speaking Performance

The last subcategory highlights the need to incorporate feedback in order to have a positive impact on learning and assessment practices. The data indicated that although all four participants regarded feedback as one of the essential elements for strengthening assessment and learning practices, it was evident only in the direct tests implemented by Teachers 2, 3, and 4.

Teacher 1 separated feedback from his speaking assessment and did not give his students feedback that genuinely sought to have a positive impact on their current and future speaking practices. While the other participants used formative feedback to highlight learners’ strengths and to make them aware of aspects of their speaking performance that needed improvement, Teacher 1 simply gave learners individual scores as an overall description of their performance.

Overall, the data suggest that the relationship between teachers’ stated approaches to the speaking skill and their actual practices did not reflect a genuine effort to strengthen the teaching and learning process. Conducting direct tests with rigorous assessment criteria, limiting feedback to summative functions, and dispensing with assessment instruments are practices that depositioned speaking assessment as a core element of the language classroom, one that could help close gaps in meeting learners’ needs while refining teaching.

Discussion

I will start the discussion by pointing out that relying on tests as the main way to assess students’ speaking may not be meaningful if teachers fail to acknowledge that, despite its summative principles, testing can also serve formative purposes for learners (López & Bernal; Muñoz et al., as cited in Giraldo, 2019). Therefore, alternative approaches to speaking assessment should be implemented so that learners are encouraged to show their potential and confidence when delivering ideas, rather than restricting speaking assessment to summative practices (Huerta-Macias, as cited in Brown & Hudson, 1998).

Second, feedback in speaking assessment should highlight learners’ speaking strengths rather than their weaknesses in order to foster their learning (Hattie, as cited in Lynch & Maclean, 2003). This is consistent with Hatziapostolou and Paraskakis’s (2010) findings, in which feedback served formative purposes by ensuring that students were engaged in their assessment process and thus promoting their learning. Similarly, consistent with the results reported by Pineda (2014), the rubrics implemented by Teachers 2, 3, and 4 allowed them to record evidence of their students’ speech and, at the same time, gave learners the opportunity to know what was expected of them during their performances, because they were made aware of the assessment criteria beforehand (Chowdhury, 2019; De la Barra et al., 2018; Green, 2013).

In contrast, the absence of any instrument in Teacher 1’s speaking assessment practice works against valid and meaningful assessment. As Jonsson and Svingby (2007) explain, conducting speaking assessment without an instrument limits teachers’ ability to provide learners with fair and consistent judgments about their performance. Similarly, Teacher 1’s practice of reporting only scores as feedback is consistent with Hardavella et al.’s (2017) findings: learners may fail to identify the aspects they need to improve or strengthen, so their usual mistakes may persist while they build a false perception that their performance is improving.

Third, the strategies observed in Teacher 3’s assessment activity, such as willingness to help and corrective feedback, relate to those presented in Ebadi and Asakereh (2017), Namaziandost et al. (2017), and Tamayo and Cajas (2017), as these proved positive for refining learners’ discourse and for helping students become aware of the elements to improve without interrupting the flow of their ideas (Gamlo, 2019). However, similar to the findings of Hernández-Méndez and Reyes-Cruz (2012), Teacher 3 viewed corrective feedback only as a technique to improve accuracy in students’ speaking, particularly in pronunciation and morphosyntax. Accordingly, Hernández-Méndez and Reyes-Cruz suggest that more needs to be known about the effects of corrective feedback and its role in learners’ interlanguage development when it is limited to improving the accuracy of learners’ pronunciation and construction of ideas.

In the assessment processes conducted by the participants in this study, other valuable practices such as peer feedback, peer assessment, and self-assessment were neither mentioned in the interviews nor evidenced in the observations. These feedback and assessment practices can also be effective and contribute positively to learning, since they empower students by making them active participants in their own process, and they go beyond the common teacher-student interaction in which only the teacher provides tools for learning.

Conclusions

The present study explored four teachers’ stated speaking assessment approaches and their relationship to the teachers’ actual speaking assessment practices. The stated approaches included a view of speaking assessment as a continuous process that improves teaching and learning through activities whose assessment criteria engage students and foster their communication. Additionally, the four teachers stated that they used feedback as an essential practice to highlight learners’ speaking strengths and the aspects they needed to improve. In practice, however, their speaking assessment mainly involved summative methods that used rubrics with assessment criteria to measure students’ speaking skill.

Surprisingly, these summative practices included feedback with formative purposes, given during and after the tests, to report on and support students’ speaking performance. By contrast, in the only alternative activity conducted to assess students’ speaking skill, the lack of an assessment instrument and the use of summative feedback to report students’ speaking performance ran counter to the formative purposes underpinning that practice.

Regarding the relationship between stated approaches and actual practices, the findings showed that the teachers’ humanistic understanding of speaking assessment as something that benefits learning and teaching was severely limited by their adoption of a summative approach. The results indicated that teachers did not seem to be aware of the dimensions of the speaking assessment approaches they implemented, as they limited their scope to summative principles. The implementation of summative methods, the integration of technical assessment criteria, the use of summative feedback, and the lack of an instrument to properly conduct these practices detached students’ speaking assessment from its role as a beneficial factor for learning and teaching and reduced it to summative purposes.

Finally, the way teachers actually conducted their speaking assessment practices supports Herrera and Macías’s (2015) call to provide training spaces for educators in language assessment literacy (LAL) to support both their teaching and students’ learning. Based on the findings of this study, LAL training may give participants an opportunity to become more aware of how instruction and learning are interrelated, to develop assessment practices that go beyond summative principles, to consolidate and implement assessment instruments, to guide students through feedback, and to interpret assessment results in order to make decisions based on them (Herrera & Macías, 2015).

Limitations of the Study

One of the main limitations concerns the assessment principles of validity, reliability, and practicality. These principles were not examined in the speaking assessment activities and instruments implemented by each educator, nor in the criteria articulated for the respective students’ speaking assessments. This study focused on describing the teachers’ stated assessment approaches and the relationships between what they state they will do and what they actually do in practice. Further research may therefore delve into this aspect.

Further Research

This study focused on teachers’ assessment approaches regarding students’ speaking skill. Further research could explore the following questions: How do teachers’ assessment approaches to the speaking skill inform learners’ performance? What are learners’ perceptions of teachers’ approaches to speaking assessment? What implications for the institution can be derived from students’ assessment results?