Introduction

Recent years have witnessed calls for teachers to view classroom assessment as an inseparable part of the teaching and learning process and to use assessment data to enhance instruction and promote students’ learning (DeLuca et al., 2018; Shepard, 2013). In line with this shift in the conceptualization of assessment, it has been argued that teachers need to become competent in using a variety of methods to assess students’ learning, whether the assessment is employed to support learning through the provision of feedback (Lee, 2017) or to measure learning outcomes (Campbell, 2013). Despite repeated calls for teachers to capitalize on various assessment methods and skills (e.g., Taylor, 2013), research has generally shown that teachers lack adequate assessment proficiency, or what has come to be known as assessment literacy, to take advantage of assessment and use it to inform their instructional practice (DeLuca & Bellara, 2013; Fives & Barnes, 2020; Popham, 2009). In one of the early attempts to introduce the concept of assessment literacy, Stiggins (1995) defined it as teachers’ understanding of “the difference between sound and unsound assessment” (p. 240). Popham (2011) later defined assessment literacy as “an individual’s understandings of the fundamental assessment concepts and procedures deemed likely to influence educational decisions” (p. 265).

The term language assessment literacy (LAL) has recently appeared in the literature on assessment literacy owing to the distinctive features of language teaching and learning contexts (Inbar-Lourie, 2008; Levi & Inbar-Lourie, 2019). Although the concept of LAL is relatively new, it is a broad and still-developing construct in applied linguistics that has been conceptualized in various ways (Fulcher, 2012; Inbar-Lourie, 2008, 2017; Lan & Fan, 2019; Lee & Butler, 2020). Because LAL has become increasingly important as language teachers and other stakeholders face growing demands to use assessment data (Inbar-Lourie, 2013; Tsagari & Vogt, 2017), researchers have paid close attention to teachers’ LAL (e.g., DeLuca et al., 2018; Lam, 2019; Xu & Brown, 2017). Most of these studies have used multiple-choice or scenario-based scales to investigate preservice or in-service language teachers’ assessment knowledge, beliefs, and/or practice (e.g., Ölmezer-Öztürk & Aydin, 2018; Tajeddin et al., 2018). The scales used in these studies have been tightly aligned with the seven Standards for Teacher Competence in Educational Assessment of Students (American Federation of Teachers [AFT] et al., 1990). However, as Brookhart (2011) noted, the 1990 standards are outdated in that they do not reflect current conceptions of formative assessment knowledge and skills or current accountability concerns. Moreover, previous scales have only briefly touched on teachers’ classroom-based assessment literacy. According to Xu (2017), classroom assessment literacy refers to “teachers’ knowledge of assessment in general and of the contingent relationship between assessment, teaching, and learning, as well as abilities to conduct assessment in the classroom to optimize such contingency” (p. 219).
The present study, therefore, addresses this classroom-based assessment gap by developing a classroom-based language assessment literacy (CBLAL) scale whose items elicit realistic and meaningful data applicable to the classroom context. The study also uses the newly developed scale to explore Iranian English as a foreign language (EFL) teachers’ classroom-based language assessment knowledge and practice.

Literature Review

Conceptualization of Language Assessment Literacy

The term LAL has been conceptualized by many scholars in the past two decades (e.g., Fulcher, 2012; Inbar-Lourie, 2008; Pill & Harding, 2013; Taylor, 2013). LAL was defined by Inbar-Lourie (2008) as “having the capacity to ask and answer critical questions about the purpose for assessment, about the fitness of the tool being used, about testing conditions, and about what is going to happen on the basis of the test results” (p. 389). Fulcher (2012) defined LAL as “the knowledge, skills and abilities required to design, develop, maintain or evaluate, large-scale standardized and/or classroom-based tests, familiarity with test processes, and awareness of principles and concepts that guide and underpin practice, including ethics and codes of practice” (p. 125).

Taylor (2013) put forward a model of assessment competency and expertise for different stakeholder groups. Placing language teachers in an intermediate position between measurement specialists and the general public, Taylor argued that LAL is best defined in terms of the particular needs of each stakeholder group. Likewise, Pill and Harding (2013) rejected a dichotomous view of “literacy” versus “illiteracy,” arguing instead for viewing LAL as a continuum from “illiteracy” to “multidimensional literacy.” They contended that non-practitioners do not require assessment literacy at the “multidimensional” or “procedural” level; rather, it would be desirable for policy makers and other non-practitioners to attain a “functional” level of assessment literacy in order to deal with language tests.

Research on Language Assessment Literacy

The last two decades have witnessed an increasing number of studies investigating teachers’ self-described levels of assessment literacy (e.g., Lan & Fan, 2019; Vogt & Tsagari, 2014; Xu & Brown, 2017), approaches to assessment (e.g., DeLuca et al., 2018; DeLuca et al., 2019; Tajeddin et al., 2018), perceptions of language assessment (e.g., Tsagari & Vogt, 2017), and confidence in assessment use (e.g., Berry et al., 2019). For instance, Vogt and Tsagari (2014) explored the level of language testing and assessment (LTA) literacy of foreign language teachers from seven European countries through questionnaires and teacher interviews. They found that the teachers’ LTA literacy was not well developed. Xu and Brown (2017), using an adapted version of the Teacher Assessment Literacy Questionnaire developed by Plake et al. (1993), investigated 891 Chinese university English teachers’ assessment literacy levels and the effects of their demographic characteristics on assessment literacy performance. The findings revealed that the vast majority of the teachers had very basic to minimally acceptable competencies in certain dimensions of assessment literacy. Tajeddin et al. (2018) explored 26 novice and experienced language teachers’ knowledge and practices regarding speaking assessment purposes, criteria, and methods. The researchers concluded that, although the divergence between novice and experienced teachers’ knowledge and practice of assessment purposes was moderate, the experienced teachers’ assessment literacy for speaking was more consistent.

DeLuca et al. (2018) explored 404 Canadian and American teachers’ perceived skills in classroom assessment across career stages (i.e., teaching experience). They found that more experienced teachers, compared with less experienced teachers, reported greater skill in monitoring, analyzing, and communicating assessment results, as well as in assessment design, implementation, and feedback.

More recently, DeLuca et al. (2019) examined 453 novice teachers’ classroom assessment approaches using five assessment scenarios. The teachers were quite consistent in the learning principles they endorsed but differed somewhat in their actual classroom practice, indicating the situated nature of classroom assessment practice. In another study, Lan and Fan (2019) explored the LAL of 344 in-service Chinese EFL teachers. They found that the teachers’ classroom-based LAL was at the functional level, namely “sound understanding of basic terms and concepts” (Pill & Harding, 2013, p. 383). The researchers concluded that teacher education courses should equip teachers with the knowledge and skills necessary for conducting classroom-based assessment.

Considering the variety of conceptualizations of the term LAL and its intricacies, more studies must be carried out in local contexts (Inbar-Lourie, 2017) with a focus on language teachers’ perspectives (Lee & Butler, 2020) in order to help the field come to grips with the dynamics of the issue. Also, as the above literature review shows, we still have a limited understanding of language teachers’ classroom-based assessment literacy. Against this backdrop, the main purpose of this study is twofold: developing and validating a new CBLAL scale to assess language teachers’ perceived classroom-based assessment knowledge and practice, and looking into Iranian EFL teachers’ perceived classroom-based language assessment knowledge and practice. The following are the questions that guided this study:

Method

Participants

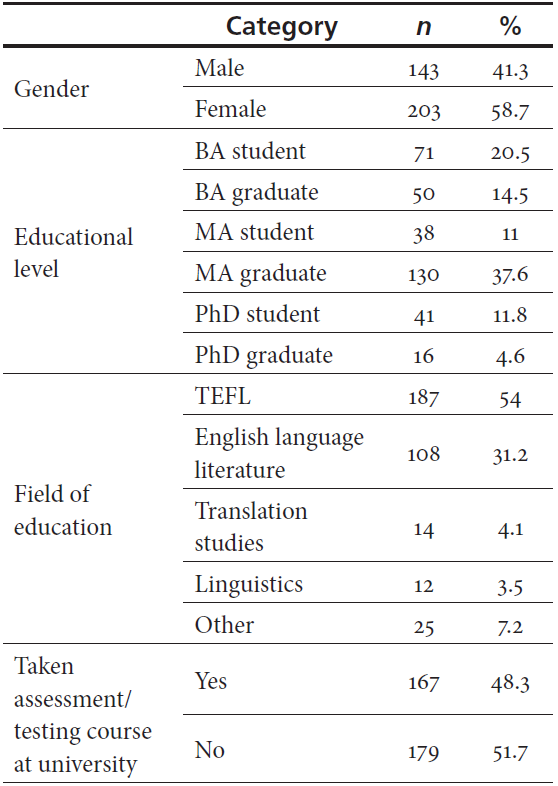

The participants in the initial piloting of the scale were 54 Iranian EFL teachers, 23 male (42.6%) and 31 female (57.4%). Although the pilot participants had a profile similar to that of the main-study participants, they did not take part in the main study. A total of 346 EFL teachers, 143 men (41.3%) and 203 women (58.7%), participated in the development and validation of the scale as well as in the investigation of EFL teachers’ classroom-based assessment literacy. All of the teachers were teaching general English (i.e., the four language skills in an integrated manner) to various levels and age groups in private language schools in Iran. In these language schools, teachers are required to follow a fixed syllabus based on well-known, communication-oriented international textbook series (Sadeghi & Richards, 2015). Supervisors in these schools commonly use written examinations and interviews to recruit qualified teachers and regularly observe their performance for promotion and career growth purposes (Sadeghi & Richards, 2015).

The participants’ ages ranged from 18 to 67, with a mean age of 32. The teachers were recruited through convenience sampling and participated voluntarily. More than half of them had majored in teaching EFL, and almost half had taken a language testing/assessment course at university (see Table 1).

Scale Development

The development of the CBLAL scale began with a review of previously validated assessment literacy scales in the literature (e.g., Fulcher, 2012; Mertler & Campbell, 2005; Plake et al., 1993; Zhang & Burry-Stock, 1994). Most previous studies of teachers’ assessment literacy had used the Teacher Assessment Literacy Questionnaire (Plake et al., 1993) or its adapted version, the Assessment Literacy Inventory (Mertler & Campbell, 2005). Both instruments include 35 items assessing teachers’ understanding of general testing and assessment concepts, tightly aligned with the seven Standards for Teacher Competence in Educational Assessment of Students (AFT et al., 1990).

To address recent conceptualizations of language teachers’ classroom-based assessment needs (Brookhart, 2011), we developed the CBLAL scale based on Xu and Brown’s (2016) six-component interrelated framework of teacher assessment literacy in practice (TALiP). The assessment knowledge base, which forms the foundation of the framework, was used to develop the scale. According to Xu and Brown, the teacher assessment knowledge base refers to “a core body of formal, systematic, and codified principles concerning good assessment practice” (p. 155). The key domains of this knowledge base are briefly defined below:

Disciplinary knowledge and pedagogical content knowledge: knowledge of the content and the general principles regarding how it is taught or learned.

Knowledge of assessment purposes, content, and methods: knowledge of the general objectives of assessment and the relevant assessment tasks and strategies.

Knowledge of grading: knowledge of rationale, methods, content, and criteria for grading and scoring students’ performance.

Knowledge of feedback: knowledge of the types and functions of various feedback strategies for enhancing learning.

Knowledge of assessment interpretation and communication: knowledge of effective interpretation of assessment results and how to communicate them to stakeholders.

Knowledge of student involvement in assessment: knowledge of the benefits and strategies of engaging students in the assessment process.

Knowledge of assessment ethics: knowledge of observing ethical and legal considerations (i.e., social justice) in the assessment process.

Because the present study sought to develop a language assessment-specific scale for pinpointing language teachers’ classroom-based assessment knowledge and practice, the first domain of the assessment knowledge base (i.e., disciplinary knowledge and pedagogical content knowledge) was excluded from the scale development process. The rationale was that an adequate understanding of the disciplinary content and of the general principles of how it is taught or learned is a prerequisite for all teachers, regardless of the content area they teach (Brookhart, 2011; Firoozi et al., 2019).

After reviewing existing assessment literacy scales, the researchers identified 25 assessment knowledge items (62.5% of the 40 knowledge items) that corresponded to the subcomponents of Xu and Brown’s framework (i.e., the assessment knowledge base). These items were borrowed and reworded to measure teachers’ (a) perceived classroom-based assessment knowledge and (b) perceived classroom-based assessment practice. According to Dörnyei (2003, p. 52), “borrowing questions from established questionnaires” is one of the sources that successful item designers rely on most. The authors then wrote 15 new assessment knowledge items (37.5%) to ensure an acceptable number of items for each subcomponent. Finally, 40 assessment practice items were developed to parallel the 40 assessment knowledge items.

The final draft of the CBLAL scale comprised a demographic section and two main sections. The demographic section consisted of open-ended and closed-ended items about the participants’ background. The first main section explored language teachers’ knowledge of classroom-based assessment through a pool of 40 items generated in line with the six components of Xu and Brown’s framework. These items asked teachers to rate their own assessment knowledge on a 4-point Likert scale: 1 (strongly disagree), 2 (slightly disagree), 3 (moderately agree), and 4 (strongly agree). The 40 items in the second main section targeted the same construct (i.e., the six components of Xu and Brown’s framework) but were reworded to probe teachers’ perceptions of their classroom-based assessment practices, using a 5-point Likert scale: 1 (never), 2 (rarely), 3 (sometimes), 4 (very often), and 5 (always).

Before the psychometric analysis, six teachers completed the scale to check the intelligibility of the items. After ambiguous and unintelligible items had been revised based on these teachers’ feedback, the researchers piloted the scale with 54 teachers to estimate its reliability using Cronbach’s alpha. To examine content validity, a panel of four instructors pursuing PhDs in applied linguistics reviewed the scale. In the main phase of the study, exploratory factor analysis (EFA) was performed to extract the major factors and item loadings. For this purpose, the scale was distributed among a large pool of language teachers both online (e.g., via email) and through personal contacts. In total, 346 teachers completed the scale, and their responses were subjected to EFA. To examine the status of language teachers’ classroom-based assessment knowledge and practice, descriptive statistics were computed from the teachers’ responses to the CBLAL scale.
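Cronbach’s alpha, used for the pilot and again for the full scales, compares the sum of the item variances with the variance of the total score: α = k/(k − 1) × (1 − Σσᵢ²/σₓ²), where k is the number of items. The Python sketch below is a minimal illustration only, assuming a respondents × items matrix with no missing responses and sample variances (ddof = 1); the function name `cronbach_alpha` and the demo responses are hypothetical, not the authors’ data or analysis script.

```python
import numpy as np

def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items matrix of Likert scores.

    Assumes complete data (no missing responses) and sample variances.
    """
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]                          # number of items
    item_vars = scores.var(axis=0, ddof=1)       # variance of each item
    total_var = scores.sum(axis=1).var(ddof=1)   # variance of summed scale scores
    return (k / (k - 1)) * (1.0 - item_vars.sum() / total_var)

# Hypothetical responses of four teachers to two items
demo = [[1, 2], [2, 1], [3, 4], [4, 3]]
print(round(cronbach_alpha(demo), 3))  # 0.75
```

When every item is a perfect copy of the others the function returns 1, and it falls as items share less variance, which is why the values of .95 and .94 reported for the full scales indicate high internal consistency.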

Results

After validating the CBLAL scale and administering it to Iranian EFL teachers, the researchers examined the status of the teachers’ classroom-based assessment knowledge and practice. The findings are presented in the following sections.

Exploratory Factor Analysis

The initial 80 CBLAL items, comprising 40 classroom-based assessment knowledge items on a 4-point Likert scale and 40 classroom-based assessment practice items on a 5-point Likert scale, were subjected to EFA, specifically principal axis factoring (PAF) with direct Oblimin rotation, with each set of 40 items analyzed separately. The suitability of the data for factor analysis was examined before PAF was performed. First, the normality of the data distribution was checked using the skewness and kurtosis values of the items. All values fell between -2 and +2, satisfying the assumption of normality (Tabachnick & Fidell, 2013). Second, the Kaiser-Meyer-Olkin (KMO) measure was used to estimate sampling adequacy. As shown in Table 2, the KMO value was .916 for the assessment knowledge items and .922 for the assessment practice items, exceeding the recommended minimum of .6 (Pallant, 2016; Tabachnick & Fidell, 2013). Further, Bartlett’s test of sphericity was statistically significant for both measures (Table 2), indicating that the correlations between items were sufficiently large for PAF.
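The ±2 screening rule for skewness and kurtosis can be illustrated with a short sketch. It assumes moment-based skewness and excess kurtosis (both 0 for a normal distribution); SPSS applies small-sample corrections, so its values will differ slightly from these. The helper names `skew_kurtosis` and `items_outside_range` and the toy data are hypothetical.

```python
import numpy as np

def skew_kurtosis(x):
    """Moment-based skewness and excess kurtosis of one item's responses."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    m2 = np.mean(d ** 2)
    m3 = np.mean(d ** 3)
    m4 = np.mean(d ** 4)
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0  # 0 for a normal distribution
    return skewness, excess_kurtosis

def items_outside_range(scores, bound=2.0):
    """Return 0-based column indices whose skewness or kurtosis exceeds +/- bound."""
    scores = np.asarray(scores, dtype=float)
    flagged = []
    for j in range(scores.shape[1]):
        s, k = skew_kurtosis(scores[:, j])
        if abs(s) > bound or abs(k) > bound:
            flagged.append(j)
    return flagged

# Toy data: rows are respondents, columns are two hypothetical items
demo = np.column_stack([
    [1, 2, 3, 4, 1, 2, 3, 4, 1, 2],  # roughly symmetric item
    [1, 1, 1, 1, 1, 1, 1, 1, 1, 4],  # strongly skewed item
])
print(items_outside_range(demo))  # [1]
```

An empty list for a real 346 × 40 response matrix would correspond to the outcome reported above, in which no item fell outside the ±2 range.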

Table 2 Kaiser-Meyer-Olkin and Bartlett’s Test

| Measure | Statistic | Assessment knowledge | Assessment practice |
|---|---|---|---|
| Kaiser-Meyer-Olkin measure of sampling adequacy | | .916 | .922 |
| Bartlett’s test of sphericity | Approx. Chi-square | 9367.020 | 9664.243 |
| | df | 780 | 780 |
| | Sig. | .000 | .000 |
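Both statistics in Table 2 can be computed from the correlation matrix R of p items answered by n respondents. KMO compares the squared correlations with the squared partial correlations derived from R⁻¹, and Bartlett’s statistic is χ² = −[(n − 1) − (2p + 5)/6] ln|R| with p(p − 1)/2 degrees of freedom; for p = 40 this gives the 780 df shown in Table 2. The sketch below assumes complete data, uses simulated one-factor responses rather than the study’s data, and returns only the statistic and df; a p-value could then be obtained with `scipy.stats.chi2.sf(chi2, df)`. The function names are hypothetical.

```python
import numpy as np

def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    R = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    inv_R = np.linalg.inv(R)
    d = np.sqrt(np.diag(inv_R))
    partial = -inv_R / np.outer(d, d)       # partial correlations from the inverse
    off = ~np.eye(R.shape[0], dtype=bool)   # mask selecting off-diagonal cells
    r2 = np.sum(R[off] ** 2)
    p2 = np.sum(partial[off] ** 2)
    return r2 / (r2 + p2)

def bartlett_sphericity(data):
    """Bartlett's chi-square statistic and degrees of freedom."""
    data = np.asarray(data, dtype=float)
    n, p = data.shape
    R = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(R)        # ln|R|, numerically stable
    chi2 = -((n - 1) - (2 * p + 5) / 6.0) * logdet
    df = p * (p - 1) // 2
    return chi2, df

# Simulated one-factor data: 500 respondents, 6 items (hypothetical)
rng = np.random.default_rng(1)
factor = rng.standard_normal((500, 1))
data = 0.8 * factor + 0.6 * rng.standard_normal((500, 6))
chi2, df = bartlett_sphericity(data)
print(round(kmo(data), 2), round(chi2, 1), df)
```

Strongly intercorrelated items push KMO toward 1 and make ln|R| strongly negative, which inflates χ²; that is the pattern behind the values of .916/.922 and the large chi-square statistics in Table 2.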

PAF on the assessment knowledge items initially yielded a 7-factor solution with eigenvalues exceeding 1, explaining 40.4%, 5.8%, 4.7%, 4.2%, 3.1%, 2.8%, and 2.6% of the variance, respectively. However, inspection of the scree plot and a parallel analysis showed only four factors with eigenvalues exceeding the corresponding criterion values for a randomly generated data matrix of the same size (40 variables × 346 respondents; Pallant, 2016). The final 4-factor solution for the assessment knowledge measure explained a total of 55.3% of the variance. Cronbach’s alpha for the assessment knowledge scale as a whole was .95.
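Parallel analysis (Horn’s method) retains a factor only while its observed eigenvalue exceeds the corresponding eigenvalue obtained from random data of the same size. The sketch below shows one common variant under stated assumptions: it uses eigenvalues of the unreduced correlation matrix and the mean of 100 random replications as the criterion (other implementations use the 95th percentile instead). It is not necessarily the exact procedure the authors ran, and the function name and simulated two-factor data are hypothetical.

```python
import numpy as np

def parallel_analysis(data, n_iter=100, seed=0):
    """Horn's parallel analysis on correlation-matrix eigenvalues.

    Returns the number of factors to retain, the observed eigenvalues,
    and the criterion (mean eigenvalues of random data of the same size).
    """
    data = np.asarray(data, dtype=float)
    n, p = data.shape
    observed = np.sort(np.linalg.eigvalsh(np.corrcoef(data, rowvar=False)))[::-1]

    rng = np.random.default_rng(seed)
    random_eigs = np.empty((n_iter, p))
    for i in range(n_iter):
        sim = rng.standard_normal((n, p))  # uncorrelated data, same n and p
        random_eigs[i] = np.sort(np.linalg.eigvalsh(np.corrcoef(sim, rowvar=False)))[::-1]
    criterion = random_eigs.mean(axis=0)

    # Retain leading factors while the observed eigenvalue beats the criterion
    n_factors = 0
    for obs, crit in zip(observed, criterion):
        if obs <= crit:
            break
        n_factors += 1
    return n_factors, observed, criterion

# Simulated example: 300 respondents, 8 items, two independent factors
rng = np.random.default_rng(42)
factors = rng.standard_normal((300, 2))
weights = np.zeros((2, 8))
weights[0, :4] = 0.8  # items 1-4 load on factor 1
weights[1, 4:] = 0.8  # items 5-8 load on factor 2
data = factors @ weights + 0.6 * rng.standard_normal((300, 8))
k, observed, criterion = parallel_analysis(data)
print(k)  # 2
```

This also illustrates why the eigenvalue-greater-than-1 rule can over-extract: with random data, the first few eigenvalues already exceed 1 by chance, so the comparison against random-data eigenvalues is the stricter criterion.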

For the assessment practice items, PAF likewise yielded an initial 7-factor solution with eigenvalues exceeding 1, explaining 40.6%, 7.2%, 4.5%, 4.0%, 3.3%, 2.9%, and 2.5% of the variance, respectively. Inspection of the scree plot and parallel analysis, however, supported a 4-factor solution for the assessment practice scale, which explained a total of 56.5% of the variance. Cronbach’s alpha for the assessment practice scale as a whole was .94.
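Both solutions were extracted with PAF, which replaces the 1s on the diagonal of the correlation matrix with communality estimates and re-extracts factors until those estimates stabilize. A minimal unrotated sketch follows, assuming squared multiple correlations as starting communalities and a fixed convergence tolerance; it omits the direct Oblimin rotation used in the study, and the function name is hypothetical. The demo uses an exact one-factor correlation matrix, so the recovered loadings should equal .8 (the sign of a factor is arbitrary, hence the absolute value).

```python
import numpy as np

def principal_axis_factoring(R, n_factors, max_iter=100, tol=1e-6):
    """Unrotated principal axis factoring of a correlation matrix R."""
    R = np.asarray(R, dtype=float)
    # Initial communalities: squared multiple correlations (SMC)
    h2 = 1.0 - 1.0 / np.diag(np.linalg.inv(R))
    for _ in range(max_iter):
        reduced = R.copy()
        np.fill_diagonal(reduced, h2)             # replace 1s with communalities
        vals, vecs = np.linalg.eigh(reduced)      # eigenvalues in ascending order
        top = np.argsort(vals)[::-1][:n_factors]  # indices of the largest ones
        loadings = vecs[:, top] * np.sqrt(np.clip(vals[top], 0.0, None))
        new_h2 = np.sum(loadings ** 2, axis=1)    # updated communalities
        converged = np.max(np.abs(new_h2 - h2)) < tol
        h2 = new_h2
        if converged:
            break
    return loadings, h2

# Population correlation matrix of six items that all load .8 on one factor
lam = np.full(6, 0.8)
R = np.outer(lam, lam)
np.fill_diagonal(R, 1.0)
L, h2 = principal_axis_factoring(R, n_factors=1)
print(np.round(np.abs(L[:, 0]), 3))  # [0.8 0.8 0.8 0.8 0.8 0.8]
```

In a full analysis the unrotated loadings would then be rotated (here, with direct Oblimin) before interpretation; the rotation step is deliberately left out of this sketch.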

To aid interpretation of the extracted factors, the solutions were rotated using direct Oblimin rotation, and only loadings of .4 or above were interpreted, as suggested by Field (2013). Items 25 and 26 were removed from the assessment knowledge scale because of their low loadings (see Table 3), and Item 21 was removed because it cross-loaded on two factors. In the assessment practice pattern matrix (Table 4), Items 14, 15, 16, and 40 were suppressed in the SPSS output because of their low loadings and were excluded from the factor solution.
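The retention rule described above (interpret loadings of .4 or above; drop items with no salient loading or with salient loadings on more than one factor) can be expressed as a small filter over a pattern matrix. This is a sketch with hypothetical item labels and invented loadings, not the study’s pattern matrix; absolute values are compared because several loadings in the study are negative.

```python
import numpy as np

def screen_items(pattern, item_ids, cutoff=0.4):
    """Classify items by their salient (|loading| >= cutoff) pattern loadings.

    Returns (kept, low, cross): kept maps item -> 1-based factor number,
    low lists items with no salient loading, cross lists cross-loading items.
    """
    pattern = np.asarray(pattern, dtype=float)
    kept, low, cross = {}, [], []
    for item, row in zip(item_ids, pattern):
        salient = np.flatnonzero(np.abs(row) >= cutoff)  # sign is ignored
        if salient.size == 0:
            low.append(item)                   # no salient loading: drop
        elif salient.size > 1:
            cross.append(item)                 # salient on 2+ factors: drop
        else:
            kept[item] = int(salient[0]) + 1   # 1-based factor number
    return kept, low, cross

# Hypothetical 3-item x 4-factor pattern matrix (values invented)
pattern = [
    [0.05, 0.10, 0.02, -0.69],
    [0.41, 0.08, 0.03, -0.47],
    [0.21, 0.15, 0.12, -0.30],
]
kept, low, cross = screen_items(pattern, ["A", "B", "C"])
print(kept, low, cross)  # {'A': 4} ['C'] ['B']
```

Item B mirrors the situation of a cross-loading item such as Item 21, whose loadings of .411 and -.465 both pass the .4 cutoff.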

Table 3 Pattern Matrix of the Extracted Factors for Assessment Knowledge

| Item # | Item | Factor | Loading |
|---|---|---|---|
| 1 | I am familiar with using classroom tests (e.g., quizzes) to pinpoint students’ strengths and weaknesses to plan further instruction. | 1 | .875 |
| 2 | I know how to use classroom tests for the purpose of assigning grades to students. | 1 | .766 |
| 3 | I know how to use classroom tests to track students’ progress during the course. | 1 | .603 |
| 4 | I am knowledgeable about using classroom tests for the purpose of planning future instruction. | 1 | .721 |
| 5 | I have sufficient knowledge to use classroom tests to help me divide students into different groups for instructional purposes. | 1 | .704 |
| 6 | I know how to use various types of classroom tests (e.g., speaking tests or grammar quizzes) depending on the intended course objectives. | 1 | .770 |
| 7 | I can adapt tests found in teachers’ guidebooks to fit intended course objectives. | 1 | .577 |
| 8 | I know how to use a detailed description of intended course objectives to develop classroom tests. | 1 | .754 |
| 9 | I am familiar with using different types of classroom tests to assign grades to students. | 1 | .636 |
| 10 | I maintain detailed records of each student’s classroom test results to help me assign grades. | 1 | .497 |
| 11 | I know how to grade each student’s classroom test performance against other students’ test performance. | 1 | .623 |
| 12 | I can develop rating scales to help me grade students’ classroom test performance. | 1 | .703 |
| 13 | I have sufficient knowledge about grading students’ classroom test performance against certain achievement goals. | 1 | .742 |
| 14 | I know how to consult with experienced colleagues about rating scales they use to grade students’ classroom test performance. | 1 | .555 |
| 15 | I know how to consult with my colleagues about assigning grades to students. | 1 | .486 |
| 33 | I recognize students’ cultural diversity and eliminate offensive language and content of classroom tests. | 2 | .745 |
| 34 | I know how to match the contents of my classroom tests with the contents of my teaching and intended course objectives. | 2 | .464 |
| 35 | I know how to use the same classroom tests and the same rating scales for all students to avoid bias. | 2 | .631 |
| 36 | I observe assessment fairness by avoiding giving lower grades to students from lower socioeconomic status. | 2 | .497 |
| 37 | I am knowledgeable about how to help students with learning disability during classroom tests. | 2 | .586 |
| 38 | I know how to avoid using new items in my classroom tests which did not appear on the course syllabus. | 2 | .440 |
| 39 | I know how to inform students of the test item formats (e.g., multiple choice or essay) prior to classroom tests. | 2 | .417 |
| 40 | I know how to announce students’ classroom test scores individually, rather than publicly, to avoid making them get embarrassed. | 2 | .459 |
| 27 | I encourage students to assess their own classroom test performance to enhance their learning. | 3 | .472 |
| 28 | I help my students learn how to grade their own classroom test performance. | 3 | .446 |
| 29 | I can ask top students in my class to help me assess other students’ classroom test performance. | 3 | .811 |
| 30 | I know how to encourage students to provide their classmates with feedback on their classroom test performance. | 3 | .740 |
| 31 | I know how to give students clear rating scales by which they can assess each other’s classroom test performance. | 3 | .635 |
| 32 | I know how to explain to students the rating scales I apply to grade their classroom test performance. | 3 | .464 |
| 16 | I provide students with regular feedback on their classroom test performance. | 4 | -.529 |
| 17 | I provide students with specific, practical suggestions to help them improve their test performance. | 4 | -.690 |
| 18 | I praise students for their good performance on classroom tests. | 4 | -.688 |
| 19 | I know how to remind students of their strengths and weaknesses in their classroom test performance to help them improve their learning. | 4 | -.620 |
| 20 | I know how to encourage my students to improve their classroom test performance according to the feedback provided by me. | 4 | -.689 |
| 21 | I use classroom test results to determine if students have met course objectives. | Cross-loading (removed) | .411, -.465 |
| 22 | I know how to use classroom test results to decide whether students can proceed to the next stage of learning. | 4 | -.606 |
| 23 | I can construct an accurate report about students’ classroom test performance to communicate it to both parents and/or institute managers. | 4 | -.466 |
| 24 | I speak understandably with students about the meaning of the report card grades to help them improve their test performance. | 4 | -.597 |

The clustering of the assessment knowledge items in the pattern matrix (Table 3) suggested that factor one, containing 15 items, represented “Knowledge of Assessment Use and Grading.” These items elicit teachers’ familiarity with the purposes of classroom tests and with choosing appropriate classroom tests to fit intended course objectives. Factor one also probes teachers’ knowledge of grading students’ classroom test performance and of seeking assistance from experienced colleagues in this regard. Factor two comprised 8 items representing “Knowledge of Assessment Ethics,” which elicit teachers’ knowledge of how to observe assessment fairness and avoid assessment bias in classroom tests. Factor three comprised 6 items tapping “Knowledge of Student Involvement in Assessment”; these items examine teachers’ knowledge of strategies for encouraging students to assess their own and their peers’ classroom test performance. Finally, factor four consisted of 8 items representing “Knowledge of Feedback and Assessment Interpretation.” These items inquire into teachers’ knowledge of providing students with regular feedback (e.g., practical suggestions) to help them improve their test performance, as well as their familiarity with reporting and communicating students’ classroom test performance to parents and/or school managers.

Table 4 Pattern Matrix of the Extracted Factors for Assessment Practice

| Item # | Item | Factor | Loading |
|---|---|---|---|
| 1 | Using classroom tests (e.g., quizzes) to pinpoint students’ strengths and weaknesses to plan further instruction. | 1 | .790 |
| 2 | Using classroom tests for the purpose of assigning grades to students. | 1 | .700 |
| 3 | Using classroom tests to track students’ progress during the course. | 1 | .739 |
| 4 | Using classroom tests for the purpose of planning future instruction. | 1 | .786 |
| 5 | Using classroom tests to help me divide students into different groups for instructional purposes. | 1 | .620 |
| 6 | Using various types of classroom tests (e.g., speaking tests or grammar quizzes) depending on the intended course objectives. | 1 | .648 |
| 7 | Adapting tests found in teachers’ guidebooks to fit intended course objectives. | 1 | .558 |
| 8 | Using a detailed description of intended course objectives to develop classroom tests. | 1 | .636 |
| 9 | Using different types of classroom tests to assign grades to students. | 1 | .560 |
| 10 | Maintaining detailed records of each student’s classroom test results to help me assign grades. | 1 | .461 |
| 11 | Grading each student’s classroom test performance against other students’ test performance. | 1 | .621 |
| 12 | Developing rating scales to help me grade students’ classroom test performance. | 1 | .698 |
| 13 | Grading students’ classroom test performance against certain achievement goals. | 1 | .574 |
| 17 | Providing students with specific, practical suggestions to help them improve their test performance. | 2 | .429 |
| 32 | Explaining to students the rating scales I apply to grade their classroom test performance. | 2 | .497 |
| 33 | Recognizing students’ cultural diversity and eliminating offensive language and content of classroom tests. | 2 | .724 |
| 34 | Matching the contents of my classroom tests with the contents of my teaching and intended course objectives. | 2 | .591 |
| 35 | Using the same classroom tests and the same rating scales for all students to avoid bias. | 2 | .752 |
| 36 | Observing assessment fairness by avoiding giving lower grades to students from lower socioeconomic status. | 2 | .582 |
| 37 | Helping students with learning disability during classroom tests. | 2 | .546 |
| 38 | Avoiding using new items in my classroom tests which did not appear on the course syllabus. | 2 | .758 |
| 39 | Informing students of the test item formats (e.g., multiple choice or essay) prior to classroom tests. | 2 | .651 |
| 26 | Participating in discussion with institute managers about important changes to the curriculum based on students’ classroom test results. | 3 | .477 |
| 27 | Encouraging students to assess their own classroom test performance to enhance their learning. | 3 | .587 |
| 28 | Helping my students learn how to grade their own classroom test performance. | 3 | .729 |
| 29 | Asking top students in my class to help me assess other students’ classroom test performance. | 3 | .803 |
| 30 | Encouraging students to provide their classmates with feedback on their classroom test performance. | 3 | .860 |
| 31 | Giving students clear rating scales by which they can assess each other’s classroom test performance. | 3 | .771 |
| 18 | Praising students for their good performance on classroom tests. | 4 | -.586 |
| 19 | Reminding students of their strengths and weaknesses in their classroom test performance to help them improve their learning. | 4 | -.723 |
| 20 | Encouraging my students to improve their classroom test performance according to the feedback provided by me. | 4 | -.775 |
| 21 | Using classroom test results to determine if students have met course objectives. | 4 | -.422 |
| 22 | Using classroom test results to decide whether students can proceed to the next stage of learning. | 4 | -.507 |
| 23 | Constructing an accurate report about students’ classroom test performance to communicate it to both parents and/or institute managers. | 4 | -.412 |
| 24 | Speaking understandably with students about the meaning of the report card grades to help them improve their test performance. | 4 | -.469 |
| 25 | Speaking understandably with parents, if needed, about the decisions made or recommended based on classroom test results. | 4 | -.507 |

As for the assessment practice items, the clustering in the pattern matrix (Table 4) suggested that factor one, comprising 13 items, represented “Assessment Purpose and Grading.” It elicits how often teachers use classroom tests to track students’ progress during the course, to plan future instruction, and to assign grades. Factor two, consisting of 9 items, represented “Assessment Ethics”; its items inquire into observing assessment fairness and avoiding assessment bias in classroom tests. Factor three, comprising 6 items, represented “Student Involvement in Assessment” and explores how often teachers help students learn to grade their own and their peers’ classroom test performance. Finally, factor four, with 8 items, represented “Feedback and Assessment Interpretation and Communication.” Its items elicit how often teachers remind students of their strengths and weaknesses in their classroom test performance and use classroom test results for decision-making purposes.

Overall, the retained items of both measures loaded on four thematic factors: 37 items for classroom-based assessment knowledge and 36 items for classroom-based assessment practice.

Teachers’ Classroom-Based Assessment Knowledge and Practice

The newly developed CBLAL scale was then used to probe Iranian EFL teachers’ knowledge and practice with respect to the four classroom-based assessment factors identified above, which build on Xu and Brown’s (2016) teacher assessment knowledge base. Table 5 presents the percentages of the teachers’ responses on the classroom-based assessment knowledge factors.

Table 5 Mean and Response Percentages of Classroom-Based Assessment Knowledge Factors

| Factors | Strongly disagree | Slightly disagree | Moderately agree | Strongly agree | Mean | SD |
|---|---|---|---|---|---|---|
| Knowledge of Assessment Use and Grading | 3.93% | 19.47% | 41.25% | 35.35% | 3.08 | 0.82 |
| Knowledge of Assessment Ethics | 2.60% | 14.93% | 34.55% | 47.93% | 3.27 | 0.78 |
| Knowledge of Student Involvement in Assessment | 6.73% | 22.18% | 39.93% | 31.15% | 2.95 | 0.87 |
| Knowledge of Feedback and Assessment Interpretation | 0.95% | 10.99% | 35.69% | 52.38% | 3.39 | 0.69 |

As Table 5 shows, the teachers in the present study reported being knowledgeable about classroom assessment by moderately or strongly agreeing with the items. More specifically, about 77% of the teachers reported being knowledgeable about the uses of assessment and grading procedures in language classrooms. Regarding assessment ethics, around 82% of the teachers believed that they were knowledgeable about ethical considerations in classroom assessment. As for student involvement in assessment, 71% believed they knew how to encourage students to assess their own and their peers’ classroom performance. Finally, regarding feedback and assessment interpretation, 88% believed that they knew how to provide accurate feedback and report on students’ classroom performance.
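The combined percentages reported above are sums of the two agreement categories in Table 5. The minimal Python sketch below reproduces that arithmetic from the table’s rounded percentages. It also approximates each factor mean, assuming that the four response options were coded 1 (strongly disagree) to 4 (strongly agree); this coding is an assumption, not stated in the source. The percentages are rounded, and the reported means were presumably computed from raw responses. For both reasons, the reconstructed means may differ from Table 5 in the second decimal place.

```python
# Response percentages transcribed from Table 5, in scale order:
# Strongly disagree, Slightly disagree, Moderately agree, Strongly agree.
TABLE_5 = {
    "Knowledge of Assessment Use and Grading": [3.93, 19.47, 41.25, 35.35],
    "Knowledge of Assessment Ethics": [2.60, 14.93, 34.55, 47.93],
    "Knowledge of Student Involvement in Assessment": [6.73, 22.18, 39.93, 31.15],
    "Knowledge of Feedback and Assessment Interpretation": [0.95, 10.99, 35.69, 52.38],
}


def agreement_share(pcts):
    """Combined percentage of 'Moderately agree' and 'Strongly agree'."""
    return pcts[2] + pcts[3]


def approximate_mean(pcts):
    """Approximate factor mean, ASSUMING options are coded 1 to 4.

    Dividing by the row total compensates for rows that sum to 99.99 or
    100.01 because of rounding.
    """
    return sum(code * p for code, p in enumerate(pcts, start=1)) / sum(pcts)


for factor, pcts in TABLE_5.items():
    print(f"{factor}: agree = {agreement_share(pcts):.2f}%, "
          f"mean ~ {approximate_mean(pcts):.2f}")

# Unweighted average of the four agreement shares.
average_agreement = sum(agreement_share(p) for p in TABLE_5.values()) / len(TABLE_5)
print(f"Average agreement across the four knowledge factors: {average_agreement:.1f}%")
```

The agreement shares come out at 76.60%, 82.48%, 71.08%, and 88.07%. Their unweighted average is about 79.6%, in line with the “around 80%” figure cited in the Discussion.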

As for the teachers’ classroom-based assessment practice, Table 6 presents the percentages of their responses on a 5-point Likert scale.

Table 6 Mean and Response Percentages of Classroom-Based Assessment Practice Factors

| Factors | Never | Rarely | Sometimes | Very often | Always | Mean | SD |
|---|---|---|---|---|---|---|---|
| Assessment Purpose and Grading | 4.03% | 10.54% | 24.08% | 37.22% | 24.13% | 3.66 | 1.05 |
| Assessment Ethics | 2.73% | 6.10% | 19.33% | 34.19% | 37.64% | 3.97 | 1.01 |
| Student Involvement in Assessment | 7.98% | 13.63% | 26.90% | 31.50% | 19.98% | 3.41 | 1.15 |
| Feedback and Assessment Interpretation and Communication | 1.98% | 5.50% | 19.90% | 37.06% | 35.56% | 3.98 | 0.94 |

Table 6 shows that around 61% of the teachers reported very often or always using classroom assessment to grade students’ performance and to inform future instruction. Around 72% reported very often or always considering ethical issues in their everyday classroom assessment tasks. As for student involvement in assessment, just over half (51%) of the teachers reported very often or always helping students learn how to grade their own classroom test performance. Finally, as for feedback and assessment interpretation and communication, around 73% reported very often or always providing constructive feedback in their classes to help students set goals for their future success.
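The practice percentages above combine the “Very often” and “Always” columns of Table 6. The minimal Python sketch below performs that computation. It also approximates each factor mean under an assumed coding of 1 (never) to 5 (always); this coding is not stated in the source. Because the inputs are rounded percentages, the reconstructed means may deviate slightly from those reported in Table 6.

```python
# Response percentages transcribed from Table 6, in scale order:
# Never, Rarely, Sometimes, Very often, Always.
TABLE_6 = {
    "Assessment Purpose and Grading": [4.03, 10.54, 24.08, 37.22, 24.13],
    "Assessment Ethics": [2.73, 6.10, 19.33, 34.19, 37.64],
    "Student Involvement in Assessment": [7.98, 13.63, 26.90, 31.50, 19.98],
    "Feedback and Assessment Interpretation and Communication": [1.98, 5.50, 19.90, 37.06, 35.56],
}


def top_two_share(pcts):
    """Combined percentage of the two highest-frequency options (Very often + Always)."""
    return pcts[-2] + pcts[-1]


def approximate_mean(pcts):
    """Approximate factor mean, ASSUMING options are coded 1 (Never) to 5 (Always).

    Dividing by the row total compensates for rows that sum to 99.99
    because of rounding.
    """
    return sum(code * p for code, p in enumerate(pcts, start=1)) / sum(pcts)


for factor, pcts in TABLE_6.items():
    print(f"{factor}: very often/always = {top_two_share(pcts):.2f}%, "
          f"mean ~ {approximate_mean(pcts):.2f}")

# Unweighted average of the four top-two shares.
average_share = sum(top_two_share(p) for p in TABLE_6.values()) / len(TABLE_6)
print(f"Average across the four practice factors: {average_share:.1f}%")
```

The top-two shares are 61.35%, 71.83%, 51.48%, and 72.62%. They average roughly 64%, in line with the “around 65%” figure cited in the Discussion.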

Discussion

The present study sought to develop and validate a new scale to tap teachers’ classroom-based assessment knowledge and practice. To do so, a total of 40 items on classroom-based assessment knowledge and a corresponding set of 40 classroom-based assessment practice items were developed and subjected to EFA. The EFA revealed that the six factors of Xu and Brown’s (2016) teacher assessment knowledge base collapsed into four factors in the context of the present study. The items on the scale clustered around the following themes: (a) purposes of assessment and grading, (b) assessment ethics, (c) student involvement in assessment, and (d) feedback and assessment interpretation. One possible explanation is that, because a large number of students at private language schools in Iran prepare for international examinations such as IELTS (Sadeghi & Richards, 2015), teachers’ view of assessment is largely quantitative (i.e., grade-based), and they see grading as the principal purpose of assessment procedures. This may explain why the items on assessment purpose and grading loaded together in the EFA.

Moreover, knowledge of assessment feedback and knowledge of assessment communication and interpretation were found to be interrelated in the context of the study. In addition, the “feedback and assessment interpretation” factor showed a high negative correlation with the other three factors. One possible explanation is that teachers regard feedback and interpretation as part of “good” teaching practice rather than good assessment (Berry et al., 2019). In other words, teachers view providing feedback as a teaching mechanism that helps students notice their strengths and weaknesses and thereby facilitates their learning. In the literature, however, such a perspective is framed as “assessment for learning” (Lee, 2017).

Having validated the scale, the researchers investigated the status of Iranian EFL teachers’ classroom-based assessment knowledge and practice. Most participants (around 80%) reported being knowledgeable, to varying extents, about classroom-based assessment by moderately or strongly agreeing with the items on the four factors. Likewise, most teachers (around 65%) reported practicing classroom-based assessment frequently (very often or always) in terms of the four classroom-based assessment practice factors. These findings corroborate those of Crusan et al. (2016), in which teachers reported being familiar with writing assessment concepts and using various assessment procedures in their classes. The findings, however, run counter to those of Lan and Fan (2019), Tsagari and Vogt (2017), Vogt and Tsagari (2014), and Xu and Brown (2017). For instance, Vogt and Tsagari (2014), exploring the language testing and assessment (LTA) literacy of European teachers, concluded that the teachers’ LTA literacy was not well developed, which was attributed to a lack of training in assessment. Similarly, Lan and Fan (2019), investigating in-service Chinese EFL teachers’ classroom-based LAL, observed that the teachers lacked sufficient procedural and conceptual LAL for conducting classroom-based language assessment.

Language teachers’ high levels of self-reported assessment knowledge and practice in the present study might also be attributed to demographic (i.e., educational level) and affective (i.e., motivation) variables. Since many teachers in Iranian private language schools hold an MA or PhD in teaching EFL, they may be familiar with some of the recent developments in the use of language assessment in classrooms (Sadeghi & Richards, 2015). Also, because private language school teachers face relatively fewer “meso-level” (i.e., school policy) constraints (Fulmer et al., 2015) and enjoy considerably more autonomy (i.e., space and support) in their teaching context (Lam, 2019), they have greater opportunities to develop practical assessment knowledge and to try out renewed conceptualizations of assessment in their classes.

The teachers’ responses to the open-ended questions in the first section of the CBLAL scale (i.e., demographic information) showed that more than half of the participants (51.7%) had not taken any course with a particular focus on assessment. All of the remaining teachers (48.3%) reported having taken such a course at university, suggesting that the teacher training courses (TTCs) offered at private language schools do not play a prominent role in shaping teachers’ assessment literacy. Further supporting this contention, the open-ended responses revealed that the topics covered in university language testing courses (e.g., designing test items, test reliability and validation, and testing language skills and components) did not prepare the teachers for the dynamics of classroom assessment. The open-ended responses also showed that most teachers (around 80%) favored allocating more time to the language assessment component of their preservice and/or in-service TTCs. They conceived of assessment as an essential component of the teaching process without which instruction would not lead to desirable outcomes. In particular, they unanimously stressed the need to include a practical classroom-based assessment component in preservice and in-service teacher education courses.

Conclusion

The principal purpose of the present study was to develop and validate a new CBLAL scale and then to explore the status of Iranian EFL teachers’ classroom-based assessment knowledge and practice. The teachers’ assessment knowledge base was found to comprise four main themes. Private language school teachers’ responses to the CBLAL scale also revealed that they rated themselves as moderately assessment literate. However, the open-ended section of the scale painted a different picture: the majority of teachers expressed a need for a specific course on language assessment, which calls for cautious interpretation of the study’s findings.

The findings imply that, in the absence of an assessment for learning component in language teacher education courses that equips teachers with recent updates on classroom assessment, teachers would resort to their past experiences as students, or what Vogt and Tsagari (2014) call “testing as you were tested.” Teacher education courses should therefore adopt Vygotsky’s sociocultural theory, particularly his concept of the “zone of proximal development.” With its emphasis on the contribution of mediation and dialogic interaction to teachers’ professional development (Johnson, 2009), a sociocultural perspective on teacher education can provide opportunities for teachers to “articulate and synthesize their perspectives by drawing together assessment theory, terminology, and experience” (DeLuca et al., 2013, p. 133). By externalizing their current understanding of assessment and then reconceptualizing and recontextualizing it, teachers can discover their own assessment mindset orientation and develop alternative ways of engaging in the activities associated with assessment (Coombs et al., 2020; DeLuca et al., 2019; Johnson, 2009).

The findings have important implications for language teacher education and professional development: by identifying teachers’ classroom-based assessment needs and addressing them in teacher education pedagogies, teachers’ conceptions and practices can be transformed (Loughran, 2006). The findings also have implications for materials developers, who are responsible for providing and sequencing the content of teaching materials. By becoming cognizant of the intricacies of classroom assessment, materials developers can include appropriate topics and discussions in their materials to help teachers acquire the necessary classroom-based assessment knowledge base.

A number of limitations of this study need to be acknowledged. First, the study probed only language teachers’ self-reported accounts of classroom-based assessment knowledge and practice, without any evidence of their actual practice. Second, teachers may have intentionally rated their assessment knowledge and practice favorably for behavioral and “social desirability” reasons (Coombs et al., 2020). Future studies are therefore invited to observe language teachers’ assessment practices (rather than their perceptions) to obtain a more realistic picture of their classroom-based assessment literacy. Future studies could also implement “focused instruction” (DeLuca & Klinger, 2010) on the use of both formative and summative assessments in classrooms and examine the potential impact of such a course on language teachers’ classroom-based assessment literacy development.