Universitas Psychologica

Print version ISSN 1657-9267

Univ. Psychol. vol.12 no.1 Bogotá Jan./March 2013

Fighting the Illusion of Control: How to Make Use of Cue Competition and Alternative Explanations*

(Spanish title: Combatiendo la ilusión de control: cómo hacer uso de la competición de claves y las explicaciones alternativas)

Miguel A. Vadillo **

University College London, United Kingdom

Helena Matute

Fernando Blanco

Universidad de Deusto, Bilbao, España

* Support for this research was provided by Grant PSI2011-26965 from Ministerio de Ciencia e Innovación and Grant IT363-10 from Departamento de Educación, Universidades e Investigación of the Basque Government.

** University College London, United Kingdom. Correspondence concerning this article should be addressed to either Miguel A. Vadillo, Division of Psychology and Language Sciences, University College London, 26 Bedford Way, London WC1H 0AH, UK, or Helena Matute, Departamento de Fundamentos y Métodos de la Psicología, Universidad de Deusto, Apartado 1, 48080 Bilbao, Spain. Fax: (34) (94) 4139089. E-mails: m.vadillo@ucl.ac.uk or matute@deusto.es

Received: November 10, 2011 | Revised: January 15, 2012 | Accepted: May 21, 2013

To cite this article:

Vadillo, M. A., Matute, H. & Blanco, F. (2013). Fighting the illusion of control: How to make use of cue competition and alternative explanations. Universitas Psychologica, 12(1), 261-270.

Abstract

Misperceptions of causality are at the heart of superstitious thinking and pseudoscience. The main goal of the present work is to show how our knowledge about the mechanisms involved in causal induction can be used to hinder the development of these beliefs. Available evidence shows that people sometimes perceive causal relationships that do not really exist. We suggest that this might be partly due to their failing to take into account alternative factors that might be playing an important causal role. The present experiment shows that providing accurate and difficult-to-ignore information about other candidate causes can be a good strategy for reducing misattributions of causality, such as illusions of control.

Author keywords: Illusion of control, superstition, pseudoscience, discounting, cue competition.

Descriptor keywords: Epistemology of Psychology, Experimental Psychology, Science, Causality

SICI: 1657-9267(201303)12:1<261:FICUCC>2.0.TX;2-A

Introduction

Most articles on causal induction begin with a remark on our outstanding ability to detect causal relations and on the importance of this ability for our survival. Human beings, and probably other animals as well, are indeed superb causality-detection machines. But our highly evolved capacities are not without their failures. The same cognitive abilities that support our otherwise precise causal inferences do not prevent us from often perceiving causal patterns where there is only chance and luck. Not surprisingly, causal misattribution and related issues are becoming a major topic in current psychological research and theory (McKay & Dennett, 2009; Wegner, 2002).

These shortcomings are interesting not only because of what they reveal about the psychological processes underlying the perception of causality, but also because our systematic failures to explain some events correctly are a major source of suffering and superstition. Whenever we feel inclined to think that certain ethnic groups are more prone to crime than others, or that the gods will respond to our dancing around the fire with the long-awaited rain, we are betrayed by the limitations of our causal reasoning abilities. No matter how much science and education have progressed, pseudoscience and superstition still roam freely through our developed societies (Davis, 2009; Dawkins, 2006; Goldacre, 2008; Lilienfeld, Ammirati & Landfield, 2009; Shermer, 1997). Fortunately, the abundant literature on causal learning and reasoning provides critical information about the mechanisms underlying these processes and contains, either explicitly or implicitly, many hints for reducing causal illusions (for reviews, see Gopnik & Schulz, 2007; Matute, Yarritu & Vadillo, 2011; Mitchell, De Houwer & Lovibond, 2009; Shanks, 2010; Sloman, 2005). To date, however, researchers have devoted little time and effort to exploring how their theories and empirical knowledge can be used to reduce superstitious beliefs. One of the main goals of the present work is to show how some of the most consistently replicated effects in causal induction studies can be used to hinder the development of these beliefs and reduce their impact.

One of the most prominent and best-studied characteristics of causal induction is that inferences about the causal status of one event are usually influenced by previous or subsequent knowledge about the causal relations that other events hold with the same outcome. Imagine, for example, that you read a text in which a celebrity advocates a given political party. You will probably attribute the text to the author's political preferences (Jones & Davis, 1965). However, if you are later told that the author was paid to write it, will this not cast doubt on his true political inclinations? Even when we have good reasons to believe that there is a causal relation between a given candidate cause and an event to be explained, our belief is usually revised, or even dramatically altered, if we later learn about other potential causes that might account for the effect. This phenomenon, known as discounting (Einhorn & Hogarth, 1986; Kelley, 1973; Morris & Larrick, 1995) or cue competition (Baker, Mercier, Vallée-Tourangeau, Frank & Pan, 1993; Dickinson, Shanks & Evenden, 1984; Wasserman, 1990) in different research areas, reflects the competitive nature of causal induction. Although discounting and cue competition have received extensive attention in current research on causal induction (e.g., Goedert & Spellman, 2005; Laux, Goedert & Markman, 2010; Van Overwalle & Van Rooy, 2001), to the best of our knowledge no serious attempt has been made to relate these effects to the development of superstitions and to the perception of illusory causal relations. However, as will be shown below, the available evidence suggests that failing to take into account the role of alternative causes of an event is one of the many factors that can give rise to superstitions and causal misattributions.

The illusion of control is one of the most remarkable and puzzling instances of causal misattribution: people often tend to attribute actually uncontrollable events to their own behavior rather than to their real causes or to mere chance (Langer, 1975; Matute, 1995; Ono, 1987; Wright, 1962).

Sometimes people believe that they can control a random event simply because they are more prone to expose themselves to evidence that would confirm this belief than to evidence that would refute it (Nickerson, 1998; Wason, 1960). In other words, they are underexposed to the information that could be used to discount the role of their own behavior in producing these events. For example, students who carry lucky charms to their exams and obtain high grades usually fail to notice that they would have passed anyway, even without the amulet. To obtain that information, these students would have to leave their lucky charms at home for at least some exams, so that they could compare the grades they get with the charm and the grades they get without it. For obvious reasons, however, they are reluctant to do so. Indeed, recent research shows that leaving the charm at home increases anxiety and reduces self-esteem, which, in the end, can hinder performance (Damisch, Stoberock & Mussweiler, 2010). Given that most of their good grades are contiguous with the use of the lucky charm, and that there are few or no instances of good grades without it, it is only natural to conclude (erroneously) that the lucky charm had something to do with the results of the exam.

As this example illustrates, people trying to control an important outcome usually expose themselves to evidence that suggests a positive relationship between their behavior and the desired outcome (i.e., a high number of coincidences between the target response and the outcome). Consequently, they are underexposed to evidence contrary to this hypothesis (i.e., occurrences of the outcome in the absence of the response, which would indicate the presence of alternative causes). Laboratory experiments confirm that this pattern of behavior is an important factor in the emergence and maintenance of the illusion of control. When participants are highly involved in their attempts to control an event and refuse to check what would happen if they did not act, their illusion of control is enhanced (Blanco, Matute & Vadillo, 2011; Matute, 1996). However, when they are forced to refrain from responding on some trials, their illusion is reduced (Hannah & Beneteau, 2009). This might explain why depressed people, who are usually more passive and less motivated to control life events, tend to show weak or no illusions of control (Blanco, Matute & Vadillo, 2009). In fact, the role of activity in the development of illusions of control is a straightforward prediction of some formal models of causal learning (e.g., Rescorla & Wagner, 1972; see Matute, Vadillo, Blanco & Musca, 2007, for a computer simulation): participants who are highly involved in trying to obtain an outcome experience more coincidences between their own actions and the outcome. Therefore, they have fewer opportunities to experience what would happen in the absence of their actions. As a consequence, they develop a stronger illusion of control.
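To make the activity prediction concrete, here is a minimal sketch of the Rescorla-Wagner (1972) rule applied to an uncontrollable outcome. It is not the simulation reported by Matute, Vadillo, Blanco and Musca (2007): the function name, the always-present context cue, the learning rate of 0.1, the 50-trial horizon, and the two response probabilities are illustrative assumptions. To keep the result deterministic, each step applies the expected update over trials with and without a response instead of sampling a trial sequence.

```python
def response_strength(p_response: float, p_outcome: float = 0.5,
                      n_trials: int = 50, rate: float = 0.1) -> float:
    """Associative strength of the response cue after n_trials
    expected-value Rescorla-Wagner updates (hypothetical parameters).

    Cues: a context cue present on every trial and a response cue present
    with probability p_response. The outcome (lambda = 1) occurs with
    probability p_outcome independently of responding, so the programmed
    response-outcome contingency is zero.
    """
    v_context, v_response = 0.0, 0.0
    for _ in range(n_trials):
        # Expected prediction error on trials with and without a response.
        # Because the outcome ignores responding, E[lambda] = p_outcome on both.
        err_with_response = p_outcome - (v_context + v_response)
        err_without_response = p_outcome - v_context
        # Each cue is updated only on the trials where it is present.
        d_context = rate * (p_response * err_with_response
                            + (1 - p_response) * err_without_response)
        d_response = rate * p_response * err_with_response
        v_context += d_context
        v_response += d_response
    return v_response


# A highly active "participant" versus a mostly passive one.
print("active :", round(response_strength(0.8), 3))
print("passive:", round(response_strength(0.2), 3))
# With many more trials the response weight approaches the true value, zero.
print("active, 5000 trials:", round(response_strength(0.8, n_trials=5000), 3))
```

Under these assumptions the more active learner ends the 50 trials with a clearly larger response weight than the passive one, even though responding never changes the outcome probability; the illusion is a transient of learning, not its asymptote.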

In light of this evidence, one might think that the illusion of control could easily be reduced by alerting people to alternative, potential explanations of the events they are trying to control. However, both common experience and experimental findings suggest otherwise. Even when people are alerted to other potential explanations for the apparent success of alternative medicine or charms of any kind, they are still unwilling to reconsider the basis of their beliefs and usually prefer to consult other "experts" who will confirm their illusory beliefs. For example, popular science writers and journalists who warn the general public against pseudoscientists and propose science-based explanations for their claims have been sued in several countries (Gámez, 2007; Sense about Science, 2009). This illustrates how, at least sometimes, our society places more value on the right to hold irrational beliefs than on attempts to discover and teach the truth.

Experimental data gathered in the laboratory show a similar pattern of behavior. For instance, in a recent experiment conducted both in the laboratory and over the Internet (Matute, Vadillo, Vegas & Blanco, 2007), participants were asked to try to control, by pressing the space bar, the onset of a series of (actually uncontrollable) flashes appearing on the computer screen. Half of the participants were explicitly warned that they might have no real control over the flashes. However, this warning made no difference to their illusion of control, as measured by the subjective ratings of control they provided at the end of the experiment.

One factor that may explain why people refuse to consider alternative causes, even when they are informed about their potential role, is that the format in which information about alternative explanations is provided might not be optimal for revising erroneous beliefs. Many researchers have argued that the psychological processes responsible for causal learning might vary depending on the way information is presented. For example, when people are given information about the covariation between a cause and an effect, whether this information is provided in a summary table or experienced directly in a series of trials makes a difference to their ability to detect the cause-effect contingency (Shanks, 1991; Vallée-Tourangeau, Payton & Murphy, 2008; Waldmann & Hagmayer, 2001; Ward & Jenkins, 1965; but see Van Hamme & Wasserman, 1993). Thus, previous failures to reduce the illusion of control by alerting participants to the potential role of alternative causes (e.g., Matute, Vadillo, Vegas et al., 2007) might have occurred because participants were not given direct experience of the presence and absence of the alternative cause while trying to control the target event.

To test the prophylactic effect of discounting on the development of illusions of control, it is important to use a procedure that is known to produce strong illusions. Therefore, in the present experiment, participants were exposed to a standard preparation for the study of the illusion that has been used extensively in previous studies (Blanco et al., 2009; Matute, 1996; Matute, Vadillo, Vegas, et al., 2007; see also Matute, Vadillo & Bárcena, 2007). Participants were instructed to try to turn off some blue and white flashes appearing on the computer screen by using a joystick. These instructions, aimed at framing the task as a skill-based situation (Langer, 1975), are expected to promote the illusion of control. Moreover, this procedure is similar to other experimental tasks that are known to produce strong illusions of control and that have been properly validated in previous research (e.g., Fenton-O'Creevy, Nicholson, Soane & Willman, 2003). For all participants, the termination of the flashes was preprogrammed according to a random sequence and was therefore uncontrollable. In one condition, the flashes were the only stimuli appearing on the screen. In the other condition, however, the offset of the flashes was always preceded by a signal that acted as an alternative cause that might account for their termination. According to our hypothesis, this signal should compete with the participant's response as a potential explanation for the offset of the flashes, thereby reducing the illusion of control relative to participants in the No-Signal condition.

Method

Participants and Apparatus

Twenty students from the University of Deusto volunteered to take part in the experiment. Ten participants were assigned to the Signal condition and 10 to the No-Signal condition. Instructions and stimuli were presented on the screen by means of a computer program, and the participants had to make their responses with the computer keyboard and a joystick. All participants were tested individually.

Procedure and Design

Participants were told that a series of aversive black-and-white flashes would appear on the screen and that their goal was to turn them off by moving the joystick, whose position was not displayed on the screen. They were told that, if they were successful, the flashes would stop after one second; otherwise, they would have to wait 5 seconds for the flashes to stop. They were also told that during the experiment they might see some asterisks appear on the screen and that, if this happened, the annoying flashes would immediately turn off.

After reading the instructions, participants were exposed to a sequence of 50 black-and-white flashes whose durations were fixed in advance and were therefore entirely independent of the participant's behavior. The flashes lasted 1 second on half of the occasions and 5 seconds on the remaining occasions. Given that participants were trying to terminate the flashes, the flashes that lasted just 1 second can presumably be regarded as occurrences of the desired event (flash termination), whereas those lasting 5 seconds were more likely to be perceived as failures to control their offset. Thus, all participants were exposed to a reinforcement rate of about 50%. In addition, for participants in the Signal condition, the end of each flash was always preceded by a set of stars (asterisks) that filled the screen for 0.5 s. These asterisks were not shown to participants in the No-Signal condition.
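The essential feature of this procedure is that the outcome schedule exists before the participant does anything. A minimal sketch of such a noncontingent schedule follows. The function name, the dictionary layout, the random seed, and the reading that the 0.5-s asterisk display ends exactly at flash offset are illustrative assumptions, not details of the software used in the study.

```python
import random


def make_flash_schedule(n_flashes=50, short_s=1.0, long_s=5.0,
                        signal=False, asterisk_lead_s=0.5, seed=None):
    """Return a list of per-flash dicts, fixed before the session starts.

    Half of the flashes are short (the desired outcome) and half long, in
    random order. No argument refers to the participant's input, which is
    what makes the outcome uncontrollable. In the Signal condition each
    flash also gets an (assumed) asterisk onset that precedes its offset
    by asterisk_lead_s seconds.
    """
    rng = random.Random(seed)
    n_short = n_flashes // 2
    durations = [short_s] * n_short + [long_s] * (n_flashes - n_short)
    rng.shuffle(durations)
    schedule = []
    for duration in durations:
        flash = {"duration_s": duration}
        if signal:
            flash["asterisks_onset_s"] = duration - asterisk_lead_s
        schedule.append(flash)
    return schedule


signal_schedule = make_flash_schedule(signal=True, seed=1)
no_signal_schedule = make_flash_schedule(signal=False, seed=1)
n_short = sum(f["duration_s"] == 1.0 for f in signal_schedule)
print(n_short, "short flashes out of", len(signal_schedule))
```

Using the same seed for both conditions gives them an identical outcome sequence, so the only difference between groups is the presence of the asterisks.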

Immediately after this training phase, participants were told that, regardless of whether or not they had seen any asterisks, we needed them to answer a couple of questions. First, they were asked to rate the extent to which the offset of the flashes was influenced by the asterisks and then, below it on the same screen, the extent to which the termination of the flashes depended on their responses. Both questions were answered numerically on a visual scale ranging from 0 to 100. Participants were told that 0 meant that the offset of the flashes did not depend at all on that factor and 100 meant that it depended completely on it.

Results

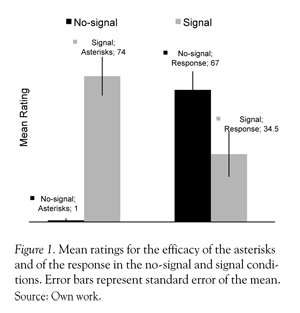

Figure 1 shows participants' mean ratings in both groups (Signal and No-Signal). The left part of the figure shows that, as expected, the perceived relevance of the asterisks was higher for participants who had systematically seen the asterisks preceding the outcome than for participants who had not been exposed to them. The most important result, shown in the right part of the figure, is that this manipulation affected not only the ratings for the asterisks but also the ratings of the efficacy of the response: these were markedly higher when the outcomes could not be predicted by an external signal. In other words, signaling the outcome by means of an alternative cue reduced the illusion of control.

Given that participants had no actual control over the task in either condition, the normatively correct rating of the efficacy of their responses was zero in both groups. However, t-tests show that these ratings were significantly higher than zero in the No-Signal condition, t(9) = 7.10, p < .001, as well as in the Signal condition, t(9) = 3.01, p < .05. Thus, compared with the theoretically appropriate value of zero, both groups showed some illusion of control. Furthermore, additional analyses confirmed that introducing the signal before the outcome significantly reduced the illusion of control: ratings of the causal status of the response were significantly higher in the No-Signal condition than in the Signal condition, t(18) = 2.19, p < .05.
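The degrees of freedom and significance thresholds reported above can be cross-checked against the group sizes in the Method (10 participants per condition). The sketch assumes two-tailed tests, one-sample tests against zero within each group, and a pooled-variance independent-samples test between groups (which is what df = 18 implies for two groups of 10). It recomputes only p values from the published t statistics, since the raw ratings are not available here, and integrates the Student t density with Simpson's rule to stay dependency-free; `scipy.stats.t.sf` would be the usual library route.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t: float, df: int, steps: int = 20_000) -> float:
    """Two-tailed p = 1 - 2 * (area under the density from 0 to |t|),
    with the area computed by composite Simpson's rule."""
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals
    b = abs(t)
    h = b / steps
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


n_per_group = 10
df_one_sample = n_per_group - 1        # ratings vs. 0 within one group
df_two_groups = 2 * n_per_group - 2    # No-Signal vs. Signal, pooled variance

reported = [
    ("No-Signal vs. 0", 7.10, df_one_sample, 0.001),
    ("Signal vs. 0", 3.01, df_one_sample, 0.05),
    ("No-Signal vs. Signal", 2.19, df_two_groups, 0.05),
]
for label, t, df, alpha in reported:
    p = two_tailed_p(t, df)
    print(f"{label}: t({df}) = {t:.2f}, p = {p:.4f}, below {alpha}: {p < alpha}")
```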

Discussion

Discounting and cue competition are at the core of rational models of causal induction. For many of these models, estimating the impact of a given cause on an effect consists precisely in isolating the unique contribution of that cause from the influence of the larger constellation of events that could also be producing that effect (Cheng, 1997; Cheng & Novick, 1992). Of course, our causal induction abilities are far from optimal and do not always match the predictions of these rational models. One reason why we sometimes fail to detect causal relations (or their absence) correctly is that we do not always take into account the potential role of factors other than the target cause whose influence we are assessing. This is especially likely in situations that involve our own behavior, as an agency bias has been detected even in children (Kushnir, Wellman & Gelman, 2009). This can easily give rise to illusions of control.
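As a normative benchmark, the two indices usually associated with the rational models cited here can be computed from a 2 × 2 table of response and outcome counts: the probabilistic contrast ΔP = P(O|R) - P(O|not R) (Cheng & Novick, 1992), and Cheng's (1997) causal power, which rescales ΔP by the base rate of the outcome (generative: ΔP / (1 - P(O|not R)); preventive: -ΔP / P(O|not R)). The function name and all counts below are hypothetical. In the present task the programmed contingency between response and outcome is zero.

```python
def contingency(a: int, b: int, c: int, d: int):
    """Delta-P and Cheng's (1997) causal power from a 2x2 table of counts.

    a: response and outcome        b: response, no outcome
    c: no response, outcome        d: no response, no outcome
    """
    p_o_given_r = a / (a + b)
    p_o_given_not_r = c / (c + d)
    delta_p = p_o_given_r - p_o_given_not_r
    if delta_p >= 0:
        # Generative power; undefined when the outcome always occurs without R.
        power = delta_p / (1 - p_o_given_not_r) if p_o_given_not_r < 1 else float("nan")
    else:
        # Preventive power (p_o_given_not_r > 0 whenever delta_p < 0).
        power = -delta_p / p_o_given_not_r
    return delta_p, power


# Hypothetical active participant over 50 flashes: responds on 40 of them,
# but short flashes are equally likely with or without a response.
print(contingency(20, 20, 5, 5))
# Hypothetical table for a response that genuinely helps.
print(contingency(30, 10, 5, 15))
```

The first table has many response-outcome coincidences (20) yet zero contingency, which is the situation in which an agent who ignores the no-response cells would perceive control.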

Fortunately, the results of the present experiment, together with previous research (e.g., Hannah & Beneteau, 2009; Matute, 1996; Matute et al., 2011), suggest that this tendency to perceive causal connections between our behavior and uncontrollable events can be reduced by drawing people's attention to alternative explanations for those events. For instance, in the experiment conducted by Matute (1996), participants no longer showed an illusion of control when they were asked to refrain from always trying to control the outcome, so that they could see that the outcome was equally likely to occur when they did not respond. Similarly, in the present experiment, the illusion of control was reduced by confronting participants with an alternative potential cause of the outcome on a trial-by-trial basis.

Interestingly, although we found that directly perceiving the occurrence of alternative causes has an impact on our ability to discount the role of our own behavior, previous experiments failed to observe a similar attenuation when participants were simply alerted to the potential role of alternative causes (Matute, Vadillo, Vegas, et al., 2007). Although it is difficult to see how rational models of causal induction might explain this divergence of results (let alone the illusion of control itself), associative models provide an interesting insight into it (see, for example, Dickinson et al., 1984; Shanks & Dickinson, 1987). Most associative models view causal learning as the result of a selective learning process in which alternative predictors of a given outcome (including the participant's response) compete to become associated with it (e.g., Rescorla & Wagner, 1972; Van Hamme & Wasserman, 1994). If several events predict an outcome, those that are more contingent on it will accrue more associative strength. This process results in efficient discounting of events that have little predictive value, despite their occasional pairings with the outcome. In our experiment, this means that introducing a cue (the asterisks) that is highly contingent on the outcome makes it easier for participants to discount the potential role of their responses in producing the outcome. However, this process is assumed to operate only when information is presented on a trial-by-trial basis, and not when it is presented in a summary format or merely described verbally (see Shanks, 1991). Thus, these models might account not only for the susceptibility of the illusion of control to discounting but also for the key role of presentation format in this process.
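The associative account of the signal effect can be sketched with the Rescorla-Wagner rule extended to an extra cue. In this hypothetical abstraction the asterisks are coded as a cue that accompanies every occurrence of the outcome, which is one way to represent the high contingency described above; the always-present context cue, the response probability of 0.8, the outcome probability of 0.5, the learning rate of 0.1, the 50-trial horizon, and the function names are assumptions for illustration, not parameters estimated from the present data. Updates use expected prediction errors over the four trial types, so the result is deterministic.

```python
from itertools import product


def trial_types(p_response: float, p_outcome: float, signal: bool):
    """Enumerate (cues present, lambda, probability) for one hypothetical trial.

    A context cue is always present, the response cue is present with
    probability p_response, the outcome occurs with probability p_outcome
    regardless of responding, and (Signal condition only) an asterisk cue
    accompanies every outcome.
    """
    types = []
    for responded, outcome in product((True, False), repeat=2):
        prob = ((p_response if responded else 1 - p_response)
                * (p_outcome if outcome else 1 - p_outcome))
        cues = {"context"}
        if responded:
            cues.add("response")
        if signal and outcome:
            cues.add("signal")
        types.append((frozenset(cues), 1.0 if outcome else 0.0, prob))
    return types


def expected_rescorla_wagner(types, n_trials: int = 50, rate: float = 0.1):
    """Expected-value Rescorla-Wagner: all cues present on a trial type share
    one prediction error, weighted by that trial type's probability."""
    cues = sorted(set().union(*(c for c, _, _ in types)))
    v = dict.fromkeys(cues, 0.0)
    for _ in range(n_trials):
        delta = dict.fromkeys(cues, 0.0)
        for present, lam, prob in types:
            error = lam - sum(v[c] for c in present)  # summed prediction
            for c in present:
                delta[c] += rate * prob * error
        for c in cues:
            v[c] += delta[c]
    return v


no_signal = expected_rescorla_wagner(trial_types(0.8, 0.5, signal=False))
with_signal = expected_rescorla_wagner(trial_types(0.8, 0.5, signal=True))
print("No-Signal:", {k: round(x, 3) for k, x in no_signal.items()})
print("Signal:   ", {k: round(x, 3) for k, x in with_signal.items()})
```

Because the asterisk cue takes up most of the prediction error on outcome trials, the response cue is left with less to learn, and after the same 50 trials its weight ends lower in the Signal run than in the No-Signal run. The sketch only covers trial-by-trial learning, the situation in which cue competition is assumed to operate.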

Perhaps a good explanation for the role of discounting in the illusion of control can be reached by carefully considering why the illusion of control exists at all (see Matute & Vadillo, 2009). If a rich and accurate representation of the causal relationships around us is so important for our survival, why has evolution not endowed us with a more sophisticated and precise causality-detection device? Error management theory (Haselton, 2007; Haselton & Buss, 2000), originally proposed in the area of social cognition, offers a neat solution to this dilemma. The basis of the theory is that not all cognitive illusions are equally dangerous from an adaptive point of view: in uncertain situations, the cost of failing to perceive an existing pattern (e.g., a predator hidden in the darkness) is sometimes greater than the cost of perceiving a pattern where none exists. This argument can be applied to the illusion of control: most of the time, failing to detect a causal relation between one's behavior and a desired event is worse than erring on the opposite side, that is, believing that one has control over actually uncontrollable events (see Matute, Vadillo, Blanco et al., 2007). Although superstitious behaviors and pseudoscientific thinking can have a dramatic impact on our societies, on many occasions perceiving that one has control over actually uncontrollable events has little or no impact on our individual daily lives, beyond investing time and money in ineffective behaviors and treatments. On the other hand, failing to perceive that one has control over a controllable outcome means losing opportunities to influence the course of events, which can be more important for survival. This, in turn, might explain why positive illusions such as illusions of control, optimistic biases, and superstitions are sometimes related to higher levels of mental health (Alloy & Abramson, 1979; Taylor & Brown, 1988) and better performance (Damisch et al., 2010).

On this view, it is not so strange that people overestimate their causal influence when the evidence for and against this belief is difficult to obtain and weigh. However, as the present experiment shows, when the evidence against these beliefs grows (e.g., when the presence of alternative factors is noticeable), people might eventually detect their lack of control.

Regardless of their theoretical interpretation, these results shed light on the conditions under which illusions of control tend to appear or disappear, and suggest that a good way to reduce superstitious thinking and pseudoscience is to confront people with alternative explanations of the events they are dealing with. In fact, this strategy is already used by popular science writers, who not only challenge commonly held pseudoscientific beliefs but also contrast them with rational explanations for the same phenomena. Our experiment shows that this strategy is not only intuitively appealing but also supported by experimental data.

References

Alloy, L. B. & Abramson, L. Y. (1979). Judgment of contingency in depressed and nondepressed students: Sadder but wiser? Journal of Experimental Psychology: General, 108(4), 441-485. [ Links ]

Baker, A. G., Mercier, P., Vallée-Tourangeau, F., Frank, R. & Pan, M. (1993). Selective associations and causality judgments: Presence of a strong causal factor might reduce judgments of a weaker one. Journal of Experimental Psychology: Learning, Memory, and Cognition, 19(2), 414-432. [ Links ]

Blanco, F., Matute, H. & Vadillo, M. A. (2009). Depressive realism: Wiser or quieter? Psychological Record, 59(4), 551-562. [ Links ]

Blanco, F., Matute, H. & Vadillo, M. A. (2011). Making the uncontrollable seem controllable: The role of action in the illusion of control. Quarterly Journal of Experimental Psychology, 64(7), 1290-1304. [ Links ]

Cheng, P. W. (1997). From covariation to causation: A causal power theory. Psychological Review, 104(2), 367-405. [ Links ]

Cheng, P. W. & Novick, L. R. (1992). Covariation in natural causal induction. Psychological Review, 99(2), 365-382. [ Links ]

Damisch, L., Stoberock, B. & Mussweiler, T. (2010). Keep your fingers crossed! How superstition improves performance. Psychological Science, 21(7), 1014-1020. [ Links ]

Davis, H. (2009). Caveman logic: The persistence of primitive thinking in a modern world. Amherts, NY: Prometheus Books. [ Links ]

Dawkins, R. (2006). The God delusion. London: Bantam Press. [ Links ]

Dickinson, A., Shanks, D. R. & Evenden, J. (1984). Judgement of act-outcome contingency: The role of selective attribution. Quarterly Journal of Experimental Psychology, 36A, 29-50. [ Links ]

Einhorn, H. J. & Hogart, R. M. (1986). Judging probable cause. Psychological Bulletin, 99(1), 3-19. [ Links ]

Fenton-O'Creevy, M., Nicholson, N., Soane, E. & Willman, P. (2003). Trading on illusions: Unrealistic perceptions of control and trading performance. Journal of Occupational and Organizational Psychology, 76(1), 53-68. [ Links ]

Gámez, L. A. (2007, July 27). Benitez contra Gámez: historia de una condena [Blogs. El Correo Digital: Magonia]. Retrieved May 12, 2008, from http://blogs.elcorreodigital.com/magonia/2007/7/27/benitez-contra-gamez-historia-una-condena [ Links ]

Goedert, K. M. & Spellman, B. A. (2005). Nonnormative discounting: There is more to cue interaction effects than controlling for alternative causes. Learning & Behavior, 33(2), 197-210. [ Links ]

Goldacre, B. (2008). Bad science. London: Fourth Estate. [ Links ]

Gopnik, A. & Schulz, L. (Eds.). (2007). Causal learning: Psychology, philosophy, and computation. New York: Oxford University Press. [ Links ]

Hannah, S. D. & Beneteau, J. L. (2009). Just tell me what to do: Bringing back experimenter control in active contingency tasks with the command-performance procedure and finding cue density effects along the way. Canadian Journal of Experimental Psychology, 63(1), 59-73. [ Links ]

Haselton, M. G. (2007). Error management theory. In R. F. Baumeister & K. D. Vohs (Eds.), Encyclopedia of social psychology (Vol. 1, pp. 311-312). Thousand Oaks, CA: Sage. [ Links ]

Haselton, M. G. & Buss, D. M. (2000). Error management theory: A new perspective on biases in cross-sex mind reading. Journal of Personality and Social Psychology, 78(1), 81-91. [ Links ]

Jones, E. E. & Davis, K. E. (1965). From acts to dispositions: The attribution process in person perception. In L. Berkowitz (Ed.), Advances in experimental social psychology (Vol. 2, pp. 219-266). New York: Academic Press. [ Links ]

Kelley, H. H. (1973). The process of causal attribution. American Psychologist, 28(2), 107-128. [ Links ]

Kushnir, T., Wellman, H. M. & Gelman, S. A. (2009). A self-agency bias in preschoolers causal inferences. Developmental Psychology, 45(2), 597-603. [ Links ]

Langer, E. J. (1975). The illusion of control. Journal of Personality and Social Psychology, 32(2), 311-328. [ Links ]

Laux, J. P., Goedert, K. M. & Markman, A. B. (2010). Causal discounting in the presence of a stronger cue is due to bias. Psychonomic Bulletin & Review, 17(2), 213-218. [ Links ]

Lilienfeld, S. C., Ammirati, R. & Landfield, K. (2009). Giving debiasing away: Can psychological research on correcting cognitive errors promote human welfare? Perspectives on Psychological Science, 4(4), 390-398. [ Links ]

Matute, H. (1995). Human reactions to uncontrollable outcomes: Further evidence for superstitions rather than helplessness. Quarterly Journal of Experimental Psychology, 48B, 142-157. [ Links ]

Matute, H. (1996). Illusion of control: Detecting response-outcome independence in analytic but not in naturalistic conditions. Psychological Science, 7(5), 289-293. [ Links ]

Matute, H. & Vadillo, M. A. (2009). The Proust effect and the evolution of a dual learning system. Behavioral & Brain Sciences, 32(2), 215-216. [ Links ]

Matute, H., Vadillo, M. A. & Bárcena, R. (2007). Web-based experiment control software for research and teaching on human learning. Behavior Research Methods, 39(3), 689-693. [ Links ]

Matute, H., Vadillo, M. A., Blanco, F. & Musca, S. C. (2007). Either greedy or well informed: The reward maximization-unbiased evaluation tradeoff. In S. Vosniadou, D. Kayser & A. Protopapas (Eds), Proceedings of EuroCogSci07: The European Cognitive Science Conference (pp. 341-346). Hove, UK: Erlbaum. [ Links ]

Matute, H., Vadillo, M. A., Vegas, S. & Blanco, F. (2007). Illusion of control in Internet users and college students. CyberPsychology & Behavior, 10(2), 176-181.

Matute, H., Yarritu, I. & Vadillo, M. A. (2011). Illusions of causality at the heart of pseudoscience. British Journal of Psychology, 102(3), 392-405.

McKay, R. T. & Dennett, D. C. (2009). The evolution of misbelief. Behavioral and Brain Sciences, 32(6), 493-561.

Mitchell, C. J., De Houwer, J. & Lovibond, P. F. (2009). The propositional nature of human associative learning. Behavioral and Brain Sciences, 32(2), 183-246.

Morris, M. W. & Larrick, R. (1995). When one cause casts doubt on another: A normative analysis of discounting in causal attribution. Psychological Review, 102(2), 331-355.

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175-220.

Ono, K. (1987). Superstitious behavior in humans. Journal of the Experimental Analysis of Behavior, 47(3), 261-271.

Rescorla, R. A. & Wagner, A. R. (1972). A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In A. H. Black & W. F. Prokasy (Eds.), Classical conditioning II: Current research and theory (pp. 64-99). New York: Appleton-Century-Crofts.

Sense about Science. (2009). The law has no place in scientific disputes. Retrieved June 10, 2009, from http://www.senseaboutscience.org.uk/index.php/site/project/334

Shanks, D. R. (1991). On similarities between causal judgments in experienced and described situations. Psychological Science, 2(5), 341-350.

Shanks, D. R. (2010). Learning: From association to cognition. Annual Review of Psychology, 61, 273-301.

Shanks, D. R. & Dickinson, A. (1987). Associative accounts of causality judgment. In G. H. Bower (Ed.), The psychology of learning and motivation (Vol. 21, pp. 229-261). San Diego, CA: Academic Press.

Shermer, M. (1997). Why people believe weird things: Pseudoscience, superstition and other confusions of our time. New York: W. H. Freeman & Co.

Sloman, S. A. (2005). Causal models: How we think about the world and its alternatives. New York: Oxford University Press.

Taylor, S. E. & Brown, J. D. (1988). Illusion and well-being: A social psychological perspective on mental health. Psychological Bulletin, 103(2), 193-210.

Vallée-Tourangeau, F., Payton, T. & Murphy, R. A. (2008). The impact of presentation format on causal inferences. European Journal of Cognitive Psychology, 20(1), 177-194.

Van Hamme, L. J. & Wasserman, E. A. (1993). Cue competition in causality judgments: The role of manner of information presentation. Bulletin of the Psychonomic Society, 31(5), 457-460.

Van Hamme, L. J. & Wasserman, E. A. (1994). Cue competition in causality judgments: The role of nonpresentation of compound stimulus elements. Learning and Motivation, 25(2), 127-151.

Van Overwalle, F. & Van Rooy, D. (2001). How one cause discounts or augments another: A connectionist account of causal competition. Personality and Social Psychology Bulletin, 27(12), 1613-1626.

Waldmann, M. R. & Hagmayer, Y. (2001). Estimating causal strength: The role of structural knowledge and processing effort. Cognition, 82(1), 27-58.

Ward, W. C. & Jenkins, H. M. (1965). The display of information and the judgment of contingency. Canadian Journal of Psychology, 19(3), 231-241.

Wason, P. C. (1960). On the failure to eliminate hypotheses in a conceptual task. Quarterly Journal of Experimental Psychology, 12(3), 129-140.

Wasserman, E. A. (1990). Attribution of causality to common and distinctive elements of compound stimuli. Psychological Science, 1(5), 298-302.

Wegner, D. M. (2002). The illusion of conscious will. Cambridge, MA: MIT Press.

Wright, J. C. (1962). Consistency and complexity of response sequences as a function of schedules of noncontingency reward. Journal of Experimental Psychology, 63(6), 601-609.