Universitas Psychologica

Print version ISSN 1657-9267

Univ. Psychol. vol.12 no.spe5 Bogotá Dec. 2013

On the activation of sensorimotor systems during the processing of emotionally-laden stimuli*

Sobre la activación de sistemas sensorimotores durante el procesamiento de estímulos emocionalmente cargados

Fernando Marmolejo-Ramos**

John Dunn***

University of Adelaide, Australia

*This work is an extended article version of F. M-R's PhD thesis supervised by J. D. The authors thank Daniel Casasanto and Ulrich Ansorge for reviewing the thesis version of this article and for their valuable comments.

**Correspondence concerning this article should be addressed to Fernando Marmolejo-Ramos, School of Psychology, Faculty of Health Sciences, University of Adelaide, Adelaide, S. A., 5005, Australia. Email: fernando.marmolejoramos@adelaide.edu.au, web page: http://sites.google.com/site/fernandomarmolejoramos/

***University of Adelaide, Australia. School of Psychology, Faculty of Health Sciences.

Received: June 1, 2012 | Revised: August 1, 2012 | Accepted: August 20, 2012

To cite this article: Marmolejo-Ramos, F., & Dunn, J. (2013). On the activation of sensorimotor systems during the processing of emotionally-laden stimuli. Universitas Psychologica, 12 (5), 1515-1546. doi:10.11144/Javeriana.UPSY12-5.assp

Abstract

A series of experiments was devised to test the idea that sensorimotor systems activate during the processing of emotionally laden stimuli. In Experiments 1 and 2, participants were asked to judge the pleasantness of emotionally laden sentences while holding a pen in the mouth. Experiments 3 and 4 were similar to the previous experiments, but the experimental materials were emotionally laden images. In Experiments 5 and 6, the same bodily manipulation used throughout the previous experiments was kept while participants judged facial expressions. The first pair of experiments replicated findings suggesting that sensorimotor systems are activated during the processing of emotionally laden language. However, follow-up experiments suggested that dual activation of both perceptual and motor systems is not always necessary. For the particular case of emotionally laden stimuli, results suggested that the perceptual system seems to drive the processing. It is also shown that a high resonance between sensorimotor properties afforded by the stimuli and the sensorimotor systems activated in the cogniser elicits emotional states. The results invite a review of radical versions of embodiment accounts and instead support a graded-embodiment view.

Key words authors: Graded-embodied theory, emotions, images, faces, language comprehension.

Key words plus: Cognitive Science, Cognition, Language.

Resumen

Una serie de experimentos fueron diseñados para determinar si sistemas sensoriomotores se activan durante el procesamiento de estímulos con contenido emocional. En los experimentos 1 y 2, los participantes juzgaron cuan emocionales eran ciertas frases con contenido emocional mientras sostenían un lápiz en la boca. Los experimentos 3 y 4 fueron similares a los anteriores con la diferencia de que los materiales experimentales fueron imágenes con contenido emocional. En los experimentos 5 y 6 la misma manipulación facial fue usada mientras los participantes juzgaban expresiones faciales. El primer par de experimentos replicó estudios anteriores demostrando que sistemas sensoriomotores se activan durante el procesamiento de lenguaje con contenido emocional. Sin embargo, los experimentos subsecuentes sugirieron que la activación de sistemas perceptuales y motores no siempre son necesarios. Para el caso específico de estímulos con contenido emocional, los resultados sugirieron que el sistema perceptual está a cargo del procesamiento. También se argumenta que una resonancia alta entre los sistemas sensoriomotores asociados a los estímulos y los sistemas sensoriomotores activados en el participante, conllevan a la elicitación de estados emocionales. Los resultados invitan entonces a revisar versiones radicales de las teorías de la cognición corporeizada y en cambio sugieren adoptar versiones en las que existen grados de corporeidad.

Palabras clave autores: teoría de grados de corporeidad, emociones, imágenes, caras, comprensión del lenguaje.

Palabras clave descriptores: Ciencia Cognitiva, Cognición, Lenguaje.

Introduction

The embodied cognition theory argues that the processing of language requires the activation of brain networks associated with the referents of the linguistic stream (see Barsalou, 1999; 2008; Glenberg, 1997; Glenberg & Gallese, 2012). In particular, it is argued that perceptual and motor systems in the brain are activated whenever perceptual and motor features occur in the linguistic stream. Thus, if someone reads or hears the word "kick", brain motor areas involved in the implied action of kicking are activated. Indeed, in the case of larger linguistic units, people can also represent other dimensions like space and emotions. For example, in a sentence like "Messi kicked the football with all of his passion", the reader could not only activate the motor areas associated with the action of kicking, but could also infer that the striker was in a rather positive emotional state that led him to kick the football so hard that it travelled a long distance.

The embodied cognition theory further argues that the processing of not only concrete concepts but also abstract concepts entails the activation of perceptual and motor systems (e.g., Niedenthal, Barsalou, Winkielman, Krauth-Gruber, & Ric, 2005; Wilson-Mendenhall, Barrett, Simmons, & Barsalou, 2011). In this article, this latter idea is evaluated. In particular, the experiments reported herein aim to examine whether the processing of emotional stimuli always requires the activation of both perceptual and motor systems.

Empirical evidence supporting an embodied view on the processing of concrete and abstract concepts

Processing of concrete concepts

There is mounting evidence suggesting that the processing of concepts entails the activation of sensorimotor properties. For the particular case of language processing, these results suggest that there is an activation of perceptual and motor properties during the processing of the linguistic input (see Hauk, Johnsrude, & Pulvermüller, 2004; Zwaan, Madden, Yaxley, & Aveyard, 2004). For instance, Pecher, Zeelenberg, and Barsalou (2003) demonstrated that perceptual representations are associated with language comprehension. In their study, switching from one modality to another incurred a switching cost, just as has been shown in perceptual processing tasks. Participants were faster to verify properties of concepts in a given perceptual modality if it was preceded by a trial in which the same perceptual modality had been verified. For example, participants were faster to verify BLENDER-loud (auditory modality) if it was preceded by LEAVES-rustling (auditory modality) rather than CRANBERRIES-tart (taste modality). This study suggests that language comprehension is grounded in the perceptual processing system, similar to the sensorimotor phenomena that have been shown for purely perceptual tasks. Similar studies suggest that people routinely activate perceptual representations of objects described in short sentences (Zwaan, Stanfield, & Yaxley, 2002; Zwaan et al., 2004).

Recent experimental tasks implement methodologies that give more detailed insight into how embodied knowledge is used and what its contents are. The use of the masked priming paradigm is particularly interesting. Such experimental paradigms have enabled researchers to determine that the retrieval of sensorimotor knowledge occurs in an automatic fashion and does not depend solely on strategic retrieval processes (e.g., Ansorge, Kiefer, Khalid, Grassl, & Köning, 2010; Finkbeiner, Song, Nakayama, & Caramazza, 2008). In addition, variations of the priming paradigm task, like the cross-modal priming task, have provided information as to the dependency of knowledge construction on perceptual and motor systems (e.g., Brunyé, Ditman, Mahoney, Walters, & Taylor, 2010). Neuroscientific evidence has also shown that embodied knowledge is fine-grained, e.g., "pushing the piano" recruits different brain areas from "pushing the chair" (see Moody & Gennari, 2010).

Finally, there is evidence demonstrating that the influence between sensorimotor processes and language processing is bidirectional. That is, listening to sentences about towards-the-body movements facilitates responses in which a congruent motor movement is performed (e.g., Glenberg & Kaschak, 2002). Also, motor actions that are not congruent with incoming linguistic input impair its comprehension (e.g., Rueschemeyer, Lindemann, van Rooj, van Dam, & Bekkering, 2010). A commonality among all these studies is that they use experimental stimuli that refer to concrete entities, which have a straightforward perceptual and motor link, e.g., listening to a sentence about someone pushing a piano can evoke the image of a piano and a particular motor program (see Glenberg & Kaschak, 2002, for the use of abstract sentences).

However, it has not been clearly determined whether the processing of abstract concepts also requires the activation of perceptual and motor systems. That is, while concrete concepts refer to physical entities with defined spatial boundaries and perceivable perceptual and motor attributes, abstract concepts refer to entities with indeterminate spatial boundaries and unperceivable sensorimotor properties (see Wiemer-Hastings & Xu, 2005). Think of the word "justice"; it might evoke images popularised by the media such as Themis, the lady justice, armed with a sword and a scale, or it might even evoke any of these three entities (another possible perceptual correlate could be a gavel). However, such a concept might have only perceptual correlates and hardly any motor correlates (unless it is paired with other concepts that might entail not only perceptual but also motor actions, e.g., "justice hit him with full force"). Thus, it is open to question whether (all) abstract concepts require the activation of sensorimotor systems and, more importantly, whether such activation is compulsory or not. It could be conceived that the processing of abstract concepts might require the activation of perceptual systems only, while the activation of motor systems is done vicariously or simply by-passed. In addition, it could be entertained that abstract concepts can have perceptual and motor properties via metaphorical associations, e.g., "The sword of justice has no scabbard". Such analogical linkage between abstract concepts and concrete concepts could be one of the ways in which abstract concepts are grounded in sensorimotor experience. The next section reviews evidence in support of the idea that abstract concepts can have sensorimotor properties. Also, a non-radical embodiment view is presented along with a cognitive model of how abstract concepts can gain sensorimotor properties.

Processing of abstract concepts

Abstract concepts are a challenge for the embodied framework because the framework claims that every concept is grounded in sensorimotor properties, and it is not clear that this holds for abstract concepts. The distinction between concrete and abstract concepts is supported by empirical data, which demonstrate that abstract and concrete concepts differ in recall and comprehension. For example, behavioural (Schwanenflugel, 1991) and brain (Sabsevitz, Medler, Seidenberg, & Binder, 2005) studies have demonstrated that processing times are longer for abstract than for concrete concepts; this has been examined by using naming and lexical decision tasks both for words and sentences. Also, such effects seem to occur regardless of the language under study. For instance, Brouillet, Heurley, Martin, and Brouillet (2010, Experiment 1) had French-speaking participants respond "yes to words" and "no to non-words" by pushing or pulling a custom-made lever. The researchers found that average response times for "yes" responses to concrete words were shorter than those for "yes" responses to abstract words. This study confirms the idea that concrete concepts are accessed faster than abstract concepts and that this seems to be the case also in languages other than English.

Note, however, that recent studies have shown that when words' imageability and context availability are partialled out, abstract words are processed faster than concrete words. In addition, statistical word analyses further suggest that abstract words are more emotionally laden than concrete words. These findings suggest that differences in the processing of abstract and concrete words rely on the level of linguistic, sensorimotor, and affective information they depend on. Thus, while concrete concepts seem to rely strongly on sensorimotor properties, abstract concepts rely more on affective associations, but both concrete and abstract words rely on linguistic properties (see Kousta, Vigliocco, Vinson, Andrews, & del Campo, 2011).

If abstract concepts do not have any perceptible referents, then they cannot be explained by the embodied framework, since, as mentioned above, this framework is based on the linkage between concepts and sensorimotor properties. Nevertheless, it could be entertained that if abstract concepts could be linked to closely and/or potentially related concrete concepts, the processing difference between abstract and concrete concepts could be overcome. Research on differences between abstract and concrete concepts suggests that this linkage is possible. Wiemer-Hastings and Xu (2005) compared the content of 18 abstract and 18 concrete concepts. Participants were asked to generate characteristics (i.e., intrinsic properties) of the concepts or their relevant context (i.e., context properties). Intrinsic properties are aspects that characterize a concept, whereas context properties refer to aspects of a situation that always occur with the concept. Quantitatively speaking, the researchers found that participants generated fewer intrinsic properties for abstract than for concrete concepts, whereas they generated more context properties, especially those related to subjective experience (like mental and affective states), for abstract than for concrete concepts. Qualitatively speaking, abstract concepts were associated with mental and affective states, had fewer intrinsic properties than concrete concepts, and were more related to context properties (e.g., to other concepts) than to intrinsic properties.

The latter finding is relevant to the idea that abstract concepts might have some sensorimotor properties. Wiemer-Hastings and Xu (2005) argue that participants might have related abstract concepts to other concepts because of a cognitive parsimony whereby very complex abstract concepts are represented by less complex ones. This explanation suggests that there could be a "grounding level" along which concepts range from the highly concrete to the highly abstract, and where extremely abstract concepts could be linked to related concepts that have some sort of sensorimotor properties. In other words, the comprehension of an abstract concept might involve adding other related concepts that narrow down to concepts with a higher level of concreteness. Wiemer-Hastings and Xu (2005) show as an example how the concept of emancipation can be described as oppression, then as liberty, and finally as liberation, which might have a visual referent (e.g., a person freeing himself from his chains).

Note, however, that there is evidence suggesting that the processing of abstract concepts entails the direct activation of sensorimotor areas; in particular, that processing abstract language affects motor systems. Glenberg et al. (2008) had participants judge the sensibility of sentences implying movement towards the reader, movement away from the reader, or no movement. Within each type of sentence, half of the sentences referred to concrete objects and the other half to abstract concepts (e.g., sensible + concrete object + toward direction = "Joe hands the book to you", sensible + abstract object + away direction = "you dedicate the song to John", nonsense + abstract + no transfer = "Sam digests democracy with you"). Half of the participants judged sentences as "sensible" by pressing a button located further away from their body; the other half did so by pressing a button closer to their body. The results suggested an action-compatibility effect in which "toward" sentences were judged faster when participants performed a "toward-the-body" response movement than when the required movement was away from their bodies. More importantly, this effect also occurred for the case of abstract sentences, i.e., the concreteness factor was not statistically significant (see also Glenberg, Sato, & Cattaneo, 2008). A follow-up transcranial magnetic stimulation (TMS) experiment suggested that motor evoked potentials were higher for transfer than for non-transfer sentences. In addition, the TMS study showed that motor evoked potentials were similar for both concrete and abstract sentences (Experiment 2). These results led the researchers to argue that "the motor system is modulated during the comprehension of both concrete and abstract language" (Glenberg et al., 2008, p. 915).

A graded-embodiment view on the processing of concepts

Most experiments reported here implicitly assume that embodiment always occurs and that the processing of both concrete and abstract concepts entails sensorimotor properties. However, such a radical embodiment view has started to be challenged after critical review of some of the data, particularly neuroscientific data, obtained thus far. For instance, the case of apraxia poses a challenge for the predictions made by the embodiment theory. Apraxia is characterised by loss of the ability to execute well-known movements despite having the physical ability to perform the movement, e.g., a patient is presented with a hammer and cannot perform the canonical action it affords, despite being physically able to do so. In the particular case of ideomotor apraxia, the patient cannot perform the action associated with the object, while he is able to name the object and even recognise pantomimes associated with the object (e.g., hammering a nail). A radical embodiment view would suggest that impairment in motor processes would affect recognition or naming of objects, but this scenario does not occur in the case of apraxia, wherein object recognition and recognition of related actions remain mostly intact despite the patient being unable to perform the action (Mahon & Caramazza, 2008).

Evidence such as that from apraxic patients suggests that other processes might be at play when embodiment does not occur. In particular, Mahon and Caramazza (2008) argue that the precise mechanisms supporting embodiment have not been clarified, which leaves room to think that amodal processes might also take place, particularly in the interaction between linguistic information and sensorimotor representations. That is, sensorimotor representations can be encoded in linguistic forms that serve as a symbolic bypass to index embodiment (see Campanella & Shallice, 2011; Gallese, 2009; Louwerse, 2008). Thus, it can be suggested that an amodal symbol processing system can occur, as it might represent a recent system that evolved to cope with new forms of cognition and communication (Marmolejo-Ramos, Elosúa de Juan, Gygax, Madden, & Mosquera, 2009). In addition, Mahon and Caramazza (2008) suggested that there should be a middle ground between the embodiment and the disembodiment of concepts, and that it is determined by the interaction between perceptual and motor systems. This new perspective on embodiment then focuses more on how perceptual and motor systems interact for the formation of concepts, rather than on demonstrating the effects of perception on the motor system and vice versa (Mahon, 2008).

Chatterjee (2010), after critically reviewing recent neuroscientific evidence, offers a complementary view that suggests that instead of determining whether embodiment occurs or not, it is better to determine levels of embodiment, i.e., graded grounding. The experiments reviewed thus far suggest that concrete concepts have strong sensorimotor properties; thus a graded grounding view would suggest that abstract concepts can gain sensorimotor properties by relying on their potential association with related concrete concepts (see also Wiemer-Hastings & Xu, 2005).

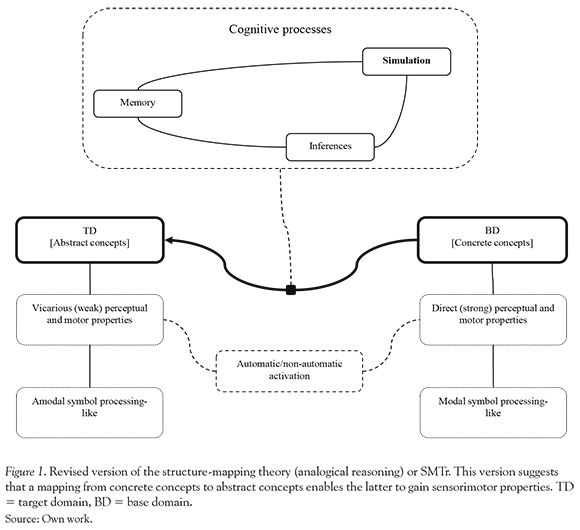

A revised version of the structure mapping theory as graded-embodiment framework

The structure mapping theory (SMT) (Gentner, 1983) presents a potential explanation of the relationship between abstract and concrete concepts. This theory proposes that knowledge about a base domain (BD) is mapped onto a target domain (TD) via analogical reasoning. The target domain represents objects of any kind that need to be explained and for which few properties are available, whereas the base domain is the source that enables explanation, given that its objects have several properties. Note that objects can be concepts or any other entity. Given that the discussion thus far has referred to the processing of abstract and concrete concepts from an embodied view, the term concept is preferred over that of object. Additionally, the term concept encapsulates what is nowadays understood as knowledge construction mapped onto sensorimotor systems, i.e., concepts can have sensorimotor properties (see Arbib, 2008).

In the original account of the SMT, knowledge is understood as a network of nodes and predicates, i.e., an amodal perspective. In the present re-interpretation, knowledge is assumed to have gradations in sensorimotor properties (i.e., from less sensorimotorness to more sensorimotorness) (à la Chatterjee, 2010). The mapping from BD to TD is determined by the attributes and relations that concepts have. In the original SMT it is argued that relations between concepts should be preserved, whereas their attributes should be disregarded. The reason for this is that a true analogy consists of just a few attributes and many relations (e.g., "the atom is like our solar system"). When there are many attributes and relations, the result is a literal similarity rather than an analogy (e.g., "the K5 solar system is like our solar system"). And when there are few attributes and relations, an anomaly emerges (e.g., "coffee is like the solar system") (Gentner, 1983).
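The three cases just described (analogy, literal similarity, anomaly) can be illustrated with a toy sketch. It is only an illustration under stated assumptions and not Gentner's (1983) formal model: concepts are reduced to hand-written sets of attribute and relation labels, "few" versus "many" shared features is approximated by a Jaccard overlap compared against an arbitrary threshold of 0.5, and all feature labels, the `Concept` class, and the `classify_mapping` function are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    """A concept reduced to two sets of labels (illustrative only)."""
    name: str
    attributes: frozenset  # properties of the objects, e.g. "hot star"
    relations: frozenset   # links between objects, e.g. "small orbits large"


def overlap(a: frozenset, b: frozenset) -> float:
    """Jaccard overlap between two label sets (an assumed similarity measure)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify_mapping(base: Concept, target: Concept, threshold: float = 0.5) -> str:
    """Classify a base-to-target comparison by shared attributes and relations.

    The threshold separating "few" from "many" is an arbitrary assumption.
    """
    many_attributes = overlap(base.attributes, target.attributes) >= threshold
    many_relations = overlap(base.relations, target.relations) >= threshold
    if many_relations and not many_attributes:
        return "analogy"
    if many_relations and many_attributes:
        return "literal similarity"
    if not many_relations and not many_attributes:
        return "anomaly"
    # Many attributes but few relations is not one of the three cases in the text.
    return "unclassified"


# Invented feature labels for the examples mentioned in the text.
orbit_relations = frozenset({"small orbits large", "centre attracts satellites"})
solar_system = Concept(
    "our solar system",
    frozenset({"hot star", "massive star", "yellow star"}),
    orbit_relations,
)
atom = Concept("atom", frozenset({"tiny", "charged"}), orbit_relations)
k5_system = Concept(
    "K5 solar system",
    frozenset({"hot star", "massive star", "orange star"}),
    orbit_relations,
)
coffee = Concept("coffee", frozenset({"liquid", "brown"}), frozenset({"dissolves sugar"}))

for target in (atom, k5_system, coffee):
    print(target.name, "->", classify_mapping(solar_system, target))
```

With these invented labels, the atom comparison shares relations but no attributes, the K5 comparison shares both, and the coffee comparison shares neither, which matches the three cases in the text. Different labels or a different threshold would change the outcome, which is in line with the more flexible tuning of attributes and relations that the revised model proposes.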

The present proposal suggests a more flexible trade-off between attributes and relations in which they are simply tuned, maximised and minimised, until an understanding of the TD occurs. Thus, analogical reasoning basically consists in understanding one situation in terms of another (see Gentner, 1999). In addition, the present model suggests that the mapping process depends on memory systems, inference construction, and simulation (see Marmolejo-Ramos, 2007). These cognitive processes have been suggested as being essential in the construction of knowledge, e.g., visual processing in relation to language (see Mishra & Marmolejo-Ramos, 2010). Thus, knowledge is stored in memory systems, coordinated and re-arranged via inferences, and manipulated dynamically to anticipate actions, perceptions, and events via simulation (see Figure 1).

The revised SMT proposed here (SMTr) can offer some insight into how people abstract (see Chatterjee, 2010). Mapping from concrete (or BD) to abstract (or TD) concepts facilitates the comprehension of abstract concepts in that they gain sensorimotor properties (e.g., Wilson & Gibbs, 2007) borrowed from related concrete concepts. That is, abstract concepts gain more attributes and relations that are gradually linked to sensorimotor properties that belong to more grounded concepts. However, abstraction would consist in mapping from concrete to abstract concepts until concepts' attributes and relations gradually start to rely less on sensorimotor properties. Indeed, empirical evidence suggests that people rely on surface properties of objects and their relations when they transfer information across domains (Rein & Markman, 2010). In a series of experiments, Rein and Markman (2010) had participants learn various visual patterns. After the learning phase, participants were presented with novel configurations of the same patterns (in addition to other visual stimuli similar to the visual patterns originally presented). The results showed slower identification times for novel configurations than for the already learned visual patterns. The authors argue that such results indicate that relational knowledge across domains can be influenced by concrete objects' properties. Furthermore, in transferring information from one domain to another, people are not only disentangling some of the perceptual information from the relations, but are also modifying the relational structures themselves¹.

In terms of the embodied theory, those results could suggest that in the transfer of information from concrete concepts to abstract concepts some of the concrete concepts' perceptual and motor properties can be attached to the abstract concepts with which they are associated. Thus, abstraction processes seem to rely initially on at least some perceptual similarities until a gradual detachment from such similarities occurs. It is also conceivable that, since abstract concepts seem to rely more on perceptual properties than on motor properties, the latter have a rather weak association with abstract concepts.

Under this re-conceptualization of embodiment it would be possible to entertain that some motor simulations occur in brain areas adjacent to the motor system in order to deal with abstract concepts (Mahon, 2008). Thus, the use of primary sensorimotor areas seems to be determined by the perceptual and motor similarity between the actions implied by the stimuli being processed and the actual action performed. Abstract concepts might entail the activation of secondary sensorimotor areas, and less possibly primary motor areas, through the relationships established between these concepts and the associated concrete concepts. This is where structure mapping plays a central role, by enabling the establishment of relationships between concepts chiefly via analogical processes and simulation. However, the implied action goals and meaning (Jacob & Jeannerod, 2005), contextual cues (Ocampo & Kritikos, 2010), and the stimuli used in experimental tasks (Raposo, Moss, Stamatakis, & Tyler, 2009) can influence whether primary and secondary sensorimotor areas are necessarily and automatically activated when processing abstract and concrete concepts. For example, Raposo et al. (2009) found that verbs that implied arm or leg action elicited activation in brain motor regions when they were presented in isolation (e.g., "grab") or in literal sentences (e.g., "the fruit cake was the last one so Claire grabbed it") (see also Hauk et al., 2004). However, when action verbs were presented in idiomatic sentences (e.g., "the job offer was a great chance so Claire grabbed it") there was activation in fronto-temporal regions but no activation of motor or premotor areas occurred. These results support the idea that even concrete concepts, e.g., action verbs, do not necessarily and automatically entail sensorimotor properties and that not only modal representations are at play (see also Arbib, 2008, for a discussion against radical embodiment).

The processing of emotions as explained by the embodied framework

The revised embodied framework presented above indicates that abstract concepts can gain sensorimotor properties via their association with related concrete concepts, and that knowledge about concrete concepts can be grounded in actual physical interaction with the world. Emotion labels are considered abstract concepts and as such they should also entail some sensorimotor properties. Emotion words are linguistic markers used socially to refer to emotional states. Thus, the study of emotions as psychological states could help to understand what the processing of emotion words entails.

Evidence supporting the link between emotions and sensorimotor properties. A social embodiment approach

Empirical evidence suggests that emotional states seem to have a link to sensorimotor properties and that emotion words are derived from emotional states (Clarke, Bradshaw, Field, Hampson, & Rose, 2005; Pecher, Zeelenberg, & Barsalou, 2003; Vermeulen, Niedenthal, & Luminet, 2007). But, do emotion concepts have links to sensorimotor properties, as emotional states do? Emotions are considered a relevant aspect in social behaviour, that is, people monitor others' emotional states in order to communicate effectively. As a result, emotions have a privileged status in the content of any social interaction. In a review of embodied theories of emotion understanding, Niedenthal et al. (2005) argued for an embodied approach to ground emotions in perception. According to these investigators, social information processing involves embodiment, where embodiment implies actual bodily states (on-line cognition) and simulation of experience (off-line cognition) in the brain's modality-specific systems for perception, action, and introspection. On this basis, they recast the embodied framework to explain social information processing.

The revised embodied framework proposed by Niedenthal et al. (2005) provides neurological and psychological foundations regarding social information processing in on-line and off-line cognition. From a neurological perspective, their framework pinpoints neuroscientific models that account for emotional comprehension. From a psychological perspective, their framework proposes that the comprehension of social information requires the simulation of modality-specific states. That is, simulation is the process by which brain areas in charge of processing specific information, such as concepts, activate without any input from the original stimulus. Note, however, that emotion concepts do not necessarily entail a defined set of particular social situations that differentiate them. For example, Elosúa de Juan and González Lara (1989) found that emotion concepts such as "happiness", "sadness", and "surprise" have representative verbal contexts, whereas emotion concepts such as "fear", "disgust", and "anger" do not. This finding could indicate that whereas emotions such as happiness and sadness seem to have a clear set of descriptors, other emotion concepts might have rather ambiguous descriptors since they can be associated with several social situations and therefore with various perceptual and motor properties.

The embodied framework assumes that the comprehension of language involves the activation of specific brain systems usually employed when actual bodily, perceptual, and introspective activities are being performed. For the case of emotions, then, if people are required to simulate bodily states that are characteristic of a particular emotional state, say to smile, brain areas in charge of processing that emotional state will activate. Because a whole network of neuronal connections will be congruent with the sensorimotor state being elicited, it is expected that congruent incoming information will be processed faster than incongruent information. In other words, the induced bodily state will elicit, via neuronal connections, a particular cognitive state that is congruent with the valence of the bodily state.

Emotion elicitation via bodily manipulations

Since the pioneering works of William James (1884) and Charles Darwin (1872), it has been suggested that the expression of emotions entails bodily states, e.g., clenched fists as a gesture of distress or a broad smile as a demonstration of happiness. This idea has received empirical support from psychology. For example, experiments in social psychology suggest that facial disposition affects emotional experience (e.g., Buck, 1980; Strack, Martin, & Stepper, 1988; Niedenthal, 2007) and neuroscientific work proposes that the contraction of hand muscles, as in clenching fists, is related to negative emotions (e.g., Schiff & Lamon, 1994). In one such study, Rhodewalt and Comer (1979) had participants write short essays on some selected topics while sustaining a smile or a frown for around five minutes. For one of the analyses, the researchers used a standardised questionnaire to measure mood changes and found that participants in the smile condition experienced more positive moods than negative ones, while participants in the frown condition showed the opposite pattern.

Also, bodily states in association with emotional states assist in the encoding and retrieval of memories. Thus, it is argued that body dispositions facilitate the recall of autobiographical memories when the valence of the memory matches the valence of the body postures (Riskind, 1983). For example, Parzuchowski and Szymkow-Sudziarska (2008) found that participants who mimicked a facial expression of surprise recalled more surprising words and words spoken in a surprising manner than neutral words and words spoken in a neutral tone. Interestingly, the researchers did not find differences between the ratings measuring the experiential state of feeling surprised when participants mimicked a face of surprise and when they mimicked a neutral face. Such results seem to contradict those obtained by Rhodewalt and Comer (1979), mentioned above, in which differences in affect were found when participants wore different facial expressions.

Based on the premise that sensorimotor disposition affects cognitive processes, Havas, Glenberg, and Rinck (2007) investigated whether the comprehension of emotions through language is affected by relevant bodily states. Havas et al. examined whether the time to identify the emotional valence of a sentence is affected by bodily states that are consistent or inconsistent with relevant emotional responses such as smiling or frowning. They asked participants to judge the pleasantness of sentences while holding a pen either in their lips, consistent with a frown, or in their teeth, consistent with a smile. The results revealed that participants were faster to identify unpleasant sentences when they held the pen in their lips and were faster to identify pleasant sentences when they held the pen in their teeth. Havas et al. suggested that bodily states might directly affect the comprehension of emotional sentences without activating any affective state (see also Rhodewalt & Comer, 1979, for similar claims). This is in contrast to the results of Strack et al. (1988), who found that holding a pen with the lips or the teeth appeared to induce a relevant change in affect, but not in cognitions.

Other studies have shown that when participants were asked to rate their final moods, after an affective state was induced, there were no significant differences in moods between conditions meant to elicit opposite affective states (e.g., Cretenet & Dru, 2004; Förster & Strack, 1997). Hence, such results could suggest a pathway between body and cognition without the mediation of emotions. This idea is in contrast to findings in the neurobiology of emotions (see Lewis, 2005) and behavioural experiments (e.g., Strack et al., 1988) suggesting that behavioural expressions affect reported feelings and subsequent judgments.

This ambiguity in results deserves further examination in order to better understand how bodily states are linked to affective states and, in turn, how cognitions may be affected. However, a provisional explanation can be put forward. All of these experiments refer to a motor congruency effect where motor actions will influence the processing of valenced information (see Förster & Strack, 1997). However, emotional systems are composed of cognitive, sensorimotor, and neurophysiological subsystems that respond to external and internal stimulus events as relevant to major concerns of the organism (Scherer, 2005). This means that as there is a "grounding level" in the processing of abstract concepts, there could be a "motor congruency level" in the processing of valenced information. Namely, a motor disposition like smiling might have more cognitive and sensorimotor meaningful-connectivity to a positive valenced state than that of an arm flexion. Also, it would be expected, given social and contextual restrictions, that certain motor actions may have a higher priority than others given strong and meaningful associations between valenced states and their neuropsychological and sensorimotor referents.

The importance of the experiments reported thus far, particularly that of Havas et al., lies in the fact that they suggest that bodily states influence cognitive states without seemingly eliciting any significant affective state (although results by Vissers et al., 2010, suggest that both cognitive and affective states are influenced). This idea is also supported by research using other motor expressions. Förster and Strack (1997, 1998) had participants perform either approach (arm flexion) or avoidance (arm extension) behaviours while generating names for celebrities towards whom they had positive, negative, or neutral attitudes. Participants in the "arm flexion" condition generated more names of positively evaluated celebrities while participants in the "arm extension" condition generated more names of negatively evaluated persons.

A reversed pattern between motor response and judgment of stimuli is reported by Brouillet et al. (2010, Experiment 1). In their experiment, participants had to judge words and non-words by pulling or pushing a lever. The researchers found that participants were faster to indicate "yes, it's a word" by pushing a lever than by pulling it, whereas indicating "no, it's not a word" was faster when the lever was pulled than pushed. The difference between the results found by Förster and Strack (1997, 1998) and those found by Brouillet et al. can be explained in terms of the task requirements. Whereas Förster and Strack's tasks required generating names, Brouillet et al.'s experiment required evaluating words. Thus, Förster and Strack's tasks induced a context in which negative names had to be expelled (arm extension, similar to a pushing action), whereas positive names had to be retained (arm flexion, similar to a pulling action). However, in Brouillet et al.'s task the action of pushing (similar to an arm extension) can be associated with reaching for the word, whereas the action of pulling (similar to an arm flexion) can be associated with the action of avoiding the non-word (see also Eder & Rothermund, 2008). The message from these experiments is that there is an association between motor response directionality and evaluation of stimuli, but such an association can be reversed or altered given task demands (see also Markman & Brendl, 2005).

Processing emotional stimuli

Processing of images

In most emotion research three types of stimuli are commonly used: negative, neutral, and positive. Ping, Dhillon, and Beilock (2009) reported that even seemingly neutral stimuli could receive some sort of emotional appraisal, which in turn affects their processing. So, how are non-neutral stimuli processed? Most research focuses on the processing of emotional vs. non-emotional stimuli, the former being composed of negative and positive stimuli. There is evidence demonstrating that negative stimuli take longer to be processed since they may pose a potential threat to the organism. During the encoding process, negative stimuli need to be reliably appraised, which translates into extra processing time (Flykt, Dan, & Scherer, 2009). However, during the retrieval process, negative stimuli are recognised more confidently and in a shorter time than any other emotional stimuli (Gordillo León et al., 2010). There is also evidence suggesting a link between the processing of negatively valenced stimuli and motor processes. For example, it has been found that passive viewing of negatively-laden images produces reduced body sway, which can be understood as a bodily manifestation of a freezing strategy (Stins & Beek, 2007).

Other evidence suggests that emotionally laden stimuli are better identified over neutral stimuli since the former demands enhanced perceptual processing most possibly due to their emotional significance (see Zeelenberg, Wagenmakers, & Rotteveel, 2006). It has been shown also that recognition of neutral stimuli can be either enhanced or impaired given the sensory modality in which emotionally laden stimuli are presented. Thus, Zeelenberg and Bocanegra (2010) found that when emotionally laden stimuli were visually presented, recognition of visually presented neutral stimuli was impaired. However, when emotionally laden stimuli were auditorily presented, recognition of visually presented neutral stimuli was enhanced. These results could show that cross-modal cueing can enhance recognition of stimuli but at the same time could suggest that results are dependent on task requirements.

It is also important to note that the processing of emotional stimuli can be affected not only because of the emotional valence they represent (negative, neutral, or positive), but also because of the emotional intensity and arousal they have. In an ERP study Versace, Bradley and Lang (2010) showed that emotionally arousing pictures were recognised better than neutral pictures, particularly when pictures were semantically unrelated, even when pictures were presented for very brief periods of time (~184ms). Although in this experiment only ERPs and picture discrimination indexes were measured, it could be argued that higher recognition of emotionally arousing stimuli over neutral stimuli could occur in the form of RTs. Thus, if negative stimuli require further processing time (e.g., Flykt et al., 2009) and emotionally arousing stimuli are recognised better than neutral stimuli, then it is possible to believe that positive stimuli would be processed faster than neutral and negative stimuli. It could be further entertained that the processing of negative images might demand extra processing load since these types of images might not be very common in everyday life.

However, some evidence suggests that the judgment times for positive and negative images are not different and that they rather overlap regardless of their exposure time (Maljkovic & Martini, 2005; Experiment 2). Other evidence, on the contrary, indicates that negative images are detected more quickly and easily than positive images (Dahl, Johansson, & Allwood, 2006). Such a discrepancy in findings highlights the fact that processes other than perceptual ones might play a role in the judgment of emotional images, i.e., not only low-level cognitive processes might be at stake but also high-level processes might determine the results. In addition, methodological and probabilistic factors can account for such discrepancies in findings (see Miller, 2009). For example, while in Experiment 1 Dahl et al. (2006) found that recognition performance was not dependent on image valence, Experiment 2 did not replicate this result even though the whole experimental setting was the same as that of Experiment 1.

Processing of faces

From an embodied point of view, it would be expected that bodily states might affect the processing of emotionally laden images, as it has been demonstrated in the case of emotionally laden language (e.g., Havas et al., 2007). Current evidence suggests that indeed bodily states affect the perception of emotionally laden images, thus replicating the results found by Havas et al. but for the particular case of faces. Blaesi and Wilson (2010, Experiment 1) had participants hold a pen between the teeth ("smiling" condition) or not to hold a pen ("no-pen" condition) while looking at 11 pictures of the same face morphed in 10% increments ranging from smiling to frowning. Participants' task was to judge whether the face was "happy" or "sad". A Probit analysis showed that the threshold for perceiving faces as happy was significantly lower in the "pen-in-teeth" condition than in the "no-pen" condition.

That is, people in a "happy" state were more prone to judge faces as happier than when they were not in such an emotional state. The original idea underlying this experiment was to demonstrate that people's motor actions (e.g., pen in teeth vs. no pen) affect the judgment of human-body stimuli (e.g., emotional faces). However, in this experiment, as in Havas et al.'s, it was implicitly assumed that the pen condition induced an affective state without directly measuring whether that actually occurred. Thus, taking some measures of participants' affective states could assist in answering the question of whether the action-perception link is mediated by changes in affective states.

This is not a new question in emotion research, but its answer is not yet agreed upon, as the work reviewed thus far suggests. In addition, it is still open to question whether such bodily manipulations affect the judgment of emotional stimuli other than faces and sentences, e.g., emotionally laden pictures. For example, it is worth investigating whether a negative bodily manipulation (e.g., pen in lips) would produce differential effects during the processing of emotional images. Specifically, a study could be designed to replicate Blaesi and Wilson's (2010, Experiment 1) results and extend them by requiring participants to judge emotional faces while wearing valenced emotional faces that are congruent or incongruent with those being judged (e.g., judging happy vs. sad faces while participants are in similar facial dispositions by means of the pen manipulation).

Methodological considerations regarding the elicitation of emotional states

Perceptual and motor systems are activated during the processing of emotional stimuli, but the automaticity of their activation is currently being explored. Nevertheless, it is still quite debatable how and when emotional states occur. Also, it is not clear yet whether emotional states are the cause or the consequence of evaluating emotionally laden stimuli.

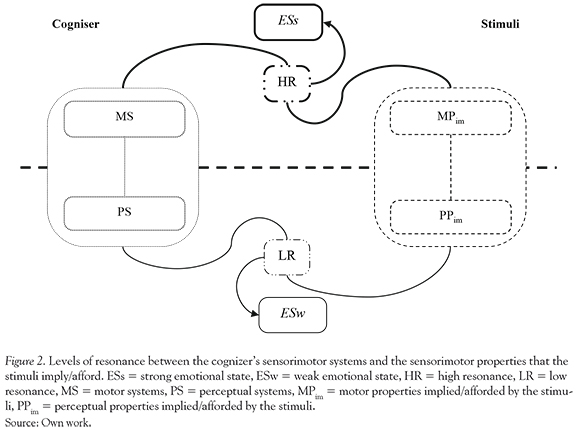

It can be entertained that the elicitation of emotional states can depend on the perceptual and motor resonance existing between the stimuli content and the cognisers' sensorimotor systems. Resonance is understood here as the level of similitude between the sensorimotor properties implied and afforded by the stimuli and the sensorimotor systems that can be activated in the cogniser in relation to the stimuli (see Rizzolatti, Fadiga, Fogassi, & Gallese, 1999, for a definition of resonance behaviour from a neurological perspective). Hence, it could be further argued that a high motor and perceptual resonance could lead to a strong elicitation of emotional states, while a low sensorimotor resonance could lead to a weak emotional elicitation (see Figure 2).

For instance, the processing of emotionally-laden sentences could elicit both perceptual and motor systems in the reader since sentences usually refer to events where agents carry out actions on objects and those events are enriched by linguistic figures that elicit perceptual properties. However, a measurable emotional state might arise only if the cogniser is in a bodily state that activates sensorimotor systems that highly resonate with the sensorimotor properties afforded by the sentences. For example, sentences like "Your debate opponent brings up a challenge you hadn't prepared for. It's certain that now you're going to lose the point" might have high resonance with the motor behaviour of a frown, while they might not elicit the motor behaviour of running. If the reader is wearing a frown while reading these sentences, a strong emotional state will most probably arise since there is high resonance between the motor action implied by the sentences and the motor action being worn by the cogniser.

It is important to put forward another aspect of the processing of emotionally laden stimuli, namely whether it necessarily requires the activation of both motor and perceptual systems or of just one of them. The case of language processing could be one of the few instances in which both systems could be activated given the properties already mentioned about language. But even so, the activation of motor systems does not always occur during the processing of linguistic stimuli (recall the results of Raposo et al., 2009, in regard to the processing of concrete concepts). Thus, it could be argued that the processing of emotionally-valenced stimuli, as an instance of abstract concepts, could be driven by only one of the systems. From a cognitive and neurological point of view it is more parsimonious if only one of the systems takes control of the processing while the other system is left in a stand-by state. Motor theories of social cognition support this claim. These theories argue that the processing of social actions can rely only on perceptual processes (see Jacob & Jeannerod, 2005). In addition, single-cell recording studies in primate brains show that some groups of neurons in the anterior inferotemporal cortex and the frontal eye fields covary only with behavioural motor responses, while other neurons covary only with perceptual responses. More interestingly, another group of neurons appears to have an intermediate role in the sensory-motor continuum (see DiCarlo & Maunsell, 2005).

This claim suggests that even the processing of stimuli that entail motor properties might not always and necessarily call for the activation of motor systems. Such a claim could be particularly evident for the processing of pictorial stimuli. In those cases it would be expected that perceptual systems take control over the processing. All in all, it could be expected that if there is a high resonance between the stimuli's sensorimotor properties and the cogniser's sensorimotor systems, an emotional state can occur, regardless of whether the processing is driven by both perceptual and motor systems or by just one of them.

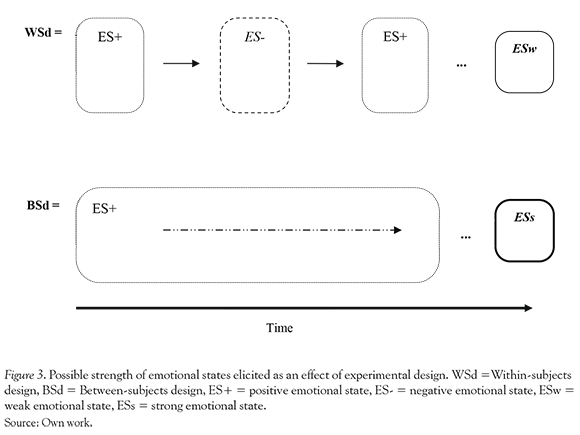

Strong levels of elicitation might be uncovered by simple rating tasks or questionnaires, while other measures, like EEG or EMG, might be needed to dredge up weak elicitations. Additionally, the time for which an affective state is sustained might also determine the strength with which an emotional state is elicited. For example, it could be argued that an emotional state is more likely to emerge when the state is sustained for long periods of time than when it is sustained for short periods of time. In experimental design terms, emotions sustained for long periods of time (i.e., strong emotional states) could emerge in between-subjects designs, while emotions sustained for short periods of time (i.e., weak emotional states) could occur in within-subjects designs (see Buck, 1980). However, this claim is yet to be empirically tested (see Figure 3).

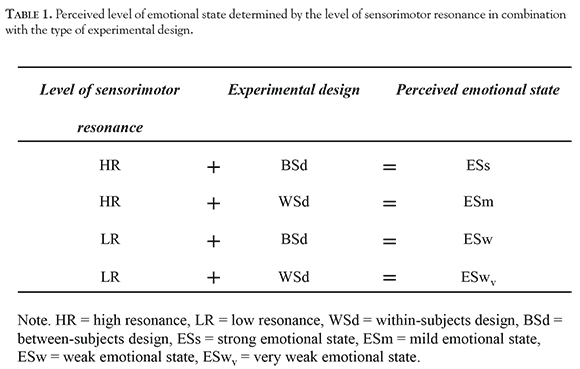

Additionally, it can be argued that a combination of the level of sensorimotor resonance between the cogniser and the stimuli with different experimental designs could lead to different levels of emotional state elicitation. As Table 1 shows, it could be expected that in general low sensorimotor resonance, regardless of occurring in between-subjects or within-subjects designs, would lead to weak emotional states. When high sensorimotor resonance occurs in within-subjects and between-subjects designs, mild and strong emotional states, respectively, could emerge.

Finally, it is worth mentioning that "picking up" the activation of perceptual and motor systems during the processing of emotional stimuli depends directly on the measures taken (e.g., behavioural vs. neurological) and the analysis performed on the data. Recall the experiments of Dahl et al. (2006). In that study, a replication of an experiment failed to produce the results already found in a former experiment even though the experimental conditions and the statistical analyses remained unchanged.

Details regarding the following series of experiments

The following series of experiments aims at replicating previous findings suggesting that judgment of emotionally-laden sentences requires the participation of sensorimotor systems (Experiments 1 and 2), and at extending the results to the case of pictorial stimuli like emotionally-valenced images (Experiments 3 and 4) and facial expressions (Experiments 5 and 6).

Experiments 1 and 2 aimed at replicating the results obtained by Havas et al. (2007). In their study, participants held a pen in either their teeth or their lips while judging the pleasantness of sentences. The pen-in-teeth condition was employed to covertly elicit a smile, whereas the pen-in-lips condition sought to covertly elicit a frown. Researchers hypothesised that while the covert smile would activate a positive emotional state in participants, the covert frown would activate a negative emotional state. Being in a positive emotional state would in turn lead participants to be more prone to recognise similarly valenced input (i.e., pleasant sentences) than dissimilarly valenced input (i.e., unpleasant sentences). Being in a negative state would show an opposite pattern.

The hypotheses raised by Havas et al. (2007) were confirmed. That is, when participants were in a covert positive emotional state pleasant sentences were read faster than unpleasant sentences, while unpleasant sentences were read faster when participants were in a negative emotional state. Similar results are expected for Experiments 1 and 2 reported here. However, in these experiments a couple of experimental conditions not reported in Havas et al.'s (2007) original study were included. One of the experimental conditions consisted of assigning participants to counterbalanced key responses. That is, half of the participants indicated that a sentence was pleasant by pressing a right hand key and indicated that a sentence was unpleasant by pressing a left hand key. For the other half of the participants the key response assignment was reversed.

This counterbalance is motivated simply as a methodological control (see Pollatsek & Well, 1995). The other condition was the inclusion of a measure of participants' changes in emotional states given the pen manipulation. This condition was kept across all the experiments reported here along with the pen manipulation. A measurement of participants' emotional states was included since it is still open to question whether cognitive processes, like judging sentences or images, are mediated by changes in emotional states. To tackle this question, participants' mood states were measured across experiments via Likert rating scales to determine whether the pen manipulation had an effect on participants' emotional states. Based on the review presented in previous sections, it is likely that a pen condition that induces a negative emotional state could lead participants to rate their emotional state as less happy than if they were in a positive emotional state. However, the review also suggested that this might not be always the case and methodological factors might play an important role in the results obtained.

In all experiments reported here, the traditional Likert rating scale was used. Traditional Likert scales consist of discrete measures that represent the level of agreement a person has regarding a specific question. The levels of agreement are in turn represented by a fixed number of choices anchored by selected numeric values. For example, a 5-point Likert scale could range from 1 to 5, where 1 means "low agreement" and 5 "high agreement" and where the discrete numbers in between, i.e., 2, 3, and 4, represent intermediate levels. The participant's choice is made by selecting one of the available options (see Likert, 1932). In Experiment 5, however, a modified Likert rating scale was employed. The new Likert scale had a fixed range going from 0 to 1, but the scores in between those values were continuous and could be selected by moving a slider along the rating scale. The reasoning behind this sliding rating scale was that more granular measures of participants' emotional changes could be obtained.

Experiments 3 and 4 differed from the previous experiments only in the type of stimuli used. In those experiments emotionally-valenced images were used. In principle, it is predicted that the results originally reported by Havas et al. (2007) also hold for the case of pictorial stimuli. That is, pleasant images would be judged as pleasant faster than unpleasant images only when participants are holding a pen in their teeth (i.e., smile condition); whereas an opposite pattern could be expected when participants hold a pen in their lips. However, it is tenable to believe that the type of stimuli used can cause different results, all other factors being equal. As suggested by the review, pictorial stimuli seem to exert a strong effect that in turn overrides other type of manipulations. On the other hand, to our knowledge, there are no studies studying a potential interaction between body manipulations and pictorial stimuli. Thus, Experiments 3 and 4 seek to provide evidence in this front.

Possibly the strongest effect that pictorial stimuli can have on participants is that produced by facial expressions. As research shows, facial expressions highly resonate with emotional states, producing enhanced amygdala activation (Hauk et al., 2004). Although some studies have induced emotional states in participants via video-clips or music, the only study designed to examine how direct body manipulations affect the judgment of facial expressions is that of Blaesi and Wilson (2010) reported above. Thus, Experiments 5 and 6 are designed to obtain results that complement or extend Blaesi and Wilson's claims.

In sum, the following series of experiments aims at fleshing out the framework provided by current embodied cognition theories as to how emotionally laden information is processed.

Experiment 1

Participants

One hundred and four undergraduate students at the University of Adelaide participated in the experiment in return for payment or course credit (22 males, Mage = 21.3, SD = 4.53). No information about handedness was obtained from participants. All participants were fluent in English. The School of Psychology Research Ethics Committee approved the experimental protocol and all participants signed a written consent form.

Materials

The stimuli consisted of the original set of sentences used in Experiment 1 by Havas et al. (2007), although some of the sentences were modified for Australian participants.

There was a total of 96 sentence pairs, each having a pleasant and an unpleasant version. Note that whereas in Havas et al. (2007) only the second part of the sentence pair was presented, in this experiment both parts were presented, i.e., context and emotional sentence were shown together. An example of a sentence pair is given below.

Pleasant version:

Sweating after the long walk to the lake, you take off your shoes. The water is just perfect for a swim.

Unpleasant version:

Sweating after the long walk to the lake, you take off your shoes. The water isn't hot enough for a swim.

Participants' mood was measured using a 6-point scale labelled from 1 (very sad) to 6 (very happy). The sentences and the mood rating scale were presented to participants on a computer screen.

Procedure

The 96 sentence pairs were randomly assigned to 8 blocks of 12 items each (6 pleasant and 6 unpleasant versions presented in a random order). Before each block, participants were instructed to place a pen either between their lips or between their teeth. In order to make the manner of holding the pen clear, a photograph of a male and a female holding the pen according to the relevant condition was shown to participants on the screen as an example at the beginning of every block (see an example in Niedenthal, 2007).

At the end of each block, participants responded to the question "How do you feel right now?" on the 6-point mood scale using the computer mouse to record their answers. Participants were then instructed to read each sentence pair and to decide if they referred to a pleasant or unpleasant event. They were instructed to respond quickly but to try not to make any errors. Participants responded to the sentences by pressing a designated key on a computer keyboard. Two keys were used; the tab key on the left and the backslash key on the right. One half of the participants pressed the right-hand key to indicate a pleasant sentence and pressed the left-hand key to indicate an unpleasant sentence. The remaining half received the opposite response assignments.
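The block structure and key counterbalancing described above can be sketched roughly as follows. This is only an illustration and not the authors' experiment script: the function names, the use of integer pair identifiers, the alternation of pen conditions across blocks, and the odd/even rule for key assignment are all assumptions introduced here.

```python
import random


def build_blocks(n_pairs=96, n_blocks=8, seed=0):
    """Randomly assign sentence pairs to blocks, half pleasant and half unpleasant."""
    rng = random.Random(seed)
    pair_ids = list(range(n_pairs))
    rng.shuffle(pair_ids)
    per_block = n_pairs // n_blocks  # 12 items per block
    half = per_block // 2            # 6 pleasant and 6 unpleasant versions
    blocks = []
    for b in range(n_blocks):
        chunk = pair_ids[b * per_block:(b + 1) * per_block]
        items = [(pid, "pleasant") for pid in chunk[:half]]
        items += [(pid, "unpleasant") for pid in chunk[half:]]
        rng.shuffle(items)  # random presentation order within the block
        # Assumption: pen conditions simply alternate across blocks.
        pen = "teeth" if b % 2 == 0 else "lips"
        blocks.append({"pen": pen, "items": items})
    return blocks


def key_mapping(participant_index):
    """Counterbalance response keys (tab = left hand, backslash = right hand)."""
    # Assumption: even-numbered participants get pleasant on the right-hand key.
    if participant_index % 2 == 0:
        return {"pleasant": "backslash", "unpleasant": "tab"}
    return {"pleasant": "tab", "unpleasant": "backslash"}
```

Calling `build_blocks()` returns eight blocks of twelve items, and `key_mapping(i)` gives the response keys for the i-th participant.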

Design and statistical analyses

There were three dependent variables: response time in the sentence judgment task, error rates, and mood ratings. Mean correct response times were analysed using a 2 × 2 × 2 mixed ANOVA with the factors of Pen Condition (teeth vs. lips), Sentence Valence (pleasant vs. unpleasant), and Response Key Assignment (pleasant-left / unpleasant-right vs. unpleasant-left / pleasant-right). The first two factors were manipulated within participants and the last factor was manipulated between participants. Mood ratings were submitted to a repeated measures ANOVA with the factors of Pen Condition and Response Key Assignment. In this and the remaining experiments, effect sizes (η) are reported for interactions and main effects of interest 2.

Results

Average error across conditions was computed for each participant. Participants whose error rates were more than 2.5 SD below or above the overall mean performance were removed (four participants were removed)3. Thus, the data from 100 participants were submitted to analyses.
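A minimal sketch of this kind of exclusion rule is given below. It assumes that each participant is summarised by a single mean error rate and that the sample standard deviation is used; neither detail is stated in the text, and the function name is hypothetical.

```python
from statistics import mean, stdev


def exclude_outliers(scores, criterion=2.5):
    """Keep participants whose score lies within `criterion` SDs of the group mean.

    `scores` maps a participant identifier to that participant's mean error rate.
    """
    values = list(scores.values())
    m = mean(values)
    sd = stdev(values)  # assumption: sample SD (n - 1 in the denominator)
    lower, upper = m - criterion * sd, m + criterion * sd
    return {pid: s for pid, s in scores.items() if lower <= s <= upper}
```

The same function could be applied to mean RTs, as was done for the exclusions in the later experiments.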

A mean RT analysis was performed on the data after removing four participants. Results showed only a borderline significant main effect of pen condition, F (1,98) = 3.59, p = 0.061, η = 0.18. No other main effect or interaction reached significance 4. These results suggested that regardless of sentence valence and key response allocation, when participants held the pen in their teeth, both pleasant and unpleasant sentences tended to be read faster (M = 5247, SE = 190) than when participants held the pen in their lips (M = 5355, SE = 194).
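For readers who wish to relate the reported effect size to the F ratio, the following sketch may help. It assumes that the effect size is the square root of partial eta squared, which is an inference from the reported values rather than something stated in this section; the function name is hypothetical.

```python
import math


def eta_from_f(f_value, df_effect, df_error):
    """Square root of partial eta squared, computed from an F ratio."""
    # Assumption: partial eta squared = (F * df_effect) / (F * df_effect + df_error)
    partial_eta_sq = (f_value * df_effect) / (f_value * df_effect + df_error)
    return math.sqrt(partial_eta_sq)


# Main effect of pen condition in Experiment 1: F(1, 98) = 3.59
eta_pen = eta_from_f(3.59, 1, 98)
```

Under this assumption the value for the pen condition effect comes out at roughly 0.19, close to the reported 0.18.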

A proportion correct analysis showed a main effect of sentence valence, F (1,98) = 37.695, p < 0.001, η = 0.52, indicating that regardless of pen condition and key response allocation, pleasant sentences were judged less correctly (M = 0.8, SE = 0.010, 95% CI = 0.78-0.82) than unpleasant sentences (M = 0.86, SE = 0.006, 95% CI = 0.85-0.88). No other main effects or interactions reached significance in the error analysis.

An analysis of mood ratings showed neither interactions nor main effects, all F < 1.

Experiment 2

Participants

One hundred and twenty-five undergraduate students at the University of Adelaide participated in the experiment in return for payment or course credit. Fifteen participants were left-handed and the rest (110 participants) were right-handed (RHs). Left-handers (LHs) were removed and only the data of RHs were used for the analyses5. Handedness was determined by self-report (see Coren, 1993). RHs whose overall proportions of correct answers across conditions were more than 2.5 SD above or below the mean error rate were removed (1 participant). Also, the data of participants whose RTs across conditions were more than 2.5 SD above or below the mean RTs of all participants were removed (3 participants). Thus, the data from 106 participants were submitted to analyses (26 males, M = 20.1, SD = 5.63).

Materials

The stimuli consisted of a revised version of the sentences used in Experiment 1. Since pleasant sentences were judged less accurately than unpleasant sentences in Experiment 1, it was suspected that a speed-accuracy trade-off (SAT) could have accounted for the results. SAT functions are traditionally characterised by an exponential form and a positive correlation between RT and accuracy: the faster the RTs, the lower the accuracy, and the slower the RTs, the higher the accuracy (see Wickelgren, 1977). A correlation analysis showed that in Experiment 1 there was a significant negative correlation between accuracy and RT for both pleasant and unpleasant sentences, r (104) = -0.4, p < 0.001, and r (104) = -0.341, p < 0.001, respectively. However, in both cases the linear fit lines never fell below chance (they remained well above 0.5).
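The correlation check described above can be illustrated with a short sketch. The per-participant mean RTs and accuracy values below are invented for the example, and the function is a plain Pearson correlation; nothing here reproduces the article's data.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical per-participant summaries (RT in ms, proportion correct).
mean_rt = [4200, 4800, 5100, 5600, 6100, 6500]
accuracy = [0.90, 0.88, 0.84, 0.83, 0.79, 0.76]

r = pearson_r(mean_rt, accuracy)
# A positive r would point towards a speed-accuracy trade-off;
# a negative r means that slower participants were also less accurate.
print(round(r, 3))
```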

For Experiment 2, the sentences were re-worded in light of these results. A correlation analysis showed that there was no significant negative correlation between accuracy and RT for either pleasant or unpleasant sentences, r (125) = -0.154, p = 0.08, and r (125) = -0.123, p = 0.17, respectively. Once again, the linear fit lines were never below chance. Also, the re-worded sentences used in Experiment 2 were judged more accurately (M = 0.87, SD = 0.08) than the sentences used in Experiment 1 (M = 0.82, SD = 0.1), t (227) = 4.24, p < 0.0001. Thus, in Experiment 2, no SAT can account for the results found.

Procedure, design and statistical analyses

The procedure, design, and statistical analyses were the same as those used in Experiment 1.

Results

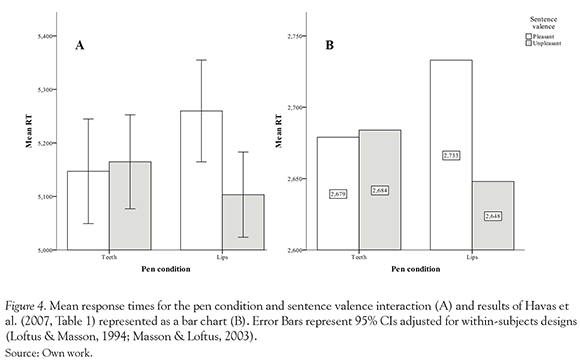

The results showed an interaction between pen condition and sentence valence that approached significance, F (1,104) = 3.71, p = 0.057, η = 0.18 (see Figure 4A).

That is, when participants held the pen in their teeth, pleasant sentences (M = 5147, SE = 49.26) were read 17 ms faster than unpleasant sentences (M = 5164, SE = 44.24), whereas when participants held the pen in their lips, pleasant sentences (M = 5259, SE = 47.95) were read 166 ms slower than unpleasant sentences (M = 5103, SE = 40.18). This pattern of results not only resembled that obtained by Havas et al. (2007) (see Figure 4B), but also neared statistical significance. Additionally, the proximity between the effect size reported here and the computed effect size of Havas et al. (η = 0.21) lends support to the original finding reported by these researchers6.

No other main effect or interaction reached statistical significance, all p > 0.1.

A proportion correct analysis showed a main effect of sentence valence, F (1,104) = 24.33, p < 0.001, η = 0.43, and a 3-way interaction among pen condition, sentence valence, and key response assignment, F (1,104) = 5.22, p = 0.024, η = 0.21. Overall, there was less agreement for pleasant sentences (M = 0.856, SE = 0.008, 95% CI = 0.84-0.86) than for unpleasant sentences (M = 0.904, SE = 0.006, 95% CI = 0.89-0.91).

No other main effect or interaction was significant in the error and RT analyses, all p > 0.1.

An analysis of mood ratings showed neither interactions nor main effects, all F < 1.0.

Experiment 3

Participants

One hundred and twenty-eight undergraduate students at the University of Adelaide participated in the experiment in return for payment or course credit. Sixteen participants were left-handed and the remaining 112 were right-handed (RHs). Only the data of RHs were used for the analyses. The data from those RHs whose proportions of correct answers across conditions fell more than 2.5 SD below or above the mean were removed (5 participants). Also, the data from participants whose RTs across conditions fell more than 2.5 SD below or above the mean were removed (3 participants). Thus, the data from 104 participants were submitted to analyses (28 males, Mage = 20.4, SD = 4.93). The School of Psychology Research Ethics Committee approved the experimental protocol and all participants signed a written consent form.

Materials

Ninety-six images from the International Affective Picture System (IAPS; Lang, Bradley, & Cuthbert, 2008) were used. Forty-eight pictures were neutral (Mvalence = 5, SD = 0.28 and Marousal = 5.02, SD = 0.61) and the remaining pictures were positive (Mvalence = 7.34, SD = 0.27 and Marousal = 4.96, SD = 0.24). The neutral pictures consisted mostly of photographs of people, animals, and objects. The positive pictures consisted mostly of couples, babies, and landscapes. The selection criteria were as follows. Pictures in the IAPS are rated on a 9-point Likert scale in which 1 represents a low rating (e.g., low pleasure, low arousal) and 9 a high rating on each dimension (e.g., high pleasure, high arousal). Thus, pictures with ratings close to 7.5 would be positive, and pictures with ratings close to 5 would be neutral. On this basis, the selection process had 3 steps: a) all pictures whose valence ratings ranged between 7 and 8 (positive pictures) or between 4.5 and 5.5 (neutral pictures) were selected, b) to control for arousal, only pictures whose arousal ratings ranged between 4.5 and 5.5 were retained within each category, and finally c) only 48 pictures were kept for each of the two categories. Because the neutral category ended up with several pictures of the same kind (i.e., 12 erotic pictures), these were replaced with other neutral pictures already obtained in step a).
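The three selection steps above amount to a range filter on the normative ratings followed by a cap on the number of pictures. The sketch below illustrates this under stated assumptions: the `Picture` record, the picture identifiers, and the ratings are invented, and taking the first 48 matches is a placeholder, since the text does not say how the final 48 pictures per category were chosen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Picture:
    picture_id: str   # hypothetical identifier, not a real IAPS number
    valence: float    # normative rating on the 1-9 scale
    arousal: float    # normative rating on the 1-9 scale


def select_pictures(pictures, valence_range, arousal_range=(4.5, 5.5), limit=48):
    """Steps a) to c): filter by valence, then by arousal, then cap the set size."""
    low_v, high_v = valence_range
    low_a, high_a = arousal_range
    matches = [
        p for p in pictures
        if low_v <= p.valence <= high_v and low_a <= p.arousal <= high_a
    ]
    # Assumption: keep the first `limit` matches in input order.
    return matches[:limit]


# Hypothetical norms for four pictures.
norms = [
    Picture("a", 7.4, 5.0),  # positive valence, arousal in range
    Picture("b", 7.6, 6.2),  # positive valence, arousal too high
    Picture("c", 5.1, 4.8),  # neutral valence, arousal in range
    Picture("d", 3.0, 5.0),  # negative valence, excluded from both sets
]

positive = select_pictures(norms, valence_range=(7.0, 8.0))
neutral = select_pictures(norms, valence_range=(4.5, 5.5))
print([p.picture_id for p in positive], [p.picture_id for p in neutral])
```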

A two-tailed independent samples t test showed that the two image categories differed significantly in valence, t (94) = 40.812, p < 0.001 (equal variances assumed, F < 1), and did not differ significantly in arousal, t (61.673) = -0.549, p = 0.585 (equal variances not assumed, F (1, 94) = 33.844, p < 0.001).
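The fractional degrees of freedom reported for the arousal comparison are what a t test without the equal-variance assumption (Welch's test) produces. As a minimal sketch, assuming this is the correction that was applied, the statistic and its Welch-Satterthwaite degrees of freedom can be computed as follows; the ratings in the example are invented.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error contributed by each group.
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    t_value = (mean(sample_a) - mean(sample_b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1)
    )
    return t_value, df


# Hypothetical ratings with clearly unequal spread.
group_a = [1, 2, 3, 4, 5]
group_b = [2, 4, 6, 8, 10]
t_value, df = welch_t(group_a, group_b)
print(round(t_value, 3), round(df, 3))
```

With unequal variances the resulting degrees of freedom fall below the pooled value of n1 + n2 - 2, which is the pattern seen in the arousal comparison (61.673 rather than 94).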

Procedure

The procedure was similar to that of Experiment 1. This experiment differed in that images were used instead of sentences and that participants were asked to decide whether the images were positive or negative7. Images remained on the screen until a response was made. As in Experiments 1 and 2, the images were presented across 8 blocks of 12 trials each. At the end of each block, participants responded to the question "How do you feel right now?" on the 6-point mood scale using the computer mouse to record their answers. As in Experiments 1 and 2, one half of the participants pressed the right-hand key to indicate a pleasant image and pressed the left-hand key to indicate an unpleasant image. The remaining half received the opposite response assignments.

Design and statistical analyses

As in Experiments 1 and 2, there were three dependent variables: response time in the image judgment task, error rates, and mood ratings. Mean correct response times were analysed using a 2 × 2 × 2 mixed ANOVA with the factors of Pen Condition (teeth vs. lips), Image Valence (positive vs. negative), and Response Key Assignment (positive-left / negative-right vs. negative-left / positive-right). The first two factors were manipulated within participants and the last factor was manipulated between participants.
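Before a mixed ANOVA of this kind can be run, trial-level data have to be reduced to one mean correct RT per participant and within-participant cell (Pen Condition by Image Valence). The sketch below shows that aggregation step only. The trial records and their field names are hypothetical, and Response Key Assignment is left out of the cell key because it is constant for each participant.

```python
from collections import defaultdict
from statistics import mean


def cell_means(trials):
    """Mean RT of correct trials per (participant, pen, valence) cell."""
    cells = defaultdict(list)
    for trial in trials:
        if trial["correct"]:  # error trials do not enter the RT analysis
            key = (trial["participant"], trial["pen"], trial["valence"])
            cells[key].append(trial["rt"])
    return {key: mean(rts) for key, rts in cells.items()}


# Hypothetical trials for one participant.
trials = [
    {"participant": "p01", "pen": "teeth", "valence": "positive", "rt": 1300, "correct": True},
    {"participant": "p01", "pen": "teeth", "valence": "positive", "rt": 1500, "correct": True},
    {"participant": "p01", "pen": "teeth", "valence": "positive", "rt": 4000, "correct": False},
    {"participant": "p01", "pen": "lips", "valence": "negative", "rt": 2000, "correct": True},
]

means = cell_means(trials)
print(means[("p01", "teeth", "positive")])
```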

Mood ratings were submitted to a repeated measures ANOVA with the factors of Pen Condition and Response Key Assignment.

Results

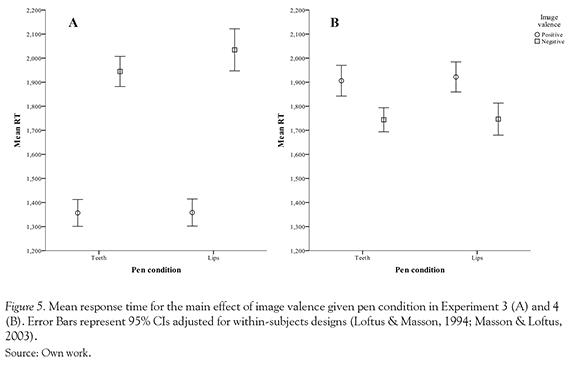

The results showed only a large main effect of image valence, F (1,102) = 154.88, p < 0.001, η = 0.77. No other main effect or interaction reached significance, all p > 0.1.

Results suggested that, regardless of pen manipulation and key response allocation, positive images were judged faster (M = 1356, SE = 41.12) than negative images (M = 1990, SE = 71.47) (see Figure 5A).

A proportion correct analysis showed only a large main effect of image valence, F (1,102) = 172.68, p < 0.001, η = 0.79. These results showed that, independent of pen manipulation and key response assignment, positive images were judged more accurately (M = 0.92, SE = 0.01, 95% CI = 0.9-0.94) than negative images (M = 0.65, SE = 0.01, 95% CI = 0.63-0.67).

An analysis of mood ratings showed neither interactions nor main effects, all F < 1.

Experiment 4

Participants

One hundred and seventeen undergraduate students at the University of Adelaide participated in the experiment in return for payment or course credit. Thirteen participants were left-handed and the remaining 104 were right-handed (RHs). Only the data of RHs were used for the analyses. Participants whose RTs across conditions fell more than 2.5 SD above or below the grand mean were removed (2 participants). Because only neutral images were used in this experiment and participants were forced to judge whether the images were positive or negative, no participants were removed due to incorrect answers. Thus, the data from 100 participants were submitted to analyses (37 males, Mage = 20.4, SD = 3.21). The School of Psychology Research Ethics Committee approved the experimental protocol and all participants signed a written consent form.

Materials

Ninety-six images from the International Affective Picture System (IAPS; Lang, Bradley, & Cuthbert, 2008) were used (Mvalence = 5.02, SD = 0.3 and Marousal = 4.53, SD = 0.72). Forty-eight pictures were the neutral images used in the previous experiment. Another set of 48 neutral pictures was taken from the IAPS. The neutral pictures consisted mostly of photographs of people, animals, and objects. Selection criteria similar to those used in the previous experiment were applied to select the other 48 neutral images.

Procedure

The procedure was similar to that of Experiment 3. However, the present experiment differed in that only neutral images were used. Participants' task was to indicate, as quickly and accurately as possible, whether the image was positive or negative while holding a pen in their lips or their teeth. As in the previous experiments, one half of the participants pressed the right-hand key to indicate a pleasant image and pressed the left-hand key to indicate an unpleasant image. The remaining half received the opposite response assignments.

Design and statistical analyses

There were three dependent variables: the proportion of images judged as positive and negative, judgment response times for each type of image, and mood ratings. The mean proportions of images judged as positive and negative were analysed using a 2 × 2 × 2 mixed ANOVA with the factors of Pen Condition (teeth vs. lips), Image Valence (negative vs. positive), and Response Key Assignment (positive-left / negative-right vs. negative-left / positive-right). The first two factors were manipulated within participants and the last factor was manipulated between participants.

A further analysis was designed to determine the mean response time for each type of image given the pen condition and response key assignment. This analysis was performed using a 2 × 2 × 2 mixed ANOVA with the same factors mentioned above.

Mood ratings were submitted to a repeated measures ANOVA with the factors of Pen Condition and Response Key Assignment.

Results

The results showed that only the main effect of image valence was significant, F (1,98) = 11.39, p < 0.001, η = 0.32. Regardless of pen manipulation and key response allocation, RTs for images judged as positive were 171 ms slower (M = 1915, SE = 25.35) than RTs for images judged as negative (M = 1744, SE = 25.35) (see Figure 5B). No other interaction or main effect was significant, all F < 1.

An analysis of the number of images judged as positive and negative, given the pen manipulation and key response assignment, showed only a main effect of image valence, F (1,98) = 34.07, p < 0.001, η = 0.5. Regardless of key response assignment and pen manipulation, on average more images were judged as negative (M = 27.91, SE = 0.78, 95% CI = 26.35-29.47) than as positive (M = 18.72, SE = 0.78, 95% CI = 17.16-20.28).
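The confidence intervals in this paragraph coincide with the mean plus or minus two standard errors. The sketch below makes that arithmetic explicit. The multiplier of 2 is inferred from the reported numbers and is an assumption; the text does not say whether a t critical value was used instead.

```python
def approx_ci(mean_value, standard_error, multiplier=2.0):
    """Approximate 95% CI as mean +/- multiplier * SE, rounded to 2 decimals."""
    half_width = multiplier * standard_error
    return (
        round(mean_value - half_width, 2),
        round(mean_value + half_width, 2),
    )


# Means and SEs taken from the paragraph above.
print(approx_ci(27.91, 0.78))  # images judged as negative
print(approx_ci(18.72, 0.78))  # images judged as positive
```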

An analysis of mood ratings showed neither interactions nor main effects, all F < 1.

Experiment 5

Participants

Seventy-six undergraduate students at the University of Adelaide participated in the experiment in return for payment or course credit. Ten participants were left-handed and the remaining 66 were right-handed (RHs). Only the data of RHs were used for the analyses. Because the interest in this experiment was to determine how "happy" or "sad" the 11 morphed faces were rated, no participants were removed due to incorrect answers. Thus, the data from 66 participants were submitted to analyses (19 males, Mage = 20.5, SD = 2.9). The School of Psychology Research Ethics Committee approved the experimental protocol and all participants signed a written consent form.

Materials

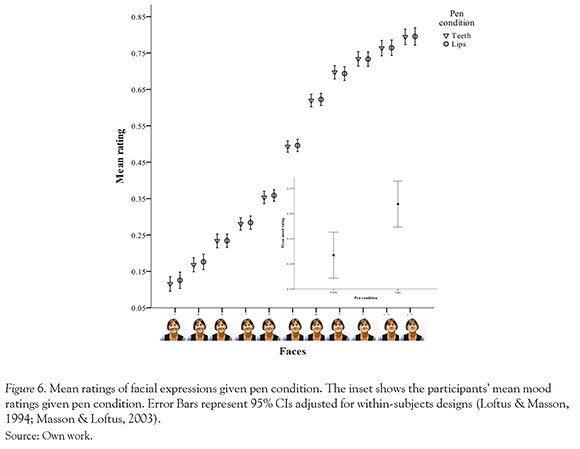

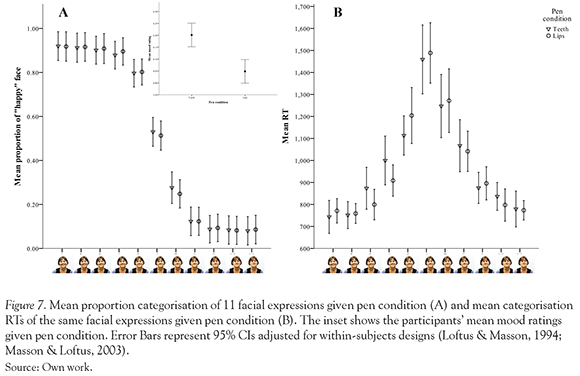

The stimuli consisted of 11 facial expressions used in a previous study by Blaesi and Wilson (2010). The 11 images are pictures of a woman's facial expressions on a continuum from smiling ("happy" face) to frowning ("sad" face) (see Figure 6).

Procedure

Participants' task was to move a slider along a scale to rate the 11 emotional facial expressions while holding a pen in their lips or their teeth. Within each of the 8 blocks, the 11 faces were presented twice in random order, accompanied by a rating scale placed underneath them. This gave 88 trials in each of the 2 conditions (pen-in-teeth and pen-in-lips), for a total of 176 trials.
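The trial counts can be checked with a short sketch of the block structure, read here as 8 blocks in which each of the 11 faces appears twice in random order. The function name and the seed are hypothetical, and how blocks were assigned to the two pen conditions is not stated; an even split of 4 blocks per condition is assumed only to illustrate the per-condition count.

```python
import random


def build_blocks(n_faces=11, repeats=2, n_blocks=8, seed=0):
    """Return a list of blocks; each block holds every face `repeats` times, shuffled."""
    rng = random.Random(seed)
    blocks = []
    for _block in range(n_blocks):
        block = [face for face in range(1, n_faces + 1) for _rep in range(repeats)]
        rng.shuffle(block)
        blocks.append(block)
    return blocks


blocks = build_blocks()
total_trials = sum(len(block) for block in blocks)

# Assumption: the first half of the blocks is run in one pen condition.
trials_per_condition = sum(len(block) for block in blocks[: len(blocks) // 2])
print(total_trials, trials_per_condition)
```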

For one half of the participants the scale was labelled with the word "unhappy" at the left end and the word "happy" at the right end. The remaining half received the opposite rating assignment. Participants used the computer mouse to move the slider along the rating scale. As in the previous experiments, participants rated their mood at the end of each block. This time, though, a sliding rating scale was also used for participants to rate their mood. The rating scale for both the 11 faces and participants' mood ranged between 0 and 1, with 0 being the highest value for "happy" and 1 the highest value for "sad".

Design and statistical analyses

The dependent variables were the ratings given to each of the 11 facial expressions and to participants' mood. Mean ratings for the facial expressions were analysed using a 2 × 2 × 11 two-way ANCOVA with the factors of Pen Condition (teeth vs. lips), Rating Assignment (left-"unhappy" / right-"happy" vs. left-"happy" / right-"unhappy"), and Facial Expression (face 1 ["happy", smiling] to face 11 ["sad", frowning]). The first factor was manipulated within participants, the second factor was manipulated between participants, and the third factor was entered as a covariate.

Mood ratings were submitted to a repeated measures ANOVA with the factors of Pen Condition and Rating Assignment.

Results