International Journal of Psychological Research

Print version ISSN 2011-2084

int.j.psychol.res. vol.6 no.spe Medellín Oct. 2013

Alexithymia Slows Performance but Preserves Spontaneous Semantic Decoding of Negative Expressions in the Emostroop Task

La alexitimia disminuye el rendimiento pero preserva la decodificación semántica espontánea de expresiones negativas en la Emostroop Task

Courtney K. Hsing (a), Alicia Hofelich Mohr (a), R. Brent Stansfield (b), Stephanie D. Preston (a,*)

a Department of Psychology, University of Michigan, Ann Arbor, Michigan, United States.

b Department of Medical Education, University of Michigan, Ann Arbor, Michigan, United States.

* Corresponding author: Stephanie D. Preston, Department of Psychology, 530 Church Street, Ann Arbor, MI 48109, United States. prestos@umich.edu

Received: 24-08-2013; Revised: 23-09-2013; Accepted: 04-10-2013

ABSTRACT

Alexithymia is a multifaceted personality construct related to deficits in the recognition and verbalization of emotions. It is uncertain what causes alexithymia or which stage of emotion processing is first affected. The current study was designed to determine if trait alexithymia was associated with impaired early semantic decoding of facial emotion. Participants performed the Emostroop task, which varied the presentation time of faces depicting neutral, angry, or sad expressions before the classification of angry or sad adjectives. The Emostroop effect was replicated, represented by slowed responses when the classified word was incongruent with the background facial emotion. Individuals with high alexithymia were slower overall across all trials, particularly when classifying sad adjectives; however, they did not differ on the basic Emostroop effect. Our results suggest that alexithymia does not stem from lower-level problems detecting and categorizing others’ facial emotions. Moreover, their impairment does not appear to extend uniformly across negative emotions and is not specific to angry or threatening stimuli as previously reported, at least during early processing. Almost in contrast to the expected impairment, individuals with high alexithymia and lower verbal IQ scores had even more pronounced Emostroop effects, especially when the face was displayed longer. To better understand the nature of alexithymia, future research needs to further disentangle the precise phase of emotion processing and forms of affect most affected in this relatively common condition.

Key Words: Alexithymia, emotion processing, emotion identification, facial expressions, semantic encoding, Emostroop.

RESUMEN

La alexitimia es un constructo de personalidad multifacética relacionada con déficit en el reconocimiento y la verbalización de las emociones. No se sabe qué causa la alexitimia o en qué etapa de procesamiento de las emociones se ve afectada primero. El presente estudio fue diseñado para determinar si el rasgo de alexitimia se asocia con una deficiencia en la decodificación semántica temprana de la emoción facial. Los participantes realizaron la tarea Emostroop, que varió el tiempo de presentación de las caras que representan expresiones neutrales, de enojo o de tristeza antes de la clasificación de los adjetivos de enojo y de tristeza. El efecto Emostroop se repitió, representado por las respuestas lentas cuando la palabra clasificada era incongruente con el antecedente de la emoción facial. Las personas con alta alexitimia eran en general más lentas en todos los ensayos, sobre todo al clasificar adjetivos tristes; sin embargo, no fue diferente en el efecto básico Emostroop. Nuestros resultados sugieren que la alexitimia no se debe a problemas de bajo nivel de detección y categorización de las emociones faciales de los demás. Además, su discapacidad no parece extenderse uniformemente a través de las emociones negativas y no es específico a los estímulos de enojo o amenaza como se informó anteriormente, al menos durante el proceso inicial. Casi en contraste con el deterioro esperado, los individuos con alta alexitimia y puntuaciones inferiores de CI verbal tuvieron efectos Emostroop aún más pronunciadas, especialmente cuando se muestra la cara por más tiempo. Para entender mejor la naturaleza de la alexitimia, la investigación futura debe separar aún más la fase precisa de procesamiento de las emociones y de las formas de afectar a los más afectados en esta condición relativamente común.

Palabras Clave: Alexitimia, procesamiento de las emociones, identificación de las emociones, expresiones faciales, codificación semántica, Emostroop.

1. INTRODUCTION

Alexithymia is a multifaceted personality construct characterized by multiple interrelated issues, including a reduced ability to identify, describe, and express emotional feelings, difficulty distinguishing feelings from sensations like arousal, and a tendency to focus on external over inner events (Nemiah, Freyberger, & Sifneos, 1976; Taylor, 1984). Despite being a subclinical phenomenon, it is often accompanied by multiple psychosomatic and psychiatric disorders such as anxiety, depression, fibromyalgia, and chronic gastrointestinal distress (Taylor, 2000). It is currently unclear what causes alexithymia or the mechanisms by which it affects emotion processing, but it is widely agreed that these individuals suffer from a deficit in the cognitive processing and regulation of emotion (Parker, Taylor, & Bagby, 1993a). For example, alexithymia is inversely related to emotional intelligence (Parker, Taylor, & Bagby, 2001) and it affects the processing of one’s own emotions as well as the ability to recognize emotions in words or others’ faces (e.g., Gil et al., 2009; Lane et al., 1996; Lane, Sechrest, Riedel, Shapiro, & Kaszniak, 2000; Parker et al., 1993a; Prkachin, Casey, & Prkachin, 2009; Suslow & Junghanns, 2002; Vermeulen, Luminet, & Corneille, 2006).

Despite these effects, which have been observed across types of stimuli and levels of processing, most researchers assume that alexithymia is associated with higher-level cognitive problems linked to one’s ability to think about emotions, such as differentiating or translating nonverbal feelings into verbal or abstract concepts (e.g., see Holt, 1995; Taylor, Bagby & Parker, 1997). For example, individuals with high levels of alexithymia are impaired at communicating subjective feelings in words (Taylor et al., 1997) and only weakly associate their own bodily sensations during emotional arousal with words (Bucci, 1997). Similarly, individuals with high alexithymia are impaired at recall but not recognition for previously presented emotional words (Luminet, Vermeulen, Demaret, Taylor, & Bagby, 2006). Moreover, alexithymia is associated in development with delayed speech (Kokkonen et al., 2003) and the emotion recognition impairments in alexithymia are sometimes eliminated after verbal IQ is taken into account (Montebarocci, Surcinelli, Rossi, & Baldaro, 2011). However, whether alexithymia is specifically a semantic or conceptual problem is difficult to confirm because almost all tasks that measure basic emotion-processing impairments require verbal responses and rarely control for verbal IQ. Moreover, the instrument most often used to measure alexithymia (the TAS-20, Bagby, Parker & Taylor, 1994) is a simple self-report questionnaire that may produce spurious data, not only because it is a verbal measure and one that is subject to self-reporting biases (Mueller, Alpers, & Reim, 2006), but also because the subscale that most often predicts impairment (“difficulty describing feelings”) also taps the degree to which people feel shame about their emotions (Suslow, Donges, Kersting, & Arolt, 2001)—the latter of which could cause people to inhibit, ignore, and fail to report emotions even if they were capable of doing so.

Other researchers assume that alexithymia results from more basic emotion-processing impairments that are not necessarily explicit, conceptual, or verbal, but may pertain more to negative states. However, the results from these studies are highly mixed as they sometimes extend to positive emotions and other times only occur for specific negative states. For example, in a PET brain imaging study, individuals with alexithymia had reduced brain activity in multiple regions for angry and sad faces compared to neutral, but not happy (Kano et al., 2003). The insula and anterior cingulate cortex (ACC)—which represent subjective pain states that increase with alexithymia—were also uniquely underactivated in the PET study during angry compared to neutral faces (Kano et al., 2003). Alexithymia has been associated with reduced activity in the amygdala to masked sad (but not happy) faces, even after controlling for trait anxiety and depression (Kugel et al., 2008; Reker et al., 2010). However, other neural regions besides the amygdala also show reductions in brain activity in alexithymia that extend to happy faces (Reker et al., 2010). Another study replicated the reduced activity in the right amygdala and medial prefrontal cortex (MPFC) in alexithymia, but the effects were only significant for disgust and did not occur during anxiety-producing images (Leweke et al., 2004). Thus, individuals with alexithymia clearly have different psychological and neural processes for affective stimuli, even when they are not verbal, but these impairments may or may not be specific to negative states.

Priming studies have helped demonstrate that deficits in alexithymia extend to earlier phases of emotion processing, which are not necessarily under conscious control, particularly during speeded processing (Parker, Prkachin, & Prkachin, 2005; Prkachin et al., 2009). Individuals with alexithymia show differences in the degree to which they are distracted by emotional primes when categorizing nonaffective stimuli (as in clinical emotional Stroop tasks) or show facilitation (interference) from rapidly presented emotional primes when categorizing congruent (incongruent) stimuli (as in affective priming tasks or the current Emostroop task). However, these effects also require verbal processing and results have been extremely mixed. Sometimes individuals with high alexithymia only show facilitation for positive and not negative prime-target pairs (Suslow, 1998), sometimes the opposite occurs (Suslow, Junghanns, Donges, & Arolt, 2001), and sometimes neither facilitation nor interference is affected, but individuals with alexithymia show faster responses when the emotional prime is incongruent with the target (Suslow & Junghanns, 2002). In a carefully controlled series of three studies comparing priming effects from words or faces (positive, negative, or neutral) on the classification of words or faces (positive or negative), the only effect observed was a reduced priming effect in alexithymia from angry faces (Vermeulen et al., 2006). The authors interpreted this effect as evidence that alexithymia reflects a specific inability to process threat cues or to marshal appropriate bodily states after their detection. However, sad primes were not significantly different from angry primes in this study, and they were marginally related to alexithymia scores across their experiments (p = .06; Vermeulen et al., 2006). Moreover, in clinical studies alexithymia is highly associated with somatization (Kano, Hamaguchi, Itoh, Yanai, & Fukudo, 2007), which is inconsistent with an inability to generate bodily states from external situations.

Others have also proposed that alexithymia reflects a problem processing threat, based on results from clinical emotional Stroop tasks in which participants label the ink color of words of varying valence. In one study, individuals with high alexithymia showed less interference from emotionally arousing words (Martínez-Sanchez & Serrano, 1997). In another, patients with high alexithymia were less distracted by emotional words that did slow performance for patients with low alexithymia, with effects increasing from positive to negative to bodily-symptom words (only the last category being significant). This reduced distraction from emotional words occurred despite the fact that individuals with high alexithymia rated the words similarly and the researchers factored out other relevant trait variables (Mueller et al., 2006). These authors agreed with Vermeulen and colleagues (2006) that alexithymia was associated with impaired threat processing, but acknowledged that alexithymics could be so sensitive to threat that it is no longer distracting or conversely could be too insensitive to threat, causing somatic disorders due to chronic dismissal of key internal cues (see also Martin & Pihl, 1985). This latter interpretation is again inconsistent with the fact that alexithymics show increased subjective distress to physical pain and present as hypochondriacal (Kano et al., 2007). Adding to the confusion, prior studies found that interference from arousing sexual words was greater in individuals with high alexithymia (Parker, Taylor, & Bagby, 1993b) or that they were neither more nor less affected by emotionally arousing words (Lundh & Simonsson-Sarnecki, 2002). Therefore, there is some indication that alexithymia is associated with reduced automatic processing of negative states, perhaps particularly anger, but the results and their meaning are far from clear.

The current framework assumes that emotional stimuli are categorized into fine-grained emotional concepts with semantic labels early in processing (see review in Preston and Hofelich, 2012). For example, in the Emostroop task, when typical adults view another’s angry facial expression, even when the face is irrelevant to their task, they spontaneously categorize the face as angry and activate the semantic label (“angry”), which in turn facilitates (interferes with) classification of congruent (incongruent) foreground words (Hofelich & Preston, 2012; Preston & Stansfield, 2008). In this model, the association of affective stimuli with semantic labels is an early, spontaneous, and necessary phase of emotion recognition, even when it is not required for the task. Any impairment in this semantic decoding would in turn influence all downstream emotion processing and discrimination of others’ states. Because of this feature, the Emostroop task could be applied to alexithymia to determine if the impairment emanates from this early phase of semantic decoding. If it does not, alexithymia could still stem from disorder-relevant biases introduced later in processing during the conscious recall, rumination, and reflection upon activated states, for example because of an inability to distinguish physiological from affective feelings or to avoid unpleasant or shameful feelings.

Emostroop performance needs to be examined, even though automatic responses have already been studied with color-naming emotional Stroop and affective priming tasks (above). The Emostroop task does not measure distraction from personally-relevant emotions like clinical Stroop tasks, but rather directly measures the extent to which people spontaneously decode nonverbal facial emotion into semantic categories—a process that is known to support basic emotion recognition. In addition, the Emostroop task does not simply measure effects of valence, like affective priming tasks do, because the semantic interference can be linked to particular basic emotions—a feature Vermeulen and colleagues (2006) previously called to be examined. Moreover, the Emostroop task can be modified to examine effects across a range of perceptual speeds, from brief and minimal awareness to full conscious perception.

To systematically investigate the point in processing where semantic encoding and relevant impairments emerge, we modified the Emostroop task to present the faces for different durations of time. To test the hypothesis from priming studies that the impairment in alexithymia is specific to threat or anger, we measured responses to anger, sadness, and neutral affect, assuming that sad faces are not normally considered threatening or threatened per se. Positive emotions were omitted here because they are less often implicated in alexithymia and removing them permitted more trials per remaining condition. Alexithymia was measured with the Toronto Alexithymia Scale (TAS-20) (Bagby et al., 1994), a widely used scale with good reliability, validity, and generalizability (Taylor, Parker, & Bagby, 2003). To control for possible confounding variables, we also measured trait depression, anxiety, and verbal ability (Hendryx, Haviland, & Shaw, 1991).

Overall, we expected to replicate the Emostroop effect when the faces were consciously processed but perhaps not at the shortest presentation intervals, when valence information alone is more likely to be available. We also expected individuals with high alexithymia to have slower responses when categorizing negative emotions, consistent with prior research (Lane et al., 2000; Parker et al., 1993a). However, we did not necessarily expect them to have impaired Emostroop interference effects, or effects specific to anger, since most of the prior literature pointed to problems in alexithymia with managing multiple, subjectively-arousing feeling states online, rather than a basic intellectual problem decoding and labeling threatening emotions.

2. METHOD

2.1. Participants

One hundred and fifteen undergraduate students (65 female, 50 male) from a Midwestern university in the United States participated in the study for course credit. The sample size was selected to match representative prior studies using the TAS-20 (e.g., Prkachin et al., 2009) and the Emostroop Task (e.g., Preston & Stansfield, 2008). The average age was 18.95, with a range between 18 and 26. Most of the participants were freshman students (Freshman 75%, Sophomore 17%, Junior 2%, Senior 6%) and the majority was Caucasian (African-American 4%, Asian 15%, Caucasian 75%, Hispanic 4%, Other 1%). Participants were prescreened from a pool of introductory psychology course students using questions from the 7-item TAS-20 Difficulty Identifying Emotions Subscale (Bagby et al., 1994), in order to recruit comparable numbers of high and low alexithymia scorers. Students with TAS-20 Difficulty Identifying Emotions subscale scores that were at least 1.5 SD above or below the total subject pool’s mean of 14 were recruited to participate, to ensure good coverage of higher and lower distribution participants. High and low alexithymia scorers were grouped for analyses using a median split on their overall TAS-20 scores. Four participants were dropped from the study for not completing the task or having a median reaction time over 4 SD.

2.2. Procedure

Participants were tested in a computer room in groups of one to 12 individuals. After giving their informed consent via a paper form, each subject completed the computerized task followed by online questionnaires. The computerized task consisted of a practice block to familiarize participants with the response keys (sad/angry). Then, in random order, participants completed the Emostroop task, a facial emotion identification task, and a word emotion identification task. Written instructions were provided on a piece of paper and on the computer screen. The online questionnaires included the TAS-20 (Bagby et al., 1994), the Beck Depression Inventory (Beck & Steer, 1987), the Extended Range Vocabulary Test (Educational Testing Service, 1976), and the State-Trait Anxiety Inventory (Spielberger, 1983). Participants were then debriefed and thanked for their participation.

2.3. Tasks

Emostroop task. The Emostroop stimuli were as reported previously (Preston & Stansfield, 2008). The stimuli first appeared as in the classic Emostroop task with emotion words superimposed over pictures of facial emotion created using Adobe Photoshop (Adobe Systems Inc., San Jose, CA). However, this time, each picture of facial emotion was visible for a varying amount of time before being replaced by a scrambled face mask, with the overlaid word to be classified still visible above the mask until the participant responded. The faces were visible before the mask in eight different presentation time bins (16.667 ms, 33.333 ms, 83.333 ms, 100 ms, 150 ms, 200 ms, 300 ms, 400 ms), selected in randomized order without replacement. Twenty-four trials appeared in each bin. The facial stimuli were one of 16 Pictures of Facial Affect (PFA; Ekman & Friesen, 1976).

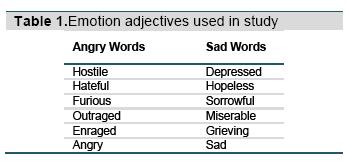

The stimuli consist of four actors (two male) displaying one of three expressions (sad, angry, or neutral). The overlaid words were six prototypical sad or angry adjectives (Table 1). A word was randomly selected for each trial. The neutral category was used to examine facilitation effects and only applied to facial expressions (there were no neutral words). Of the 192 trials, the background facial emotion matched the word one-third of the time (congruent trials), and of the remaining trials, half of the time the word was overlaid on a different facial emotion (incongruent emotional trials), and half of the time on a neutral face (incongruent neutral trials). All four actors were randomly selected within each time bin and combination without repetition. Subjects categorized the emotion of the adjective as sad or angry as quickly and as accurately as possible, using their index fingers on both hands on keyboard buttons that were labeled with stickers “S” (for sad) or “A” (for angry). Accuracy feedback was provided after each trial using a green (correct) or red (incorrect) border.
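The trial counts described above can be checked with a short sketch. It assumes one reading of the design, a full crossing of time bin, word emotion, trial type, and actor, which reproduces the stated totals (192 trials, 24 per bin, one-third congruent). The actor labels, variable names, and function names are hypothetical, and the random word selection and trial ordering are omitted.

```python
from collections import Counter
from itertools import product

TIME_BINS_MS = [16.667, 33.333, 83.333, 100, 150, 200, 300, 400]
WORD_EMOTIONS = ["sad", "angry"]
TRIAL_TYPES = ["congruent", "incongruent_emotional", "incongruent_neutral"]
ACTORS = ["actor_1", "actor_2", "actor_3", "actor_4"]  # hypothetical labels


def face_emotion(word_emotion, trial_type):
    """Return the background face emotion implied by the trial type."""
    if trial_type == "congruent":
        return word_emotion
    if trial_type == "incongruent_emotional":
        return "angry" if word_emotion == "sad" else "sad"
    return "neutral"


def build_trials():
    """Fully cross bins, word emotions, trial types, and actors (assumed design)."""
    return [
        {
            "bin_ms": bin_ms,
            "word_emotion": word,
            "trial_type": trial_type,
            "face_emotion": face_emotion(word, trial_type),
            "actor": actor,
        }
        for bin_ms, word, trial_type, actor in product(
            TIME_BINS_MS, WORD_EMOTIONS, TRIAL_TYPES, ACTORS
        )
    ]


design_trials = build_trials()
trials_per_type = Counter(t["trial_type"] for t in design_trials)
trials_per_bin = Counter(t["bin_ms"] for t in design_trials)
```

Under this reading, each time bin contains every word-by-face combination once per actor, which is why the three trial types are equally frequent within a bin.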

For all analyses, error trials and trials with reaction times (RT) over 2.5 standard deviations greater than each participant’s mean RT were first removed before calculating their median RT for congruent, incongruent emotional, and incongruent neutral trials for each of the eight presentation time bins. The basic Emostroop effect was calculated by subtracting median reaction times for the congruent trials from the median reaction time for the incongruent emotional trials within each subject.
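The trimming and scoring steps can be sketched for a single participant as follows. This is an illustration, not the authors' analysis code: the field names and demo values are made up, and it assumes the mean and SD used for trimming are computed over the participant's correct trials, which the text does not specify.

```python
from statistics import mean, median, stdev


def trim_trials(trials, sd_cutoff=2.5):
    """Drop error trials, then trials slower than mean + sd_cutoff * SD."""
    correct = [t for t in trials if t["correct"]]
    rts = [t["rt_ms"] for t in correct]
    upper = mean(rts) + sd_cutoff * stdev(rts)  # assumption: stats over correct trials
    return [t for t in correct if t["rt_ms"] <= upper]


def emostroop_effect(kept, bin_ms):
    """Median incongruent-emotional RT minus median congruent RT for one time bin."""
    def med(trial_type):
        return median(
            t["rt_ms"]
            for t in kept
            if t["bin_ms"] == bin_ms and t["trial_type"] == trial_type
        )
    return med("incongruent_emotional") - med("congruent")


def make_trial(rt_ms, trial_type, correct=True, bin_ms=100):
    """Build one hypothetical trial record."""
    return {"rt_ms": rt_ms, "trial_type": trial_type, "correct": correct, "bin_ms": bin_ms}


# Made-up data for one participant in the 100 ms bin.
demo_trials = (
    [make_trial(700 + 2 * i, "congruent") for i in range(10)]
    + [make_trial(740 + 2 * i, "incongruent_emotional") for i in range(10)]
    + [make_trial(5000, "congruent")]  # very slow response, trimmed as an outlier
    + [make_trial(300, "incongruent_emotional", correct=False)]  # error trial, dropped
)

demo_kept = trim_trials(demo_trials)
demo_effect = emostroop_effect(demo_kept, bin_ms=100)
```

Using medians within each condition limits the influence of any remaining slow responses, which is presumably why the trimming is applied before the medians are taken.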

Face and word tasks. For the separate word-only and face-only blocks, the same angry and sad faces or words were displayed. No neutral faces were included in the face-only block. Participants responded to a total of 16 trials for the faces-only block (each picture was viewed twice) and 24 trials for the words-only block (each word was viewed twice). The words and faces were displayed in a randomized order. Again, participants categorized the emotion using their index fingers on both hands by pressing buttons S or A marked on the keyboard, followed by accuracy feedback.

2.4. Measures

TAS-20. The Toronto Alexithymia Scale (TAS-20; Bagby et al., 1994) is one of the most commonly used self-report measures of alexithymia. The TAS-20 includes three subscales: (1) Difficulty Identifying Feelings (e.g., “when I am upset, I don’t know if I am sad, frightened, or angry”), (2) Difficulty Describing Feelings (e.g., “I find it hard to describe how I feel about people”), and (3) Externally Oriented Thinking (e.g., “I prefer talking to people about their daily activities rather than their feelings”). The scale comprises 20 items. Items are rated using a 5-point Likert scale whereby 1 = strongly disagree and 5 = strongly agree. The total alexithymia score is the sum of responses to all 20 items. In our study, the average TAS-20 score for participants was 47.64 (SD = 12.04) and the median was 47, which is below the level considered alexithymic (61 or above is alexithymic, 52 to 60 is possibly alexithymic, 51 or below is not). Due to the low number of participants scoring 61 and above (n = 19), a median split was used during analyses (29 participants scored in the middle range). Of the 56 participants that fell into the upper half of the distribution (the high alexithymia group), 19 had scores at or above the TAS-20 alexithymia cut-off score of 61. All 59 participants that fell into the lower half of the distribution (the low alexithymia group) had TAS-20 scores of 51 or below. There was no significant difference in the TAS-20 scores of male (M = 48.10) and female (M = 47.29) participants (t(113) = .36, p = .723), so gender was not included in subsequent analyses.
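The scoring rule and cut-offs described above can be written out as a small sketch. The function names are hypothetical. The code assumes that any reverse-keyed items have already been recoded before summing, and that participants scoring exactly at the median fall into the low group; the text does not state how ties were handled.

```python
from statistics import median


def tas20_total(responses):
    """Sum 20 item responses, each rated 1 (strongly disagree) to 5 (strongly agree)."""
    if len(responses) != 20:
        raise ValueError("TAS-20 requires exactly 20 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    return sum(responses)


def tas20_category(total):
    """Apply the cut-offs quoted in the text: >= 61, 52 to 60, <= 51."""
    if total >= 61:
        return "alexithymic"
    if total >= 52:
        return "possibly alexithymic"
    return "not alexithymic"


def median_split(totals):
    """Label each score 'high' if above the sample median, else 'low' (tie rule assumed)."""
    cut = median(totals)
    return ["high" if score > cut else "low" for score in totals]
```

For example, a participant who answers 3 to every item has a total of 60 and falls into the "possibly alexithymic" band, yet would be placed in the high group by a median split around 47.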

STAI. To measure anxiety, we used the State-Trait Anxiety Inventory (STAI) (Spielberger, 1983). Respondents used a four-point Likert scale ranging from not at all (1) to very much so (4). This measure includes the same 20 items that are assessed for both the degree to which participants experience the emotion typically (trait anxiety) and are currently experiencing that emotion (state anxiety).

BDI-II. To measure depression, we used a 21-item Beck Depression Inventory (BDI-II) (Beck & Steer, 1987). Items are rated on a four-point Likert scale ranging from (0) to severe (3). One question regarding suicide was eliminated due to human subjects concerns.

ERVT. The Extended Range Vocabulary Test (ERVT, Version 3; Educational Testing Service, 1976) was administered to measure verbal ability or verbal IQ. This 48-item measure tests participants’ knowledge of word meaning. For each item, participants were asked to select one of five words with the same or nearly the same meaning as the given word.

3. RESULTS

3.1. Ability to categorize the basic emotion of faces and words alone

There were no differences between high and low alexithymia groups on the RT to classify either emotional words (F(1, 113) = 3.37, p = .069) or emotional faces alone (F(1, 113) = .10, p = .749), or for specific emotions (angry and sad) within the words-only block (F(1, 113) < 2.4, p > .1) or the faces-only block (F(1, 113) < .2, p > .6). Therefore, there was no reason to assume that any impairment in the Emostroop task emanated from trouble understanding the stimuli or classifying basic facial or word emotions during unspeeded, conscious perception.

3.2. Replicating the Emostroop effect across participants

Overall, 3.2% of the trials were removed due to error and 2.9% were eliminated because their RTs were more than 2.5 SD above or below the participant’s mean RT. Error rates did not differ significantly by time bin (F(7, 22840) = .94, p = .473) or by alexithymia status (F(1, 113) = 1.24, p = .268). Overall, reaction times (RT) were significantly slower for incongruent than congruent trials, which replicates the basic Emostroop effect, F(1, 114) = 14.95, p < .001. A repeated-measures ANOVA using Greenhouse-Geisser correction further indicated differences in median RT across the three trial types (congruent, incongruent emotional, incongruent neutral), F(1.57, 178.49) = 11.22, p < .001. Neutral trials (M = 729.94, SD = 158.25) were nonsignificantly intermediate between the shorter congruent (M = 723.40, SD = 151.77) and longer incongruent (M = 737.97, SD = 174.67) trials, using post hoc tests with Bonferroni correction. An additional ANOVA using Greenhouse-Geisser correction found no differences in performance based on the specific emotion of the word, F(1, 114) = 2.09, p = .151, or face, F(2, 222.01) = .38, p = .681; however, word and face emotion interacted, which again simply reflects the replicated Emostroop effect, F(1.88, 214.77) = 5.04, p = .008.

3.3. The duration to decode semantic facial emotions across participants

Every time bin showed the Emostroop effect in the predicted direction (incongruent RTs longer than congruent), but the observed effect sizes differed non-monotonically across time bins. From the shortest to longest time bins, effect sizes were 16.667ms d = 0.099; 33.333ms d = 0.018; 83.333ms d = 0.260; 100ms d = 0.088; 150ms d = 0.186; 200ms d = 0.080; 300ms d = 0.158; 400ms d = 0.112. These data are consistent with the hypothesis that the Emostroop effect is present at all time bins. The 95% confidence intervals for all effect means overlapped across all time bins between the highest lower bound (10ms in the 83.333ms time bin) and the lowest upper bound (32ms in the 200ms time bin). While both groups had a peak Emostroop effect between 83.333 and 100ms, and the smallest observed effect size (d = 0.018) occurred at the second shortest (33.333ms) time bin, even the very shortest time bin (16.667ms) generated an effect (d = 0.099) comparable in size to several of the longer time bins; this suggests that the Emostroop effect does not require significant processing time. However, a test of the Emostroop effect performed separately within each time bin was not significant for the shortest bins (16.667ms and 33.333ms), which is not surprising given that we had 80% power to detect a d of 0.264, roughly double the mean observed effect size across all time bins (d = 0.125).
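The power argument can be illustrated with a rough calculation. The sketch below is not the authors' procedure: it uses a normal approximation for a two-sided, within-subject test at alpha = .05, and it assumes n = 115 participants (inferred from the reported degrees of freedom). Under those assumptions the smallest effect detectable with 80% power comes out close to, though slightly below, the reported 0.264. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean


def min_detectable_d(n, alpha=0.05, power=0.80):
    """Smallest standardized effect detectable in a one-sample or paired design
    (normal approximation, two-sided test)."""
    z = NormalDist()
    return (z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) / sqrt(n)


# Effect sizes reported for the eight time bins, shortest to longest.
observed_d = [0.099, 0.018, 0.260, 0.088, 0.186, 0.080, 0.158, 0.112]

mean_d = mean(observed_d)               # mean observed effect size across bins
detectable_d = min_detectable_d(n=115)  # n = 115 is an assumption from the dfs
ratio = detectable_d / mean_d           # how far the detectable effect exceeds the mean
```

An exact calculation based on the noncentral t distribution would give a slightly larger threshold than the normal approximation, which may account for the small difference from the reported value.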

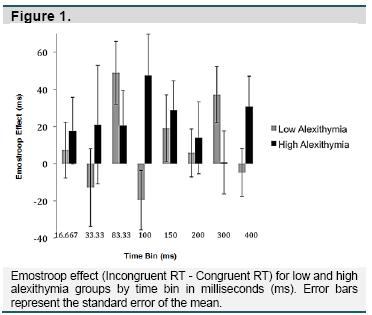

3.4. Effects of High or Low Alexithymia on the Emostroop Effect

Repeated-measures ANOVA using Greenhouse-Geisser correction was used to compare the Emostroop effect between time bins and between high and low alexithymia groups. Overall, the high alexithymia group performed the task more slowly than the low alexithymia group (F(1, 20851) = 169.533, p < .001). However, the Emostroop effect did not differ by alexithymia group (F(1, 113) = .115, p = .736). In addition, there was no significant interaction between time bin and alexithymia group in predicting the Emostroop effect (F(5.23, 590.76) = 1.65, p = .141). Within the low alexithymia group, the Emostroop effect tended to be stronger in some time bins (F(4.79, 277.92) = 2.08, p = .071), with greater effects in intermediate (83.33ms, B = 48.89, p = .005) and longer (300ms, B = 37.14, p = .017) duration bins. This pattern was similar in the high alexithymia group, with similar peaks in the intermediate (100ms, B = 47.42, p = .038; 150ms, B = 28.80, p = .074) and longer (400ms, B = 30.69, p = .067) time bins; however, this difference was not significant due to the amount of noise across high alexithymia participants (F(4.58, 252.06) = .43, p = .809) (Figure 1).

Including trait covariates. The high alexithymia group had significantly lower verbal IQ scores on the ERVT (M = 10.08, SD = 8.89) than the low alexithymia group (M = 13.91, SD = 8.21), t = 2.40, p = .018. The high alexithymia group also had significantly higher BDI-II scores (M = 18.68, SD = 11.28) than the low alexithymia group (M = 5.00, SD = 4.87), t = 8.52, p < .001, and higher STAI scores (M = 96.82, SD = 18.20) compared to the low alexithymia group (M = 66.29, SD = 15.78), t = 9.59, p < .001. After adding ERVT as a covariate, the interaction between time bin and alexithymia group on the Emostroop effect was marginally significant (F(5.19, 581.75) = 1.99, p = .075), because lower verbal IQ scores within the high alexithymia group conveyed (unexpectedly) greater Emostroop effects, especially at intermediate and longer time bins (overall r(54) = -.29, p = .033; 83.33ms, 300ms, & 400ms bins r(54) < -.22, p < .106). This indicates that even when verbal IQ is compromised, individuals with high alexithymia are not impaired at decoding irrelevant background faces; indeed, they appear to be, if anything, encoding them more strongly. BDI-II scores and STAI scores produced similar but nonsignificant effects (BDI-II: F(5.13, 569.47) = 1.71, p = .103; STAI: F(7, 571.74) = 1.38, p = .228).

Specific Emotions. While differences in RT between the high and low alexithymia groups are not apparent when contrasting congruent and incongruent trials, deficits may appear for specific emotions. Examining the effect of different face prime and target word emotion combinations on RT with independent-samples t-tests, the high alexithymia group was significantly slower when responding to sad words that followed sad or angry facial emotion primes. The high alexithymia group responded more slowly than the low alexithymia group on congruent trials with sad primes followed by sad words (high M = 745.84, low M = 698.28; t = 2.11, p = .037) and on incongruent emotional trials with angry primes followed by sad words (high M = 766.79, low M = 724.64; t = 2.39, p = .019).

4. DISCUSSION

Alexithymia has been linked to deficits in the cognitive processing and regulation of emotion (Parker et al., 1993a), but the point in processing at which the impairment originates, and which emotions are most affected, remain unclear. The current study administered the Emostroop task (Hofelich & Preston, 2012; Preston & Stansfield, 2008), with varying durations of exposure to irrelevant sad, angry, and neutral facial expressions. The goal was to determine how long it takes for people to spontaneously process the semantic category of a specific emotional expression, as well as to determine whether any differences in Emostroop performance correlated with trait alexithymia. We also added a basic word and face classification task using the same stimuli to ensure that any difficulties on the Emostroop task did not stem from more fundamental problems identifying or recognizing the stimuli.

We replicated the basic Emostroop effect across participants, with longer response times to trials where the facial emotion was incongruent compared to congruent with the emotion of the word to be categorized. Our facial emotion pictures were shown in time bins ranging from subliminal (~30ms) to supraliminal (> 200ms) (Tsuchiya & Adolphs, 2007). Despite this range, we did not observe significant differences in the Emostroop effect across time bins. However, the mean effect for both high and low alexithymia groups was larger during intermediate (83.33 or 100ms) and longer time bins (300 or 400ms), compared to the other intervals. It is possible that semantic facial decoding peaks in waves, such as when information first arrives in the visual and ventral temporal cortices and again after full processing has occurred along the entire cortex (Pessoa & Adolphs, 2010). From the graph in Figure 1, these peaks appear to occur slightly earlier for low alexithymia individuals, but these omnibus group effects and interactions are not significant, with groups only differing statistically within those bins.

Individuals with high alexithymia in our sample were not impaired at classifying the non-verbal basic emotion of sad or angry facial expressions or the more verbal adjectives when the stimuli were presented alone and with unlimited time. This suggests that alexithymia does not result from a fundamental problem detecting and categorizing facial or verbal emotion, which is inconsistent with prior research suggesting that alexithymic individuals are impaired on verbal and non-verbal recognition of external emotions per se (e.g., Lane et al., 1996; Lane et al., 2000; Parker et al., 1993a). However, our face-only and word-only blocks may have been too easy, and our range of alexithymia scores may have been too small, to detect differences. Parker and colleagues (2005) described alexithymic individuals’ facial emotion impairments as fairly subtle, and Mueller and colleagues (2006) posited that their results could be attributed to their non-college patient sample, which had more pronounced emotional problems.

Further suggesting spared processing, those with high alexithymia were also not impaired in the Emostroop effect across time bins. It is possible that their slower performance overall reflects a real underlying impairment in processing, which they compensated for by slowing performance to sufficiently improve accuracy. However, this is unlikely given all of the other patterns of data in our study. The less verbal individuals with high alexithymia actually had enhanced Emostroop effects at typical durations, which suggests that they can and do attend to facial affect and decode it at a semantic level. If anything, our results could indicate that these individuals have a tendency to attend too much to negative expressions, at least at first.

The possibility of alexithymics attending more to sad or angry information early on is consistent with some contradictory findings reviewed in the introduction and with their slowed responses on trials with sad words paired with sad or angry expressions in our task. Trait or mood-induced attention to negative affect is known in other studies to delay responses, as in clinical Emotional Stroop tasks (McKenna & Sharma, 1995). Sad words are also typically responded to more slowly than angry or neutral words, likely due to embodiment effects (Preston & Stansfield, 2008). Perhaps our individuals with high alexithymia attended more to the sad affect, which naturally captured attention and slowed their task responses; this would have further augmented their overall slowness on the task, producing the significant interaction for those sad trials only. Importantly, though, the lack of alexithymia effects specific to anger cues, and the significant slowing only when classifying sad words, undermine interpretations of alexithymia as an early processing deficit specific to angry or threatening stimuli (Mueller et al., 2006; Vermeulen et al., 2006), though we did not test fearful expressions. It is possible that threat-specific deficits only occur when sufficient arousal is generated by the stimuli, which may be more common for anger cues or tasks that present the stimuli for longer.

Where null effects are involved in clinical populations, the sample size and severity of the population are always possible issues. We did recruit participants with high and low TAS-20 scores, but still only found 19 participants with scores extreme enough to be considered clearly alexithymic. We dealt with this by using median-split analyses, which are commonly used in studies on alexithymia but still could have limited our findings. However, the fact that we were able to replicate known links between alexithymia and lower verbal IQ, higher depression, and higher anxiety indicates that our participants had at least sufficient levels of alexithymia to produce known, typical relationships. These relationships still could not predict Emostroop performance (likely because performance on the task was preserved anyway).

One could try to increase the sample size substantially to try to find group differences on our task, particularly for the potentially shifted time bin effects between groups. However, a sample of N = 505 is needed for 80% power for the observed mean effect size within time bins—a sample that seems unwarranted given that we have no other indication that alexithymia is associated with impaired Emostroop performance. Despite our view that these null effects are real, future research could further confirm spared performance by recruiting more extreme candidates and adding clinical interviews and ancillary measurements of alexithymia. Measurement issues are particularly germane to alexithymia because, as Lane and colleagues pointed out, the trait is characterized by impairments in monitoring and identifying internal emotional states, particularly through verbal responses (Lane, Quinlan, Schwartz, Walker, & Zeitlin, 1990). Thus, perhaps more reliable relationships could be found by adding the Levels of Emotional Awareness Scale (Lane et al., 1990), the Toronto Structured Interview for Alexithymia (Bagby, Taylor, Parker, & Dickens, 2006), and third-party ratings from family (Mueller et al., 2006).
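The logic behind a sample-size estimate of this kind can be sketched with the standard normal-approximation formula for comparing two group means. This is not the authors' procedure: the text does not report the effect size or the method behind N = 505, so the function name `n_per_group` and the example effect sizes are hypothetical, and the result will differ somewhat from an exact t-based or repeated-measures calculation.

```python
import math
from statistics import NormalDist

def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-sided,
    two-sample comparison of means at standardized effect size d.

    ASSUMPTION: normal approximation with equal group sizes; this is an
    illustration, not the calculation used in the original study.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for two-sided alpha
    z_beta = z.inv_cdf(power)           # quantile matching the target power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)

# Hypothetical effect sizes, chosen only to show how quickly the required
# sample grows as the effect shrinks.
for d in (0.8, 0.5, 0.25):
    per_group = n_per_group(d)
    print(f"d = {d}: {per_group} per group, {2 * per_group} in total")
```

Because the required sample grows with the inverse square of the effect size, halving the effect roughly quadruples the number of participants needed, which is why small within-bin effects call for samples in the hundreds.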

In summary, our research does not suggest that alexithymia stems from early processing impairments in decoding others’ sad and angry facial expressions or sad and angry emotional words. We also do not find support for the idea that the disorder stems from early processing problems with specific threat-relevant cues. Given the large parallel literature on clinical disorders associated with alexithymia (e.g., irritable bowel syndrome, fibromyalgia, panic disorder), a parsimonious possibility is that they have difficulty interpreting internal feeling states as affective, as suggested previously by clinicians and neuroscientists (e.g., Lane & Schwartz, 1987; Lumley, Tomakowsky & Torosian, 1997; MacLean, 1949). Interoceptive confusion could both increase somatic complaints and make it difficult to recognize one’s own and others’ emotional states, especially for feelings that require significant access to internal cues, such as the arousal from anger and the gastric contractions from disgust. Such impairments would be harder to detect on tasks that require simple access to conceptual or emotion information, but would emerge on tasks that require access to felt, internal cues (e.g., making subtle affective discriminations, deciding whether one’s own internal state results from experienced anger/fear or a non-affective physiological dysfunction, deciding whether one feels disgust or anger). This hypothesis is supported by the fact that individuals with alexithymia do have neural differences in medial brain systems (ACC, MPFC, insula) that are known to participate in the conscious, subjective perception and manipulation of emotional feeling states, even if they are not required to initially perceive or generate emotions (Damasio, 1999; Lane & Schwartz, 1987; MacLean, 1949). 
As further evidence, individuals with alexithymia were impaired at the Iowa Gambling Task, a task designed to measure people’s ability to use interoceptive, affective cues to guide advantageous decision making—a deficit that was accompanied by reduced blood flow in the medial prefrontal cortex (Kano, Ito, & Fukudo, 2011).

In the future, research should more carefully contrast performance on tasks that only require abstract processing versus explicit reference to internal feeling states, paying particular attention to states that do or do not hinge upon subtle differences in these feelings. Given the high utilization of the health care system by individuals with diffuse physical complaints that are difficult to diagnose, better research into these issues would help individuals with alexithymia as well as the doctors and systems designed to treat them.

5. ACKNOWLEDGMENTS

The authors thank Lauren Ripley for help collecting data.

6. REFERENCES

Bagby, R. M., Parker, J. D. A., & Taylor, G. J. (1994). The twenty-item Toronto Alexithymia Scale-I. Item selection and cross-validation of the factor structure. Journal of Psychosomatic Research, 38, 23-32.

Bagby, R. M., Taylor, G. J., Parker, J. D. A., & Dickens, S. E. (2006). The development of the Toronto Structured Interview for alexithymia: Item selection, factor structure, reliability and concurrent validity. Psychotherapy and Psychosomatics, 75, 25-39.

Beck, A. T., & Steer, R. A. (1987). Beck Depression Inventory Manual. San Antonio, TX: The Psychological Corporation.

Bucci, W. (1997). Psychoanalysis and cognitive science: a multiple code theory. New York: Guilford.

Damasio, A. R. (1999). The feeling of what happens: Body and emotion in the making of consciousness. New York, NY: Harcourt Brace.

Educational Testing Service. (1976). Kit of factor-referenced tests. Princeton, NJ: Author.

Ekman, P., & Friesen, W. V. (1976). Pictures of facial affect [Slides]. Palo Alto, CA: Consulting Psychologists Press.

Gil, F. P., Ridout, N., Kessler, H., Neuffer, M., Schoechlin, C., Traue, H. C., & Nickel, M. (2009). Facial emotion recognition and alexithymia in adults with somatoform disorders. Depression and Anxiety, 26, E26-E33.

Hendryx, M. S., Haviland, M. G., & Shaw, D. G. (1991). Dimensions of alexithymia and their relationships to anxiety and depression. Journal of Personality Assessment, 56, 227-237.

Hofelich, A. J., & Preston, S. D. (2012). The meaning in empathy: Distinguishing conceptual encoding from facial mimicry, trait empathy, and attention to emotion. Cognition & Emotion, 26, 119-128.

Holt, C. S. (1995). Evidence for a verbal deficit in alexithymia. Neurosciences, 7, 320-324.

Kano, M., Ito, M., & Fukudo, S. (2011). Neural substrates of decision making as measured with the Iowa gambling task in men with alexithymia. Psychosomatic Medicine, 73(7), 588-597.

Kano, M., Hamaguchi, T., Itoh, M., Yanai, K., & Fukudo, S. (2007). Correlation between alexithymia and hypersensitivity to visceral stimulation in human. Pain, 132, 252-263.

Kano, M., Fukudo, S., Gyoba, J., Kamachi, M., Tagawa, M., Mochizuki, H., ... Yanai, K. (2003). Specific brain processing of facial expressions in people with alexithymia: an H₂¹⁵O-PET study. Brain, 126, 1474-1484.

Kokkonen, P., Veijola, J., Karvonen, J. T., Laksy, K., Jokelainen, J., Jarvelin, M., & Joukamaa, M. (2003). Ability to speak at the age of 1 year and alexithymia 30 years later. Journal of Psychosomatic Research, 54, 491-495.

Kugel, H., Eichmann, M., Dannlowski, U., Ohrmann, P., Bauer, J., Arolt, V., ... Suslow, T. (2008). Alexithymic features and automatic amygdala reactivity to facial emotion. Neuroscience Letters, 435, 40-44.

Lane, R. D., & Schwartz, G. E. (1987). Levels of emotional awareness: A cognitive-developmental theory and its application to psychopathology. American Journal of Psychiatry, 144, 133-143.

Lane, R., Sechrest, L., Riedel, R., Shapiro, D. E., & Kaszniak, A. W. (2000). Pervasive emotion recognition deficit common to alexithymia and the repressive coping style. Psychosomatic Medicine, 62, 492-501.

Lane, R. D., Quinlan, D. M., Schwartz, G. E., Walker, P. A., & Zeitlin, S. B. (1990). The levels of emotional awareness scale: A cognitive-developmental measure of emotion. Journal of Personality Assessment, 55, 124-134.

Lane, R. D., Sechrest, L., Reidel, R., Weldon, V., Kaszniak, A., & Schwartz, G. E. (1996). Impaired verbal and nonverbal emotion recognition in alexithymia. Psychosomatic Medicine, 58, 203-210.

Leweke, F., Stark, R., Milch, W., Kurth, R., Schienle, A., Kirsch, P., ... Vaitl, D. (2004). Patterns of neuronal activity related to emotional stimulation in alexithymia. Psychotherapie Psychosomatik Medizinische Psychologie, 54, 437-444.

Luminet, O., Vermeulen, N., Demaret, C., Taylor, G. J., & Bagby, R. M. (2006). Alexithymia and levels of processing: Evidence for an overall deficit in remembering emotion words. Journal of Research in Personality, 40, 713-733.

Lumley, M. A., Tomakowsky, J., & Torosian, T. (1997). The Relationship of alexithymia to subjective and biomedical measures of disease. Psychosomatics, 38, 497-502. doi: 10.1016/S0033-3182(97)71427-0.

Lundh, L.-G. & Simonsson-Sarnecki, M. (2002). Alexithymia and cognitive bias for emotional information. Personality and Individual Differences, 32, 1063-1075.

MacLean, P. D. (1949). Psychosomatic disease and the visceral brain: Recent developments bearing on the Papez theory of emotion. Psychosomatic Medicine, 11, 338-353.

McKenna, F., & Sharma, D. (1995). Intrusive cognitions: An investigation of the emotional Stroop task. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21(6), 1595-1607.

Martin, J. B., & Pihl, R. O. (1985). The stress-alexithymia hypothesis: Theoretical and empirical considerations. Psychotherapy and Psychosomatics, 43(4), 169-176.

Martínez-Sánchez, F. & Serrano, J. M. (1997). Influencia del nivel de alexitimia en el procesamiento de estímulos emocionales en una tarea Stroop. Psicothema, 9(3), 519-527.

Montebarocci, O., Surcinelli, P., Rossi, N., & Baldaro, B. (2011). Alexithymia, verbal ability and emotion recognition. Psychiatric Quarterly, 82, 245-252.

Mueller, J., Alpers, G. W., & Reim, N. (2006). Dissociation of rated emotional valence and Stroop interference in observer-rated alexithymia. Journal of Psychosomatic Research, 61, 261-269.

Nemiah, J., Freyberger, H., & Sifneos, P. E. (1976). Alexithymia: a view of the psychosomatic process. In O. W. Hill (Ed.), Modern trends in psychosomatic medicine (pp. 430-439). London: Butterworths.

Parker, P. D., Prkachin, K. M., & Prkachin, G. C. (2005). Processing of facial expressions of negative emotion in alexithymia: The influence of temporal constraint. Journal of Personality, 73, 1087-1107.

Parker, J. D., Taylor, G. J., & Bagby, R. M. (1993a). Alexithymia and the recognition of facial expressions of emotion. Psychotherapy and Psychosomatics, 59, 197-202.

Parker, J. D., Taylor, G. J., & Bagby, R. M. (1993b). Alexithymia and the processing of emotional stimuli: An experimental study. New Trends in Experimental and Clinical Psychiatry, 9, 9-13.

Parker, J. D., Taylor, G. J., & Bagby, R. M. (2001). The relationship between emotional intelligence and alexithymia. Psychotherapy and Psychosomatics, 230, 107-115.

Pessoa, L., & Adolphs, R. (2010). Emotion processing and the amygdala: From a "low road" to "many roads" of evaluating biological significance. Nature Reviews Neuroscience, 11, 773-783.

Preston, S. D., & Hofelich, A. J. (2012). The many faces of empathy: Parsing empathic phenomena through a proximate dynamic-systems view of representing the other in the self. Emotion Review, 4, 24-33.

Preston, S. D., & Stansfield, R. B. (2008). I know how you feel: Task-irrelevant facial expressions are spontaneously processed at a semantic level. Cognitive, Affective, & Behavioral Neuroscience, 8, 54-64.

Prkachin, G. C., Casey, C., & Prkachin, K. M. (2009). Alexithymia and perception of facial expressions of emotion. Personality and Individual Differences, 46, 412-417.

Reker, M., Ohrmann, P., Rauch, A. V., Kugel, H., Bauer, J., Dannlowski, U., ... Suslow, T. (2010). Individual differences in alexithymia and brain response to masked emotion faces. Cortex, 46, 658-667.

Spielberger, C. D. (1983). Manual for the State-Trait Anxiety Inventory. Palo Alto, CA: Consulting Psychologists Press.

Suslow, T. (1998). Alexithymia and automatic affective processing. European Journal of Personality, 12, 433-443.

Suslow, T., & Junghanns, K. (2002). Impairments of emotion situation priming in alexithymia. Personality and Individual Differences, 32, 541-550.

Suslow, T., Donges, U. S., Kersting, A., & Arolt, V. (2001). 20-Item Toronto Alexithymia Scale: Do difficulties describing feelings assess proneness to shame instead of difficulties symbolizing emotions? Scandinavian Journal of Psychology, 41, 329-334.

Suslow, T., Junghanns, K., Donges, U. S., & Arolt, V. (2001). Alexithymia and automatic processing of verbal and facial affect stimuli. Current Psychology of Cognition, 20, 297-324.

Taylor, G. J. (1984). Alexithymia: Concept, measurement, and implications for treatment. American Journal of Psychiatry, 141, 725-732.

Taylor, G. J. (2000). Recent developments in alexithymia theory and research. Canadian Journal of Psychiatry, 45, 134-142.

Taylor, G. J., Bagby, R. M., & Parker, J. D. A. (1997). Disorders of Affect Regulation: Alexithymia in Medical and Psychiatric Illness. Cambridge: Cambridge University Press.

Taylor, G. J., Parker, J. D. A., & Bagby, R. M. (2003). The twenty-item Toronto Alexithymia Scale-IV. Cross-cultural validity and reliability. Journal of Psychosomatic Research, 55, 277-283.

Tsuchiya, N., & Adolphs, R. (2007). Emotion and consciousness. Trends in Cognitive Sciences, 11, 158-167.

Vermeulen, N., Luminet, O., & Corneille, O. (2006). Alexithymia and the automatic processing of affective information: Evidence from the affective priming paradigm. Cognition and Emotion, 20, 64-91.