1. Introduction

Identification of emotional facial expressions (EFEs) is a key element of non-verbal interpersonal communication between humans. EFEs contain socially relevant information as well as cues to the emotional state of the expresser, over which the expresser has little conscious control (Troisi, 1999). Fruitful social interactions require accurate identification of EFEs. This not only creates rapport, but also helps the observer formulate and modulate their own behavior in response to the expresser (Isaacowitz et al., 2007).

Although EFEs are known to contain visual cues of a number of different emotional categories and can be ambiguous, some of them have shown ‘universality’, being recognizable across geographical and ethnic boundaries with little to no cultural modification (Elfenbein et al., 2002; Matsumoto et al., 2008). Six ‘universal’ EFEs, namely anger, sadness, fear, surprise, happiness, and disgust, have been identified to date (Ekman et al., 1971). Although these EFEs are recognizable across cultures, the accuracy of their identification varies across individuals, with the intensity of the EFE and the characteristics of the observers playing the most significant roles (Hess et al., 1997). Furthermore, psychiatric ailments such as mood and personality disorders also affect the accuracy and interpretation of EFEs, as reported in earlier research (Heuer et al., 2010).

Identification of EFEs has been shown to depend on anatomical changes in specific regions of the face (Kestenbaum, 1992). Various studies have employed different occlusion methods to establish that the eye region and the mouth region are the two most important areas of the face for the identification of EFEs (Noyes et al., 2021; Wegrzyn et al., 2017). Research utilizing eye-tracking technology has also examined the extent to which observers focus on these facial regions while identifying emotions from faces (Calvo et al., 2018; Eisenbarth et al., 2011). Although the extent of the observers’ focus on the eyes and the mouth varies across individual emotional facial expressions, these two regions remain the most focused-on parts of emotional faces (Calvo et al., 2018; Wegrzyn et al., 2017). Studies using ‘bubbles’ of emotional facial expression stimuli have also found that fixation of the observer’s gaze on the eyes and the mouth of the expressor was almost inextricably linked with the identification of the emotion itself (Neumann et al., 2006). However, a question that remains largely unexplored is whether the other regions of the face (ears, cheeks, forehead) provide additional information to the observer or serve only as distractions during the identification of EFEs, with only one study having been conducted, among a small group of Caucasian adults (Kestenbaum, 1992).

Although Indians form 17.7% of the world’s population, research on the identification of EFEs among the Indian population is sparse (Bandyopadhyay et al., 2021; Mishra et al., 2018). Furthermore, no study comparing the accuracy of identification of EFEs from full and partial faces has been conducted in this population. Thus, the current study was conducted with the aim of comparing the accuracy of recognition and identification of universal EFEs under full-face and partial-face conditions (the latter showing only the eye and mouth regions), using static images showing the six universal facial expressions at 100% intensity.

The hypothesis of the present research was that when presented with images of emotional facial expressions at 100% intensity, Indian young adult observers would be able to identify the EFE with a higher accuracy from partial face images (showing only the eyes and mouth region) as compared to complete face images.

2. Materials and Methods

An analytical study with a cross-sectional design was conducted in a tertiary care hospital cum teaching institute of Eastern India from August to October of 2019. Relevant ethical permissions were obtained from the Institutional Ethics Committee of the study Institution.

2.1 Study Population and Sampling

The study population consisted of young Indian adults who volunteered for the study.

Taking the study conducted by Kestenbaum (1992) among Caucasian adults as a reference, the sample size was calculated from the difference between the mean scores of participants identifying the least accurately recognized negative EFE, fear, from complete and partial faces. The sample size was calculated using the formula:

Where Zα is the critical value of the Normal distribution at α (e.g., for a confidence level of 99%, α is .01 and the critical value is 2.58), σ₁ and σ₂ are the standard deviations of the two groups, d is the estimated difference between the means of the two groups, and n is the required sample size for each group. Considering σ₁ as .41, σ₂ as .5, and d as .2, the final required sample size for each group was 70 participants.
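The symbols and values given above are consistent with the standard sample size expression for comparing two independent means. As a worked sketch, assuming that this is the form that was used (the expression is inferred from the definitions, and the critical value of 2.58 is the one quoted in the text):

```latex
n = \frac{Z_{\alpha}^{2}\,\left(\sigma_{1}^{2} + \sigma_{2}^{2}\right)}{d^{2}}
  = \frac{(2.58)^{2}\,\left(0.41^{2} + 0.50^{2}\right)}{0.20^{2}}
  = \frac{6.6564 \times 0.4181}{0.04}
  \approx 69.6 \;\Rightarrow\; n = 70 \text{ per group}
```

Rounding up to the next whole participant gives the 70 per group reported above.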

The 140 study participants were recruited from adults aged 18 to 26 years who responded to fliers and social media advertisements for the study. Everyone who met the age criterion and responded to the call was considered for participation. The participants were mostly students at the study institution or from near the university campus, and all volunteered for the study. They were screened for any neuropsychiatric illnesses using the self-administered Primary Care Evaluation of Mental Disorders Patient Health Questionnaire (PRIME-MD PHQ), which has been used in and validated for the Indian population (Avasthi et al., 2008; Spitzer et al., 1999), as well as by a registered psychiatrist working in the Department of Neuropsychiatry of the study institution. Of the 258 total respondents, 12 were excluded for either not meeting the age criterion or being screened out by the psychiatric evaluation. From the remaining 246 respondents, 70 volunteers were randomly assigned to the full-face group using a random number table. Once a participant was assigned to this group, another participant matched for age (±2 years), sex, residence, and socioeconomic status was recruited for the other group. None of the participants received any remuneration for taking part in the present study.

2.2 Study Tools

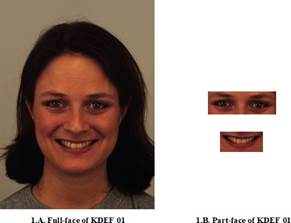

Four (4) full-color image sets from the Karolinska Directed Emotional Faces (KDEF) were utilized for the current study (Lundqvist et al., 1998). Each of these image sets comprised seven individual full-face images of a person (expressor): six showing the universal facial expressions at 100% intensity and one neutral image of the expressor. Two of the selected image sets (KDEF nos. 01 and 02) showed EFEs of women expressors, and the other two (KDEF nos. 14 and 35) were of men. In addition to these four image sets, two extra images were selected for demonstration purposes, one of a male expressor expressing sadness (KDEF no. 09) and the other of a female expressor expressing happiness (KDEF no. 07). All of these image sets were selected randomly using a random number table. Subsequently, all 30 images were modified using image editing software (GIMP™) to produce partial faces, in which only the eye and mouth regions of the expressors were kept and the rest of the face was removed. The extents of the ‘eye region’ and ‘mouth region’ were adapted from the study by Schurgin et al. (2014): both eyes (LY and RY), along with the eyebrows (LB and RB) and the nasion (NS), were considered part of the eye region, and the upper and lower lips (UL and LL) were considered part of the mouth region for the purposes of the current study.

The full-face images of the EFEs of the four study sets were shuffled using a random number table to produce a superset (hereafter called ‘Full-face set’). Similarly, the partial faces corresponding to each of the emotions were aggregated and shuffled to form the ‘Partial-face set’ of images.

2.3 Study Technique

The present study was conducted after obtaining written informed consent from the study participants, in an environment isolated from distracting stimuli such as loud noises and bright lights. The four full-face neutral expression images of the four expressors were first shown to all the participants to control for any confusion arising from unfamiliarity with the faces. This was followed by a demonstration session. For each of the two groups, two demonstration images were used to explain and demonstrate the whole identification task to the participants (Palermo et al., 2004).

Participants were shown the Full-face and the Partial-face sets on a 19.35 × 34.42 cm computer screen with 1208 × 768 resolution and a refresh rate of 60 Hz. After the demonstration for the image set, each image from that set was presented on the screen for a period of two seconds, after which it was replaced by a black screen. The images were shown to the participants using the Microsoft™ PowerPoint software, and the presentation time was controlled via the same. Each participant then marked on the given response sheet the emotion that they could identify being expressed in that image, from a list of the six universal EFEs. After each response was marked, the participant was shown the next image. This process continued till the end of the image set. None of the images in the sets were repeated for a participant, and there was no provision for choosing multiple options on the EFE list.

Answers provided by the participants were marked as ‘correct’ or ‘incorrect’, with scores of 1 and 0, respectively. The more faces a participant correctly identified, the higher the score that participant received.
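The scoring rule can be illustrated with a minimal sketch. The answer key, the response sheet, and the function name `score_responses` are hypothetical and are used only for illustration; they are not the study's materials.

```python
# Illustrative sketch of the 1/0 scoring rule; all data below are hypothetical.
EMOTIONS = ("anger", "sadness", "fear", "surprise", "happiness", "disgust")


def score_responses(answer_key, responses):
    """Return the number of images labelled correctly (1 per match, 0 otherwise)."""
    if len(answer_key) != len(responses):
        raise ValueError("one response is expected per image")
    return sum(1 for truth, given in zip(answer_key, responses) if truth == given)


# Six emotions x four expressors = 24 images per set.
answer_key = [emotion for emotion in EMOTIONS for _ in range(4)]
responses = list(answer_key)
responses[0] = "disgust"   # one anger image mislabelled as disgust
responses[8] = "surprise"  # one fear image mislabelled as surprise

total = score_responses(answer_key, responses)
print(total)  # 22
```

With two of the 24 images mislabelled, this hypothetical participant scores 22 out of 24.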

2.4 Data Analysis

After response marking, each participant was scored out of 24 for the image set shown. The data collected were compiled using MS-Excel 19 (Microsoft Inc) and summarized using frequencies and percentages. The difference in identification accuracy between the two image sets was assessed using Student’s t-test and the Chi-squared test (with Yates correction wherever applicable), and a p-value of <.05 was considered statistically significant. All statistical calculations were made using the Statistical Package for Social Sciences (SPSS) v. 25 (IBM Corp).
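As an illustration of the Yates-corrected Chi-squared test on a 2 × 2 table, the following sketch uses only the Python standard library. It is not the SPSS procedure used in the study; the function name `yates_chi_squared` is hypothetical, and the example counts are those reported for sadness in Table 3 (56 of 70 and 67 of 70). Taking the square root of the statistic as a Z value is an assumption about how the tabulated values were derived.

```python
import math


def yates_chi_squared(hits_a, n_a, hits_b, n_b):
    """Yates-corrected chi-squared for a 2x2 table of hits and misses in two groups."""
    a, b = hits_a, n_a - hits_a
    c, d = hits_b, n_b - hits_b
    n = n_a + n_b
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    if denominator == 0:
        raise ValueError("a row or column total is zero; the statistic is undefined")
    # Continuity correction: shrink |ad - bc| by n/2, never below zero.
    corrected = max(abs(a * d - b * c) - n / 2, 0.0)
    chi2 = n * corrected ** 2 / denominator
    # With one degree of freedom, chi2 is the square of a standard normal Z.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


chi2, p_value = yates_chi_squared(56, 70, 67, 70)
z = math.sqrt(chi2)
print(round(z, 2), p_value < 0.05)  # 2.59 True
```

When every participant in both groups meets the criterion, as for happiness, a column total is zero and the statistic cannot be computed, which the sketch reports as an error.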

3. Results

Analysis of the collected data showed that the mean ± SD age of the participants was 21.3 ± 1.7 years for the full-face group and 21.2 ± 1.6 years for the partial-face group. Most of the participants were male (62.9%), were from rural areas (61.4%), and belonged to families of higher socioeconomic status, defined as a per capita monthly family income of > ₹8000 (65.7%) (Khairnar et al., 2021). The largest group of participants were medical students of the study institution (37.8%), followed by engineering students (23.6%), students pursuing a bachelor’s degree in the arts (15%), medical technologists (14.3%), and lawyers (9.3%) (see Table 1). The sex distribution and socioeconomic status of the study participants were similar to those observed in other studies working with similar populations.

Table 1 Sociodemographic Characteristics of the Participants (n = 140)

| Characteristic | Full-face group (n = 70) | Part-face group (n = 70) |
|---|---|---|
| Age in completed years (mean ± SD) | 21.3 ± 1.7 years | 21.2 ± 1.6 years |
| Sex (male, %) | 44 (62.9%) | 44 (62.9%) |
| Residence (rural, %) | 43 (61.4%) | 43 (61.4%) |
| Per capita monthly income (> ₹8000, %) | 46 (65.7%) | 46 (65.7%) |
| Occupation (n, %) | | |
| Medical student | 26 (37.1%) | 27 (38.6%) |
| Engineering student | 18 (25.7%) | 15 (21.4%) |
| Lawyer | 7 (10.0%) | 6 (8.6%) |
| Medical technologist | 9 (12.9%) | 11 (15.7%) |
| Arts student | 10 (14.3%) | 11 (15.7%) |

The mean score of the participants who identified the Full-face set was 18.1 ± 1.8, with a median score of 18. The identification rate was higher in the Part-face set group, with participants scoring a mean of 19.7 ± 1.6 out of 24, with a median score of 20 (Table 2). This difference between the mean scores of the two groups was also found to be statistically significant (p-value = .0007).

When the correct identification rates for individual EFEs were explored, participants who correctly identified at least three of the four images depicting an EFE (75%) were considered to have a high accuracy of identifying that EFE. The most correctly identified EFE in the full-face group was happiness, with 100% of participants identifying at least three images depicting the emotion. This was followed by disgust and surprise (90% each) and sadness (80%). The least identified EFE was fear, with only 22.9% of the participants identifying three or more images of the emotion (Table 3). Participants belonging to the partial-face group also identified happiness the most correctly, with all of them identifying at least three of the four images shown to them.
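The ‘at least three of four’ criterion can be sketched per emotion as follows. The function name `high_accuracy_by_emotion` and the example responses are hypothetical and serve only to illustrate the rule.

```python
from collections import Counter


def high_accuracy_by_emotion(answer_key, responses, threshold=3):
    """Map each emotion to True when at least `threshold` of its images were correct."""
    correct = Counter(
        truth for truth, given in zip(answer_key, responses) if truth == given
    )
    return {emotion: correct[emotion] >= threshold for emotion in sorted(set(answer_key))}


# Hypothetical participant: four fear images and four happiness images.
answer_key = ["fear"] * 4 + ["happiness"] * 4
responses = ["fear", "surprise", "surprise", "fear"] + ["happiness"] * 4

flags = high_accuracy_by_emotion(answer_key, responses)
print(flags)  # {'fear': False, 'happiness': True}
```

Here the participant identifies only two of the four fear images, so fear does not meet the criterion, while happiness does.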

It was also observed that, apart from happiness, the proportion of participants with high identification accuracy was higher in the partial-face group for every emotion except disgust, for which the proportion of participants identifying at least 3 out of 4 images was lower than in the full-face group. These differences were statistically significant for surprise, sadness, and disgust, but not for fear and anger (Table 3).

4. Discussion

It was observed in this study that participants correctly identified, on average, 18.1 out of 24 EFEs (75.4%) from full-face images and 19.7 out of 24 (82.1%) from images showing only the eye and mouth regions. This points to a high general accuracy of Indian adults in identifying universal facial expressions and conforms to the findings reported by Mishra et al. and Lohani et al., as well as to a previous study by two of the authors (Bandyopadhyay et al., 2021; Lohani et al., 2013; Mishra et al., 2018). However, an interesting observation in this regard was the statistically significant increase in accuracy when participants identified EFEs from partial faces as compared to full-face images. Previous eye-tracking research has shown that there is an increased focus on specific regions of the face when humans try to identify emotions (Schurgin et al., 2014). Focus on the eye and mouth regions has been observed in most people when attempting to identify a particular universal EFE. It follows that identifying an emotion from an expressive face should be easier when only the specific areas of interest are shown, free from the potential ‘distractor’ regions, which was substantiated by the present study.

When individual emotions were assessed, an increase in accuracy was observed for all of the EFEs except happiness and disgust. This contrasts with the results reported by Kestenbaum, who found that, except for happiness, identification accuracy decreased for all EFEs with eyes/mouth combinations (Kestenbaum, 1992). This can be explained in terms of cultural and geographical modulations of emotion perception. Kestenbaum’s observers were primarily Caucasian, and Jack et al. have reported that Westerners show a more generalized focus on the face while identifying human emotions, whereas Asians show a higher region-specific fixation (Jack et al., 2009). A thorough search of the available literature did not yield any similar study done among Indians. The results of the current study suggest, however, that Indians share their EFE identification methods with other Asian populations, with whom they share large portions of their culture.

Table 2 Differences of Scores Between Full-face and Partial Face Groups (n = 140)

| EFEs | Full-face group (n = 70) | Part-face group (n = 70) | t-test value (p-value) |
|---|---|---|---|
| Total score | | | |
| Mean | 18.1 | 19.7 | 4.2 (.0007)* |
| Standard deviation | 1.8 | 1.6 | |

Note. *statistically significant.

Table 3 Accuracy of Identification of Emotional Facial Expressions by Participants (n = 140)

| EFEs identifiedᵃ | Full-face group (n = 70) | Part-face group (n = 70) | Z-/Chi-squared^ value (p-value) |
|---|---|---|---|
| Positive EFEs | | | |
| Happiness (n, %) | 70 (100%) | 70 (100%) | - (.00) |
| Surprise (n, %) | 63 (90%) | 70 (100%) | 2.92 (.003*) |
| Negative EFEs | | | |
| Sadness (n, %) | 56 (80%) | 67 (95.7%) | 2.59 (.009*) |
| Disgust (n, %) | 63 (90%) | 53 (75.7%) | 2.02 (.043*) |
| Fear (n, %) | 16 (22.9%) | 23 (32.9%) | 1.13 (.258) |
| Anger (n, %) | 32 (45.7%) | 42 (60%) | 1.52 (.128) |

Note. ᵃCorrect identification of 3 out of 4 images of each EFE; *statistically significant; ^with Yates correction, wherever applicable.

Significantly, the EFEs depicting happiness were identified with 100% accuracy in both the full-face and the part-face scenarios. This points to the well-established ‘happy face advantage’, in which the idiosyncratic transformations of the facial region (open mouth, showing teeth, etc.) are distinctly and instantly recognizable, so that identification of the EFE of happiness is not affected by the other distractor regions of the face (Kirita et al., 1995). Interestingly, the findings of the present study suggest that the opposite is true for the EFE of disgust, where the changes in the eye and mouth regions of the expressor alone were not enough, and the observer also needed to see the other regions of the face to properly identify the EFE. The decrement in the accuracy of the participants in identifying disgust from partial-face images can be explained by the fact that the expression of disgust involves a largely global, nonspecific change in the facial musculature instead of the more intense changes in specific regions that occur in other EFEs (Waller et al., 2008). Furthermore, the expression of disgust often involves wrinkling of the nose, a cue that was absent from the partial-face images, which excluded the nasal region of the face (Widen et al., 2008). Therefore, the findings of the present study indicate that for most facial expressions, the other regions of the face serve as distractors, and provide more evidence towards establishing the eye and mouth regions as the key facial areas for the expression as well as the recognition of EFEs.

The findings of the present study have important implications. As in a previous study by the researchers, the current study found that Indian adults identified negative emotions such as fear and anger to a much lesser extent than positive emotions (Bandyopadhyay et al., 2021). This has important social and behavioral implications, as misidentification of emotions can lead to interpersonal conflicts (Wells et al., 2016). Furthermore, in certain medical conditions, such as chronic diseases and psychiatric ailments such as depressive and anxiety disorders, there is a distinct lowering of accuracy in the identification of negative EFEs (Gollan et al., 2010; Prochnow et al., 2011). The improvement of EFE identification rates for negative emotions (except disgust) from partial faces, as observed in the present study, may have applications in this regard in the form of training modules used in educational and psychological counseling. Interventions using images of partial faces to improve the identification accuracy of different EFEs may also find use in the management of social-communication problems experienced by patients with conditions such as autism spectrum disorders in the Indian population (Russo-Ponsaran et al., 2016). However, further research in this regard needs to be conducted.

While others as well as the authors themselves have observed differences between males and females in the identification of EFEs, this was controlled for in the study by matching the two study groups for sex (Bandyopadhyay et al., 2021; Montagne et al., 2005). The study nevertheless had several limitations. The primary limitation was that KDEF, a directory of emotional facial expressions of Caucasian expressors, was used. This was done because there is not yet a validated emotional faces directory for the Indian population, which is exceedingly difficult to create due to the extreme diversity of cultures and ethnicities present within the population of the subcontinent. Although KDEF has been widely used in studies across the world, its use in the present study might have led to certain biases in the responses of the participants owing to their limited exposure to Caucasian faces. Another limitation of the present study was the use of static EFEs at 100% intensity. In most real-life situations, EFEs are expressed dynamically at much lower intensities, which leads to a wide variation of accuracy between observers (Wells et al., 2016). In the present study, due to the lack of resources and appropriate infrastructure, an ‘ideal’ situation was simulated, and therefore the inferences drawn might have limited generalizability. Finally, only between-group comparisons, and not within-group comparisons, were made. Such comparisons are prone to bias when one or more neurodivergent individuals are present in one of the study groups, especially those with high-functioning autism spectrum disorders, who could not be screened with the tools at hand. Therefore, a cross-over design in a future study is warranted to generate more valid and generalizable results.

5. Conclusion and Future Directions

It can be concluded from the present study that among young Indian adults, the accuracy of identification of universal EFEs was high. With the exception of disgust, this accuracy was further enhanced when participants were shown EFE images with only the eye and mouth regions visible, and the overall improvement was statistically significant. This suggests that the other regions of the face serve as distractors in the expression and identification of universal EFEs, except for disgust, where the opposite happens. Further research into the topic needs to be undertaken using a facial expression directory validated for Indian populations. Studies using EFEs of lesser intensities, a cross-over design, and combinations of different EFEs can also generate more robust data on the topic.