Introduction

For professionals specializing in voice analysis, auditory-perceptual evaluation is an essential part of the measurement process that enables them to determine clinically whether a voice disorder is present [1]. Speech-language pathologists must have a thorough understanding of the conceptual framework underlying auditory-perceptual evaluation and of the conditions required for a reliable auditory-perceptual analysis. In the Colombian context, theoretical and practical training is provided at the undergraduate level, where the basic knowledge and skills needed to perform the evaluation are taught. This knowledge is also a fundamental requirement for professional practice [2]. Perceptual scales are part of both training and routine use, especially GRBAS and its derivatives RASAT and RASATI, which are widely used not only by speech-language pathologists but also by other professionals such as ENT specialists [3].

Conceptual framework of auditory-perceptual evaluation of voice

Kreiman et al. [4] proposed a conceptual model that incorporates various intervening factors in assigning specific ratings to vocal acoustic signals. During perceptual evaluation, listeners compare several (potentially nonspecific) qualities they perceive in a speaker's voice with their own subjective understanding of how these qualities should be heard in a voice. Therefore, perceptual assessment involves comparing the evaluator's internal standards with the vocal production of the individual being assessed, allowing the evaluator to make judgments based on their own criteria or standards [5]. These internal reference standards are "average" or "typical" examples (normal or altered) of certain qualities being rated [4,6]. These standards are stored in memory and are developed through exposure to multiple voices. As a result, the standards can vary among listeners and are inherently unstable, influenced by factors such as memory and attention lapses or external factors like the acoustic context [5,7]. Given the variability of internal standards, the use of external standards or anchors, which are reference stimuli that listeners employ for comparison with the voice they are evaluating, is currently suggested [8].

The perception of voice quality by an evaluator is influenced by various factors, starting with listener attributes. These include internal reference standards and specific perceptual biases (such as being a native speaker of a particular language), professional training, or general sensitivity to certain vocal qualities [9-12]. It is widely acknowledged that training and extensive exposure to diverse voices are instrumental in refining internal reference standards [7,8,13,14]. Regarding training methods, Walden and Khayumov [15] discuss three theoretical foundations for auditory-perceptual assessment training: multiple exposures to rating, in which participants listen to and rate voices repeatedly to enhance reliability; use of external references, in which the learner compares the stimuli to be evaluated with a reference sample [16]; and incorporation of perceptual input, which provides additional support to the listener through the visual sensory channel, typically using spectrograms, although laryngeal images can also be used. Random errors such as fatigue, attention lapses, or transcription mistakes also belong to the listener-related factors [12,17].

These listener-related factors could explain why scores differ between experienced and novice listeners. On the one hand, a body of evidence suggests that most individuals have relatively stable internal references for normal voice because their experience with typical voices is comparatively similar. On the other hand, training methods may or may not differ among individuals. Therefore, when novice listeners rate vocal quality, they do so with reference to normalcy, whereas experienced listeners compare the signal to their internal repository of pathological voices acquired through training and rating practice [12,18,19]. Nevertheless, inter-rater reliability (agreement) appears to be low among experienced raters, even though intra-rater reliability (consistency) indices are high for these same subjects [12,20,21].

The second factor that influences perceived quality is related to the task itself, encompassing aspects such as the scale used to quantify voice sound phenomena, the instructions for completing the scale, the rating environment, and the quality of the voice sample [22-24]. The literature describes different types of scales, including categorical ratings, direct magnitude estimations, equal-appearing interval scales, visual analog scales, and paired comparisons [4,25]; each of these scales is described in Table 1. The use and reliability of these scales rely heavily on the level of training of the judges and the analysis strategies employed [4,12], which explains the various efforts to establish the diagnostic validity of the different scales [16,21,26,27].

Table 1 Types of vocal quality rating scales.

| Type of scale | Characteristics | Example |
|---|---|---|
| Categorical ratings | Assignment of specific categories, with or without a specific order | Descriptors such as muffled, hoarse, high-pitched, low-pitched, among others |
| Direct magnitude estimations | Ordinal scale that presents numbers in a natural order but where the distances between one number and another are not equal. In this type of scale, the assigned number indicates the extent to which the voice has a certain quality | GRBAS scale or its derivative versions GBA, RASAT, or RASATI [45] |
| Equal-appearing interval (EAI) | Ordinal scale with equidistant points between the quantities. Requires listeners to assign a number between 1 and n (number of points determined on the scale) | Buffalo Voice Profile [46] |
| Visual analog scale | Undifferentiated line, usually 100 mm long, with two extremes: one indicating the absence of disturbance and the other a complete disturbance. The score is set by drawing a vertical mark across the undifferentiated line | The Consensus Auditory-Perceptual Evaluation of Voice (CAPE-V) [1] |
| Paired comparisons | Two stimuli, usually opposite, are compared and judged on how different they are for each dimension | Bipolar vocal self-estimate scale [47] |

Moreover, speech-language pathologists need to consider the conditions of the speech samples, particularly the quality of the audio recordings. Additionally, authors like Maryn et al. [28] and Maryn and Roy [29] suggest the inclusion of vowels and connected speech in different modalities, such as phonetically balanced readings/phrases or spontaneous speech [30]. Lastly, it is crucial to implement the auditory-perceptual rating process in controlled environmental conditions to minimize biases or errors [31-33].

The final factor to consider is the interaction between the listener and the task, and how it relates to the signal being evaluated. This includes the selection of the scale utilized and the use of anchor stimuli [8,13]. Additionally, there is a phenomenon where the internal standards unconsciously shift when evaluating stimuli of varying severity, which can affect the assessment of subsequent samples [34].

So far, part of the robust conceptual framework for auditory-perceptual assessment of voice has been described. In the Colombian context, it is unknown whether the information obtained from auditory-perceptual evaluation is treated as only one component of a broader voice evaluation protocol and, if so, whether it is therefore not considered decisive for providing relevant information about an individual's case, selecting appropriate evaluation instruments, or making decisions regarding vocal treatment.

On the other hand, it is assumed that the training in auditory-perceptual assessment varies across the country. While this is a challenge inherent in the tool itself, the training provided to Colombian speech therapists in this area shows significant variability and may not be supported by a comprehensive conceptual framework like the one proposed by Walden and Khayumov [15]. Consequently, the practice of auditory-perceptual assessment may lack a solid theoretical foundation that considers the underlying variables and how they systematically influence the scores assigned to collected speech samples.

Furthermore, the relevance of auditory perceptual assessment of speech was highlighted in the context of speech-language services during the Covid-19 pandemic [35]. With the urgent need to make the transition to telepractice and the impossibility of performing instrumental examinations, several authors have emphasized the use of auditory perceptual assessment due to its compatibility with remote connections [36-38].

Considering this issue, one of the hypotheses of this study is that training in auditory perceptual evaluation of voice is variable among professionals of speech-language pathology in the nation. Furthermore, it is hypothesized that Colombian speech-language pathologists perform the auditory perceptual evaluation procedure without control of the factors associated with the process.

In accordance with the description above, the objective of this research was to explore the training in and use of auditory-perceptual evaluation of the voice reported by Colombian speech-language pathologists. Knowing the training that professionals receive in this field, as well as the specificities of their voice quality ratings, is important for decision-making processes aimed at standardizing auditory-perceptual evaluation practices in Colombia. Additionally, this knowledge can inform the qualification process for current and future generations of speech-language pathologists in the country.

Material and methods

This cross-sectional observational study employed a quantitative approach. A digital questionnaire, a valuable tool for obtaining initial information on a specific situation [39], was designed and distributed to speech-language pathologists in Colombia. The initial version of the questionnaire comprised 26 questions categorized into five sections. Each section consisted of questions of various types, such as closed-ended questions with dichotomous options or multiple-choice questions allowing one or several responses. Additionally, open-ended questions provided an opportunity to obtain concise or detailed answers. To verify the content and grammatical structure of each statement, an evaluation instrument was developed and completed by an external evaluator, a speech-language pathologist with a PhD and a master's degree in education. The survey assessment instrument, completed with the evaluator's observations, is attached (see Appendix 1). After the necessary revisions, a final version of the structured questionnaire was obtained; its sections are presented in Table 2. It is worth emphasizing that the second section was created with the understanding that this research is considered risk-free, as it employs questionnaires that do not intentionally modify biological, physiological, psychological, or social variables. Furthermore, the questions in the fourth and fifth sections align with the task and evaluator variables proposed by Kreiman et al. [4].

Table 2 Description of the sections in the questionnaire.

| Sections | Objective | Variables | Number of questions |
|---|---|---|---|
| Inclusion criteria | Define suitability to respond to the survey | Not applicable | 1 |
| Informed consent | Voluntary manifestation of willingness to participate in the research | Not applicable | 1 |
| Sociodemographic data | To establish the general characteristics of the participants | City, age, gender, year of graduation, highest level of education attained, years of experience in the area, types of populations served | 7 |
| Dimension 1: Training process | To inquire how participants were trained in auditory perceptual assessment in typical and pathological voices: hours of training, types of scales, training samples, and continuing education programs | Task aspects; evaluator aspects | 9 |
| Dimension 2: Implementation of the procedure | To inquire about the implementation of auditory perceptual voice assessment in clinical practice: voice tasks, sample recording procedure, scale used, and perceived usefulness | Task aspects; evaluator aspects | 9 |
| Total | | | 27 |

To distribute the instrument, requests were sent to colleagues through the email of the Colegio Colombiano de Fonoaudiólogos (CCF) and other electronic channels. The CCF disseminated the invitation through mass communication among its registered members nationwide (n = 161). Simultaneously, a chain distribution was carried out through instant messaging applications (n = 25). The questionnaire was made available to the public in August 2021 and remained accessible for 15 days, during which it was redistributed solely through instant messaging applications. The sample was selected by convenience, taking the precaution of including the professors from the 14 speech therapy schools that teach auditory-perceptual voice evaluation in the country. This type of sampling was preferred because random sampling is currently difficult to define, as there are no official statistics indicating the number of professionals dedicated to the field of voice. The inclusion criterion was that professionals attend voice consultations; professionals focusing on other areas of speech-language pathology were excluded from the sample. Additionally, participants were required to answer every question in the instrument.

All responses were recorded and processed in a data table in Microsoft Excel. Descriptive analyses were conducted, including frequency counts and percentages for each item. Frequency graphs were also created to observe trends and response patterns. Additionally, several generalized linear models with binomial response and logit link function were fitted; the response and predictor variables of each statistical model are shown in Table 3. All variables were dichotomized so that statistical models with binomial response could be fitted. These analyses aimed to verify whether, within the analyzed dataset, the response variable could be explained by the predictor variables. All analyses were conducted with a 95% confidence level using R software. Finally, open questions were analyzed considering trends identified in the participants' responses.

Table 3 Established generalized linear models with binomial response.

| Predictor variables | Response variables | Number of models fitted |
|---|---|---|
| Highest level of education and years of experience in the field | Training in normal and pathological voice | 2 |
| Hours of training in normal and pathological voice | Type of scale trained | 5 |
| Type of scale trained | Type of task trained | 6 |
| Type of scale trained | Type of trained vowels | 5 |
| Hours of training in normal and pathological voice | Performance of auditory perceptual evaluation | 1 |
| Type of scale trained | Type of scale used | 5 |
| Type of task trained | Type of task used | 6 |
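To make the modelling step concrete, the following is a minimal sketch of a binomial model with logit link for one dichotomized predictor and one dichotomous response. It is not the authors' code: the study used R, whereas this illustration uses plain Python, and the data, the 20-hour cutoff, and the function names `dichotomize` and `fit_binary_logit` are all hypothetical. It relies on the fact that, with a single binary predictor, the maximum-likelihood slope equals the log odds ratio of the 2x2 table, so no iterative fitting is needed. The p-value shown is a Wald test, which is an assumption about the test used.

```python
import math

def dichotomize(values, cutoff):
    """Return 1 for values at or above the cutoff, else 0 (cutoff is hypothetical)."""
    return [1 if v >= cutoff else 0 for v in values]

def fit_binary_logit(x, y):
    """Fit logit(P(y = 1)) = b0 + b1 * x for binary x and binary y.

    With one binary predictor the maximum-likelihood estimates have a
    closed form: b1 is the log odds ratio of the 2x2 table and its Wald
    standard error is sqrt(1/a + 1/b + 1/c + 1/d).
    """
    a = sum(1 for xi, yi in zip(x, y) if xi == 1 and yi == 1)
    b = sum(1 for xi, yi in zip(x, y) if xi == 1 and yi == 0)
    c = sum(1 for xi, yi in zip(x, y) if xi == 0 and yi == 1)
    d = sum(1 for xi, yi in zip(x, y) if xi == 0 and yi == 0)
    if 0 in (a, b, c, d):
        raise ValueError("empty cell: the estimate is not finite")
    intercept = math.log(c / d)            # log odds of y = 1 when x = 0
    slope = math.log((a * d) / (b * c))    # log odds ratio
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = slope / se
    p = math.erfc(abs(z) / math.sqrt(2))   # two-sided normal p-value
    return {"intercept": intercept, "slope": slope, "se": se, "z": z, "p": p}

# Hypothetical data: reported training hours and whether the respondent
# was trained with a given type of scale (1 = yes, 0 = no).
hours = [2, 4, 6, 8, 10, 12, 20, 30, 40, 60, 80, 100]
trained_with_scale = [0, 1, 0, 0, 1, 0, 1, 1, 0, 1, 1, 1]

many_hours = dichotomize(hours, cutoff=20)
model = fit_binary_logit(many_hours, trained_with_scale)
print(model)
```

A model of this kind would be considered significant at the 95% confidence level when `model["p"]` falls below 0.05. With more than one predictor, as in the first row of Table 3, the closed form no longer applies and an iterative fit is required.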

Results

Sociodemographic information

Sociodemographic information about the participants is presented in Table 4.

Table 4 Demographic information.

| Category | Results |
|---|---|
| Sex | 4 men (10%) |
| | 36 women (90%) |
| Average age | 40.98 years (±10.25) |
| Study level | 1 doctorate (2.5%) |
| | 11 master’s degree (27.5%) |
| | 18 specialization degree (45%) |
| | 4 diploma course (10%) |
| | 18 undergraduate degree (45%) |
| Average years of experience in the field of voice | 11.93 years (±9.1) |
| Populations served | 4 Neonates (10%) |
| | 2 Early childhood (5%) |
| | 7 Middle childhood (17.5%) |
| | 13 Adolescents (32.5%) |
| | 40 Adults (100%) |
| | 19 Elderly (47.5%) |
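The descriptive step behind Table 4 can be sketched as follows, using the counts reported in the table and assuming a sample of 40 respondents (4 men plus 36 women). The function name `percentages` is illustrative only. Because the populations-served item allowed several responses, its counts add up to more than the sample size and its percentages do not sum to 100.

```python
def percentages(counts, n):
    """Convert raw counts to percentages of n respondents, rounded to one decimal."""
    return {label: round(100 * count / n, 1) for label, count in counts.items()}

# Sample size inferred from Table 4 (4 men + 36 women).
n_respondents = 40

sex = {"men": 4, "women": 36}
populations = {
    "Neonates": 4,
    "Early childhood": 2,
    "Middle childhood": 7,
    "Adolescents": 13,
    "Adults": 40,
    "Elderly": 19,
}

sex_pct = percentages(sex, n_respondents)
populations_pct = percentages(populations, n_respondents)

for label, value in populations_pct.items():
    print(f"{label}: {populations[label]} ({value}%)")
```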

Training of auditory-perceptual evaluation of voice

In this survey, 35 of the respondents (87.5%) reported receiving training in auditory-perceptual evaluation of voice, which involved listening exercises and analysis of typical voices across the lifespan. Meanwhile, 37 of the respondents (92.5%) stated that they had received training in listening exercises and analysis of disordered voices. The average training hours for the first and second tasks were 50.55 hours (±103.302) and 54.05 hours (±92.787), respectively. None of the generalized linear models showed a statistically significant association between training in normal and pathological voices and the participants' educational level or years of experience in vocology (p > 0.05). A detailed summary of the statistical results is displayed in Appendix 2.

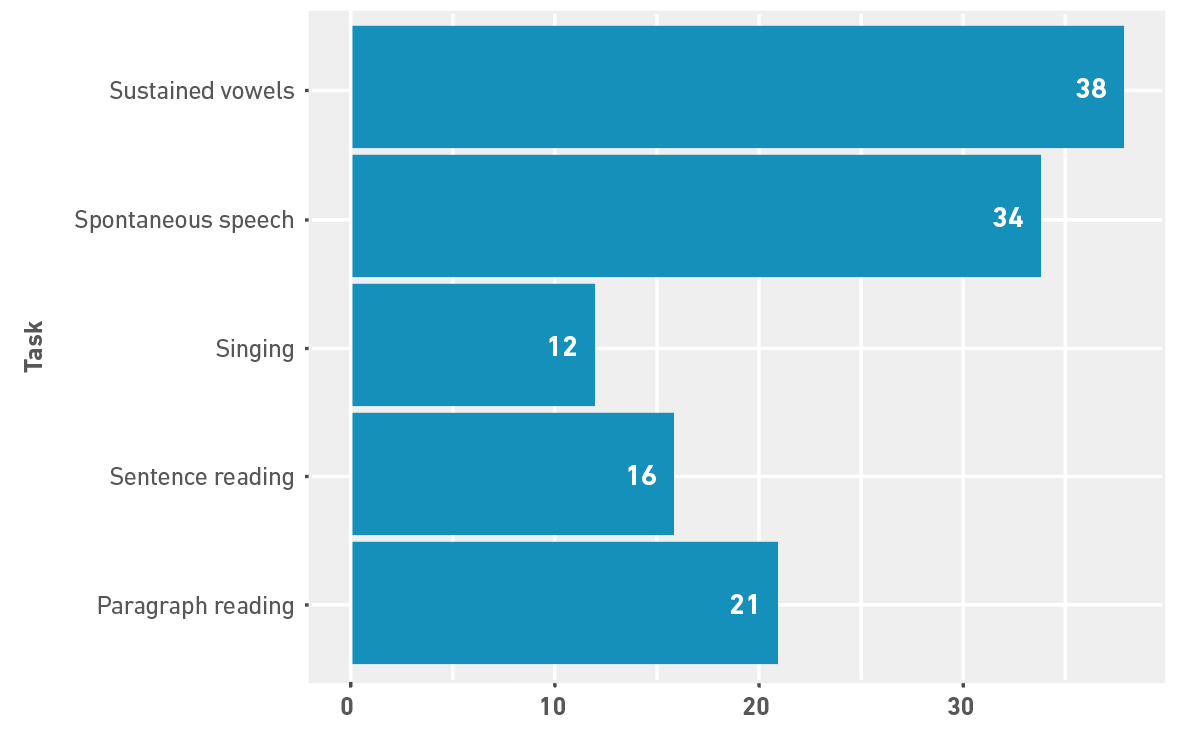

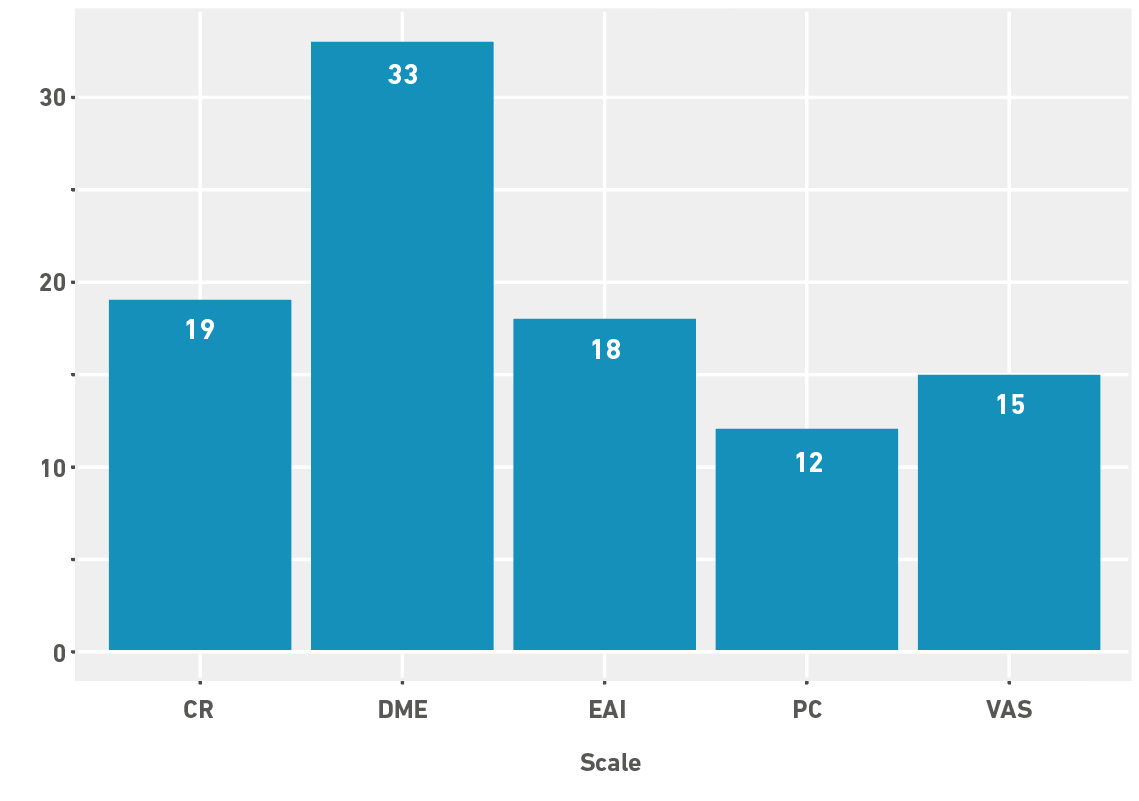

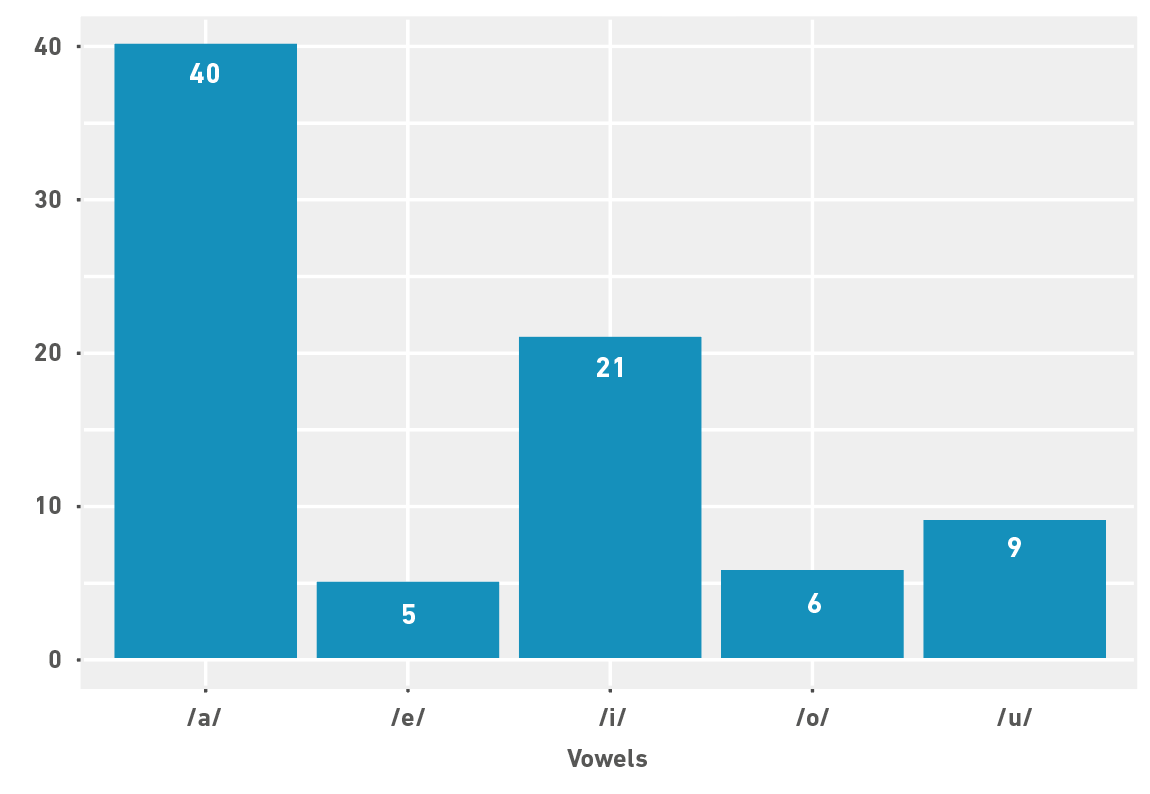

Figure 1 displays the type of scale and the number of participants who received training with each scale. On the other hand, Figure 2 and Figure 3 depict the voice and speech tasks that respondents received training with, along with the number of respondents for each task. The statistical model that explained the use of direct magnitude scales resulting from training with normal voice was found to be significant (p = 0.015). Similarly, the statistical model that explained the use of the vowel /i/ resulting from training with the equal appearing interval was also significant (p = 0.013). However, none of the statistical models established an association between the use of a specific task and the scale on which the participants received training (p > 0.05).

Note. The bar graph shows the number of participants using different scales of auditory perceptual evaluation. The included scales were CR: categorical ratings, DME: direct magnitude estimation, EAI: equal appearing interval, PC: paired comparisons, VAS: visual analog scale.

Figure 1 Type of scale used in training

Note. The bar graph shows the number of participants who received training in auditory perceptual evaluation using different voice tasks.

Figure 2 Vocal tasks used for training

Note. The bar graph shows the number of participants who received training in auditory perceptual evaluation using different vowels.

Figure 3 Vowels used for training

Some professionals received multiple forms of training, which is why the total number of participants for each type of training does not match the total study sample. Additionally, when a participant's response did not allow for inference regarding the type of training received, it was classified as undetermined. The conceptual framework of training is presented in Table 5.

Table 5 Conceptual framework for training and number of participants.

| Type of training | Participants | Percentage |
|---|---|---|
| • Use of external references | | |
| ○ Anchor | | |
| - Consensus | 1 | 2.5% |
| • Multiple exposures to rating | | |
| ○ Practice | | |
| - With feedback | 5 | 12.5% |
| - No feedback | 9 | 22.5% |
| - Feedback unclear | 3 | 7.5% |
| ○ Group consensus | 4 | 10% |
| • Addition of perceptual input | | |
| ○ Use of spectrograms | 2 | 5% |
| • Undetermined by response | 18 | 45% |

Note: This conceptual framework is taken from Walden and Khayumov [15].

Out of the total respondents, 92.5% (n = 37) reported conducting auditory-perceptual evaluations as part of their clinical practice. However, the adjusted statistical model that aimed to explain test performance based on the hours of training in normal and impaired voice was not statistically significant (p > 0.05). Furthermore, 80% of the respondents stated that this assessment strategy is very useful, while 15% considered it useful. Only 5% rated it as moderately useful, and none of the respondents considered perceptual assessment as not very useful or useless. The purposes of auditory perceptual assessment were categorized based on the participants' responses (refer to Table 6 for details).

Table 6 Purposes of auditory-perceptual evaluation of voice.

| Purposes of voice evaluation | Number | Percentage |
|---|---|---|
| Initial stage | | |
| • Determine: | | |
| - Presence/absence of a voice disorder | 11 | 27.5% |
| - Severity of voice disorder | 9 | 22.5% |
| - Nature of voice disorder | 16 | 40% |
| Treatment stage | | |
| • Define goals and methods | 10 | 15% |
| • Educate/counsel the patient about the voice disorder | 1 | 2.5% |
| • Identify outcomes | 12 | 30% |
| Undetermined | 14 | 35% |

A total of 10 individuals reported correlating the results of perceptual evaluation with acoustic analysis of voice to establish a vocal diagnosis. Additionally, 2 participants mentioned that time plays a significant role in deciding whether or not to perform auditory-perceptual evaluation of voice in their daily clinical practice. Regarding the procedures for recording voice signals for auditory-perceptual evaluation of voice, diverse responses were obtained (refer to Table 7). It is worth noting that only one participant reported not making recordings due to a shortage of supplies.

Table 7 Recording practices reported by participants.

| Components | Participants' reports |
|---|---|
| Microphone | Type of microphone: condenser; microphone of recorder device; from smartphone; with WDRC; unidirectional with frequency response (50 Hz-20 kHz); flat frequency omnidirectional; anti-pop |
| | Mouth distance: one quarter; 5-10 cm; 7-10 cm, measured with ruler; 15 cm; 30 cm |
| | Angulation from the mouth: 30° angulation |
| Preamplifier | Audio interface |
| Digital recording | Software and hardware: audio editing (Audacity); acoustic analysis (Praat, WaveSurfer); smartphone application; professional recorder |
| | Format specifications: 16-bit or 32-bit resolution; 44,000 or 44,100 Hz sampling rate; WAV format |
| Decibel calibration | Instrument: not reported |
| Recording environment | Sonometer to verify that samples have noise below 40 dB; sound-proof cabinet; quiet space |

Note: WDRC: Wide dynamic range compression, WAV: Waveform audio file format.

A total of 25 participants (62.5%) indicated that they always perform auditory-perceptual evaluation of voice, while 8 (20%) reported doing it almost always, 4 (10%) mentioned doing it sometimes, 2 (5%) stated they almost never do it, and 1 (2.5%) reported never performing the procedure. Regarding the rating scales used by professionals, the most frequently used was direct magnitude estimation (n = 31; 77.5%), specifically with GRBAS and RASAT/RASATI. This was followed, in order of usage, by categorical ratings (n = 12; 30%), paired comparisons (n = 9; 22.5%), equal-appearing intervals (n = 8; 20%) with the Buffalo Voice Profile, and visual analog scale (n = 7; 17.5%) with CAPE-V. Only 2% of the participants reported not using any rating scale. The adjusted statistical models showed significant associations between the scale used and the scale on which the participants were trained: GRBAS (p = 0.012), Buffalo Voice Profile (p = 0.033), and paired comparisons (p = 0.013).

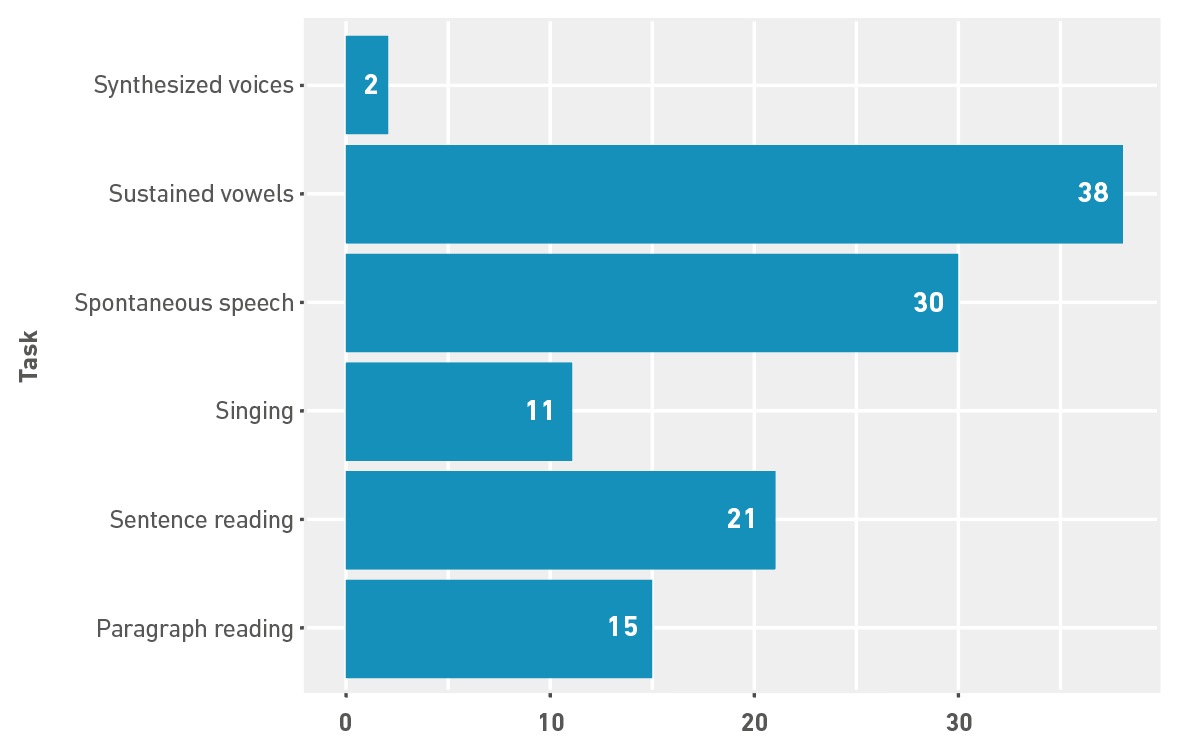

Figure 4 displays the speech and voice tasks utilized by respondents in their daily auditory-perceptual evaluation practice. None of the statistical models used to associate task usage with the participants' training proved to be statistically significant. Regarding the timing of perceptual assessment, 20 respondents (50%) reported conducting the assessment in real-time, while 15 respondents (35%) stated that they perform a recording and subsequently rate it through one or multiple opportunities to listen. Additionally, 3 participants (7.5%) reported performing the process using a combination of the aforementioned conditions, and another 3 participants (7.5%) reported conducting a rating after recording and subsequently performing a new rating.

Discussion

Training in auditory perceptual evaluation of the voice

The association between training in auditory-perceptual evaluation of the voice, educational level, and years of experience in the field of voice/vocology was assessed. The convenience sampling used in this research limits the generalizability of the findings. However, the analyzed data suggest that a higher educational level or more years of experience in the field does not guarantee a higher level of training in this evaluation strategy. Further studies are necessary to investigate this matter.

Considering that the auditory-perceptual evaluation procedure is taught at the undergraduate level in Colombia [40], it would be expected that all professionals who participated in the survey had received training in performing this procedure. However, only a small percentage indicated that they had received training in evaluating both typical and pathological voices. One possible explanation is the variation in auditory-perceptual assessment training across the country. This assumption is based on the fact that the 14 existing speech therapy programs in the country provide training in auditory-perceptual evaluation either through dedicated voice courses or as part of fundamental courses in the speech area. These courses typically have a duration of 96 to 144 working hours, but the time dedicated specifically to this tool ranges from 6 to 9 hours. As a result, the training provided to Colombian speech-language pathologists in this area exhibits significant variability and lacks depth. Furthermore, the reliability of the reported data may be affected by memory bias: several professionals reported receiving training over ten years ago, which raises questions about the accuracy of their recollection.

Regarding training duration, the results indicated an average of approximately 50 hours for both normal and altered voices. This value significantly exceeds the training durations reported in the literature, which typically range from 1 to 20 hours. Analysis of the responses to open-ended questions revealed a confusion between the concept of auditory perceptual evaluation of the voice, patient-reported outcome measures, and even acoustic analysis. It is highly likely that the reported training hours encompass a combination of auditory perceptual evaluation and other vocal evaluation strategies. Furthermore, it is plausible that more training is focused on pathological voices rather than normal voices. This poses a problem, as it creates a bias by setting specific internal standards for certain pathologies instead of general vocal quality, which negatively impacts the training and execution of auditory perceptual assessments. However, it is important to highlight that the data collection instrument used in this study did not include questions about the number of training sessions. Therefore, it is recommended to investigate this aspect in future research.

Statistical tests revealed a relationship between the use of normal voice stimuli and direct magnitude estimation scales, despite other scales also providing a space for rating normal voices. This result may be associated with the fact that direct magnitude estimation scales were the most commonly used in the training of the clinicians in this study.

Similarly, categorical ratings are utilized not only by healthcare professionals, but also by arts professionals due to their unique training methods. However, in the field of speech-language pathology, descriptors should align with physiological reasoning. The large number and variety of terms used in categorical ratings make it challenging to characterize and establish relationships between each attribute and their corresponding sound emission in auditory-perceptual evaluation [41]. Therefore, it raises concerns about the extensive use of this type of measurement scale in Colombian speech-language pathology practice, even in the present day.

Likewise, the Buffalo Voice Profile spread to certain areas of the country through the initiative of some schools of speech-language pathology to disseminate the scientific advances of that time, whereas the CAPE-V and paired comparison scales have been used in the country for a relatively short period. Based on the above, a nationwide reflection within the profession is called for in order to develop more training processes and promote the use of robust instruments for auditory-perceptual assessment.

Regarding the speech tasks that professionals were trained in, it is evident that there is a preference for using vowels over other speech tasks. It is equally noteworthy that all respondents reported being trained with the vowel /a/ more frequently than other vowels. Although this research did not explore the reasons behind the choice of specific vowels for training in auditory perceptual evaluation, most protocols suggest the use of these vowels. For instance, authors such as Kempster et al. [1] recommend the use of /a/ due to its neutrality in the configuration of the vocal tract, and /i/ because it is the stimulus used in stroboscopy to observe laryngeal behavior [42]. Based on the above, the statistical tests only confirm an association between training with the equal-appearing interval scale and the use of the vowel /i/ as part of the trained tasks. This finding reinforces the idea that the stimuli used in training are selected without any apparent specific criteria or based on the stimuli available to the trainer.

At this point, it should be noted that tasks such as vowels, sentence reading, and spontaneous speech were not statistically associated with visual analog scales, even though tools such as the CAPE-V clearly define the types of stimuli with which they should be trained and administered. The findings mentioned so far differ from what has been reported in the literature, where the main stimulus for training evaluators is connected speech (spontaneous speech and sentence and paragraph reading) [7,43]. At the same time, the findings agree with the literature that synthesized stimuli (both vocal and speech) are the least used means of preparing judges' perceptual skills [14,44]. Finally, the source of the speech tasks used in the trainings is not clear; they may have come from the caseload of those conducting the trainings or from a pre-existing database.

A different point of discussion is the theoretical basis of training in auditory-perceptual evaluation most commonly reported by respondents, which is multiple exposures to rating. Within this category, the most frequently indicated practice was to perform without feedback. From this finding, it can be inferred that expertise in voice rating is acquired simply by listening to stimuli a certain number of times or in multiple sessions. Consequently, internal standards would be developed and reinforced through the act of listening itself. While limited feedback can be beneficial for sensory learning, the complete absence of feedback for novice judges is particularly problematic. Without adequate feedback, the establishment and calibration of internal standards become uncertain [18].

It is noteworthy that only one participant mentioned receiving training that involved the use of external standards, specifically employing consensus-based anchors for scoring. It is striking that one of the most effective training methods has been underutilized in the country, as it has the potential to enhance the validity and reliability of auditory-perceptual evaluation of voice [8,13,32]. Furthermore, nearly half of the respondents could not be classified within a theoretical training framework. Initially, it might be assumed that the participants' lack of reference for training suggests they did not receive it, which contradicts previous reports regarding the number of trained professionals. Additionally, the way in which participants discuss their training experiences reveals certain shortcomings in the training itself. The authors postulate that this may be the root cause of the prevailing notion in the country that auditory-perceptual evaluation does not require training. The widespread belief that this assessment process is straightforward and does not require a deep understanding of the underlying processes or of the appropriate scoring procedures for each tool makes it difficult to reflect on optimal training methods and to recognize the need for training to establish and calibrate internal standards.

Performance of auditory-perceptual evaluation of voice

Regarding the implementation of the procedure, it is worth noting that not all professionals reported performing it. While some participants justified this response based on time constraints in their daily clinical practice, it is possible that speech-language pathologists themselves are not fully aware that they are in fact implementing it. Numerous authors have emphasized that this is a crucial component for measuring vocal quality and conducting research [32,33]. Furthermore, speech-language pathologists often rely on the results of auditory-perceptual evaluation to inform their efforts in training or rehabilitating individuals with voice disorders, which raises questions about this finding. The statistical test examining the relationship between training hours and the execution of the procedure indicates that, in the studied dataset, the number of training hours is not significantly associated with whether the procedure is performed in routine clinical practice.

It is encouraging that no participant considered this strategy to be of little or no use in daily clinical practice. This confirms that even when presented with alternative tools for measuring vocal function, Colombian colleagues recognize the inherent value of auditory-perceptual evaluation. However, when asked why they rated the usefulness of the strategy as they did, fewer than half of the professionals gave their reasons. The aspects most frequently mentioned relate to the ability to determine the nature of the voice disorder and identify treatment outcomes. These trends in the results allow the authors to infer that there is a perception that auditory-perceptual evaluation serves only as a partial baseline and post-treatment comparison measure. Nevertheless, auditory-perceptual evaluation should also be directed towards achieving a diagnosis and establishing treatment goals and methods that educate and counsel the patient about their voice disorder and ways to improve it [34,35].

The findings presented in Table 7 highlight the significant disparity among Colombian speech-language pathologists in terms of adhering to established quality standards when recording vocal signals [36]. This is of utmost importance, considering that inaccurately or inadequately recorded voices can impact the reliability of auditory-perceptual judgments [38]. In future studies, it is crucial to thoroughly investigate the recording practices of acoustic vocal signals, with a particular focus on standardizing the fundamental conditions for audio sample recording across the country.

These latter data may give a more reliable indication of the number of professionals who actually perform this type of assessment. With respect to the measurement scale, the hypothesis that the GRBAS and RASAT/RASATI scales, together with categorical ratings, are the most widely used tools in the country is confirmed. The statistical results indicate that professionals who were trained in GRBAS tend to use this scale. The same happens with the Buffalo vocal profile; therefore, the pattern of use may be due to the fact that most of the professionals were trained with these instruments.

When examining the tasks requested from the clients during auditory-perceptual evaluation, a divergence is observed compared to the use of scales. The tasks with which the participants were trained do not necessarily align with the tasks employed in their daily practice. Moreover, there is a consistent emphasis on using vowels over other voice and speech tasks. It is hypothesized that this preference may stem from the desire to obtain vowel samples for subsequent acoustic analysis, potentially at the expense of auditory-perceptual evaluation. This finding raises two uncertainties that warrant further exploration. First, participants may not be aware of the physiological mechanisms that justify selecting certain tasks over others, which would lead to a limited use of the available tasks without a clear rationale. Second, participants may not be familiar with the specific tasks and procedures required by each auditory-perceptual evaluation tool, which would result in inconsistent task selection [28].

Lastly, it is important to note that half of the surveyed speech-language pathologists reported conducting real-time auditory-perceptual evaluation during consultations. This raises concerns regarding the validity of the results obtained through this approach, as many published reports emphasize the need to record voices and listen to them repeatedly to mitigate the potential impact of auditory memory or attention lapses [36].

Limitations

Several significant limitations of this study must be acknowledged. First, a common risk when selecting a non-random sample is the inability to make generalizations about a population. Convenience sampling significantly reduces the strength of any resulting generalizations due to selection bias. For this reason, the findings of this study cannot be assumed to apply to the entire population of Colombian speech-language pathologists.

Second, to enhance face validity, the questionnaire should have undergone a pilot test in a sample with similar characteristics before its administration to the definitive sample. Since this activity was not conducted, the results obtained with the questionnaire may be subject to bias. Likewise, experts in the field should have been consulted to obtain indicators of content validity. Although the content of the questionnaire was reviewed by an external evaluator, it could have benefited from a thorough review by a panel of experts.

Finally, this study considered Colombian speech-language pathologists as the population of interest. However, the sample included teachers from different speech pathology schools who teach auditory-perceptual evaluation of voice. This selection of participants could introduce a bias in the sample, as these individuals may have a different level of involvement in the clinical field compared to other speech-language pathologists. Additionally, the sample represents individuals from different professional backgrounds, and the high level of education among the participants is noteworthy: the sample included individuals with doctoral degrees, a significant proportion with master's degrees, and others with specialization degrees. This may also contribute to a potential selection bias.

Conclusions

Based on the findings presented in this study, there is an urgent need to establish systematic training programs for auditory-perceptual evaluation of voice. These programs should be based on a conceptual framework of sensory learning that considers both normal voices throughout the lifespan and disordered voices across different degrees of severity. It is crucial to recognize the differences in internal standards between those who have analyzed populations with pathological voices and those who have only had experience with typical voices.

Given the specific context in Colombia described earlier, it is essential that professionals receive training in conducting evaluations that align with international standards, encompassing diverse scales, indices, and precise terminology. These standards should be adapted to meet the specific needs of the country. Similarly, voice clinicians must undergo training with standardized parameters to ensure consistency within the evaluation team, considering the characteristics of the population they serve.

Lastly, each institution or working group should develop controlled and systematic protocols for auditory-perceptual evaluation. These protocols should include reproducible and interpretable tests, as well as intra- and inter-rater comparisons, to guide the initial and ongoing training of the team. It is imperative to use methods that incorporate descriptors, scale values, and well-organized speech samples to foster group consensus and maintain a high level of reliability in the judgments issued during auditory-perceptual evaluation. This approach will facilitate clear diagnosis, goal setting, and selection of appropriate treatment methods.
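As an illustration of the intra- and inter-rater comparisons mentioned above, the following minimal sketch computes Cohen's kappa, one commonly used chance-corrected agreement statistic, for two sets of ratings. It is not a procedure used in this study. The function name `cohen_kappa`, the variable names, and the example ratings (hypothetical overall grade scores on a 0 to 3 scale) are assumptions made for illustration only.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two equally long lists of category labels."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("Both rating lists must be non-empty and of equal length")
    n = len(ratings_a)
    # Observed agreement: proportion of samples given the same rating
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of the two marginal proportions, summed over categories
    count_a = Counter(ratings_a)
    count_b = Counter(ratings_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        # Both lists use a single identical category; agreement is trivially perfect
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical ratings (0 = normal ... 3 = severe) for ten recorded voice samples
rater_1 = [0, 1, 2, 3, 1, 2, 0, 3, 2, 1]
rater_2 = [0, 1, 2, 2, 1, 2, 0, 3, 3, 1]

inter_rater_kappa = cohen_kappa(rater_1, rater_2)
print(f"Inter-rater kappa: {inter_rater_kappa:.2f}")
```

The same function could be applied to two rating sessions by a single listener to estimate intra-rater agreement. Because perceptual scales of this kind are ordinal, a team might prefer a weighted kappa or an intraclass correlation coefficient, which give partial credit to ratings that differ by only one scale point; the unweighted version is shown here only for simplicity.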