1. Introduction

Image manipulation has become more common with the widespread availability of low-cost, high-resolution cameras and of image-processing programs such as Inkscape, Photoshop, and Corel Draw, among others. Although image alteration is common in entertainment, when images are taken as evidence in a legal process, maintaining the integrity of the original image is fundamental. Thus, digital forensics faces the challenge of ensuring the accuracy and integrity of images in a legal process.

Various researchers have made significant efforts to identify manipulated images using digital image processing algorithms. This is because the process of visually identifying alterations to digital images is complicated for the human eye. Researchers have found that the process of falsifying an image modifies the neighborhood and statistics of the host image [1]. Thanks to these traces of alterations, it is possible to detect altered images. The scientific literature describes different approaches to detect the falsification of digital images, including methods based on the type of camera, as well as the format, physics, geometry, and pixels of the image [2]. One of the main advantages of pixel-based methods is that they do not require knowledge of either the camera manufacturer's parameters or the original image.

Among the most common alterations in pixel-based methods are copy-move, resampling, and splicing. According to [3], copy-move alteration takes place when a region of an image is copied and pasted into the same image without any geometric transformation. In contrast, if at the time of pasting the copied region some geometric transformation is performed, this is known as resampling. Splicing is generated by copying a region of an image and pasting it in a different one to add or hide important information. Although these operations may be visually imperceptible, it is possible to find statistical changes in the image due to a correlation change between neighboring pixels at the edges of the base image [2].

1.1 Copy-move detection

Various state-of-the-art approaches are available to identify copy-move alterations. However, for most of the algorithms studied, the detection of forgeries consists of four main stages: preprocessing, feature extraction, pairing, and visualization [4]. One of the most used methods for copy-move forgery identification is the Scale-Invariant Feature Transform (SIFT), as this method works by extracting the image’s key points. In [5], the authors presented a hybrid method for detecting copying in an image using SIFT and the Principal Component Analysis (PCA) kernel to extract the main points by blocks. A variation of the previous method was presented in [6], in which the KAZE point detector was used together with SIFT to extract more key points. A method to identify copy-move forgeries with the Discrete Wavelet Transform (DWT) was proposed in [7]. Initially, the authors applied the DWT to the image to obtain both the detail and approximation coefficients; then, they extracted the key points with SIFT on the detail coefficients. Subsequently, they compared the feature vectors to identify which regions were falsified. Another recognized feature extractor is Speeded Up Robust Features (SURF), which detects interest points and descriptors [8-9]. A technique to detect copy-move forgeries based on SURF and a KD-Tree for the comparison of multidimensional data was presented in [10]. Recently, many researchers have applied deep learning methods in areas such as mechanics [11] and medicine [12], and as a solution for copy-move detection [13]. The authors in [14] proposed a deep learning approach with a transfer learning model that uses a custom-designed VGG-16 convolutional neural network (CNN). A deep learning technique based on a CNN model with multi-scale input and multiple stages of convolutional layers was proposed in [15]. This method used three phases: an encoder block and a decoder block to extract feature maps, and a classification phase. In the encoder block, the images are downsampled at multiple levels to extract feature maps. In the decoder block, the feature maps are combined and upsampled until the dimension of the output feature matches that of the input image. Finally, a sigmoid activation function was adopted as a classifier in the last phase. The authors in [16] suggested a dual-branch CNN model with multi-scale input obtained by choosing different kernel sizes. First, the images were resized and standardized on the fly; then, the images were passed to the CNN. The CNN architecture had two branches with the same input but different kernel sizes to extract distinct feature maps. According to the experimental results, the method was lightweight and achieved high prediction accuracy on the MICC-F2000 dataset.

1.2 Resampling detection

Several authors have focused their work on the identification of unnatural periodicities in digital images to detect resampling alteration. In [17], a method was implemented to detect the periodicity introduced in images by resampling and by JPEG compression; to achieve this, the authors calculated the probability map of the image using the Expectation-Maximization (EM) algorithm. Another method for resampling detection, based on the EM algorithm and the probability map (m-map) of pixels in the frequency domain, was used in [18]. This technique worked in the absence of any watermark or digital signature. In [19], a method capable of detecting traces of geometric transformation was proposed, using the periodic properties present in interpolated signals to detect whether the image has been modified.

1.3 Splicing detection

For this type of falsification, the paste operation alters the image’s general statistics by incorporating textures and borders that contrast with the original image [20,21]. In recent years, some work on splicing detection has focused on extracting the characteristics of an altered image and its original. The most relevant characteristics are used to train and validate a classifier; the trained model is then used to detect forgery in the image [22,23]. In [24,25], the authors presented a Local Binary Pattern (LBP) method, chosen for its ability to represent textures at a low computational cost. The combination of LBP with the approximation coefficients of the DWT to extract frequency characteristics was proposed in [26,27], incorporating the histograms of the DWT detail coefficients into the support vector machine. Several authors have proposed LBP variants, such as LBP-DCT [28] and LBP-Enhanced [29]. In [20], a method to highlight statistical changes from Markov transition probability characteristics was introduced. Other authors have proposed combinations to improve results, such as Markov-DCT-DWT [30], Markov-DCT [31], Markov-QDCT [32], and Markov-octonion DCT [33]. As with copy-move detection, deep learning approaches are also used for splicing detection. In [34], a two-branch CNN learns hierarchical representations from the input RGB color or grayscale test images and feeds a support vector machine as a classifier.

In this study, a hybrid algorithm for the detection of copy-move, resampling, and splicing forgery is proposed. The main contributions of this work are as follows:

The proposed algorithm allows the identification of the three most common types of image forgery at a time. Most previous studies found in scientific literature only permitted the classification of some type of forgery per algorithm.

Experiments were carried out with eight datasets, all with different resolutions and format features, to assess the robustness of the introduced hybrid algorithm.

An improvement in the thresholding of the Markov-based preprocessing was achieved that allowed a significant reduction in the features used for the classifier.

2. Proposed algorithm

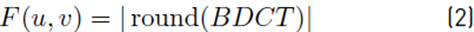

The general framework of the proposed algorithm methodology is presented in Figure 1. The proposed method is based on the outstanding work presented by E-Sayed [31] and on the improved thresholding method presented by Kumar et al. [35]. Unlike previous studies, we reduced the derivative matrices in space and frequency to 2 (vertical matrix and horizontal matrix), and the improved threshold was used to reduce the feature vector even more.

This proposed algorithm is divided into two parts: copy-move detection and Markov preprocessing that is used for resampling detection. In the proposed method, the main contribution is the identification of the type of alteration of a digital image either by copy-move, resampling, or splicing.

2.1 Markov preprocessing

First, the image was converted to grayscale. Then, the horizontal and vertical derivatives of the image in space were calculated, after which the Markov process was carried out to obtain the characteristics in space. Subsequently, the image was divided into 8x8 non-overlapping blocks, and the DCT was applied to each block. After that, the horizontal and vertical derivatives were calculated for the resulting image. Next, the Markov process was performed to obtain the frequency characteristics. Finally, a vector of spatial and frequency characteristics was created to train a polynomial SVM.

DCT block

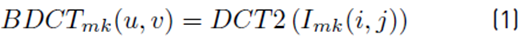

Since the altered image had changes in its local frequency distribution, it was possible to detect these changes with the DCT coefficients. First, the grayscale image was divided into non-overlapping 8 × 8 blocks, and the type-II DCT was applied to each block individually. Finally, the DCT coefficients were rounded and their absolute values were taken, as presented in Equations (1) and (2):

Where BDCT_mk(u, v) represents the DCT of each 8 × 8 block and F(u, v) is the complete image of rounded absolute coefficient values.
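The block DCT step described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the original MATLAB implementation: the orthonormal DCT-II normalization, the cropping of rows and columns that do not fill a complete block, and the function names `dct2_matrix` and `block_dct_abs` are all choices made for this example.

```python
import numpy as np

def dct2_matrix(n: int = 8) -> np.ndarray:
    """Orthonormal DCT-II basis C, so that C @ block @ C.T is the 2-D DCT (assumed normalization)."""
    k = np.arange(n).reshape(-1, 1)  # frequency index (rows)
    x = np.arange(n).reshape(1, -1)  # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * x + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)       # scaling of the DC row
    return c

def block_dct_abs(gray: np.ndarray, block: int = 8) -> np.ndarray:
    """Apply the DCT to non-overlapping blocks, then round and take absolute values."""
    h, w = gray.shape
    h, w = h - h % block, w - w % block  # assumption: drop incomplete border blocks
    c = dct2_matrix(block)
    out = np.empty((h, w), dtype=np.int64)
    for r in range(0, h, block):
        for s in range(0, w, block):
            tile = gray[r:r + block, s:s + block].astype(np.float64)
            coeffs = c @ tile @ c.T
            out[r:r + block, s:s + block] = np.abs(np.round(coeffs)).astype(np.int64)
    return out
```

For a constant 8 × 8 block of value 10, only the DC coefficient is non-zero under this normalization, and it equals 8 × 10 = 80.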

Derivative matrices

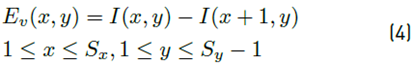

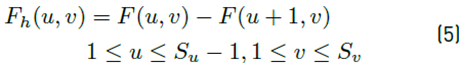

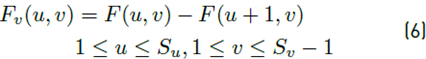

It is possible to use an edge detector to observe statistical changes in the image because the operation of placing one image over another (splicing) introduces statistical alterations at the image edges [31]. To detect them, the horizontal and vertical derivatives of the image were calculated using Equations (3) and (4):

Where I(x, y) is the image, and S_x and S_y are the image’s dimensions. Likewise, the horizontal and vertical derivatives of the frequency image are calculated using Equations (5) and (6):

Where S_u and S_v are the image’s dimensions in frequency. It should be noted that up to this point, all resulting matrices had positive integer values.
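As an illustration of the derivative step, the sketch below computes first-order differences between adjacent pixels. Because the exact form of Equations (3) to (6) is not reproduced in the text, the signed difference, the choice of which axis is called horizontal, and the name `derivative_matrices` are assumptions made for this example. The same function would apply to the frequency image F(u, v).

```python
import numpy as np

def derivative_matrices(img: np.ndarray):
    """Return (horizontal, vertical) first-order difference matrices of a 2-D array."""
    a = img.astype(np.int64)          # avoid wrap-around on unsigned pixel types
    e_h = a[:, :-1] - a[:, 1:]        # each pixel minus its right-hand neighbor
    e_v = a[:-1, :] - a[1:, :]        # each pixel minus the neighbor below it
    return e_h, e_v
```

Each output is one column (or one row) smaller than the input, since the last pixel along the differencing axis has no neighbor.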

Thresholding

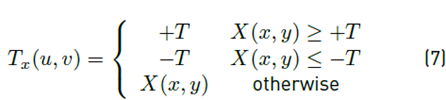

To reduce the feature vector dimensions and the algorithm complexity, a threshold T must be applied. If the value of the derivative matrix is higher than T, this value is replaced by T. Conversely, if the value of the matrix is less than -T, this value is replaced by -T, as shown in Equation (7):

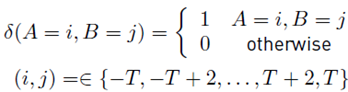

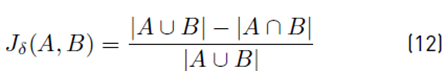

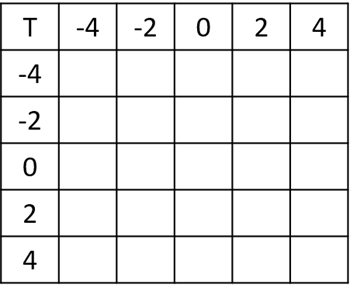

Where X(x, y) stands for each of the derivative matrices (E_h(x, y), E_v(x, y), F_h(u, v), F_v(u, v)). For this study, an adjustment was made in the range of thresholding based on the work presented by Kumar et al. [35], who stated that better results were obtained when using a range of (i, j) ∈ {−T, −T + 2, . . . , T − 2, T}. Attention should also be given to the selection of an appropriate threshold, because a small T value may cause the transition probability matrix (TPM) not to be sensitive enough to detect alterations due to information loss. On the other hand, a high T value can cause the TPM to be extensive and therefore increase the feature vector and the complexity of the algorithm. In general, a threshold of T = 3 should be used to maintain a balance between sensitivity and complexity.
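The clipping rule described for Equation (7) maps directly onto an element-wise clamp. The following sketch uses NumPy's `np.clip`; the helper names `threshold_matrix` and `reduced_states` are hypothetical, and `reduced_states` only enumerates the step-2 range quoted from Kumar et al. [35].

```python
import numpy as np

def threshold_matrix(x: np.ndarray, t: int) -> np.ndarray:
    """Replace values above t by t and values below -t by -t."""
    return np.clip(x, -t, t)

def reduced_states(t: int) -> list:
    """Enumerate the range {-T, -T + 2, ..., T - 2, T}, which has T + 1 values."""
    return list(range(-t, t + 1, 2))
```

With T = 4, the reduced range contains five values, which is what keeps each transition probability matrix at (T + 1) × (T + 1) entries.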

Transition probability matrix (TPM)

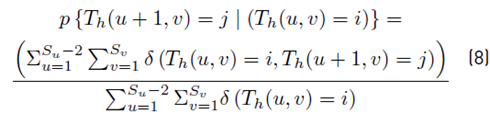

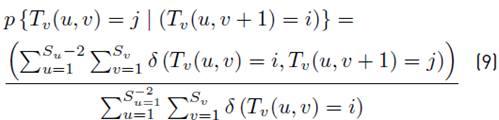

As previously mentioned, the splicing operation changes the correlation between the pixels of the original image. A random Markov process can be used to describe these correlation changes. In this case, the transition probability matrix was applied to each of the thresholded derivative matrices to characterize the Markov process. The horizontal and vertical transition probability matrices were calculated using Equations (8) and (9):

Where:

So, when applying Equations (8) and (9) to the horizontal and vertical derivative matrices in space (E_h(x, y), E_v(x, y)) and in frequency (F_h(u, v), F_v(u, v)), a feature vector of 4 × (T + 1)^2 elements was generated. Figure 2 shows the TPM with a set threshold T = 4. Finally, the TPM was flattened into a data vector that fed a support vector machine (SVM) for training.
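To make the TPM construction concrete, the sketch below counts transitions between adjacent entries of a thresholded matrix and normalizes each row into conditional probabilities, which is the usual first-order Markov formulation. It is an illustration under assumptions: the function name and the `states` parameter are hypothetical, and the text does not say how values outside a reduced state set are handled, so this example simply skips pairs containing such values.

```python
import numpy as np

def transition_probability_matrix(e, states, axis=1):
    """Estimate P(next = j | current = i) between neighbors along the given axis."""
    e = np.asarray(e)
    if axis == 1:
        cur, nxt = e[:, :-1], e[:, 1:]   # left/right neighbors
    else:
        cur, nxt = e[:-1, :], e[1:, :]   # top/bottom neighbors
    index = {s: k for k, s in enumerate(states)}
    counts = np.zeros((len(states), len(states)))
    for a, b in zip(cur.ravel().tolist(), nxt.ravel().tolist()):
        if a in index and b in index:    # assumption: ignore values outside `states`
            counts[index[a], index[b]] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    # Rows with no observations stay at zero instead of dividing by zero.
    return np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)
```

With the reduced range for T = 4 (five states), each matrix has 25 entries, and the four matrices (two spatial, two frequency) together give 4 × 25 = 100 features, in agreement with 4 × (T + 1)^2.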

2.2 Copy-move detection

If the SVM does not detect any resampling alteration, the algorithm proceeds to copy-move detection. For this, the algorithm presented by Amerini et al. [36] was used, which detects key points with the SIFT algorithm; the VLFeat 0.9.21 library [37] was employed.

Key point detection with SIFT

The SIFT feature extraction algorithm was applied to the altered image; this way, a set of key point descriptors S = {s_1, . . . , s_n} was obtained, each with 128 features.

Matching features through g2NN

Because two copied regions within the image have the same descriptors, the g2NN algorithm was used, which calculates the ratio between the Euclidean distance from a candidate point to its nearest neighbor and the distance to the next-nearest neighbor [38]. If the condition presented in Equation (10) is met, a set of matching pairs P = {p_1, . . . , p_n} is created, where each p_i is a pair (s_i, s_j).

Where d_i is the Euclidean distance between the candidate point and the point to verify, d_{i+1} is the Euclidean distance between the candidate point and the next-nearest neighbor, and τ is a defined threshold that allows the rejection of pairs with very different descriptors. If two or more pairs of matching points are not found, the algorithm identifies the image as authentic.
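The ratio test described above can be sketched as follows. For each descriptor, neighbors are sorted by distance and accepted as matches while the ratio d_i / d_{i+1} stays below τ. This is a small illustration, not the VLFeat-based implementation used in the study: the function name, the brute-force distance computation, and the default `tau=0.5` are assumptions, since the value of τ is not given in the text.

```python
import numpy as np

def g2nn_matches(descriptors, tau=0.5):
    """Return sorted index pairs (i, j) of descriptors that pass the generalized 2NN test."""
    d = np.asarray(descriptors, dtype=np.float64)
    diff = d[:, None, :] - d[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))   # pairwise Euclidean distances
    np.fill_diagonal(dist, np.inf)            # a point never matches itself
    pairs = set()
    for i in range(len(d)):
        order = np.argsort(dist[i])
        sorted_d = dist[i, order]
        for k in range(len(order) - 1):
            nxt = sorted_d[k + 1]
            if not np.isfinite(nxt) or nxt == 0:
                break                         # no usable next neighbor for the ratio
            if sorted_d[k] / nxt >= tau:
                break                         # ratio test failed: stop for this point
            j = int(order[k])
            pairs.add((min(i, j), max(i, j)))
    return sorted(pairs)
```

In practice the descriptors would be the 128-dimensional SIFT vectors; the brute-force distance matrix is only reasonable for small numbers of key points.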

J-linkage grouping

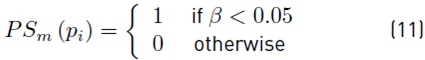

To identify the areas where the alteration occurs, J-Linkage clustering, also called Hierarchical Agglomerative Clustering (HAC), was used [45]. This procedure was performed with the coordinates of the pairs of matching points, not with the Euclidean distance values. First, the pairs of matching points p were randomly sampled to generate m hypotheses of related transformations T = {T_1, . . . , T_m}. Then, for each pair p_i, a set called the “preference set vector” was built, PS = {PS_1(p_i), . . . , PS_m(p_i)}, where each PS_m(p_i) is defined in Equation (11).

In other words, β is the distance between the T_m model and the pair of points p. If this value is less than 0.05, the pair of primary points and the pair of points of the copied region have similar transformations [38]. The preference set vector is used in the HAC algorithm to find the transformations of both the original points and the copied points. After the preference set vectors were established, each was assigned to its own cluster; then, in each iteration, the pair of clusters with the smallest distance was merged. The preference set vector of a merged cluster was calculated as the intersection of the preference sets of the merged pair, and the distance between clusters was determined with the Jaccard distance (J_δ) between the corresponding preference sets using Equation (12).
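The Jaccard distance and the merge rule can be illustrated with Python sets, where each preference set holds the indices of the transformation hypotheses that a pair of points agrees with. The function names are hypothetical, and returning 1.0 for two empty sets is a convention chosen for this sketch so that such sets are never merged.

```python
def jaccard_distance(a: set, b: set) -> float:
    """(|A ∪ B| - |A ∩ B|) / |A ∪ B|: 0 for identical sets, 1 for disjoint sets."""
    union = a | b
    if not union:
        return 1.0  # convention for this sketch: empty preference sets never merge
    return (len(union) - len(a & b)) / len(union)

def merge_preference_sets(a: set, b: set) -> set:
    """Preference set of a merged cluster: hypotheses shared by both clusters."""
    return a & b
```

For example, the sets {1, 2, 3} and {2, 3, 4} share two of four hypotheses, giving a distance of 0.5, and the merged cluster keeps {2, 3}.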

Sets with no elements in common have a distance of 1, while identical sets have a distance of 0. According to these parameters, Amerini et al. [36] set a value of 1 as the cut-off grouping value. As a result, each cluster obtained at least one matching transformation among all its pairs. If more transformations are shared among all the elements of the cluster, they should be similar; for this reason, the final transformation is estimated by least-squares fitting. To dismiss outliers in cluster transformations, the fixed threshold N was used. Finally, if eight or more transformations (ind) are detected, or if there are four or more clusters (Cluster), the method flags the image as falsified. Otherwise, it assumes that the image does not contain any modifications.

2.3 Algorithm adjustment

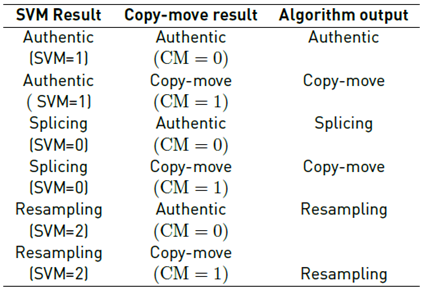

Given that the algorithm must identify different types of alterations, an adjustment was made for the final algorithm output. In the Markov preprocessing phase, an SVM was trained with authentic, resampling, and splicing images. If the SVM detected that the image had resampling, the algorithm indicated this. On the other hand, if the SVM identified the image as authentic or having splicing, the algorithm executed the copy-move detection process. If during this process, the copy-move block found the image to be free of copy-move alterations, the algorithm considered the image authentic. Table 1 presents the algorithm response depending on the SVM response and the copy-move algorithm. Because copy-move is a particular case of resampling, where there is no scaling or rotation, once the algorithm detects resampling, the output of the copy-move block is always resampling. Future work is expected to locate the region affected by copy-move or resampling.

3. Methodology

In this section, we present a brief description of the experimentation process with which the proposed method was evaluated. The first part shows the datasets used for the algorithm evaluation. Next, the metrics used to evaluate the performance of the algorithms are presented. Then, an estimate of the optimal threshold T for the evaluation of the algorithm is shown. Finally, the methodology used to evaluate the proposed algorithm is presented. All tests were performed in MATLAB 2017b on a 64-bit Dell computer with 8 GB of RAM, Windows 10, and an Intel Xeon processor.

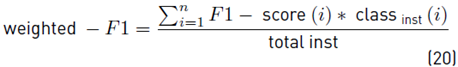

3.1 Databases

To evaluate the algorithm, the most well-known forgery image evaluation datasets for splicing, resampling, and copy-move were selected. The datasets selected for evaluating splicing were CVLAB [40], CASIA V1 [41], Columbia [42], and Uncompressed Columbia [43]. The Ardizzone-dataset [45], Coverage [45], MICC-F2000 [38], and CMFDdb-grip [46] datasets were used for the evaluation of copy-move and resampling. The selection is rich in formats, sizes, and quantities of images with different types of alteration. Table 2 shows the main characteristics of all the datasets that were used to evaluate the proposed algorithm, such as format, number of original images, number of altered images, and resolution. Taking into consideration all the datasets used for the study, a total of 7100 images were evaluated, of which 3666 were unaltered, 791 had resampling, 2213 had splicing, and 430 had copy-move alterations.

3.2 CVLAB forgery database

For this study, a new database called the CVLAB forgery database was created and published in [40]. This dataset contains 650 JPEG images of 720 × 480 pixels. It contains 200 authentic images, 200 spliced images, and 250 copy-move images. The dataset has 50 images of animals, 40 of landscapes, 35 of flowers, 50 of buildings, 25 of people, and 50 of common objects. Figure 3 shows a sample of the images of the dataset.

3.3 Classifier performance

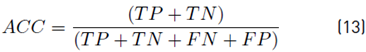

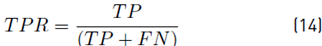

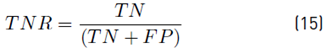

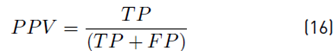

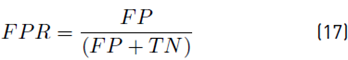

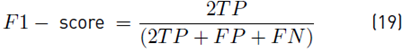

To demonstrate the detection performance, the classifier was evaluated with a 4 × 4 confusion matrix, which shows the classifier performance by comparing the known labels with the predictions of the trained model. To determine the degree of reliability of the model, we calculated the accuracy (Acc), true positive rate (TPR), true negative rate (TNR), positive predictive value (PPV), false positive rate (FPR), and false negative rate (FNR) using Equations (13)-(18), respectively. Metrics that account for class imbalance were also used, namely the per-class F1-score and the weighted F1-score, using Equations (19) and (20).

Where TP is the number of instances predicted as positive that are indeed positive, TN is the number of instances predicted as negative that are indeed negative, FP is the number of instances predicted as positive that are negative, and FN is the number of instances predicted as negative that are positive. F1-score(i) is the F1-score of class i, class_inst(i) is the number of instances of class i, and total_inst is the total number of instances used for classification.
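The metrics defined above can be derived from a multi-class confusion matrix in a one-vs-rest fashion, as in the following sketch. It uses the standard definitions of each metric; the function name, the dictionary keys, and the convention that rows are true classes and columns are predictions are assumptions of this example, and it does not guard against classes with no instances.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest metrics from a confusion matrix (rows: true class, columns: predicted)."""
    cm = np.asarray(cm, dtype=np.float64)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp          # instances of the class predicted as another class
    fp = cm.sum(axis=0) - tp          # other classes predicted as this class
    tn = total - tp - fn - fp
    tpr = tp / (tp + fn)
    ppv = tp / (tp + fp)
    f1 = 2 * ppv * tpr / (ppv + tpr)
    weights = cm.sum(axis=1) / total  # class_inst(i) / total_inst
    return {
        "accuracy": tp.sum() / total,             # overall accuracy
        "class_accuracy": (tp + tn) / total,      # accuracy of each class vs the rest
        "tpr": tpr,
        "tnr": tn / (tn + fp),
        "ppv": ppv,
        "fpr": fp / (fp + tn),
        "fnr": fn / (fn + tp),
        "f1": f1,
        "weighted_f1": float((weights * f1).sum()),
    }
```

Weighting each class F1-score by its share of instances is what makes the weighted F1-score less sensitive to class imbalance than plain accuracy.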

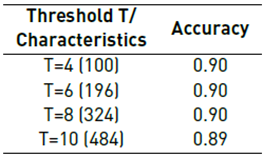

3.4 Threshold T selection

As mentioned before, the selection of a threshold T is essential since it allows the robustness of the method to be balanced against the computational cost. A higher value of T gives better sensitivity at a higher computational cost, and, conversely, a lower value of T loses sensitivity but lowers the computational cost. Therefore, to select the ideal threshold T, models were trained on the splicing and resampling datasets mentioned above with values of T equal to 4, 6, 8, and 10. Subsequently, the accuracy and precision of each model were estimated. All evaluations were performed with 10-fold cross-validation. Finally, with the best value of the threshold T obtained, an SVM with a Radial Basis Function (RBF) kernel was trained as a classifier to identify splicing and resampling alterations with the CASIA V1, Columbia, Columbia Uncompressed, CVLAB, and MICC-F2000 datasets, for a total of 7100 images. Only 161 random images of the Ardizzone-dataset were evaluated because this dataset has only 50 original images, and the resulting class imbalance could affect the results of the evaluation.

4. Experimental results

This section presents the results of the SVM trained with different T thresholds and the results of the evaluation of the proposed algorithm.

4.1 Detection performance for SVM model

Table 3 shows the results of the evaluation carried out for the Resampling and Splicing detection with different T thresholds with multiple databases.

As is evidenced in Table 3, a threshold of T = 4 obtained an accuracy of 90%, while T = 10 obtained an accuracy of 89%.

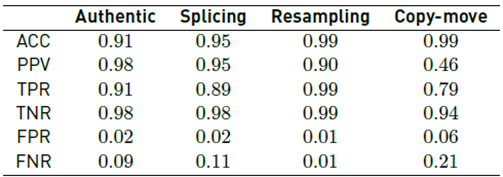

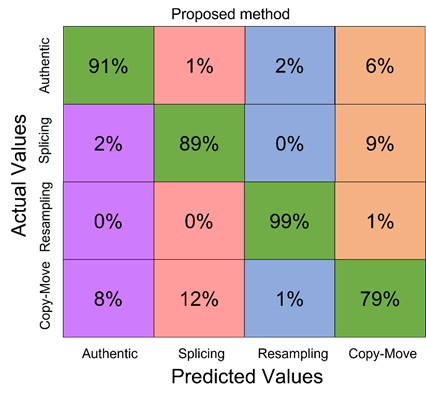

4.2 Performance of the proposed method

The confusion matrix that presents the result of evaluating all the datasets with the proposed methodology for the identification of splicing, resampling, and copy-move is presented in Figure 4. For this evaluation, a model trained with an RBF-kernel SVM and a threshold of T = 4 was selected, since it obtained an outstanding result with a lower computational cost. The confusion matrix was adjusted with the following color code: green represents true positives, purple represents false authentic positives, red represents false splicing positives, blue represents false resampling positives, and orange represents false copy-move positives.

As presented in Figure 4, the proposed method obtained a general accuracy of 91% and a weighted F1-score of 92%. Its true positive rate was 91% for authentic images, 89% for images altered by splicing, 99% for images altered by resampling, and 79% for images altered by copy-move. Table 4 presents the results of the algorithm in greater detail.

The high TPR values for each of the classes indicate that the algorithm is effective at identifying each type of alteration, with resampling identification being the highest with 0.99 and copy-move being the lowest with 0.79. The highest PPV value was 0.98, while the lowest PPV corresponded to copy-move with 0.46. On the other hand, the highest accuracy corresponded to the resampling class with 0.99 and the lowest to authentic images with 0.91.

A comparison of our proposed algorithm with other techniques for the identification of various types of alterations [47-49] is presented in Table 5.

Table 5 shows overall accuracies of 97% and 99% for the algorithms proposed by Sharma and Ghanekar [48] and Hema Rajni [49], respectively, while the accuracy of the algorithm proposed in this study is 91%. It is important to note that Prakash [47] used only three datasets, Sharma and Ghanekar [48] four, and Hema Rajni [49] three, whereas the proposed technique was evaluated on eight.

5. Discussion

In [47], splicing and copy-move alterations were identified from Markov features and Zernike moments. However, a resampling alteration can be considered a case of copy-move in which there is scaling and rotation. Because distinguishing both forgeries is more challenging, this may have increased the computational cost of the proposed algorithm. Additionally, the datasets used by those researchers have the same size and format; therefore, there are no considerable variations in their evaluation. The authors of [48] used a camera-based method in which they employed the Bayer matrix of the camera sensor to identify whether the image contained splicing, so they did not use a pixel-based methodology for the estimation. Additionally, they proposed a different equation from the one presented in Equation (14) to estimate the sensitivity for copy-move alterations, which may affect the results of the comparison. The author of [49] estimated copy-move and splicing forgery using neural networks. The Markov process was used initially to feed a CNN that detected whether the image had been doctored. If it had been altered, the algorithm would transfer the image to a new CNN that classified whether it was a copy-move or splicing forgery. If the image had been altered using copy-move, the combined process of the circular harmonic transform (CHT) and the Zernike moments would be adopted to locate the copied region. Like Prakash, the author only classified copy-move and splicing alterations, while our proposed algorithm also recognized resampling forgery, making the challenge even greater. Further, it is well known that the main drawbacks of CNNs are their high computational cost, due to their complex models, and the limited number of datasets available for copy-move, resampling, and splicing, since deep learning requires large amounts of data for training and testing [13]. Our proposal addresses the most prominent types of forgery in the state of the art. Additionally, we evaluated our algorithm with eight different datasets to assess its robustness to format and resolution, in contrast with the works discussed above.

6. Conclusion

In this study, a hybrid algorithm was presented for the identification of copy-move, splicing, and resampling alterations from the Markov and SIFT process, which we call HA-MS (Hybrid Algorithm - Markov and SIFT). Initially, the image was converted to grayscale; then, Markov features in space and frequency were extracted to train a model with an RBF-kernel SVM. The SVM was used to classify whether the image was altered by splicing or resampling or if the image was authentic. If it was suspected that the image was authentic or had copy-move alterations, SIFT was carried out to verify if the image had similar regions. The algorithm can identify several types of alterations with a general accuracy of 91%. Some of the principal novelties of this proposal are the reduced number of features needed to carry out detection, in contrast to the method in [50], and the algorithm’s robustness to variations in image format and resolution.