1 Introduction

Image classification has been widely used in many areas of the industry. Convolutional neural networks (CNNs) have achieved high accuracy and robustness for image classification (e.g. Lenet-5 [1], GoogLenet [2]). CNNs have numerous potential applications in object recognition [3, 4], face detection [5], and medical applications [6], among others. In contrast, modern models of CNNs demand a high computational cost and large memory resources. This high demand is due to the multiple arithmetic operations to be solved and the huge amount of parameters to be saved. Thus, large computing systems and high energy dissipation are required.

Therefore, several hardware accelerators have been proposed in recent years to achieve low power usage and high performance [7-10]. In [11] an FPGA-based accelerator is proposed, which is implemented in Xilinx Zynq-7020 FPGA. They implement three different architectures: 32-bit floating, 20-bits fixed point format and a binarized (one bit) version, which achieved an accuracy of 96.33 %, 94.67 %, and 88 %, respectively. In [12] an automated design methodology was proposed to perform a different subset of CNN convolutional layers into multiple processors by partitioning available FPGA resources. Results achieved a 3.8x, 2.2x and 2.0x higher throughput than previous works in AlexNet, SqueezeNet, GoogLenet, respectively. In [13] a CNN for a low-power embedded system was proposed. Results achieved 2x energy efficiency compared with GPU implementation. In [14] some methods are proposed to optimize CNNs regarding energy efficiency and high throughput. In this work, an FPGA-based CNN for Lenet-5 was implemented on the Zynq-7000 platform. It achieved a 37 % higher throughput and 93.7 % less energy dissipation than GPU implementation, and the same error rate of 0.99 % in software implementation. In [15] a six-layer accelerator is implemented for MNIST digit recognition, which uses 25 bits and achieves an accuracy of 98.62 %. In [16] a 5-layer accelerator is implemented for MNIST digit recognition, which uses 11 bits and achieves an accuracy of 96.8 %.

It is important to note that most of the works mentioned above have used High-Level Synthesis (HLS) software. This tool allows creating a software accelerator directly, without the need to manually create a Register Transfer Level (RTL). However, HLS software generally causes higher hardware resource utilization, which explains why our implementation required less logical resources than previous works.

In this work, we implemented an RTL architecture for Lenet-5 inference, which was described by using directly Hardware Description Language (HDL) (eg. Verilog). It aims to achieve low hardware resource utilization and high throughput. We have designed a Software/Hardware (SW/HW) co-processing to reduce hardware resources. The work established the number of bits in a fixed-point representation that achieves the best ratio between accuracy/number of bits. The implementation was done using 12-bits fixed-point on the Zynq platform. Our results show that there is not a significant decrease in accuracy besides low hardware resource usage.

This paper is organized as follows: Section 2 describes CNNs; Section 3 explains the proposed scheme; Section 4 presents details of the implementation and the results of our work; Section 5 discusses results and some ideas for further research; And finally, Section 6 concludes this paper.

2 Convolutional neural networks

CNNs allow the extraction of features from input data to classify them into a set of pre-established categories. To classify data CNNs should be trained. The training process aims at fitting the parameters to classify data with the desired accuracy. During the training process, many input data are presented to the CNN with the respective labels. Then a gradient-based learning algorithm is executed to minimize a loss function by updating CNN parameters (weights and biases) [1]. The loss function evaluates the inconsistency between the predicted label and the current label.

A CNN consists of a series of layers that run sequentially. The output of a specific layer is the input of the subsequent layer. The CNN typically uses three types of layers: convolutional, sub-sampling and fully connected layers. Convolutional layers extract features from the input image. Sub-sampling layers reduce both spatial size and computational complexity. Finally fully connected layers classify data.

2.1 Lenet-5 architecture

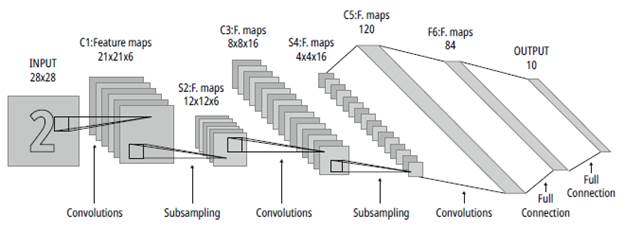

The Lenet-5 architecture [2] includes seven layers (Figure 1): three convolutional layers (CI, C3, and C5), two sub-sampling layers, (S2 and S4) and two fully connected layers (F6 and OUTPUT).

In our implementation, the size of the input image is 28x28 pixels. Table 1 shows the dimensions of inputs, feature maps, weights, and biases for each layer.

Table 1 Dimensions of CNN layers

| Layer | Size | |||

|---|---|---|---|---|

| Input n×n×p | Weight k×k×p×d | Bias d | Feature Map m×m×d | |

| C1 | 28×28×1 | 5×5×1×6 | 6 | 24×24×6 |

| S2 | 24×24×6 | N/A | N/A | 12×12×6 |

| C3 | 12×12×6 | 5×5×6×16 | 16 | 8×8×16 |

| S4 | 8×8×16 | N/A | NA | 4×4×16 |

| C5 | 4×4×16 | 4×4×16×120 | 120 | 1×1×120 |

| F6 | 1×1×120 | 1×1×120×84 | 84 | 1×1×84 |

| Output | 1×1×84 | 1×1×84×10 | 10 | 1×1×10 |

Source: The authors.

Convolutional layers consist of kernels (matrix of weights), biases and an activation function (eg. Rectifier Linear Unit (ReLu), Sigmoid). The convolutional layer takes the feature maps in the previous layer with depth and convolves them with kernels of the same depth. Then, the bias is added to the convolution output and this result passes through an activation function, in this case, ReLu [17]. Kernels shift into the input with a stride of one to obtain an output feature map. Convolutional layers have feature maps of n-k+lxn-k+1 pixels.

Note that weights and bias parameters are not found in the S2 and S4 layers. The output of a sub-sampling layer is obtained by taking the maximum value from a batch of the feature map in the preceding layer. A fully connected layer has feature maps. Each feature map results from the dot product between the input vector of the layer and the weight vector. The input and weight vectors have elements.

3 Software/hardware co-processing scheme

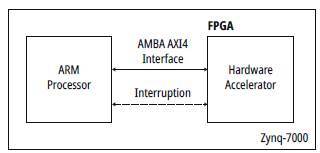

Fig. 2 presents the proposed SW/HW co-processing scheme to perform the Lenet-5 inference on Zynq-7000 SoC. It consists of two main parts: an Advanced RISC Machine (ARM) processor and an FPGA, which are connected by the Advanced extensible Interface 4 (AXI4) bus [18]. A C application runs into the ARM processor, which is responsible for the data transfer between software and hardware. Furthermore, a hardware accelerator is implemented on the FPGA. This accelerator consists of a custom computational architecture that performs the CNN inference process.

3.1 Hardware accelerator

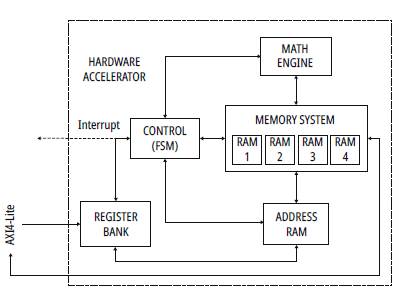

Fig. 3 shows the hardware accelerator that develops the inference process of CNN. The FSM controls the hardware resources into the accelerator. REGISTER_BANK contains the dimensions of the entire architecture (Table 1). MEMORY_SYSTEM consists of four Block RAMs (BRAMs). The first one stores weights, and the second one stores biases. RAM_3 and RAM_4 store the intermediate values of the process. These memories are overwritten in each layer. All four memories are addressed by the ADDRESS_RAM module.

MATH_ENGINE is the mainstream module in our design because it performs all the necessary arithmetic operations in the three types of layers mentioned in Section 2. All feature maps of the layers are calculated by reusing this module. It is necessary to highlight the saving of hardware resources with the implementation of this module.

This module is used to:

■ Perform a convolution process in a parallel fashion

■ Calculate a dot product between vectors

■ Add bias

■ Evaluate the ReLu activation function

■ Perform the sub-sampling process

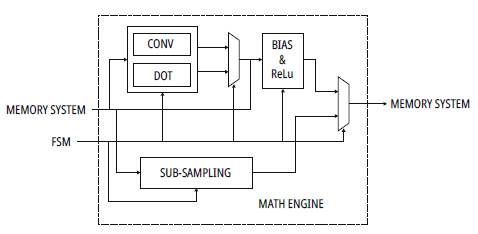

To perform all the processes mentioned above, the module MATH_ENGINE is composed of CONV_DOT, BlAS_&_ReLu, and SUB_SAMPLING. The overall architecture of the MATH_ENGINE is shown in Fig. 4.

CONV_DOT consists of six blocks. Each block performs either a 5x5 convolution or a dot product between vectors with a length of 25. The BIAS_&_ ReLu submodule adds a bias to the output of the CONV_DOT submodule and performs the ReLU function. The SUB_SAMPLING submodule performs a max-pooling process (layers S2 and S4).

3.2 Description of the SW/HW scheme process

Before the accelerator classifies an image, it is necessary to store all the required parameters (weights, biases, and dimensions of CNN layers). The processor sends the input image and the parameters to the MEMORY_SYSTEM and the BANK_REGISTER, respectively. Initially, RAM3 stores the image. Then, the processor sets the start signal of FSM, which initiates the inference process. The FSM configures MATH_ENGINE, ADDRESS_RAM, and MEMORY_SYSTEM according to the data in the BANK_REGISTER.

As mentioned, for convolutional and fully connected layers, the CONV_DOT submodule performs the convolution and dot product operations, respectively. The Bias_&_ReLu submodule adds the bias and performs the ReLu activation function. Moreover, for subsampling layers, the input is passed through the SUB_SAMPLING submodule.

According to the current layer, the MATH_ENGINE output could be either the result of Bias_&_ReLu or SUB_SAMPLING.

To reduce the amount of memory required, we implemented a re-use memory strategy. The strategy is based on the use of just two memories, which are switched to store the input and output of each layer. For example, in Layer_i input is taken from RAM3 and output is stored into RAM4. Then, in Layer_(i+1) input is taken from RAM4 and output is stored into RAM3 and so on.

Lenet-5 has ten outputs, one for each digit. Image classification is the digit represented by the output that has the maximum value. The inference process is carried out by executing all the layers. Once this process is finished, the FSM sends an interruption to the ARM processor, which enables the transfer of the result from the hardware accelerator to the processor.

4 Results

This section describes the performance of the SW/ HW co-processing scheme implementation. The implementation was carried out into a Digilent Arty Z7-20 development board using two complement fixed-points. This board is designed around the Zynq-7000 SoC with 512 MB DDR3 memory. The SoC integrates a dual-core ARM Cortex-A9 processor with Xilinx Artix-7 FPGA. The ARM processor runs at 650 MHz and the FPGA is clocked at 100 MHz. The project was synthesized using Xilinx Vivado Design Suite 2018.4 software.

4.1 CNN training and validation

CNN was trained and validated on a set of handwritten digits images from the MNIST database [1]. The MNIST database contains handwritten digits from 0 to 9. The digits are centered on a 28 x 28-pixel grayscale image. Each pixel is represented by 8 bits, obtaining values in a range from 0 to 255, where 0 means white background, and 255 means black foreground. The MNIST database has a training set of 60,000 images and a validation set of 10m000 images. API Keras with TensorFlow backend was used to train and validate the CNN in python. Python was set up to use a floating-point representation to achieve high precision.

Furthermore, the inference process was iterated eight times on the proposed scheme by changing word length and fractional length. CNN parameters and the validation set were quantized in second's complement fixed-point format using MATLAB. Table 2 shows the percent of accuracy obtained by each validation.

Table 2 Accuracy for different data representations

| Word Length [bits] | Integer, Fraction | Accuracy % | |

|---|---|---|---|

| Floating Point | 32 | N/A | 98.85 |

| 17 | «7,10» | 98.85 | |

| 15 | 6,9 | 98.70 | |

| 16 | 8,8 | 98.70 | |

| 15 | 7,8 | 98.70 | |

| Fixed Point | 14 | 6,8 | 98.68 |

| 12 | 6,6 | 97.59 | |

| 11 | 6,5 | 91.46 | |

| 16 | 5,11 | 61.31 |

Source: The authors.

The W, F notation indicates integer length (W) and fractional length (F). A starting point was set by finding the number of bits that represents the maximum value in the integer part.

4.2 Execution time

The number of clock cycles spent in data transfer between software and hardware is counted by a global timer. A counter was implemented on the FPGA, which counts the number of clock cycles required by the hardware accelerator to perform the inference process. Table 3 shows the execution time of the implementation.

Table 3 Execution times per image

| Process | Time [ms] |

|---|---|

| Load parameters | 7.559* |

| Load image input | 0.122 |

| Inference process | 2.143 |

| Extract output data | 0.002 |

| Total Time | 2.268 |

Source: The authors.

The parameters are sent to the hardware only once when the accelerator is configured by the processor. Therefore, the time to upload the parameters* was not considered in the total execution time.

The execution time per image is 2,268 ms. Thus, our implementation achieves a throughput of 440,917 images per second.

4.3 Hardware resource utilization

Table 4 shows hardware resource utilization for different word lengths. As the MATH ENGINE performs 150 multiplications in parallel, the amount of Digital Signal Processors (DSPs) used in all cases is constant. In this case, 150 DSPs in the MATH ENGINE and 3 DSPs are used in the rest of the design.

Table 4 Comparison of hardware resource utilization for different word lengths

| Precision | Hardware resources | Max. LUTs FFs BRAM [KB] accuracy % | |||

|---|---|---|---|---|---|

| Word Length [bits] | LUTs | FFs | BRAM [KB] | ||

| Fixed point | 17 | 4738 | 2922 | 173.25 | 98.85 |

| 16 | 4634 | 2892 | 164.25 | 98.70 | |

| 15 | 4549 | 2862 | 155.25 | 98.70 | |

| 14 | 4443 | 2832 | 146.25 | 98.68 | |

| 12 | 4254 | 2772 | 119.25 | 97.59 | |

| 11 | 4151 | 2742 | 114.75 | 91.46 |

Source: The authors.

Note that a shorter word length reduces hardware resources. However, it is important to consider how accuracy will be affected by the integer and fractional lengths (Table 2).

4.4 Power estimation

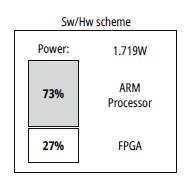

The power estimation for the proposed scheme was made by using Xilinx Vivado software (Fig. 5). This estimation only reports the consumption of Zynq-7000 SoC (a DDR3 RAM is considered part of the ARM processor).

The FPGA consumes power in the software implementation due to the architecture of the Zynq-7000 platform. Note that the ARM processor consumes most of the total power even when the hardware accelerator performs the inference process, which takes most of the execution time.

5 Discussion

We compared the implementation of our co-processing scheme with two different implementations on the Zynq-7000 platform: software-only and hardware-only solutions. In the software-only solution, the input image and CNN parameters are taken to the DDR3 RAM. The hardware-only solution uses a serial communication (Universal Asynchronous Receiver-Transmitter (UART)) module to replace the ARM processor in the co-processing scheme.

Table 5 shows the results of the three implementations on the Arty Z7 board. Although the proposed scheme implementation uses less than twice the word length of the software-only solution, the accuracy only fell by 1.27%. Also, our co-processing scheme achieved the highest throughput. The hardware-only implementation is a low power version of the proposed scheme. Future research will focus on improving the performance of the hardware-only solution. Note that the hardware-only implementation has the lowest throughput because of the bottleneck imposed by the UART (to transfer the input image, CNN parameters are stored in BRAMs). However, this implementation could be the best option for applications in which power consumption is critical and not throughput.

Table 5 Power estimation for different implementations

| Co-processing scheme | Hardware-only | Software-only | |

|---|---|---|---|

| Data format | 12-bit fixed-point | 12-bit fixed-point | 32-bit floating-point |

| Frequency (MHz) | 100 | 100 | 650 |

| Accuracy (%) | 97.59 | 97.59 | 98.85 |

| Throughput (images/second) | 441 | 7 | 365 |

| Power estimated (W) | 1.719 | 0.123 | 1.403 |

Source: The authors.

As mentioned, it is difficult to compare our results against other FPGA-based implementations because they are not exactly like ours. However, some comparisons can be made regarding the use of logical resources and accuracy. Table 6 presents a comparison with some predecessors.

Table 6 Comparison with some predecessors

| Metric | Our Design | [11] | [17] | [18] |

|---|---|---|---|---|

| Model | 3 Conv 2 Pooling 2 FC | 2 Conv 2 Pooling 1 FC | 2 Conv 2 Pooling 2 FC | 2 Conv 2 Pooling |

| Fixed Point | 12 bits | 20 bits | 25 bits | 11 bits |

| Operation Frequency | 100 MHz | 100 MHz | 100 MHz | 150 MHz |

| BRAM | 45 | 3 | 27 | 0 |

| DSP48E | 158 | 9 | 20 | 83 |

| FF | 2 772 | 40 534 | 54 075 | 40 140 |

| LUT | 4 254 | 38 899 | 14 832 | 80 175 |

| Accuracy | 97.59 % | 94.67% | 98.62 % | 96.8 % |

Source: The authors.

Note that our design uses less Look-Up Tables (LUTs) and Flip-Flops (FFs) than these previous works. Only [15] achieves better accuracy because this implementation uses more bits; however, this number of bits increases the number of logical resources.

6 Conclusion

In this paper, an SW/HW co-processing scheme for Lenet-5 inference was proposed and implemented on a Digilent Arty Z7-20 board. Results show an accuracy of 97.59 % using a 12-bit fixed-point format. The implementation of the proposed scheme achieved a higher throughput than a software implementation on the Zynq-7000 platform. Our results suggest that the usage of a fixed-point data format allows the reduction of hardware resources without compromising accuracy. Furthermore, the co-processing scheme makes it possible to improve the inference processing time. This encourages future advances in energy efficiency on embedded devices for deep learning applications.